
...

| Pipeline | Description |

|---|---|

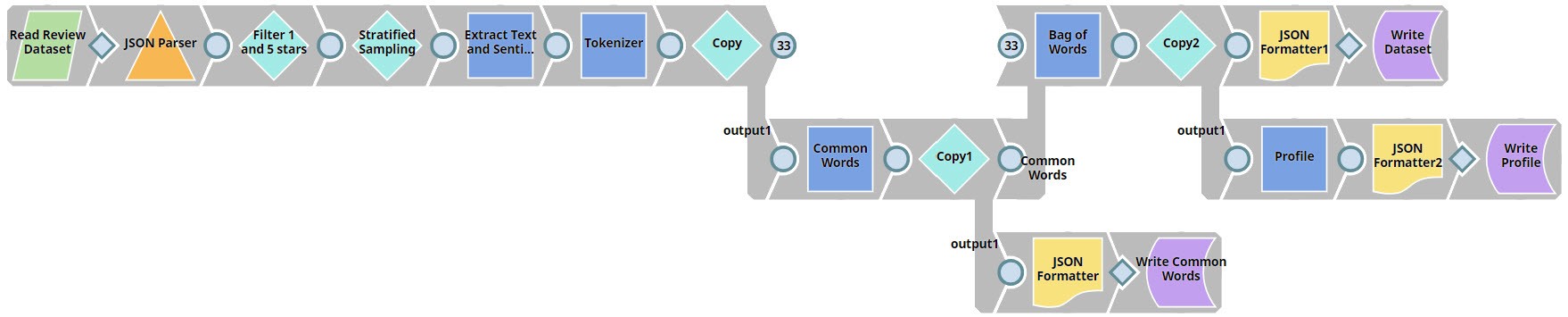

Data Preparation:

| |

Cross Validation. We have 2 pipelines in this step. The top pipeline (child pipeline) performs k-fold cross validation using a specific ML algorithm. The pipeline at the bottom (the parent pipeline) uses the Pipeline Execute Snap to automate the process of performing k-fold cross validation on multiple algorithms.

| |

Model Building. Based on the cross validation result, we can see that the logistic regression and support vector machines algorithms perform the best.

| |

Model Hosting. This pipeline is scheduled as an Ultra Task to offer sentiment analysis as a REST-API-driven service to external applications.

For more information on how to offer an ML ultra task as a REST API, see SnapLogic Pipeline Configuration as a REST API. | |

API Testing. This pipeline takes a sample request, sends it as a REST API request, and displays the results received.

|

...

| Note |

|---|

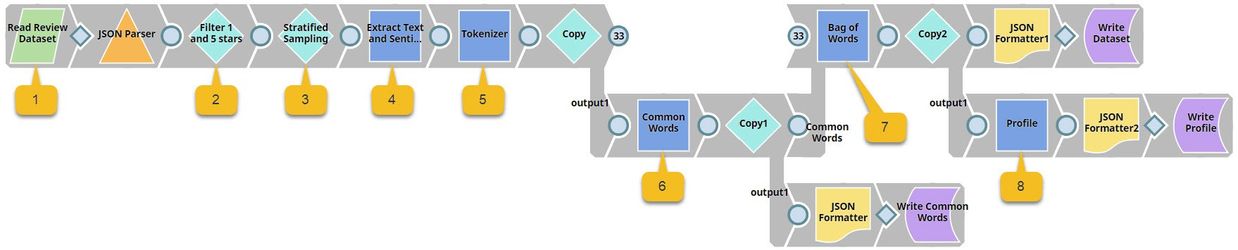

This is where it gets technical. We have highlighted the key Snaps in the image below to simplify understanding. |

This pipeline contains the following key Snaps:

| Snap Label | Snap Name | Description | |

|---|---|---|---|

| 1 | Read Review Dataset | File Reader | Reads an extract of the Yelp dataset containing 10,000 reviews from the SnapLogic File System (SLFS). |

| 2 | Filter 1 and 5 Stars | Filter | Retains only 1-star and 5-star reviews. |

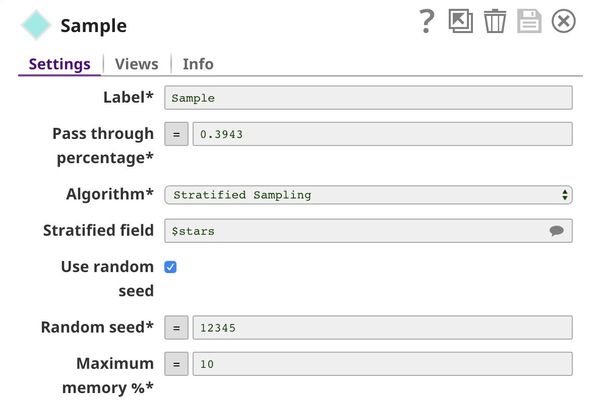

| 3 | Stratified Sampling | Sample | Applies stratified sampling to balance the ratio of 1-star and 5-star reviews. |

| 4 | Extract Text and Sentiment | Mapper | Maps $stars to $sentiment and replaces 1 (star) with negative, and 5 (star) with positive. It also allows the input data ($text) to pass through unchanged to the downstream Snap. |

| 5 | Tokenizer | Tokenizer | Breaks each review into an array of words, of which two copies are made. |

| 6 | Common Words | Common Words | Computes the frequency of the top 200 most common words in one copy of the array of words. |

| 7 | Bag of Words | Bag of Words | Converts the second copy of the array of words into a vector of word frequencies. |

| 8 | Profile | Profile | Computes data statistics using the output from the Common Words Snap. |
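The Tokenizer, Common Words, and Bag of Words steps can be approximated in plain Python to show what data flows between them. This is a minimal sketch, not the Snaps' implementation: the regex tokenizer (lowercase, keep runs of letters and apostrophes) and the function names are assumptions for illustration, and the real Snaps may normalize text differently.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Assumed tokenization: lowercase the review and keep runs of letters/apostrophes.
    return re.findall(r"[a-z']+", text.lower())


def common_words(token_lists: list[list[str]], top_n: int = 200) -> list[str]:
    # Count words across all reviews and keep the top_n most frequent ones.
    counts = Counter()
    for tokens in token_lists:
        counts.update(tokens)
    return [word for word, _ in counts.most_common(top_n)]


def bag_of_words(tokens: list[str], vocabulary: list[str]) -> list[int]:
    # One position per vocabulary word, holding that word's frequency in this review.
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]


reviews = ["Great food, great service!", "Terrible service."]
token_lists = [tokenize(r) for r in reviews]                  # Tokenizer
vocabulary = common_words(token_lists, top_n=3)               # Common Words (200 in the pipeline)
vectors = [bag_of_words(t, vocabulary) for t in token_lists]  # Bag of Words
print(vocabulary)  # ['great', 'service', 'food']
print(vectors)     # [[2, 1, 1], [0, 1, 0]]
```

Note that the vocabulary is computed once over the whole dataset and then reused for every review, which is why the pipeline makes two copies of the word arrays.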

Key Data Preparation Snaps

Filter

This Snap keeps 1-star and 5-star reviews while dropping all others (2-star, 3-star, and 4-star reviews). This brings the number of useful reviews down from 10000 to 4428.
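The Filter step is a simple predicate on the star rating. A minimal Python sketch, assuming each review is a dict with a `stars` field (mirroring `$stars`); the function name and the toy ratings are hypothetical:

```python
from collections import Counter


def keep_one_and_five_stars(reviews: list[dict]) -> list[dict]:
    # Keep a review only if its star rating is 1 or 5; drop 2-, 3-, and 4-star reviews.
    return [review for review in reviews if review["stars"] in (1, 5)]


# Toy input with made-up ratings; the real dataset has 10,000 reviews.
reviews = [{"stars": s, "text": f"review {i}"} for i, s in enumerate([5, 3, 1, 4, 5, 2])]
kept = keep_one_and_five_stars(reviews)
print(len(kept), Counter(r["stars"] for r in kept))
```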

...

Of the 4428 filtered reviews, 3555 are 5-star and 873 are 1-star. If we train the ML model on this dataset, the model will be biased towards positive (5-star) reviews, because it sees roughly four times as many positive reviews as negative ones. This is known as an unbalanced dataset problem. There are many methods for dealing with unbalanced datasets, including downsampling (removing samples from the majority class until the classes are balanced) and upsampling (duplicating samples from the minority class). In this case, we use downsampling.

We keep all 873 1-star reviews and the same number of 5-star reviews, so the Pass through percentage is (873 + 873) / 4428 = 0.3943. We specify $stars in the Stratified field property and select Stratified Sampling as the Algorithm. As used here, stratified sampling keeps the same number of documents for each class; in this case, the class is $stars.
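The downsampling idea and the pass-through arithmetic can be checked with a short Python sketch. It is an illustration under assumptions, not the Sample Snap's algorithm: it computes the per-class target directly from the minority class instead of taking a pass-through percentage, and the function name and fixed seed are hypothetical. Only the class sizes (3555 and 873) come from the text.

```python
import random
from collections import Counter, defaultdict


def stratified_downsample(docs: list[dict], field: str, seed: int = 42) -> list[dict]:
    # Group documents by class (the value of `field`, e.g. "stars").
    by_class = defaultdict(list)
    for doc in docs:
        by_class[doc[field]].append(doc)
    # Downsample every class to the size of the smallest (minority) class.
    target = min(len(group) for group in by_class.values())
    rng = random.Random(seed)
    sample = []
    for group in by_class.values():
        sample.extend(rng.sample(group, target))  # random subset without replacement
    rng.shuffle(sample)
    return sample


# Class sizes from the text: 3555 5-star and 873 1-star reviews.
docs = [{"stars": 5} for _ in range(3555)] + [{"stars": 1} for _ in range(873)]
balanced = stratified_downsample(docs, "stars")
pass_through = len(balanced) / len(docs)
print(Counter(d["stars"] for d in balanced), round(pass_through, 4))
```

The resulting fraction, 1746 / 4428, rounds to the 0.3943 pass-through percentage used in the Snap configuration.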


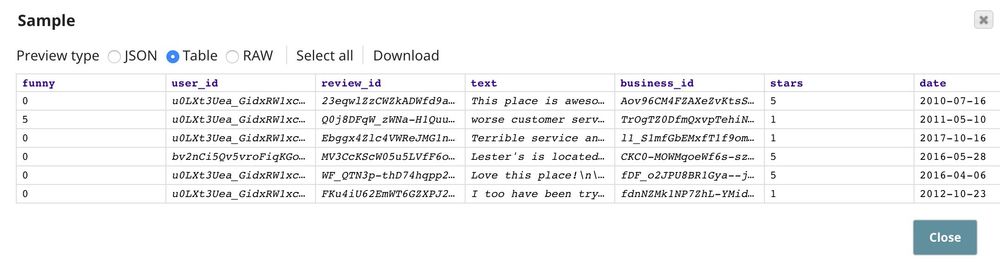

The output contains fewer documents, but it has an equal number of 1-star and 5-star reviews.

...

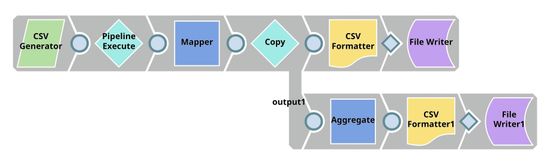

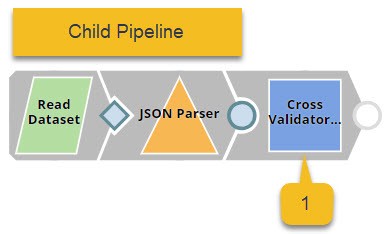

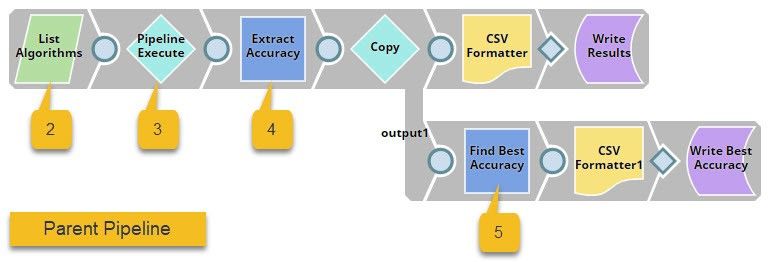

We need two pipelines for cross validation, designed as shown in the images above.

The pipeline displayed at the top (the child pipeline) performs k-fold cross validation using a specific ML algorithm. The pipeline at the bottom (the parent pipeline) uses the Pipeline Execute Snap to automate the process of performing k-fold cross validation on multiple algorithms.

The Pipeline Execute Snap in the parent pipeline spawns and executes the child pipeline multiple times, each time with a different algorithm. Instances of the child pipeline can be executed sequentially, or in parallel to speed up the process. The Aggregate Snap then applies a max function to find the algorithm with the highest accuracy.
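The parent/child pattern maps loosely onto a thread pool in Python. In this sketch, the `evaluate` callable is a hypothetical stand-in for one run of the child pipeline, `ThreadPoolExecutor.map` stands in for parallel Pipeline Execute instances, and `max` plays the role of the Aggregate Snap. The algorithm names and scores are placeholders, not measured results.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def find_best_algorithm(
    algorithms: list[str],
    evaluate: Callable[[str], float],
    parallel: bool = True,
    max_workers: int = 4,
) -> tuple[list[dict], dict]:
    # Run the "child" evaluation once per algorithm (the Pipeline Execute role).
    if parallel:
        with ThreadPoolExecutor(max_workers=max_workers) as pool:
            accuracies = list(pool.map(evaluate, algorithms))  # results keep input order
    else:
        accuracies = [evaluate(name) for name in algorithms]
    # Pair each algorithm with its accuracy (the Mapper role).
    results = [{"algorithm": name, "accuracy": acc} for name, acc in zip(algorithms, accuracies)]
    # Pick the highest accuracy (the Aggregate Snap's max function).
    best = max(results, key=lambda r: r["accuracy"])
    return results, best


# Placeholder scores for illustration only; these are NOT measured results.
dummy_scores = {"algorithm_a": 0.70, "algorithm_b": 0.85, "algorithm_c": 0.80}
results, best = find_best_algorithm(list(dummy_scores), dummy_scores.__getitem__)
print(best)
```

Sequential and parallel runs produce the same result; parallelism only shortens wall-clock time when each evaluation is expensive.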

This pipeline contains the following key Snaps:

- Cross Validator: Performs 10-fold cross validation with the algorithm specified for each run.

- CSV Generator: Lists the algorithms to be used for cross validation.

- Pipeline Execute: Spawns and executes the child pipeline seven times, each time with a different algorithm.

- Mapper: Lists each algorithm with its accuracy.

- Aggregate: Applies the max function to find the algorithm with the highest accuracy.
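For readers unfamiliar with k-fold cross validation, the procedure behind the Cross Validator is: split the data into k folds, train on k - 1 of them, measure accuracy on the held-out fold, and average over all k folds. Below is a plain-Python sketch of that standard technique, not the Snap's code; `train_fn` and `predict_fn` are hypothetical hooks standing in for whichever algorithm the parent pipeline passes in, and shuffling with a fixed seed is an assumption.

```python
import random
from collections import Counter
from typing import Any, Callable, Sequence


def k_fold_accuracy(
    X: Sequence[Any],
    y: Sequence[str],
    train_fn: Callable[[list, list], Any],
    predict_fn: Callable[[Any, Any], str],
    k: int = 10,
    seed: int = 0,
) -> float:
    n = len(X)
    if n < k:
        raise ValueError("need at least k samples")
    order = list(range(n))
    random.Random(seed).shuffle(order)  # shuffle once so folds do not follow input order
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    scores, start = [], 0
    for size in fold_sizes:
        test_idx = order[start:start + size]              # the held-out fold
        train_idx = order[:start] + order[start + size:]  # the other k - 1 folds
        start += size
        model = train_fn([X[i] for i in train_idx], [y[i] for i in train_idx])
        correct = sum(1 for i in test_idx if predict_fn(model, X[i]) == y[i])
        scores.append(correct / size)
    return sum(scores) / k  # mean accuracy over the k folds


def train_majority(X_train: list, y_train: list) -> str:
    # Trivial baseline "algorithm": always predict the most common training label.
    return Counter(y_train).most_common(1)[0][0]


def predict_majority(model: str, x: Any) -> str:
    return model


X = list(range(20))
y = ["positive" if x >= 10 else "negative" for x in X]
print(k_fold_accuracy(X, y, train_majority, predict_majority, k=10))
```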

Key Cross Validation Snaps

...

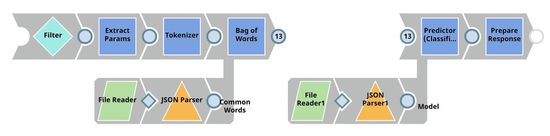

The Cross Validation pipeline showed that the logistic regression and support vector machine algorithms offer the highest cross-validation accuracy on our dataset. In the Model Building pipeline, we use the Trainer (Classification) Snap to train a logistic regression model, which we then write to SLFS using the JSON Formatter and File Writer Snaps.
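To illustrate what training a logistic regression model on bag-of-words vectors and storing it as JSON involves, here is a minimal batch gradient-descent sketch. It is not the Trainer (Classification) Snap's algorithm or model format: the learning rate, epoch count, the `positive`/`negative` label encoding, and the JSON layout (`weights`, `bias`) are all assumptions for illustration.

```python
import json
import math


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(X: list[list[float]], y: list[str],
                              epochs: int = 500, lr: float = 0.1) -> dict:
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    targets = [1.0 if label == "positive" else 0.0 for label in y]
    m = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, ti in zip(X, targets):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - ti
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj  # gradient of the log loss w.r.t. w_j
            grad_b += err
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return {"type": "logistic_regression", "weights": w, "bias": b}


def predict(model: dict, x: list[float]) -> str:
    z = sum(wj * xj for wj, xj in zip(model["weights"], x)) + model["bias"]
    return "positive" if sigmoid(z) >= 0.5 else "negative"


def model_to_json(model: dict) -> str:
    # Serialize the model (the JSON Formatter role); writing the string to
    # storage (File Writer, SLFS) is left out of this sketch.
    return json.dumps(model)


# Toy word-frequency vectors: feature 0 behaves like "great", feature 1 like "terrible".
X = [[2, 0], [1, 0], [0, 2], [0, 1]]
y = ["positive", "positive", "negative", "negative"]
model = train_logistic_regression(X, y)
print([predict(model, x) for x in X])
```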

Key Model Building Snaps

Trainer (Classification)

...

Follow the instructions available here to schedule this pipeline as a REST API.

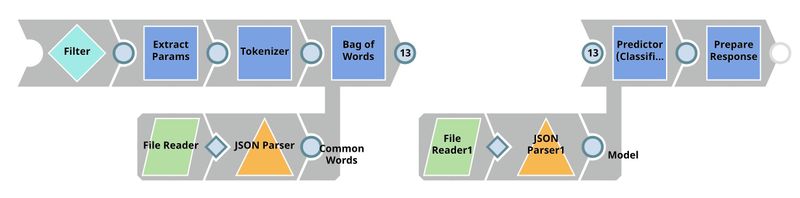

This pipeline is scheduled as an Ultra Task to provide a REST API to external applications. Requests arrive at the open input view of the Filter Snap. The core Snap in this pipeline is Predictor (Classification), which hosts the ML model provided by the JSON Parser1 Snap. The Tokenizer and Bag of Words Snaps prepare the input text, and the File Reader Snap reads the common words required by the Bag of Words operation. See more information here.
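The per-request work inside the hosted pipeline can be sketched as: parse the stored model, tokenize the text, vectorize it against the common words, and score it. This sketch assumes a simple logistic regression stored as JSON with hypothetical `weights` and `bias` fields rather than the Predictor (Classification) Snap's real model format, uses an assumed regex tokenizer, and does not show the Ultra Task or HTTP layer. The toy model is hand-written for illustration.

```python
import json
import math
import re
from collections import Counter


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_sentiment(text: str, model_json: str, vocabulary: list[str]) -> dict:
    model = json.loads(model_json)                          # parse the stored model (JSON Parser role)
    counts = Counter(re.findall(r"[a-z']+", text.lower()))  # assumed tokenization (Tokenizer role)
    x = [counts[word] for word in vocabulary]               # bag of words over the common words
    z = sum(w * xi for w, xi in zip(model["weights"], x)) + model["bias"]
    p = sigmoid(z)                                          # probability of "positive"
    return {"sentiment": "positive" if p >= 0.5 else "negative", "probability": p}


# Hand-written toy model and vocabulary, for illustration only.
toy_model = json.dumps({"weights": [1.0, -1.0], "bias": 0.0})
toy_vocabulary = ["great", "terrible"]
print(predict_sentiment("Great food, great service!", toy_model, toy_vocabulary))
print(predict_sentiment("Terrible.", toy_model, toy_vocabulary))
```

Loading the common words once and reusing them for every request mirrors the role of the File Reader Snap: the vector layout at prediction time must match the one used during training.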

Predictor (Classification)

...

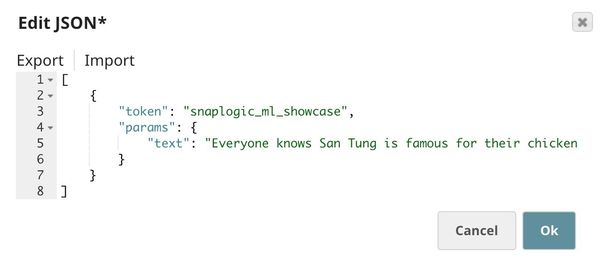

This Snap contains a sample request, which the REST Post Snap sends to the API. The $params.text property of the request document holds the text whose sentiment we want the model to predict.

Downloads
