...

Snap Type:

Write

...


|

Snap type: | Write | |

|---|---|---|

Description: | This Snap converts documents into the ORC format and writes the data to HDFS, S3, or the local file system.

| |

...

| |||

| Prerequisites: | [None] | ||

|---|---|---|---|

...

| Support and limitations: | |

|---|---|

...

|

...

| |||

| Account: | |||

|---|---|---|---|

Accounts are not used with this Snap.

The ORC Writer works with the following accounts: | ||||||||

| Views: |

| |||||||

|---|---|---|---|---|---|---|---|---|

Settings | ||

|---|---|---|

Label | ||

Required. The name for the Snap. You can modify this to be more specific, especially if you have more than one of the same Snap in your pipeline. |

Directory

...

Default Value: ORC Writer | ||

Directory | Required. The path to the directory to which you want the ORC Writer Snap to write data. All files within the directory must be ORC formatted. Basic directory URI structure | |

|---|---|---|

|

...

The Directory property is not used during pipeline execution or preview; it is used only in the Suggest operation. When you press the Suggest icon, the Snap displays a list of subdirectories under the given directory. It generates the list by applying the value of the Filter property.

|

...

|

...

|

...

Filter

...

The glob pattern is used to display a list of directories or files when the Suggest icon is pressed in the Directory or File property. The complete glob pattern is formed by combining the value of the Directory property and the Filter property. If the value of the Directory property does not end with "/", the Snap appends one so that the value of the Filter property is applied to the directory specified by the Directory property.
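As a rough illustration of how the complete pattern is formed, the sketch below joins a Directory value and a Filter value, adding the "/" separator only when it is missing. The class and method names (`GlobPatternBuilder`, `combine`) and the sample paths are hypothetical and are not part of the Snap; the Snap's actual implementation may differ.

```java
public class GlobPatternBuilder {

    // Joins the Directory and Filter values into one glob pattern.
    // A "/" is appended to the directory only if it does not already end with one.
    public static String combine(String directory, String filter) {
        String base = directory.endsWith("/") ? directory : directory + "/";
        return base + filter;
    }

    public static void main(String[] args) {
        // Hypothetical sample values, with and without a trailing "/".
        System.out.println(combine("/tmp/orc-output", "*.orc"));
        System.out.println(combine("/tmp/orc-output/", "*.orc"));
    }
}
```

Both calls produce the same pattern, which is the behavior the paragraph above describes for a Directory value with or without a trailing "/".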

The following rules are used to interpret glob patterns:

The * character matches zero or more characters of a name component without crossing directory boundaries. For example, *.csv matches a path that represents a filename ending in .csv and *.* matches file names containing a dot.

The ** characters match zero or more characters crossing directory boundaries; therefore, the pattern matches all files or directories in the current directory as well as in all subdirectories. For example, /home/** matches all files and directories in the /home/ directory and its subdirectories.

The ? character matches exactly one character of a name component. For example, foo.? matches file names starting with foo. and a single character extension.

The backslash character (\) is used to escape characters that would otherwise be interpreted as special characters. For example, the expression \\ matches a single backslash and "\{" matches a left brace.

The [ ] characters form a bracket expression that matches a single character of a name component out of a set of characters. For example, [abc] matches "a", "b", or "c". The hyphen (-) may be used to specify a range, so [a-z] matches any character from "a" to "z" (inclusive). These forms can be mixed, so [abce-g] matches "a", "b", "c", "e", "f", or "g". If the character after the [ is a !, it is used for negation, so [!a-c] matches any character except "a", "b", or "c".

Within a bracket expression the *, ? and \ characters match themselves. The (-) character matches itself if it is the first character within the brackets, or the first character after the ! if negating.

The { } characters are a group of subpatterns, where the group matches if any subpattern in the group matches. The "," character is used to separate the subpatterns. Groups cannot be nested. For example, *.{csv,json} matches file names ending with .csv or .json.

Leading dot characters in a file name are treated as regular characters in match operations. For example, the "*" glob pattern matches the file name ".login".

All other characters match themselves.
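The rules above closely follow the "glob" syntax of Java's `FileSystems.getPathMatcher`, so they can be tried out with the standard library. The sketch below is only an illustration under that assumption: whether the Snap uses this API internally is not stated here, and the class name `GlobRulesDemo` and the sample file names are hypothetical.

```java
import java.nio.file.FileSystems;
import java.nio.file.PathMatcher;
import java.nio.file.Paths;

public class GlobRulesDemo {

    // Returns true if the path matches the pattern under java.nio's "glob" syntax.
    public static boolean matches(String glob, String path) {
        PathMatcher matcher = FileSystems.getDefault().getPathMatcher("glob:" + glob);
        return matcher.matches(Paths.get(path));
    }

    public static void main(String[] args) {
        // "*" stays within one name component.
        System.out.println(matches("*.csv", "data.csv"));
        System.out.println(matches("*.csv", "dir/data.csv"));
        // "**" crosses directory boundaries.
        System.out.println(matches("/home/**", "/home/user/file.orc"));
        // "?" matches exactly one character.
        System.out.println(matches("foo.?", "foo.c"));
        // Bracket expression with negation.
        System.out.println(matches("[!a-c]*", "data.csv"));
        // Group of subpatterns, written without spaces.
        System.out.println(matches("*.{csv,json}", "report.json"));
        // A leading dot is treated as a regular character.
        System.out.println(matches("*", ".login"));
    }
}
```

Note that the subpatterns in a group are written without spaces, because a space inside the braces would be treated as a literal character of the subpattern.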

File

...

Default value: hdfs://<hostname>:<port>/ | ||||||||||

Filter |

| |||||||||

|---|---|---|---|---|---|---|---|---|---|---|

File | Required for standard mode. The file name or a relative path to a file under the directory given in the Directory property. It should not start with the URL separator "/". The File property can be a JavaScript expression, which is evaluated with values from the input view document. When you press the Suggest icon, the Snap displays a list of regular files under the directory in the Directory property. It generates the list by applying the value of the Filter property. Use Hive tables if your input documents contain complex data types, such as maps and arrays. Example:

| |||||||||

...

Default value: [None] |

File action | Required. Select an action to take when the specified file already exists in the directory. Note that the Append file action is supported for the SFTP, FTP, and FTPS protocols only. Default value: [None] | |

|---|---|---|

File permissions for various users | Set the user and desired permissions. Default value: [None] | |

...

Hive Metastore URL | |

|---|---|

...

This setting, together with the Database and Table settings, helps define the schema. If the data being written has a Hive schema, you can configure the Snap to read the schema instead of entering it manually. | ||

...

Set the value to a Hive Metastore URL where the schema is defined. |

...

Default value: [None] |

Database | The Hive Metastore database where the schema is defined. See the Hive Metastore URL setting for more information. | |

|---|---|---|

Table | The table in the Hive Metastore's database from which the schema must be read. See the Hive Metastore URL setting for more information. | |

|---|---|---|

Compression | Required. The compression type to be used when writing the file. | |

|---|---|---|

Column paths | Paths where the column values appear in the document. This property is required if the Hive Metastore URL property is empty. | |

|---|---|---|

...

Execute during preview

...

Enables you to execute the Snap during the Save operation so that the output view can produce the preview data.

Default value: Not selected

Troubleshooting

- Use Hive tables if your input documents contain complex data types such as maps and arrays.

- The Snap can only write data into HDFS and local file system.

- When executed in SnapReduce mode, the value of the File setting specifies the output directory of the MapReduce job.

...

Examples:

Default value: [None] |

|

| |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|


Examples

...


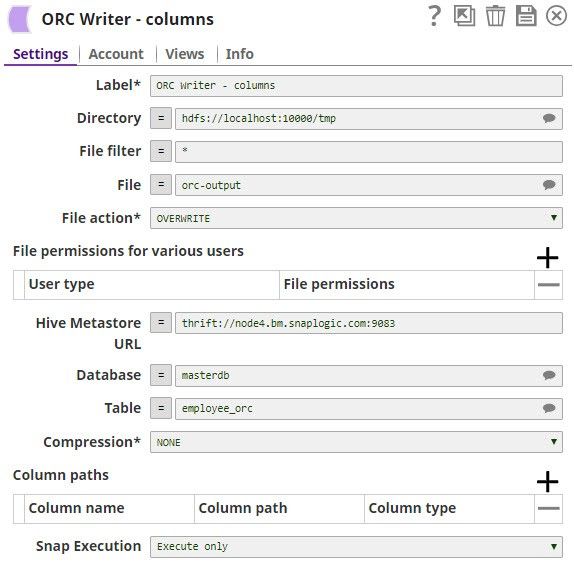

ORC Writer Writing to an HDFS Instance

Here is an example of an ORC Writer configured to write to a local instance of HDFS. The output is written to /tmp/orc-output. The Snap reads the schema from the employee_orc table in the masterdb database of the Hive Metastore. No column paths or compression are used. For an example of the schema, see the documentation on the Schema setting. |


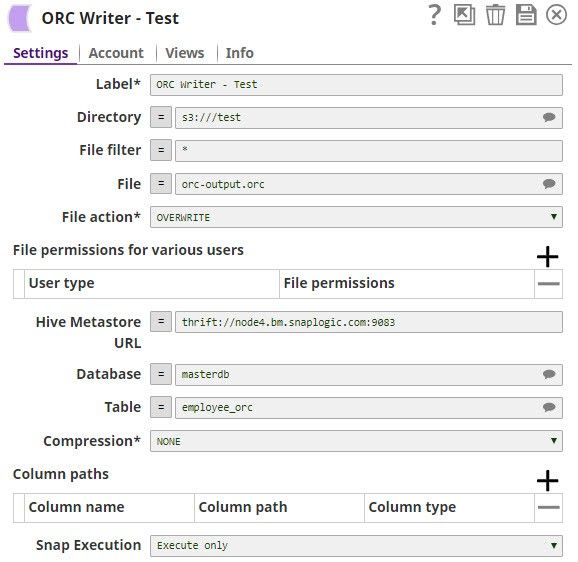

ORC Writer Writing to an S3 Instance

Here is an example of an ORC Writer configured to write to a local instance of S3. The output is written to /tmp/orc-output. The Snap reads the schema from the employee_orc table in the masterdb database of the Hive Metastore. No column paths or compression are used. For an example of the schema, see the documentation on the Schema setting. |

...

See Also

Read more about ORC at the Apache project's website, https://orc.apache.org/
