...

...

...

...

...

...

...

In this

...

article

| Table of Contents | ||

|---|---|---|

|

...

|

...

Snap type:

Transform

...

Description:

...

Overview

You can use this Snap to transform incoming data using the given mappings and produce new output data. This Snap evaluates an expression and writes the result to the

...

specified target path. If an expression fails to evaluate, use the Views tab to specify error handling

...

.

...

This Snap supports both binary and document data streams. The default input and output is document, but you can select Binary from the Views tab in the Snap's settings.

Structural Transformations

...

Structural Transformations

The following structural transformations from the Structure Snap are supported in the Mapper Snap:

Move - A move is equivalent to

...

a mapping without a pass-through. The source value is read from the input data and placed into the output data.

...

As the Pass through is disabled, the input data is not copied to the output. Also, the source value is treated as an expression in the Mapper, but it is a JSONPath in the Structure Snap. A jsonPath() function was added to the expression language that can be used to execute a JSONPath on a given value. If

...

Pass through is enabled, then you

...

should delete the old value.

Delete - Write a JSONPath in the source column and leave the target column blank.

Update - All of the cases for update can be handled by writing the appropriate JSONPath. For example:

Update value:

...

target path = $last_nameUpdate map:

...

target = $address.first_nameUpdate list:

...

target = $names[(value.length)]The '(value.length)' evaluates to the current length of the array, so the new value will be placed there at the end.

Update list of maps:

...

target = $customers[*].first_nameThis translates into "write the value into the 'first_name' field in all elements of the 'customers' array".

Update list of lists:

...

target = $lists_of_lists[*][(value.length)]

For performance reasons, the Mapper does not make a copy of any arrays or objects written to the Target Path. If you write the same array or object to more than one target path and plan to modify the object, make the copy yourself. For example, given the array "$myarray" and the following mappings: |

...

|

...

|

...

|

...

|

...

|

...

|

Any future changes made to either |

...

|

...

|

...

|

...

|

...

|

...

|

The same is true for objects, except you can make a copy using the ".extend()" method as shown below: |

...

|

...

|

...

|

...

|

...

|

...

|

...

[None]

...

Works in Ultra Pipelines.

...

Accounts are not used with this Snap.

...

This Snap has at most one document error view and produces zero or more documents in the view. If the Snap fails during the operation, an error document is sent to the error view containing the fields error, reason, original, resolution, and stacktrace:

| Code Block |

|---|

{ error: "$['SFDCID__c\"name'] is undefined" reason:

"$['SFDCID__c\"name'] was not found in the containing object." original: {[:{}

resolution: "Please check expression syntax and data types."

stacktrace: "com.Snaplogic.Snap.api.SnapDataException: ...

} |

Passing Binary Data

You would convert binary data to document data by preceding the Mapper Snap with the Binary-to-Document Snap. Likewise, to convert the document output of the Mapper Snap to binary data, you would add the Document-to-Binary Snap after the Mapper Snap.

Currently, you can do this transformation within the Mapper Snap itself. You set the Mapper Snap to take binary data as its input and output by using the $content expression.

| Note | ||

|---|---|---|

| ||

If you are only working with a binary stream as both input and output, you must set both source and target fields with $content, then manipulate the binary data using the Expression Builder. If you do not specify this mapping, then the binary stream from the binary input document is passed through unchanged. |

...

Settings

...

Label

...

Passing Binary Data

You can convert binary data to document data by adding the Binary-to-Document Snap upstream of the Mapper Snap. Similarly, to convert the document output of the Mapper Snap to binary data, add the Document-to-Binary Snap downstream of the Mapper Snap.

You can also transform binary data to document data within the Mapper Snap itself by using the

$contentexpression.

Binary Input and Output

If you are only working with a binary stream as both input and output, you must set both source and target fields with

$content, then manipulate the binary data using the Expression Builder. If you do not specify this mapping, then the binary stream from the binary input document is passed through without any change.

Snap Type

Mapper Snap is a TRANSFORM-type Snap that transforms data and passes it to the downstream Snap.

Prerequisites

None.

Support for Ultra Pipelines

Works in Ultra Pipelines.

Limitation

Expressions used in this Snap, downstream of any Snowflake Snaps, that evaluate to very large values such as EXP(900) are displayed as Infinity in the input/output previews. However, you can see the exact evaluated values in the validation previews. Learn more: Java Script Limitations in Displaying Numbers.

Snap Views

Type | Format | Number of Views | Examples of Upstream and Downstream Snaps | Description |

|---|---|---|---|---|

Input | Document |

| Binary-to-Document | This Snap can have a most one document or binary input view. If you do not specify an input view, the Snap generates a downstream flow of one row. |

Output | Document |

| Any Document Snap | This Snap has exactly one document or binary output view. |

Error | Error handling is a generic way to handle errors without losing data or failing the Snap execution. You can handle the errors that the Snap might encounter while running the Pipeline by choosing one of the following options from the When errors occur list under the Views tab. The available options are:

Learn more about Error handling in Pipelines. | |||

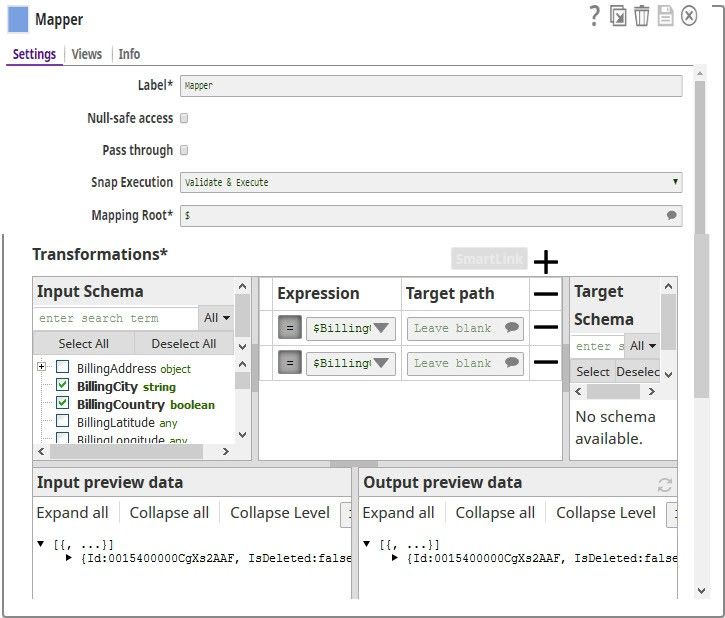

Snap Settings

Field Name | Field Type | Description | |

|---|---|---|---|

Label* Default Value: Mapper | String | The name for the Snap. You can modify this to be more specific, especially if you have more than one of the same Snap in your | |

...

Pipeline. | |

Null-safe access | |

|---|---|

...

Default Value: Deselected | Checkbox | Select this checkbox to set the target value to null in case the source path does not exist. For | |

|---|---|---|---|

...

example, |

...

|

...

in the source data. |

...

Selecting this checkbox allows the Snap to write null to |

...

displaying an error. |

...

If you deselect this checkbox, the Snap fails if the source path does not exist, ignores the record entirely, or writes the record to the error view depending on the setting of the error view |

...

field. | |

Pass through | |

|---|---|

...

Default Value:Deselected | Checkbox | This setting determines if data should be passed through or not. If you select this checkbox, then all the original input data is passed into the output document together with the data transformation results. | |

|---|---|---|---|

...

If you deselect this checkbox, then only the data transformation results that are defined in the mapping section |

...

appear in the output document and the input data |

...

is discarded.

|

Default: Not selected.

When to always select Pass through Always select Pass through if you plan to leave the Target path field blank |

...

; |

...

else, the Snap |

...

displays an error |

...

that the field that you want to delete |

...

does not exist. This is the expected behavior. |

...

For example, you have an input file that contains a number of attributes; but you need only two of these downstream. So, you connect a Mapper to the downstream Snap supplying the input file, select the two attributes you need by listing them in the Expression fields, leave the Target path field blank, and select Pass through. When you execute the |

...

Pipeline, |

...

this Snap evaluates the input documents/binary data and picks up the two attributes that you want, and passes the entire document/binary data |

...

through to the Target schema. From the list of available attributes in the Target Schema, the Mapper Snap picks up the two attributes you listed in the Expression fields, and passes them as output. However, if you |

...

had not selected the Pass through |

...

checkbox, the Target Schema would be empty, and the Mapper would |

...

Mapping Root

...

display a | |||

Transformations* | Use this field set to configure the settings for data transformations. | ||

|---|---|---|---|

Mapping Root Default Value: $ | String/Suggestion | Specify the sub-section of the input data to be mapped. | |

...

Learn More: Understanding the Mapping Root. |

Default: $

...

Required. Expression and target to write the result of the expression. Expressions that are evaluated will remove the source targets at the end of the run. For example:

Input Schema | Dropdown list | Select the input data (that comes from the upstream Snap) that you want to transform. Drag the item you want to map and place it under the Mapping table. | |

|---|---|---|---|

Mapping table | Use this field set to specify the source path, expression, and target path columns used to map schema structure. | ||

Expression Default Value: N/A | |||

...

|

...

String/Expression | Specify the expression to write to the target path. Expressions that are evaluated will remove the source targets at the end of the run.

|

...

|

...

|

...

|

...

Lear More: Understanding Expressions in SnapLogic |

...

and Using Expressions for usage guidelines. |

Target Path Recommendation

Iris simplifies configuring the Target path property in the Mapper Snap by recommending suggestions for the Expression and Target path property mapping. To make these suggestions, Iris analyzes Expression and Target path mappings in other Pipelines in your Org and suggests the exact matches for the Expressions in your current Pipeline. The suggestions are displayed upon clicking

For example, you have the Expression $Emp.Emp_Personal.FirstName in one of your Pipelines. And you have set the Target path for this expression as $FirstName. Now, if you use the expression $Emp.Emp_Personal.FirstName in a new Pipeline, then Iris suggests $FirstName as one of the recommended Target paths. This helps you standardize the naming standards within your org.

The following video illustrates how Iris recommends Target path in a Mapper Snap:

...

Managing Numeric Inputs in Mapper Expressions

...

Say the value being passed from upstream for $num is 20.05. You would expect the value of $numnew to now be 120.05. But, when you execute the Snap, the value of $numnew is shown as 20.05100.

This happens because, as of now, the Mapper Snap reads all incoming data as strings, unless they are expressly listed as integers (INT) or decimals (FLOAT). So, to ensure that the upstream numeric data is appropriately interpreted, parse the data as a float. This will convert the numeric data into a decimal; and all calculations performed on the upstream data in the Mapper Snap will work as expected:

...

The value of $numnew is now shown as 120.05.

...

| Multiexcerpt include macro | ||||

|---|---|---|---|---|

|

...

| Multiexcerpt include macro | ||||

|---|---|---|---|---|

|

Mapping Table

The mapping table makes it easier to do the following:

- Determine which fields in a schema are mapped or unmapped.

- Create and manage a large mapping table through drag-and-drop.

- Search for specific fields.

For more information, see Using the Mapping Table.

Example

Removing Columns from Excel Files Using Mapper

In this example, you read an Excel file from the SLDB and remove columns that you do not need from the file. You then write the updated data back into the SLDB as a JSON file.

...

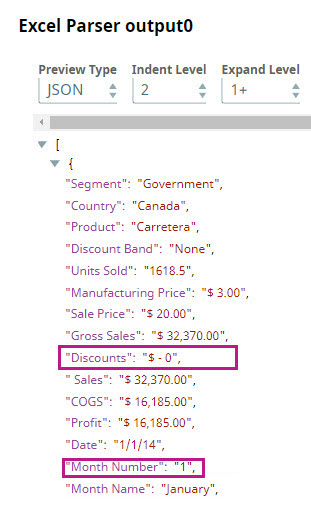

Parse the file using the Excel Parser Snap. You can preview the parsed data by clicking the icon.

...

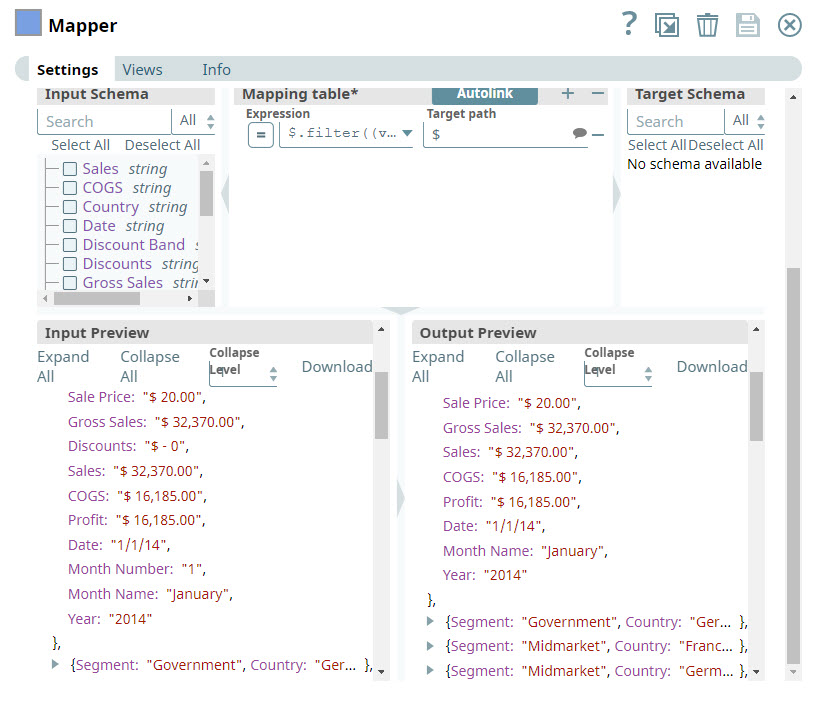

In the Expression field, you enter the criteria that you want to use to remove the Discounts and Month Name columns.

...

| ||||

Target path Default Value: N/A | String/Suggestion | Specify the target path at which the expression should be written. Target Path Recommendation

For example, you have the Expression $Emp.Emp_Personal.FirstName in one of your Pipelines. And you have set the Target path for this expression as $FirstName. Now, if you use the expression $Emp.Emp_Personal.FirstName in a new Pipeline, then Iris suggests $FirstName as one of the recommended Target paths. This helps you standardize the naming standards within your org. The following video illustrates how Iris recommends Target path in a Mapper Snap: | ||

Snap Execution | Dropdown list | Select one of the three modes in which the Snap executes. Available options are:

| ||

|---|---|---|---|---|

Mapping Table

The mapping table makes it easier to do the following:

Determine which fields in a schema are mapped or unmapped.

Create and manage a large mapping table through drag-and-drop.

Search for specific fields.

Learn More: Using the Mapping Table.

Examples

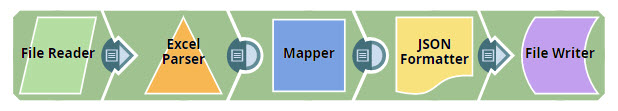

Removing Columns from Excel Files Using Mapper

In this example, you read an Excel file from the SLDB and remove columns that you do not need from the file. You then write the updated data back into the SLDB as a JSON file.

...

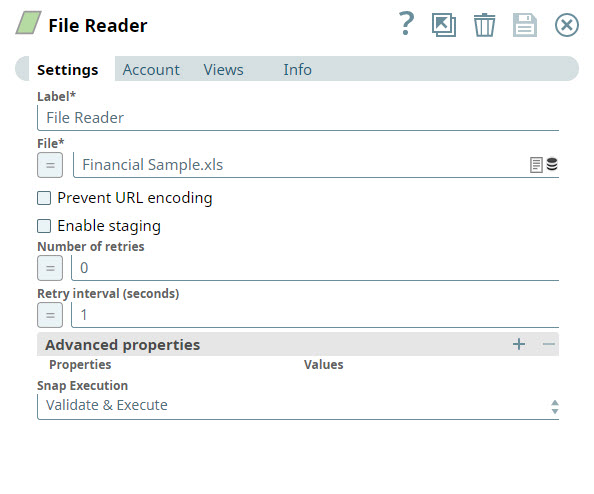

Add a File Reader Snap to the Canvas and configure it to read the Excel file from which you want to remove specific columns. | Parse the file using the Excel Parser Snap. You can preview the parsed data by clicking the |

From the preview file, you can see the columns that you want to remove. In this instance, you decide to remove the Discounts and Month Number columns. To do so, you add a Mapper Snap to the Pipeline. | In the Expression field, you enter the criteria (as shown below) that you want to use to remove the Discounts and Month Name columns. |

|

...

|

...

Enter $ in the Target field to indicate that you want to leave the other column names unchanged.

...

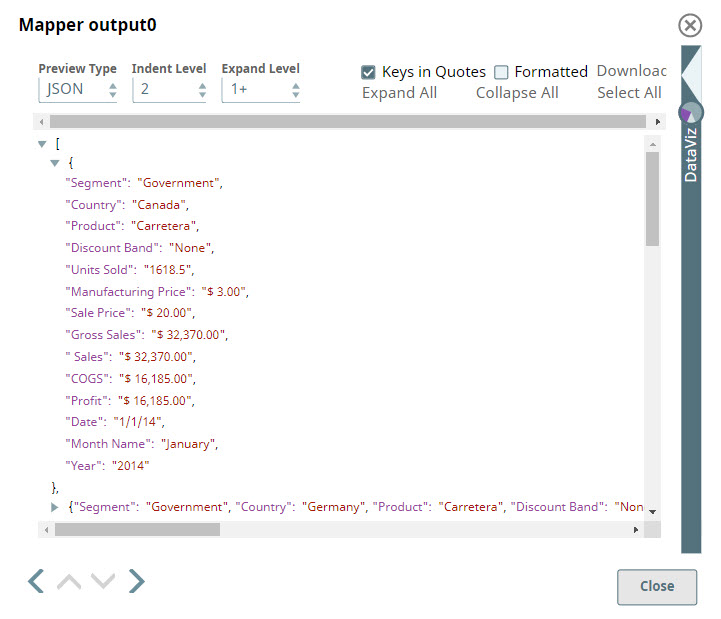

Validate the Snap,

...

you can see that the Discounts and Month Name columns are skipped.

...

You now need to write the updated data back into the SLDB as a JSON file.

...

Add a JSON Formatter Snap to the Pipeline to convert the documents coming in from the Mapper Snap into binary data.

...

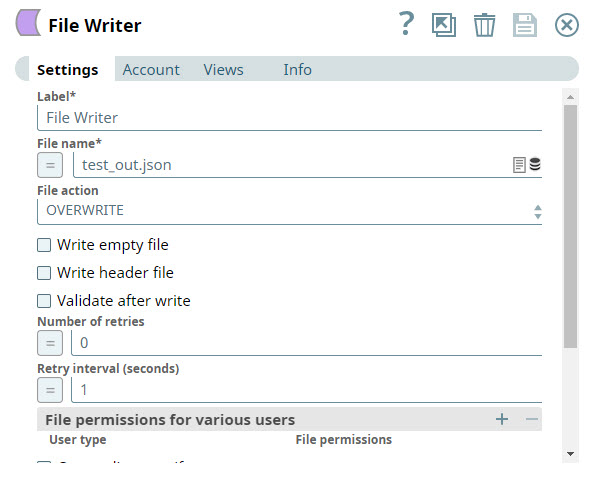

Then add a File Writer Snap and configure it to write the input streaming data to the SLDB.

...

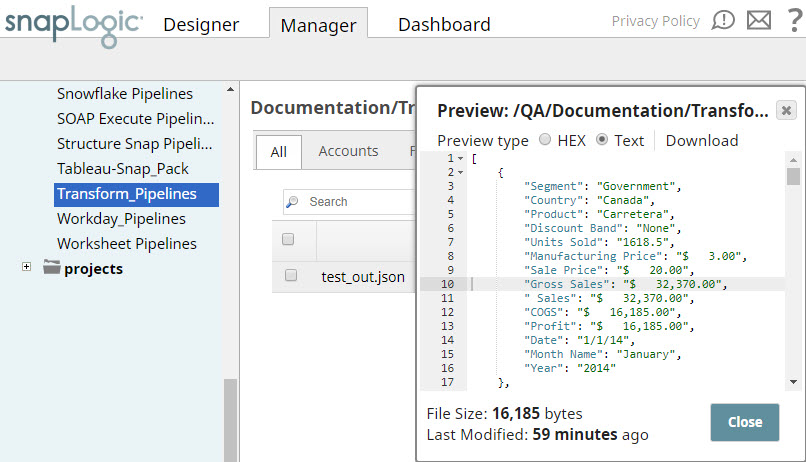

You can now view the saved file in the destination project in SnapLogic Manager.

...

Data Output Example

...

Successful Mapping | ||

|---|---|---|

If your source data looks | ||

...

like | And your mapping looks like | Your outgoing data will look like |

|

And your mapping looks like:

|

...

|

Your outgoing data will look like:

| ||

Unsuccessful Mapping | ||

...

If your source data looks like:

...

| ||

...

And your mapping looks like:

- Expression: $middle_name.concat(" ", $last_name)

- Target path: $full_name

An error will be thrown.

Example:

Escaping Special Characters in Source Data

This example demonstrates how you can use the Mapper Snap to customize source data containing special characters so that it is correctly read and interpreted by downstream Snaps.

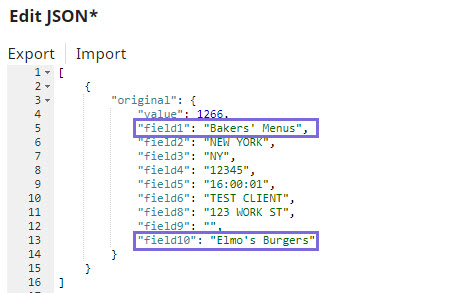

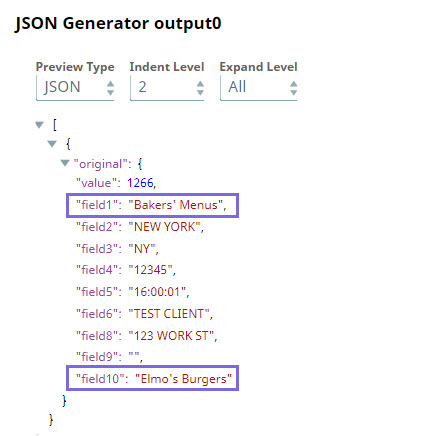

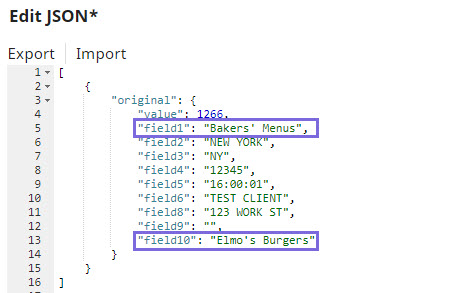

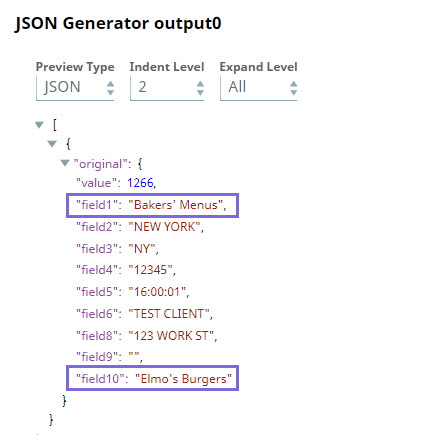

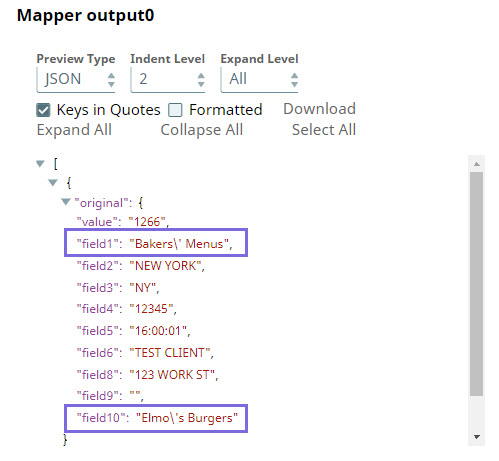

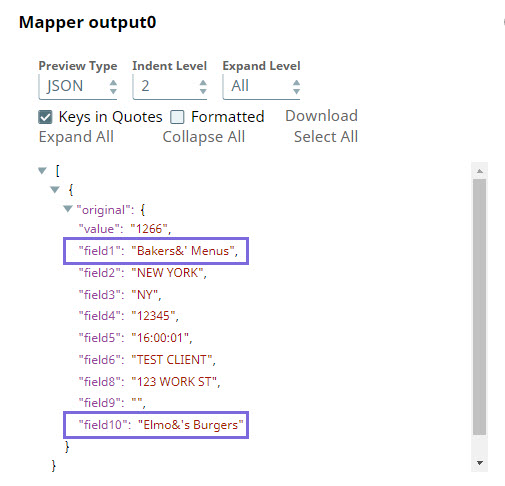

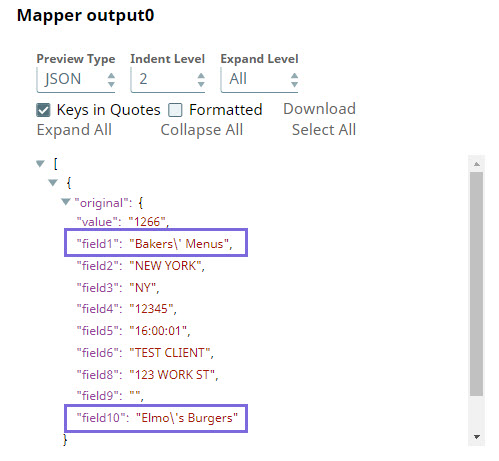

In the sample Pipeline, custom JSON data is provided in the JSON Generator Snap, wherein the values of field1 and field10 include the special character (').

The output preview of the JSON Generator Snap displays the special character correctly:

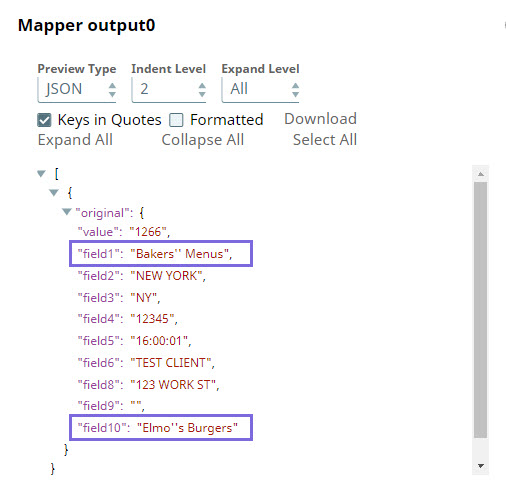

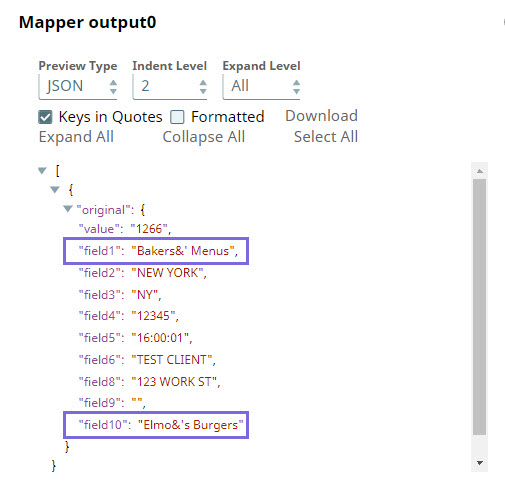

Before sending this data to downstream Snaps, you may need to prefix the special characters with an escape character so that downstream Snaps correctly interpret these.

You can do this using the Expression field in the Mapper Snap. Based on the accepted escape characters in the endpoint, you can select from the following expressions:

...

| An error is displayed. |

Escaping Special Characters in Source Data

This example demonstrates how you can use the Mapper Snap to customize source data containing special characters so that it is correctly read and interpreted by downstream Snaps.

...

Add custom JSON data in the JSON Generator Snap, wherein the values of | The output preview of the JSON Generator Snap displays the special character correctly: |

Before sending this data to downstream Snaps, you may need to prefix the special characters with an escape character so that downstream Snaps correctly interpret these. You can do this using the Expression field in the Mapper Snap. Based on the accepted escape characters in the endpoint, you can select from the following expressions:

If the Escape Character is | Use Expression | Sample Output |

|---|---|---|

Single quote (') | JSON: $original.mapValues((value,key)=> value.toString().replaceAll("'","''")) OR $original.mapValues((value,key)=> value.toString().replaceAll("'","\''")) CSV: $[' Business-Name'].replace ("'","''") | |

Ampersand (&) | JSON: $original.mapValues((value,key)=> value.toString().replaceAll("'"," |

...

\&'")) OR $original.mapValues((value,key)=> value.toString().replaceAll("'"," |

...

&'")) CSV: $[' Business-Name'].replace ("'"," |

...

&'") |

...

...

Backslash ( |

...

\) | JSON: $original.mapValues((value,key)=> value.toString().replaceAll("'","\ |

...

\'")) |

OR

CSV: $[' Business-Name'].replace ("'"," |

...

In this way, you can customize the data to be passed on to downstream Snaps using the Expression field in the Mapper Snap.

Refer to the Community discussion for more information.

See it in Action

The SnapLogic Data Mapper

...

\\'") |

...

Backslash (\)

JSON:

$original.mapValues((value,key)=> value.toString().replaceAll("'","\\'"))

| Info |

|---|

Backslash is configured as an escape character in SnapLogic. Therefore, it must itself be escaped to be displayed as text. |

CSV:

$[' Business-Name'].replace ("'","\\'")

In this way, you can customize the data to be passed on to downstream Snaps using the Expression field in the Mapper Snap.

Refer to the Community discussion for more information.

See it in Action

The SnapLogic Data Mapper

| Widget Connector | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|

|

SnapLogic Best Practices: Data Transformations and Mappings

| Widget Connector | ||||||||

|---|---|---|---|---|---|---|---|---|

|

...

SnapLogic Best Practices: Data Transformations and Mappings

| Widget Connector | ||

|---|---|---|

|

| View file | ||||

|---|---|---|---|---|

|

| Attachments | ||

|---|---|---|

|

See Also

...

|

| Attachments | ||

|---|---|---|

|

...

Related Links

Community Links:

| Insert excerpt | ||||||

|---|---|---|---|---|---|---|

|