On this Page

| Table of Contents | ||||||

|---|---|---|---|---|---|---|

|

Overview

Snap type: | Write | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

Description: | This Snap executes a Snowflake bulk upsert. The Snap bulk updates the records if present , or inserts records to the target Snowflake table. Incoming documents are first written to a staging file on Snowflake's internal staging area. A temporary table is created on Snowflake with the contents of the staging file. An update operation is then run to update existing records in the target table and/or an insert operation is run to insert new records into the target table. Depending on the account that you configure with the Snap, you can use the Snowflake Bulk Upsert Snap to upsert data into AWS S3 buckets or Microsoft Azure Storage Blobs.

| |||||||||||||

| Prerequisites: |

Security prerequisites: You should have the following permission in your Snowflake account to execute this Snap:

The following commands enable minimum privileges in the Snowflake Console:

For more information on Snowflake privileges, refer to Access Control Privileges. The below are mandatory when using an external staging location: When using an Amazon S3 bucket for storage:

When using a Microsoft Azure storage blob:

| |||||||||||||

| Internal SQL Commands | This Snap uses the following commands internally:

| |||||||||||||

| Support and limitations: |

| |||||||||||||

| Account: | This Snap uses account references created on the Accounts page of SnapLogic Manager to handle access to this endpoint. The S3 Bucket, S3 Access-key ID, and S3 Secret key properties are not needed for the Snowflake Bulk Upsert Snap as Snowflake uses its internal staging area to host the input data. See Configuring Snowflake Accounts for information on setting up this type of account. | |||||||||||||

| Views: |

| |||||||||||||

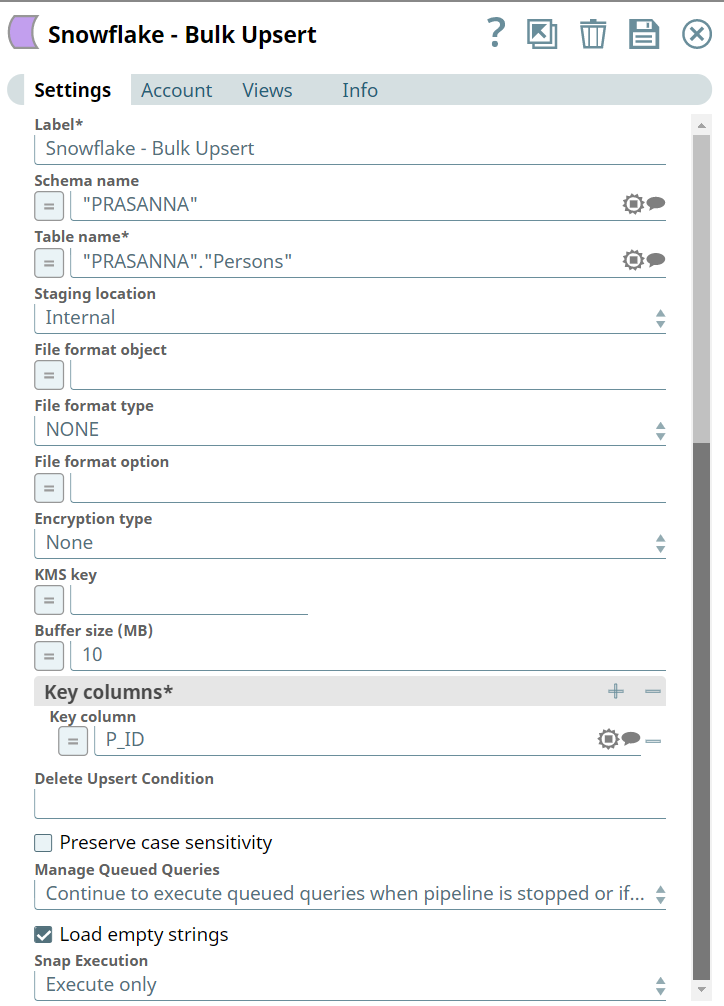

Settings | ||||||||||||||

Label | Required. The name for the Snap. You can modify this to be more specific, especially if you have more than one of the same Snap in your pipeline. | |||||||||||||

Schema name | The database schema name. Selecting a schema filters the Table name list to show only those tables within the selected schema.

| |||||||||||||

Table name | Required. Table on The table on which to execute the bulk load operation.

| |||||||||||||

| Staging location | The type of staging location that is used for data loading. The options available include:

Default value: Internal | |||||||||||||

| File format object | Specifies an existing file format object to use for loading data into the table. The specified file format object determines the format type such as CSV, JSON, XML, and AVRO, or other format options for data files. Default value: [None] | |||||||||||||

| File format type | Specifies a predefined file format object to use for loading data into the table. The available file formats include CSV, JSON, XML and AVRO. Default value: NONE | |||||||||||||

| File format option | Specifies the file format option; separate multiple options by using blank spaces and commas.

Default value: [None] | |||||||||||||

| Encryption type | Specifies the type of encryption to be used on the data. The available encryption options are:

Default value: No default value.

| |||||||||||||

| KMS key | The KMS key that you want to use for S3 encryption. For more information about the KMS key, see AWS KMS Overview and Using Server Side Encryption. Default value: No default value.

| |||||||||||||

| Buffer size (MB) | The size of each part, or chunk, of data being uploaded, in MB. Minimum value: 6 MB Maximum value: 5000 MB Default value: 10 MB The Snap uses the S3 Multipart Upload API to write files to S3. For more details, see Amazon S3 Multipart Upload Limits. | |||||||||||||

Key columns | Required. Columns to use to check for existing entries in the target table. Default: None | |||||||||||||

Delete Upsert Condition | Delete Upsert Condition when true, causes the case to be executed. | |||||||||||||

| Preserve case sensitivity |

| |||||||||||||

Manage Queued Queries | Select this property to decide whether the Snap should continue or cancel the execution of the queued Snowflake Execute SQL queries when you stop the pipeline.

Default value: Continue to execute queued queries when the pipeline is stopped or if it fails | |||||||||||||

| Load empty strings | If selected, empty string values in the input documents are loaded as empty strings to the string-type fields. Otherwise, empty string values in the input documents are loaded as null. Null values are loaded as null regardless. Default value: Selected | |||||||||||||

|

| |||||||||||||

Example

Upserting Records

This example Pipeline demonstrates how you can efficiently update and delete data (rows) using the Key Column ID field and Upsert Delete condition. We use Snowflake - Bulk Upsert Snap to accomplish this task.

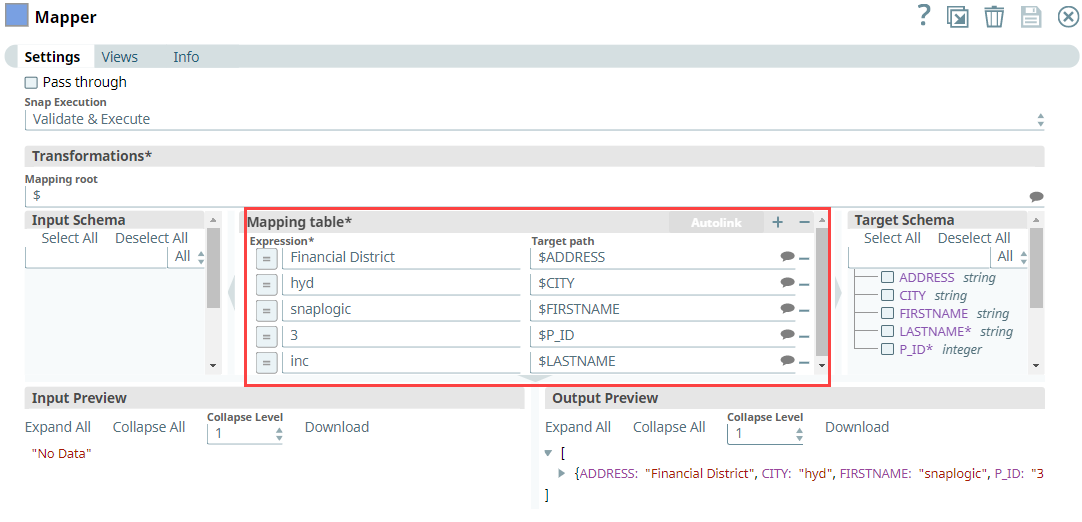

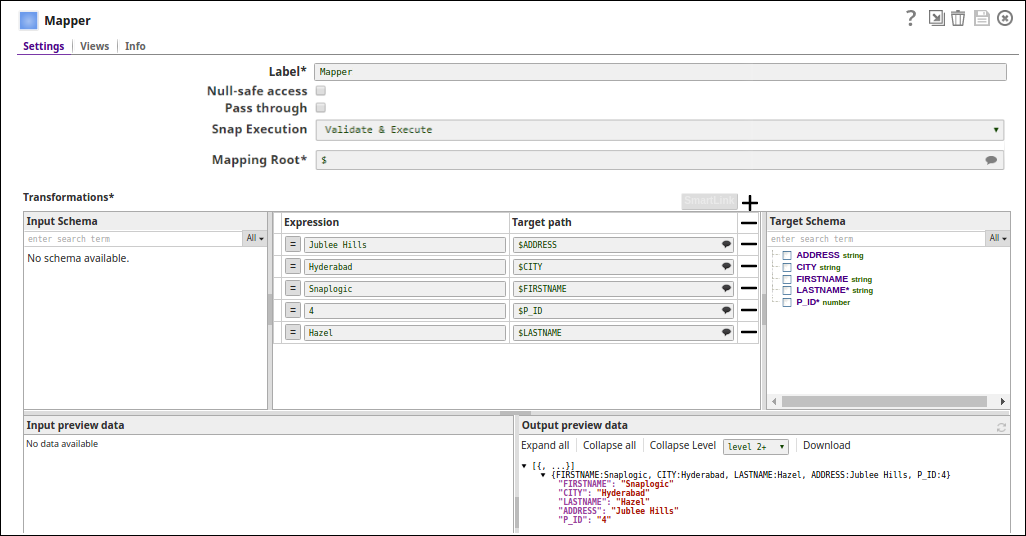

First, we configure the Mapper Snap with the required details to pass them as inputs to the downstream Snap.

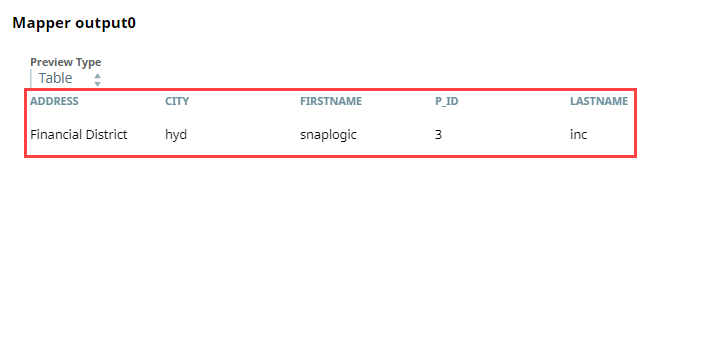

After validation, the Mapper Snap prepares the output as shown below to pass to the Snowflake Bulk - Upsert Snap.

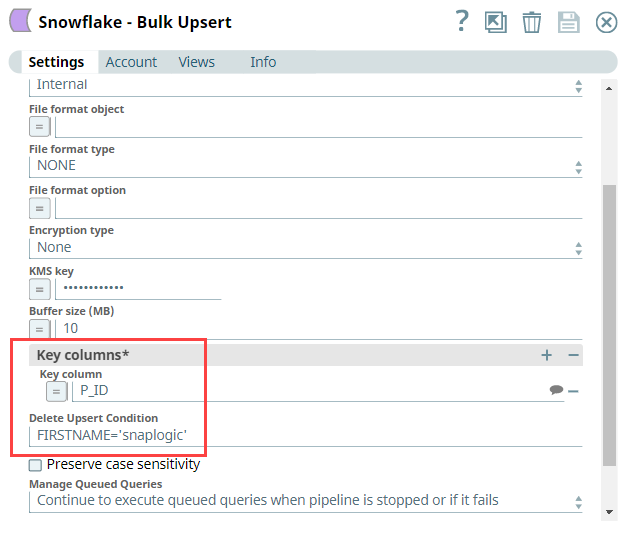

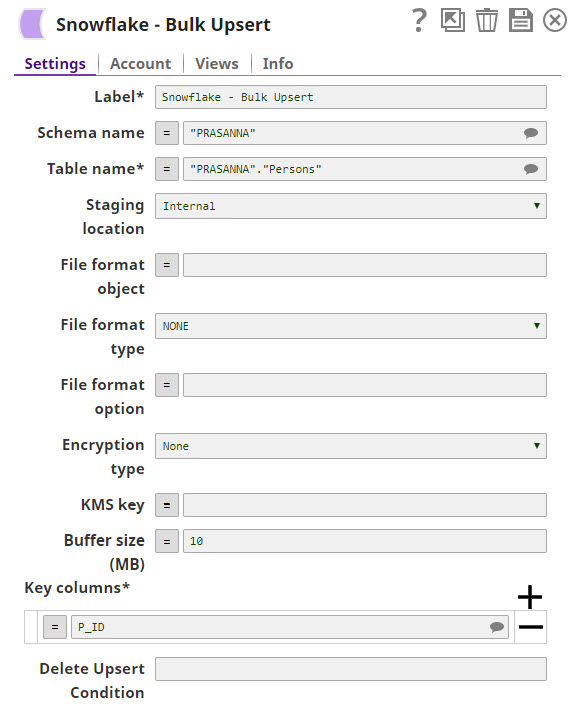

Next, we configure the Snowflake - Bulk Upsert Snap to:

- Upsert the existing row for P_ID column, (so, we provide P_ID in the Key column field).

- Delete the rows where the FIRSTNAME is snaplogic in the target table, (so, we specify FIRSTNAME = 'snaplogic' in the Delete Upsert Condition field).

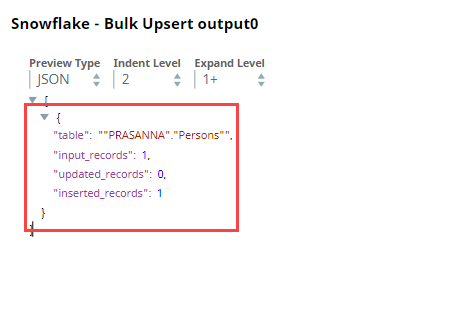

After execution, this Snap inserts a new record into the existing row for the P_ID key column in Snowflake.

| Inserted Records Output in JSON | Inserted Records in Snowflake |

|---|---|

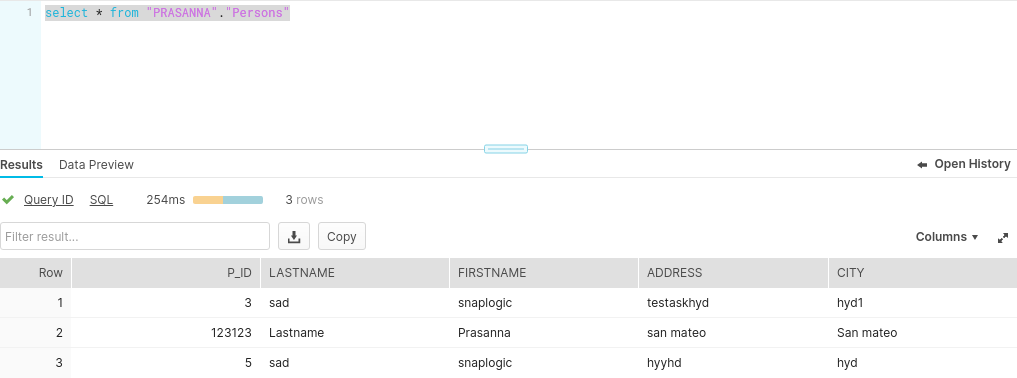

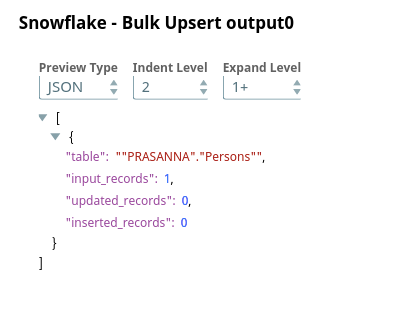

Upon execution, if the Delete Upsert condition is true, the Snap deletes the records in the target table as shown below.

| Output in JSON | Deleted Record in Snowflake |

|---|---|

Bulk Loading Records

In the following example, we update a record using the Snowflake Bulk Upsert Snap. The invalid records which cannot be inserted will be routed to an error view.

In the pipeline execution:

- The Mapper (Data) Snap maps the record details to the input fields of Snowflake Upsert Snap:

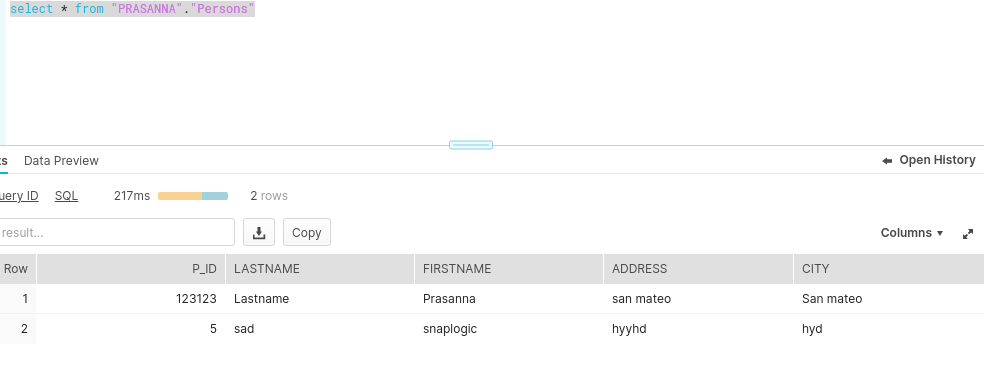

- Snowflake Upsert Snap updates the record using the "PRASANNA"."Persons" table object:

- Successful execution of the pipeline displays the following data preview (Note that one input has been made and two records with the name P_ID have been updated within the Persons table.):

| Attachments | ||

|---|---|---|

|

| Insert excerpt | ||||||

|---|---|---|---|---|---|---|

|

.png?version=1&modificationDate=1489733394354&cacheVersion=1&api=v2)

.png?version=1&modificationDate=1489733387721&cacheVersion=1&api=v2)