On this Page

| Table of Contents | ||||

|---|---|---|---|---|

|

Snap type: | Write | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

Description: | This Snap executes a Redshift bulk upsert. The Snap bulk updates the records if present, or, inserts records into the target table. Incoming documents are first written to a staging file on S3. A temporary table is created on Redshift with the contents of the staging file. An update operation is then run to update existing records in the target table and/or an insert operation is run to insert new records into the target table.

| |||||||||||||

| Prerequisites: |

| |||||||||||||

| Support and limitations: | Works in Does not work in Ultra Pipelines. | |||||||||||||

| Account: | This Snap uses account references created on the Accounts page of SnapLogic Manager to handle access to this endpoint. The S3 Bucket, S3 Access-key ID and S3 Secret key properties are required for the Redshift-Bulk Upsert Snap. The S3 Folder property may be used for the staging file. If the S3 Folder property is left blank, the staging file will be stored in the bucket. See Configuring Redshift Accounts for information on setting up this type of account. | |||||||||||||

| Views: |

| |||||||||||||

Settings | ||||||||||||||

Label | Required. The name for the Snap. You can modify this to be more specific, especially if you have more than one of the same Snap in your pipeline. | |||||||||||||

Schema name | The database schema name. Selecting a schema filters the Table name list to show only those tables within the selected schema.

| |||||||||||||

Table name | Required. Table on which to execute the bulk load operation.

| |||||||||||||

Key columns | Required. Columns to use to check for existing entries in the target table. Default: None | |||||||||||||

Validate input key value | If selected, all duplicates and null key-column values in the input data will be written to the error view and the bulk upsert operation will stop. Or else, duplicates are inserted into the target table unless same duplicate rows already exist in the target table and null key-column values may cause unexpected result. The detection of duplicates is performed after all data is copied to S3 and then to a temporary table in Redshift and before the data is updated or inserted upsert operation will stop. Or else, duplicates are inserted into the target table unless same duplicate rows already exist in the target table and null key-column values may cause unexpected result. The detection of duplicates is performed after all data is copied to S3 and then to a temporary table in Redshift and before the data is updated or inserted into the target table. Any two input documents with the same values for all key columns are considered 'duplicates'. If unchecked, duplicates in the input data will be inserted into the target table unless one or more duplicates already exist in the target table. Please note that Redshift allows duplicate rows to be inserted regardless of primary columns or key columns. Default: Not selected | |||||||||||||

Truncate data | Truncate existing data before performing data load. With the Bulk Update Snap, instead of doing truncate and then update, a Bulk Insert would be faster. Default: Not Selected | |||||||||||||

Update statistics | Update table statistics after data load by performing an Analyze operation on the table. Default value: Not selected | |||||||||||||

Accept invalid characters | Accept invalid characters in the input. Invalid UTF-8 characters are replaced with a question mark when loading. Default value: Selected | |||||||||||||

Maximum error count | Required. The Maximum number of rows which can fail before the bulk load operation is stopped. Example: 10 (if you want the pipeline execution to continue as far as the number of failed records is less than 10) | |||||||||||||

Truncate columns | Truncate column values which are larger than the maximum column length in the table Default value: Selected | |||||||||||||

Disable data compression | Disable compression of data being written to S3. Disabling compression will reduce CPU usage on the Snaplex machine, at the cost of increasing the size of data uploaded to S3. Default value: Not selected | |||||||||||||

Load empty strings | If selected, empty string values in the input documents are loaded as empty strings to the string-type fields. Otherwise, empty string values in the input documents are loaded as null. Null values are loaded as null regardless. Default value: Not selected | |||||||||||||

Additional options | Additional options to be passed to the COPY command. Check http://docs.aws.amazon.com/redshift/latest/dg/r_COPY.html for available options.

Example: Date format can be specified as DATEFORMAT 'MM-DD-YYYY' Default value: [None] | |||||||||||||

Parallelism | Defines how many files will be created in S3 per execution. If set to 1 then only one file will be created in S3 which will be used for the copy command. If set to n with n > 1, then n files will be created as part of a manifest copy command, allowing a concurrent copy as part of the Redshift load. The Snap itself will not stream concurrent to S3. It will use a round robin mechanism on the incoming documents to populate the n files. The order of the records is not preserved during the load. | |||||||||||||

IAM role | This property enables you to perform the bulk load using IAM role. If this option is selected, ensure that the AWS account ID, role name and region name are provided in the account. Default value: Not selected | |||||||||||||

Server-side encryption | This defines the S3 encryption type to use when temporarily uploading the documents to S3 before the insert into the Redshift. Default value: Not selected | |||||||||||||

| KMS Encryption type | Specifies the type of KMS S3 encryption to be used on the data. The available encryption options are:

Default value: None

| |||||||||||||

| KMS key | Conditional. This property applies only when the encryption type is set to Server-Side Encryption with KMS. This is the KMS key to use for the S3 encryption. For more information about the KMS key, refer to AWS KMS Overview and Using Server Side Encryption. Default value: [None] | |||||||||||||

| Vacuum type | Reclaims space and sorts rows in a specified table after the upsert operation. The available options to activate are FULL, SORT ONLY, DELETE ONLY and REINDEX. Refer to the AWS document on "Vacuuming Tables" for more information.

Default value: NONE | |||||||||||||

| Vacuum threshold (%) | Specifies the threshold above which VACUUM skips the sort phase. If this property is left empty, Redshift sets it to 95% by default. Default value: [None] | |||||||||||||

Encryption type | This defines the S3 encryption type to use when temporarily uploading the documents to S3 before the insert into Redshift. One of the following three options can be selected from the drop-down menu:

Default value: None | |||||||||||||

| KMS key | Conditional. This property only applies if encryption type is set to Server-Side Encryption with KMS. This is the KMS key to use for the S3 encryption. For more information about the KMS key refer to AWS KMS Overview and Using Server Side Encryption. Default value: [None] | |||||||||||||

|

When enabled, the SOAP request will be executed and if the Snap has an output view defined, then the response will be written to the output view of the Snap. | |||||||||||||

| Note |

|---|

| If string values in the input document contain the '\0' character (string terminator), the Redshift COPY command, which is used by the Snap internally, fails to handle them properly. Therefore, the Snap skips the '\0' characters when it writes CSV data into the temporary S3 files before the COPY command is executed. |

Redshift's Vacuum Command

In Redshift, when rows are DELETED or UPDATED against a table they are simply logically deleted (flagged for deletion), not physically removed from disk. This causes the rows to continue consuming disk space and those blocks are scanned when a query scans the table. This results in an increase in table storage space and degraded performance due to otherwise avoidable disk IO during scans. A vacuum recovers the space from deleted rows and restores the sort order.

Example

The following example will illustrate the usage of the Redshift Upsert Snap. In this example, we update a record using the Redshift Upsert Snap.

In the pipeline execution:

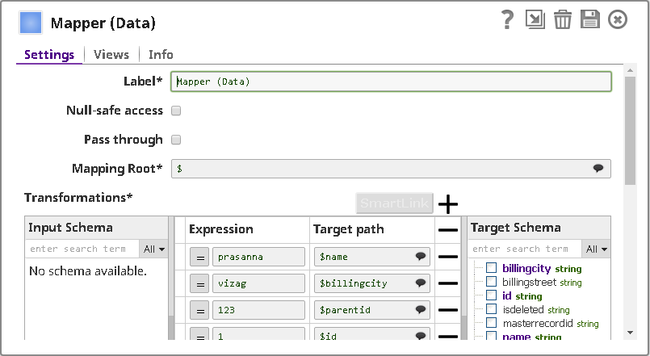

Mapper (Data) Snap maps the record details to the input fields of Redshift Upsert Snap:

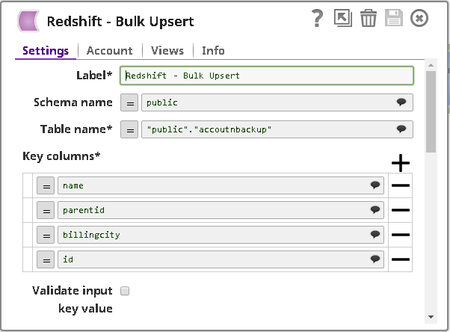

Redshift Upsert Snap updates the record using the Accountnbackup table object:

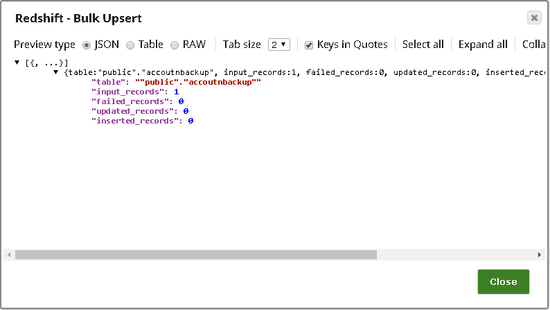

After the pipeline executes, the Redshift Upsert Snap shows the following data preview:

| Insert excerpt | ||||||

|---|---|---|---|---|---|---|

|