
Inception-v3 is a very deep convolutional neural network that can recognize objects in images. In this example, we write a Python script that uses the Keras library to host Inception-v3 in a SnapLogic pipeline. Inception-v3 has been reported to reach 78.8% top-1 accuracy and 94.4% top-5 accuracy on ImageNet. The model has a depth of 159 and 23,851,784 parameters.

Here is the link to the original research paper.

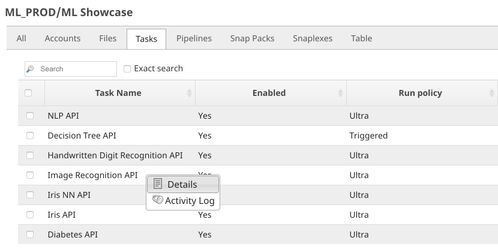

The live demo is available at our Machine Learning Showcase.

Objectives

- Model Hosting: Use the Remote Python Script Snap from the ML Core Snap Pack to deploy a Python script that hosts the Inception-v3 model, and schedule an Ultra Task to expose it as an API.

- API Testing: Use the REST Post Snap to send a sample request to the Ultra Task and verify that the API works as expected.

To accomplish these objectives, we build two pipelines (Model Hosting and API Testing) and an Ultra Task. Each pipeline is described in the Pipelines section below.

Pipelines

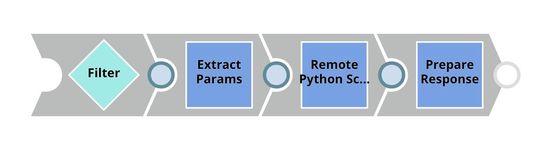

Model Hosting

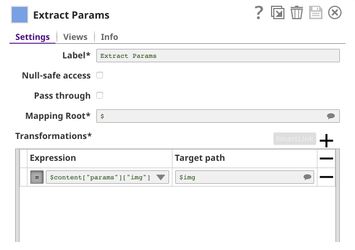

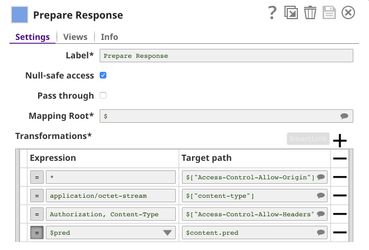

This pipeline is scheduled as an Ultra Task to provide a REST API that external applications can access. The core component of this pipeline is the Remote Python Script Snap, which downloads the Inception-v3 model and recognizes objects in the incoming images. The Filter Snap authenticates each request by checking a token, which can be changed in the pipeline parameters. The Extract Params Snap (Mapper) extracts the required fields from the request. The Prepare Response Snap (Mapper) maps the prediction to $content.pred, which becomes the response body. This Snap also adds headers that allow Cross-Origin Resource Sharing (CORS).
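To reason about the request flow outside SnapLogic, the following Python sketch mirrors what the Filter, Extract Params, and Prepare Response Snaps do. It is not the pipeline's actual expression configuration: the function names, the `token` and `params.img` request fields, the placeholder token, and the `Access-Control-Allow-Origin: *` header value are all assumptions for illustration.

```python
from typing import Any, Dict, Optional

# Hypothetical token; in the real pipeline it is set through a pipeline parameter.
EXPECTED_TOKEN = "change-me"


def authenticate(request: Dict[str, Any], expected_token: str = EXPECTED_TOKEN) -> bool:
    """Filter Snap equivalent: pass the request only if the token matches."""
    return request.get("token") == expected_token


def extract_params(request: Dict[str, Any]) -> Dict[str, Any]:
    """Extract Params equivalent: keep only the field the Python script reads (assumed params.img)."""
    return {"img": request.get("params", {}).get("img")}


def prepare_response(prediction: Dict[str, Any]) -> Dict[str, Any]:
    """Prepare Response equivalent: place the prediction under content.pred and add a CORS header."""
    return {
        "content": {"pred": prediction["pred"]},
        # Assumed header value: allows browsers on any origin to call the API.
        "headers": {"Access-Control-Allow-Origin": "*"},
    }


def handle(request: Dict[str, Any], predict) -> Optional[Dict[str, Any]]:
    """Run the steps in pipeline order; `predict` stands in for the Remote Python Script Snap."""
    if not authenticate(request):
        return None  # The Filter Snap drops documents that fail the check.
    return prepare_response(predict(extract_params(request)))
```

Keeping authentication as the first step means an invalid request never reaches the comparatively expensive model call.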

Python Script

Below is the script from the Remote Python Script Snap used in this pipeline. It defines three main functions: snaplogic_init, snaplogic_process, and snaplogic_final. snaplogic_init is executed once before any input data is consumed, snaplogic_process is called on each incoming document, and snaplogic_final is executed after snaplogic_process has consumed all incoming documents.
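The call order can be illustrated with a small local harness. `run_script` below is a hypothetical stand-in for the Snap's runtime, not SnapLogic code; it only assumes the order described above: `snaplogic_init` once, `snaplogic_process` once per document, then `snaplogic_final` once.

```python
from typing import Any, Dict, Iterable, List

# Records the order in which the three functions are called.
calls: List[str] = []


def snaplogic_init():
    calls.append("init")
    return None


def snaplogic_process(row: Dict[str, Any]) -> Dict[str, Any]:
    calls.append("process")
    return {"echo": row}


def snaplogic_final():
    calls.append("final")
    return None


def run_script(documents: Iterable[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Hypothetical driver mimicking the call order of the Remote Python Script Snap."""
    snaplogic_init()
    outputs = [snaplogic_process(doc) for doc in documents]
    snaplogic_final()
    return outputs
```

Running `run_script` on two documents records `["init", "process", "process", "final"]`, which is why expensive setup such as model loading belongs in `snaplogic_init`.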

First, we use SLTool.ensure to automatically install the required libraries. The SLTool class provides several useful methods, such as ensure, execute, encode, and decode. In this case, we need keras and tensorflow. TensorFlow 1.5.0 is pinned because its prebuilt binaries are not compiled with AVX optimizations (later releases are), so it is recommended for older CPUs.

We load the model in snaplogic_init. If the model weights are not found on the machine, Keras downloads them automatically. Each request contains an image serialized as a base64 data URI. We strip the data URI prefix, decode the base64 payload, preprocess the image, and then apply the model to recognize objects in it. The result contains the top five predicted objects along with their confidence levels.
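The decoding and post-processing steps can be tried without Keras. In this sketch, `to_data_uri` is a hypothetical client-side helper, `from_data_uri` reuses the same prefix pattern as the script, `scale_pixel` shows the arithmetic that Keras' Inception-v3 `preprocess_input` applies to each pixel (x / 127.5 - 1, mapping [0, 255] to [-1, 1]), and `top_k` is a plain-Python stand-in for what `decode_predictions(pred, top=5)` does with the class probabilities.

```python
import base64
import heapq
import re
from typing import List, Sequence, Tuple

# Same prefix pattern as the Remote Python Script Snap uses.
DATA_URI_PREFIX = re.compile(r"^data:image/.+;base64,")


def to_data_uri(image_bytes: bytes, fmt: str = "png") -> str:
    """Hypothetical client helper: serialize raw image bytes as a base64 data URI."""
    return "data:image/%s;base64,%s" % (fmt, base64.b64encode(image_bytes).decode("ascii"))


def from_data_uri(image_uri: str) -> bytes:
    """Strip the data URI prefix (if present) and base64-decode the payload."""
    return base64.b64decode(DATA_URI_PREFIX.sub("", image_uri))


def scale_pixel(value: float) -> float:
    """Inception-style preprocessing: map a pixel value in [0, 255] to [-1, 1]."""
    return value / 127.5 - 1.0


def top_k(probs: Sequence[float], labels: Sequence[str], k: int = 5) -> List[Tuple[str, float]]:
    """Return the k most probable (label, probability) pairs, highest first."""
    best = heapq.nlargest(k, range(len(probs)), key=lambda i: probs[i])
    return [(labels[i], float(probs[i])) for i in best]
```

Because the prefix is removed with a regular expression, a bare base64 string without the `data:image/...;base64,` prefix decodes just as well.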

```python
from snaplogic.tool import SLTool as slt

# Ensure libraries.
slt.ensure("keras", "2.2.4")
slt.ensure("tensorflow", "1.5.0")

# Imports
import base64
import re
from io import BytesIO

import numpy
from PIL import Image
from keras.applications.inception_v3 import InceptionV3, preprocess_input, decode_predictions

# Global Variables
model = None

# This function will be executed once before consuming the data.
def snaplogic_init():
    global model
    # Load Inception-v3 with ImageNet weights (downloaded on first use).
    model = InceptionV3(include_top=True, weights='imagenet', input_tensor=None,
                        input_shape=None, pooling=None, classes=1000)
    return None

# This function will be executed on each document from the upstream snap.
def snaplogic_process(row):
    try:
        # Strip the data URI prefix (e.g. "data:image/png;base64,") and decode the image.
        image_uri = row["img"]
        image_base64 = re.sub("^data:image/.+;base64,", "", image_uri)
        image_data = base64.b64decode(image_base64)
        # With include_top=True, Inception-v3 expects a 299x299 RGB input.
        image = Image.open(BytesIO(image_data)).convert('RGB').resize((299, 299))
        image = numpy.array(image, dtype=numpy.float32)
        image = numpy.expand_dims(image, axis=0)  # Add the batch dimension.
        image = preprocess_input(image)
        # Predict and keep the top 5 (class id, label, confidence) entries.
        pred = model.predict(image)
        pred_list = decode_predictions(pred, top=5)[0]
        pred_list = [[x[0], x[1], float(x[2])] for x in pred_list]
        return {"pred": pred_list}
    except Exception:
        return {"pred": "The request is not valid."}

# This function will be executed after consuming all documents from the upstream snap.
def snaplogic_final():
    return None
```

...

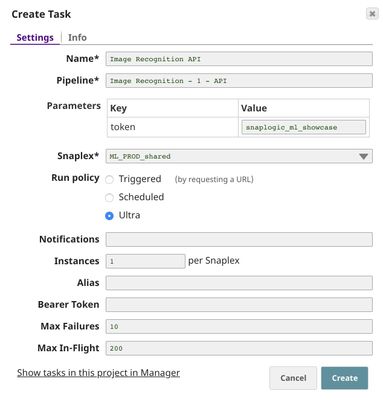

A Triggered Task is good for batch processing because it starts a new pipeline instance for each request. An Ultra Task is better suited to providing a REST API for external applications that require low latency, so the Ultra Task is preferable here: the pipeline stays running, and the model loaded in snaplogic_init does not have to be reloaded for every request. A bearer token is not needed because the Filter Snap performs authentication inside the pipeline.

To get the URL, click Show tasks in this project in Manager in the Create Task window. Click the small triangle next to the task, and then click Details. The task details, including the URL, are displayed.

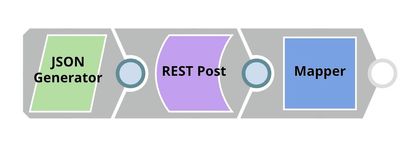

API Testing

In this pipeline, the JSON Generator Snap generates a sample request, and the REST Post Snap sends it to the Ultra Task. The Mapper Snap then extracts the response, which is in $response.entity.

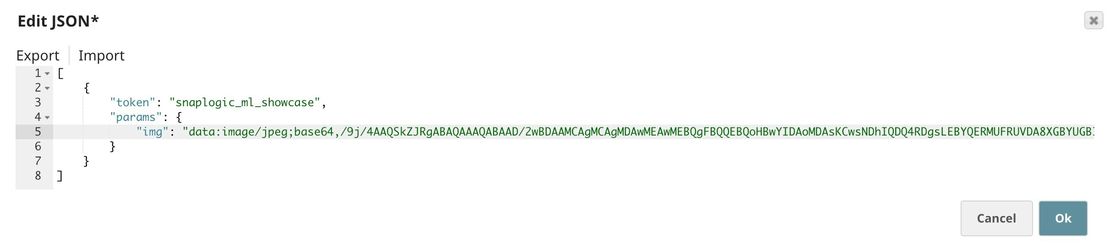

Below is the content of the JSON Generator Snap. It contains $token and $params, which are included in the request body sent by the REST Post Snap.

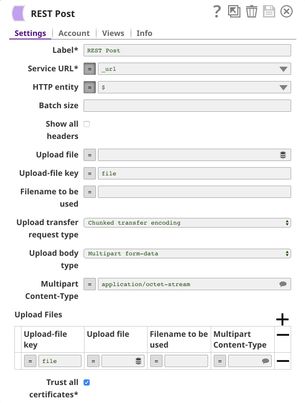
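The same request body can be assembled in code, for example by a client application. This sketch assumes the body has a top-level `token` and a `params` object whose `img` field holds the image as a data URI, matching the field the Python script reads; `make_sample_request` is a hypothetical helper, and the exact layout in the JSON Generator Snap may differ.

```python
import base64
from typing import Any, Dict


def make_sample_request(token: str, image_bytes: bytes, fmt: str = "jpeg") -> Dict[str, Any]:
    """Build a request body with an auth token and a base64 data URI image (assumed layout)."""
    data_uri = "data:image/%s;base64,%s" % (fmt, base64.b64encode(image_bytes).decode("ascii"))
    return {"token": token, "params": {"img": data_uri}}
```

For example, reading a local JPEG with `open(path, "rb").read()` and passing the bytes together with the pipeline's token produces a body equivalent to the sample request.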

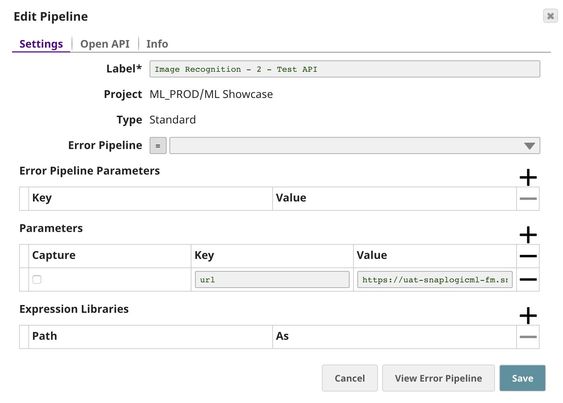

The REST Post Snap reads the URL from the pipeline parameters; your URL can be found on the Manager page. In some cases, you need to select Trust all certificates in the REST Post Snap.
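Outside SnapLogic, the REST Post step can be approximated with the Python standard library. This is a sketch: the JSON `Content-Type` header is an assumption, the URL is whatever your Ultra Task shows in Manager, and `trust_all_certificates=True` only imitates the Snap option of that name by disabling TLS verification, so it should be limited to testing.

```python
import json
import ssl
import urllib.request
from typing import Any, Dict, Optional


def build_post(url: str, body: Dict[str, Any]) -> urllib.request.Request:
    """Build a JSON POST request similar to what the REST Post Snap sends."""
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, trust_all_certificates: bool = False,
         timeout: float = 30.0) -> Any:
    """Send the request and return the parsed JSON response body."""
    context: Optional[ssl.SSLContext] = None
    if trust_all_certificates:
        # Disable hostname and certificate checks (testing only).
        context = ssl.create_default_context()
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, timeout=timeout, context=context) as response:
        return json.loads(response.read().decode("utf-8"))
```

Usage: `send(build_post(task_url, body))`, where `task_url` is the Ultra Task URL and `body` holds the token and parameters.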

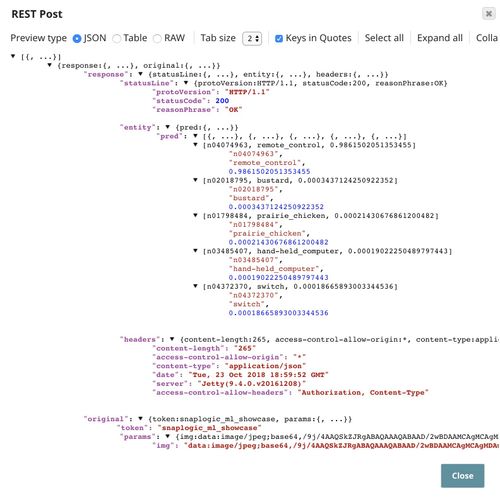

The output of the REST Post Snap is shown below. The last Mapper Snap extracts $response.entity from the response. In this case, the model predicts that the object in the image is a remote control, with a confidence level of 0.986.
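A client can read the best guess from the extracted entity in the same way. This sketch assumes the entity is the response body `{"pred": [[class_id, label, confidence], ...]}` produced by the script above, with entries sorted from most to least confident as `decode_predictions` returns them; when the request is invalid, `pred` is an error string and the hypothetical helper returns None.

```python
from typing import Any, Dict, Optional, Tuple


def top_prediction(entity: Dict[str, Any]) -> Optional[Tuple[str, float]]:
    """Return (label, confidence) of the best prediction, or None for an error response."""
    pred = entity.get("pred")
    if not isinstance(pred, list) or not pred:
        return None  # e.g. "The request is not valid."
    _class_id, label, confidence = pred[0]
    return label, float(confidence)
```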

Downloads
