Adobe Cloud Platform Write


ACP Snap Deprecation Notice

Because Adobe has deprecated Adobe Cloud Platform (ACP), we are replacing the ACP Snap Pack with the Adobe Experience Platform (AEP) Snap Pack starting with the 4.22 GA release. Accordingly, the ACP Write Snap is deprecated as of the 4.22 release. For more information, see the Adobe Experience Platform Write Snap article.

Overview

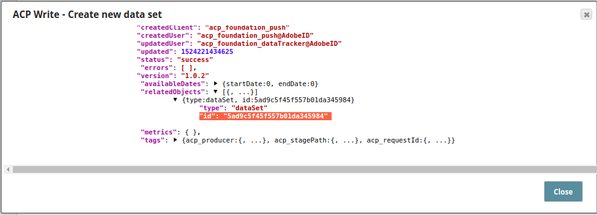

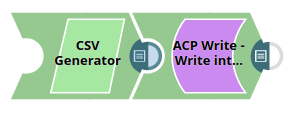

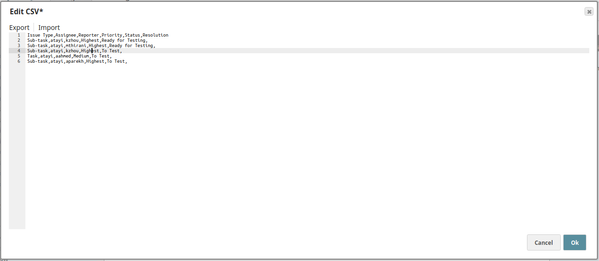

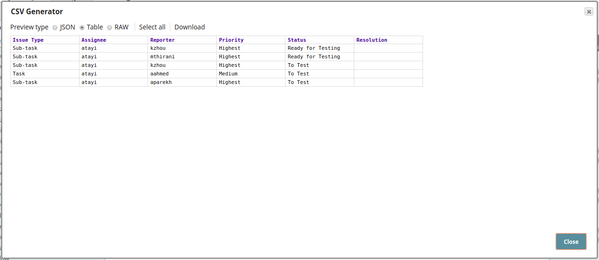

You can use the Adobe Experience Platform Write Snap to create a data set in an Adobe Experience Platform instance, based on a selected schema and data set name, or to write incoming documents into an existing data set in Adobe Experience Platform. The Dataset name and Dataset ID properties are suggestible, which makes it easier to select the data set into which incoming documents are written. The Snap also supports batching, which lets you write incoming documents into a data set in batches of a configurable size. If a batch write fails, the Snap retries writing that batch a configurable number of times.
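Because the Batch size (MB) setting bounds each batch by size rather than by document count, the grouping step can be pictured with a short Python sketch. It is a minimal illustration under stated assumptions, not the Snap's implementation: the function name `split_into_batches` is hypothetical, and document size is assumed to be the length of the UTF-8 encoded JSON form of each document.

```python
import json
from typing import Any, Dict, Iterable, Iterator, List

Document = Dict[str, Any]


def split_into_batches(docs: Iterable[Document], batch_size_mb: float) -> Iterator[List[Document]]:
    """Group documents into batches whose serialized size stays within batch_size_mb.

    Hypothetical illustration only: size is measured as UTF-8 encoded JSON bytes.
    A single document larger than the limit is emitted as a batch of its own.
    """
    limit_bytes = int(batch_size_mb * 1024 * 1024)
    batch: List[Document] = []
    batch_bytes = 0
    for doc in docs:
        doc_bytes = len(json.dumps(doc).encode("utf-8"))
        # Close the current batch if adding this document would exceed the limit.
        if batch and batch_bytes + doc_bytes > limit_bytes:
            yield batch
            batch, batch_bytes = [], 0
        batch.append(doc)
        batch_bytes += doc_bytes
    # Flush whatever remains once the input is exhausted.
    if batch:
        yield batch
```

With the default of 10 MB, a stream of small documents would come out as a sequence of lists, each holding as many documents as fit under 10 MB. Measuring size as serialized JSON is only an approximation: the size actually sent depends on the selected Dataset schema (for example, CSV or Parquet), which this sketch ignores.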

Prerequisites

Access to Adobe Experience Platform.

Configuring Accounts

This Snap uses account references created on the Accounts page of SnapLogic Manager to handle access to this endpoint. See Configuring Adobe Experience Platform Accounts for information on setting up this type of account.

Configuring Views

| View | Description |
|---|---|
| Input | This Snap has exactly one document input view. |
| Output | This Snap has at most one document output view. |
| Error | This Snap has at most one document error view and produces zero or more documents in the view. |

Limitations and Known Issues

As of its initial release, the Snap supports only Profile XSD, CSV, and Parquet formats.

Modes

- Ultra Pipelines: Works in Ultra Pipelines.

Snap Settings

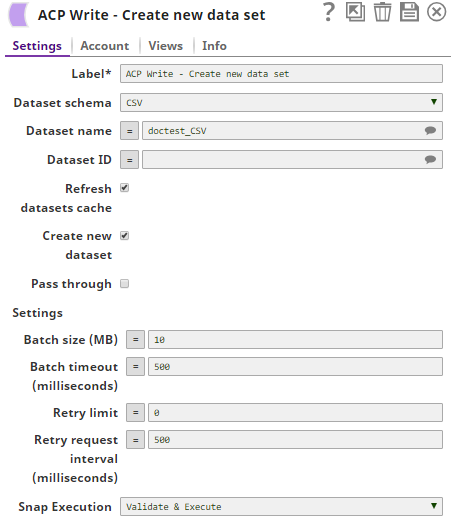

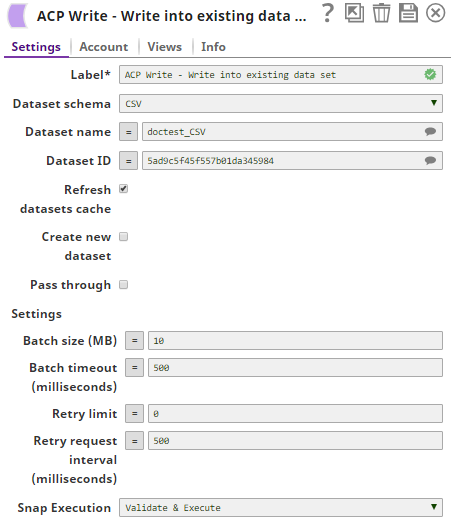

| Field | Description |
|---|---|
| Label | Required. The name for the Snap. Modify this to be more specific, especially if there is more than one of the same Snap in the Pipeline. |
| Dataset schema | A drop-down list for selecting the schema of the data set to be written. The suggestions in Dataset name and Dataset ID are based on this selection. Available options are Profile XSD, CSV, and Parquet. Default value: Parquet. Recommendations for writing or creating data sets in different schema types: data sets can be written in CSV and Parquet schema using incoming documents from upstream Snaps. Profile XSD Schema - Points to remember |
| Dataset name | The name of the data set to be written. This is a suggestible field that lists the names of all data sets within the Account, based on the selection in the Dataset schema property. Example: doctest. Default value: [None]. This property is expression-enabled. For more information on the expression language, see Understanding Expressions in SnapLogic and Using Expressions. For information on Pipeline Parameters, see Pipeline Properties. |
| Dataset ID | Required when writing to an existing data set. The ID of the data set into which the incoming data is written. This is a suggestible field that lists the IDs of all data sets within the Account, based on the Dataset schema and Dataset name properties. Select the applicable data set ID. Leave this property blank if a new data set is to be created, and select the Create new dataset property. Example: 5acdb87f9iqdrac201da2e0e9. Default value: [None]. This property is expression-enabled. For more information on the expression language, see Understanding Expressions in SnapLogic and Using Expressions. For information on Pipeline Parameters, see Pipeline Properties. |
| Refresh datasets cache | If selected, the Snap refreshes its cache of data sets and shows updated results. This is useful when data sets are changed outside of SnapLogic, for example, when a new data set is created or an existing data set is deleted. Default value: Not selected. This property is disabled by default to avoid unnecessary API calls. Data sets added after the Snap's initial configuration do not appear in the property suggestions unless this property is selected. |
| Create new dataset | Select this property to create a new data set. Default value: Not selected. |
| Pass through | If selected, the input document is passed through to the output view under the key 'original'. Default value: Not selected. |
| Settings | Batching-related settings (Batch size, Retry limit, and so on). |
| Batch size (MB) | The size limit per batch, in megabytes (MB). Default value: 10. This property is expression-enabled. For more information on the expression language, see Understanding Expressions in SnapLogic and Using Expressions. For information on Pipeline Parameters, see Pipeline Properties. |
| Batch timeout (milliseconds) | The time limit, in milliseconds, within which one batch of data must be written. If a batch exceeds this limit, the Snap proceeds to the next batch and routes the records that could not be written to the error view, if the Snap's error view is configured. Default value: 500. This property is expression-enabled. For more information on the expression language, see Understanding Expressions in SnapLogic and Using Expressions. For information on Pipeline Parameters, see Pipeline Properties. |
| Retry limit | The number of times the Snap retries writing a batch that failed. Default value: 0. This property is expression-enabled. For more information on the expression language, see Understanding Expressions in SnapLogic and Using Expressions. For information on Pipeline Parameters, see Pipeline Properties. |
| Retry request interval (milliseconds) | The time interval, in milliseconds, between successive retry attempts. Default value: 500. This property is expression-enabled. For more information on the expression language, see Understanding Expressions in SnapLogic and Using Expressions. For information on Pipeline Parameters, see Pipeline Properties. |
| Snap execution | Select one of the three modes in which the Snap executes. Available options are: Validate & Execute (performs limited execution of the Snap and generates a data preview during Pipeline validation, then performs full execution during Pipeline runtime), Execute only (performs full execution of the Snap during Pipeline execution without generating preview data), and Disabled (disables the Snap and all Snaps downstream from it). |
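The interplay of Retry limit and Retry request interval can be sketched in Python. This is a minimal illustration under stated assumptions, not the Snap's implementation: `write_with_retries` and the `write_batch` callable are hypothetical, a failed write is assumed to surface as an exception, and Batch timeout is not modeled.

```python
import time
from typing import Any, Callable, Dict, List, Tuple

Document = Dict[str, Any]


def write_with_retries(
    batch: List[Document],
    write_batch: Callable[[List[Document]], None],
    retry_limit: int = 0,
    retry_interval_ms: int = 500,
) -> Tuple[bool, int]:
    """Make one write attempt plus up to retry_limit retries.

    Returns (succeeded, attempts_made). write_batch is a hypothetical callable
    that raises an exception when the write fails.
    """
    attempts = 0
    while True:
        attempts += 1
        try:
            write_batch(batch)
            return True, attempts
        except Exception:
            # Give up once the initial attempt and all allowed retries are used.
            if attempts > retry_limit:
                return False, attempts
            # Wait between successive attempts (Retry request interval).
            time.sleep(retry_interval_ms / 1000.0)
```

With the default Retry limit of 0, a failed batch is attempted exactly once. A caller would then route the documents of any batch that returns `False` to the error view, if one is configured.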

Troubleshooting

Snap throws an error when a Pipeline is executed in a Cloudplex

To execute Pipelines in a Cloudplex, you must set ALLOW_CLOUDPLEX_PROCESS_CREATION to true in the SnapLogicSecurityManager.java file. Contact SnapLogic Customer Support for help with this setting.

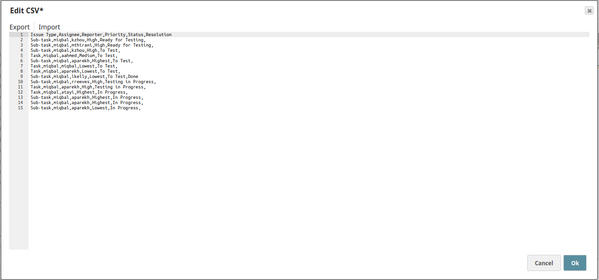

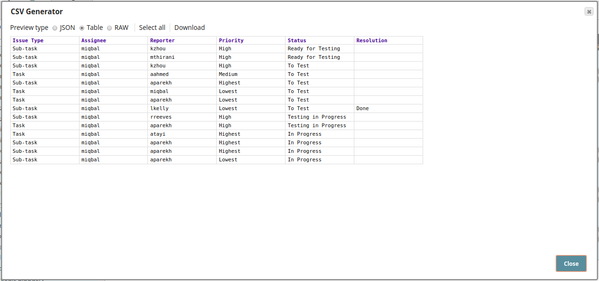

Examples

Downloads

Snap Pack History


© 2017-2024 SnapLogic, Inc.