Match


Overview

This Snap performs record linkage: it automatically identifies documents from different data sources (input views) that may represent the same entity, even when the datasets do not share a common key field.

The Match Snap is part of our ML Data Preparation Snap Pack.

This Snap uses Duke, which is a library for performing record linkage and deduplication, implemented on top of Apache Lucene.

Input and Output

Expected Input

- First Input: The first dataset that must be matched with the second dataset.

- Second Input: The second dataset that must be matched with the first dataset.

Expected Output

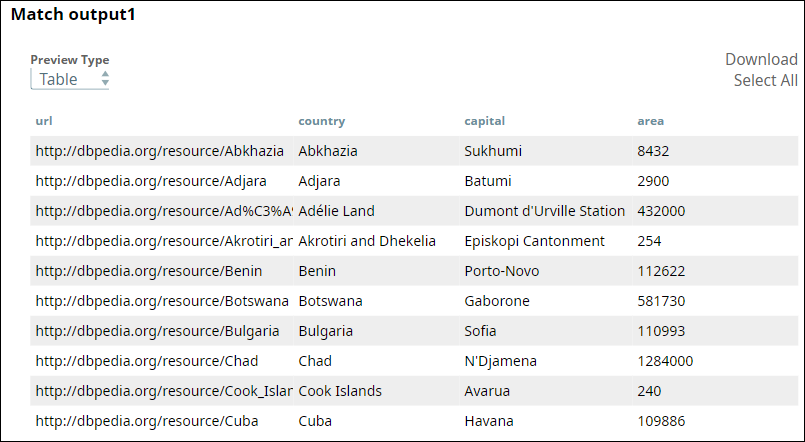

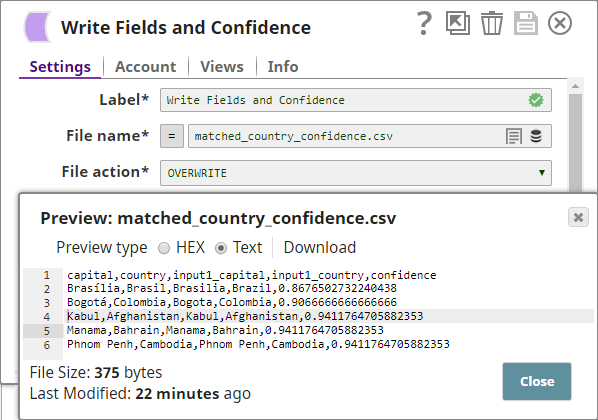

- First Output: The matched documents and, optionally, the confidence level associated with the matching.

- Second Output: Optional. Unmatched documents from the first dataset.

- Third Output: Optional. Unmatched documents from the second dataset.
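The way documents are distributed across the three output views can be sketched as follows. This is a minimal illustration, not the Snap's implementation: the `route` function, the shape of the matched document (`first`, `second`, `confidence`), and the use of Python's `difflib.SequenceMatcher` as the similarity measure are all assumptions made for the example.

```python
from difflib import SequenceMatcher


def route(first, second, left_field, right_field, threshold=0.8, confidence=True):
    """Split two datasets into matched, unmatched-first, and unmatched-second.

    Hypothetical helper: the output document shape is an assumption.
    """
    matched, unmatched_first, used_second = [], [], set()
    for left in first:
        best_idx, best_score = None, 0.0
        for idx, right in enumerate(second):
            # Stand-in similarity in the range 0..1 (not the Snap's comparator).
            score = SequenceMatcher(
                None, left[left_field].lower(), right[right_field].lower()
            ).ratio()
            if score > best_score:
                best_idx, best_score = idx, score
        if best_idx is not None and best_score >= threshold:
            doc = {"first": left, "second": second[best_idx]}
            if confidence:
                doc["confidence"] = round(best_score, 2)
            matched.append(doc)
            used_second.add(best_idx)
        else:
            unmatched_first.append(left)
    unmatched_second = [r for i, r in enumerate(second) if i not in used_second]
    return matched, unmatched_first, unmatched_second


first = [{"name": "Germany"}, {"name": "Atlantis"}]
second = [{"country": "germany"}, {"country": "Japan"}]
matched, only_first, only_second = route(first, second, "name", "country")
print(matched)      # first output view
print(only_first)   # second output view
print(only_second)  # third output view
```

Here the pair with the highest score at or above the threshold goes to the first output, and everything left over in either dataset goes to the second or third output.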

Expected Upstream Snaps

- First Input: A Snap that offers documents. For example, Mapper, MySQL - Select, and JSON Parser.

- Second Input: A Snap that offers documents. For example, Mapper, MySQL - Select, and JSON Parser.

Expected Downstream Snaps

- Snaps that accept documents. For example, Mapper, JSON Formatter, and CSV Formatter.

Prerequisites

None.

Configuring Accounts

Accounts are not used with this Snap.

Configuring Views

| View | Description |
|---|---|
| Input | This Snap has exactly two document input views. |
| Output | This Snap has at most three document output views. |
| Error | This Snap has at most one document error view. |

Troubleshooting

None.

Limitations and Known Issues

None.

Modes

- Ultra Pipelines: Does not work in Ultra Pipelines.

Snap Settings

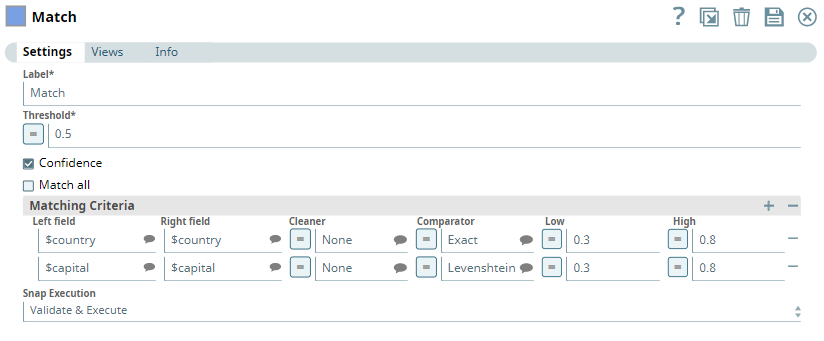

| Label | Required. The name for the Snap. Modify this to be more specific, especially if there are more than one of the same Snap in the pipeline. |

|---|---|

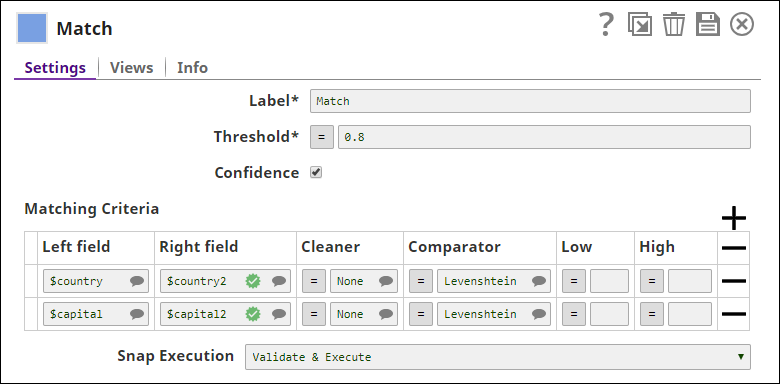

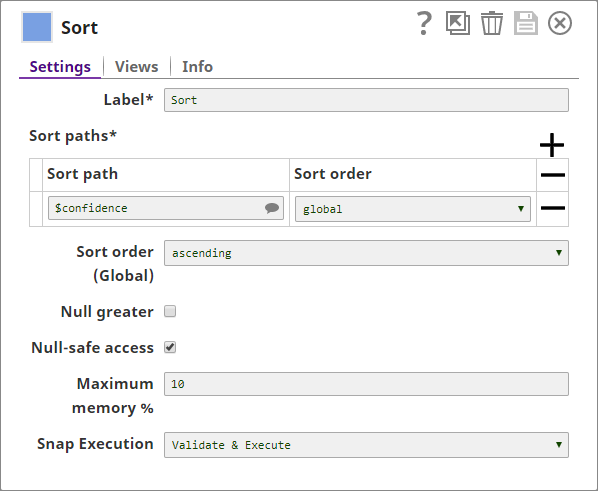

| Threshold | Required. The minimum confidence required for documents to be considered matched. Minimum Value: 0 Maximum Value: 1 Default Value: 0.8 |

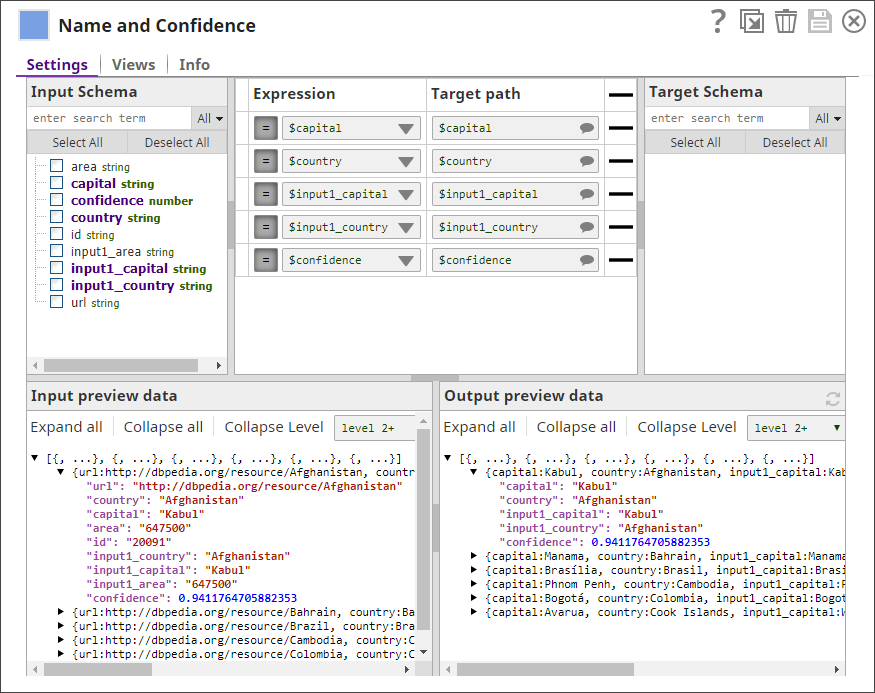

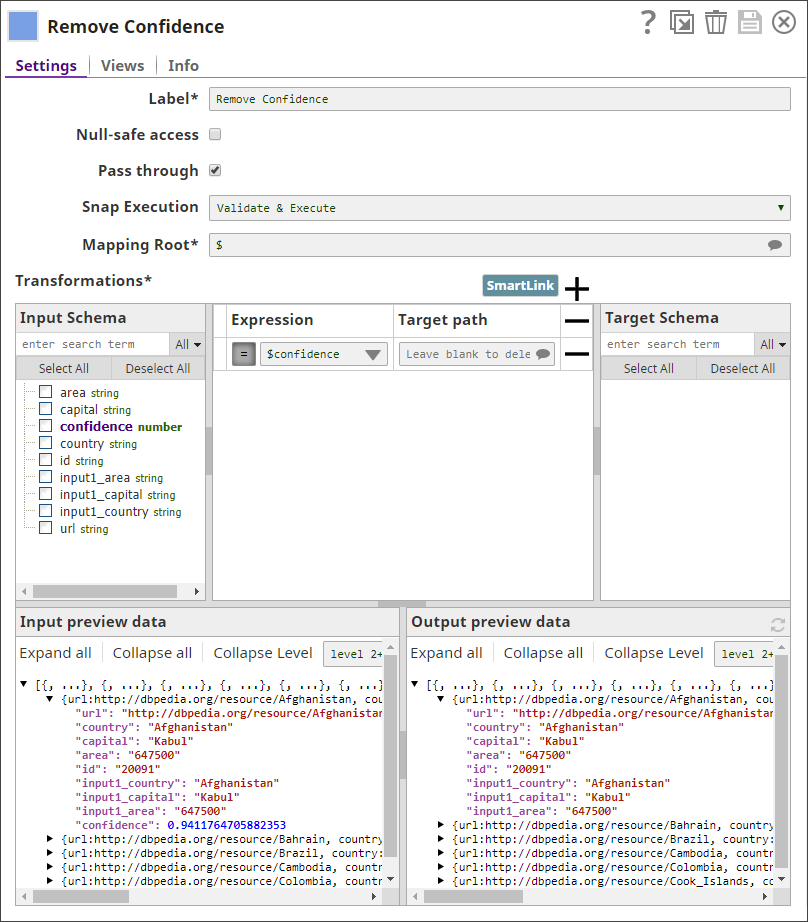

| Confidence | Select this check box to include each match's confidence levels in the output. Default Value: Deselected |

| Match all | Select this check box to match one record from the first input with multiple records in the second input. Else, the Snap matches the first record of the second input with the first record of the first input. Default Value: Deselected |

| Matching Criteria | Enables you to specify the settings that you want to use to perform the matching between the two input datasets. |

| Left Field | The field in the first dataset that you want to use for matching. This property is a JSONPath. Example: $name Default Value: [None] |

| Right Field | The field in the second dataset that you want to use for matching. This property is a JSONPath. Example: $country Default Value: [None] |

| Cleaner | Select the cleaner that you want to use on the selected fields. A cleaner makes comparison easier by removing variations from data, which are not likely to indicate genuine differences. For example, a cleaner might strip everything except digits from a ZIP code. Or, it might normalize and lowercase text. Depending on the nature of the data in the identified input fields, you can select the kind of cleaner you want to use from the options available:

|

| Comparator | A comparator compares two values and produces a similarity indicator, which is represented by a number that can range from 0 (completely different) to 1 (exactly equal). Choose the comparator that you want to use on the selected fields, from the drop-down list:

Default Value: Levenshtein |

| Low | Enter a decimal value representing the level of probability of the records to be matched if the specified fields are completely unlike. Example: 0.1 Default Value: [None] If this value is left empty, a value of 0.3 is applied automatically. |

| High | Enter a decimal value representing the level of probability of the records to be matched if the specified fields are exact match. Example: 0.8 Default Value: [None] If this value is left empty, a value of 0.95 is applied automatically. |

| Snap execution | Select one of the three modes in which the Snap executes. Available options are:

|
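To make the interplay of Cleaner, Comparator, Low, High, and Threshold more concrete, here is a minimal sketch. It is not the Snap's code. It assumes a Duke-style scheme in which each field's similarity is mapped to a probability between Low and High and the per-field probabilities are combined with a naive Bayes update; the exact mapping formula, the normalization of the Levenshtein distance by the longer string, and all function names are assumptions made for illustration.

```python
import re


def digits_only(value: str) -> str:
    """Cleaner: strip everything except digits (for example, ZIP codes)."""
    return re.sub(r"\D", "", value)


def lower_normalize(value: str) -> str:
    """Cleaner: lowercase the text and collapse repeated whitespace."""
    return " ".join(value.lower().split())


def levenshtein(a: str, b: str) -> int:
    """Edit distance computed with the classic dynamic-programming rows."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def similarity(a: str, b: str) -> float:
    """Comparator: 0 (completely different) to 1 (exactly equal)."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - levenshtein(a, b) / longest


def field_probability(sim: float, low: float = 0.3, high: float = 0.95) -> float:
    """Assumed mapping from similarity to match probability for one field."""
    return (high - 0.5) * sim * sim + 0.5 if sim >= 0.5 else low


def combine(p1: float, p2: float) -> float:
    """Naive Bayes combination of two independent probabilities."""
    num = p1 * p2
    return num / (num + (1.0 - p1) * (1.0 - p2))


def match_confidence(left: dict, right: dict, criteria) -> float:
    confidence = 0.5  # neutral starting point
    for left_field, right_field, cleaner, low, high in criteria:
        sim = similarity(cleaner(left[left_field]), cleaner(right[right_field]))
        confidence = combine(confidence, field_probability(sim, low, high))
    return confidence


# Hypothetical matching criteria: (Left Field, Right Field, Cleaner, Low, High)
criteria = [
    ("name", "country", lower_normalize, 0.3, 0.95),
    ("capital", "city", lower_normalize, 0.3, 0.95),
]
left = {"name": "United  States", "capital": "Washington"}
right = {"country": "united states", "city": "Washington D.C."}
confidence = match_confidence(left, right, criteria)
is_match = confidence >= 0.8  # Threshold
print(round(confidence, 3), is_match)
```

Under these assumptions, a field that matches exactly pushes the confidence toward High, a field that is completely different pulls it toward Low, and the pair counts as matched only when the combined confidence reaches the Threshold.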

Temporary Files

During execution, data processing on Snaplex nodes occurs principally in memory, as streaming, and is unencrypted. When a dataset exceeds the available compute memory, the Snap writes Pipeline data to local storage, unencrypted, to optimize performance. These temporary files are deleted when the Snap or Pipeline execution completes. You can configure the location of the temporary data in the Global properties table of the Snaplex's node properties, which can also help avoid Pipeline errors caused by insufficient disk space. For more information, see Temporary Folder in Configuration Options.

Example

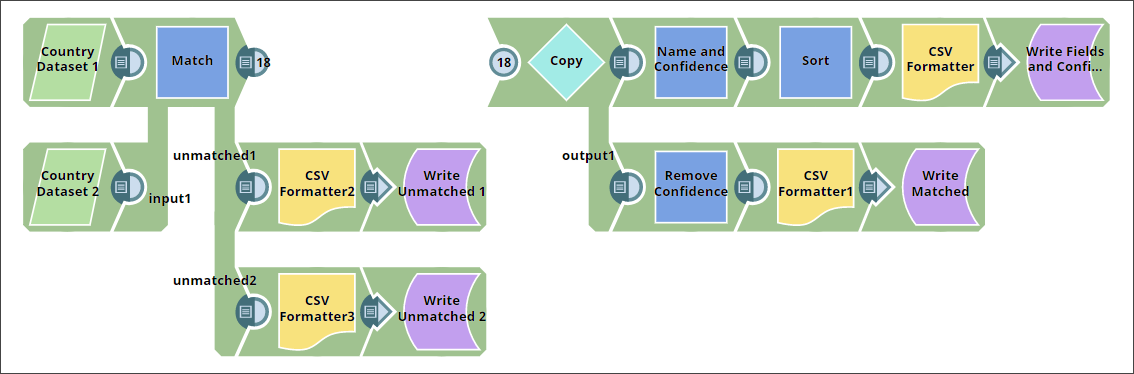

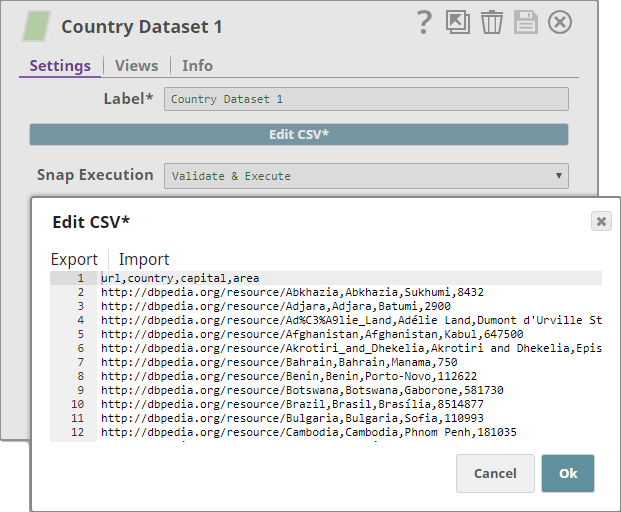

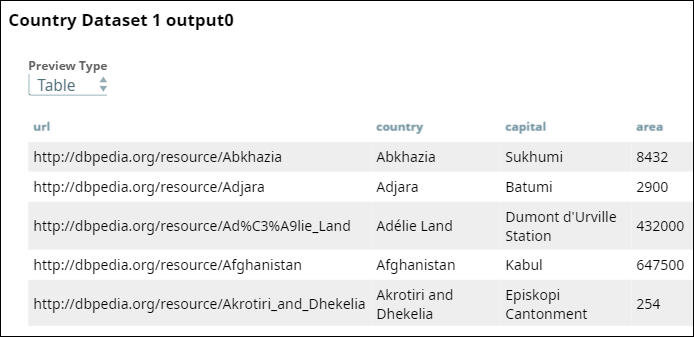

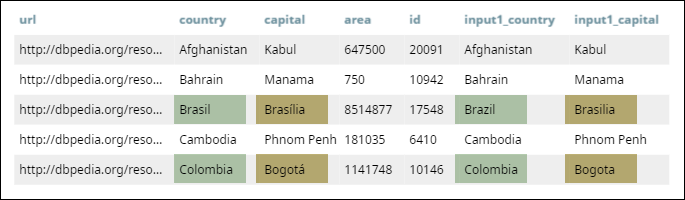

Matching Countries Based on their Names and Capitals

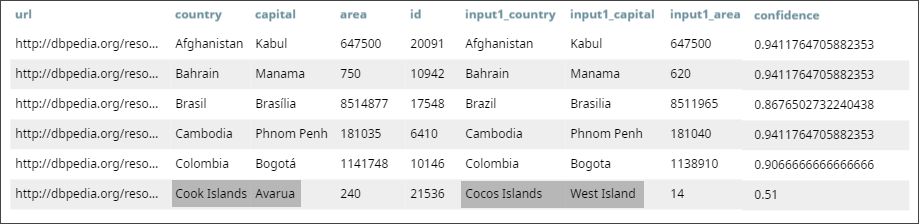

One of the challenges of data integration is consolidating datasets that represent the same entities but organize or label the data differently. For example, if you buy the same data from multiple brokers, you may find that the datasets are organized or labeled differently. In data lake scenarios, consolidating data manually is nearly impossible, given the thousands of datasets involved and the variety of formats and labeling patterns used across databases. The Match Snap enables you to identify such matched records automatically.

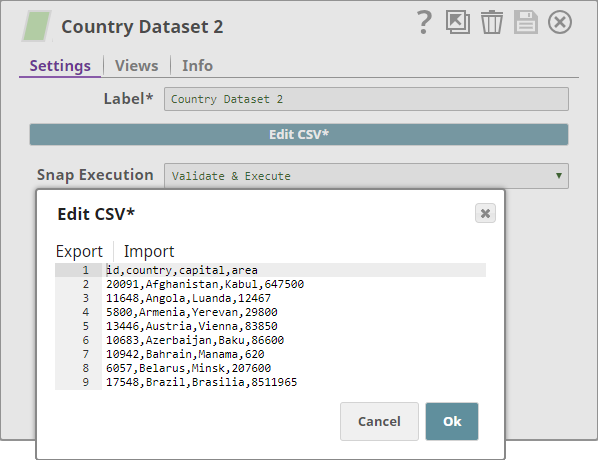

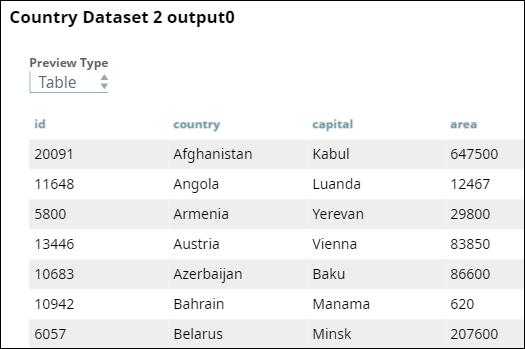

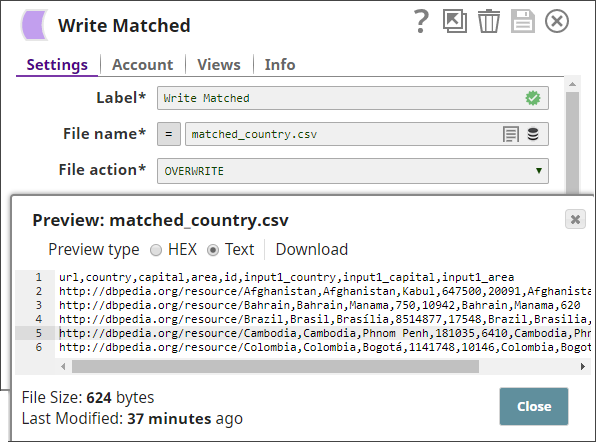

In this example, you take two datasets as input and match the country records in them using the Match Snap. You then adjust the similarity threshold in the Match Snap to find the best trade-off: as many matches as possible while keeping the results reliable.
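The effect of adjusting the threshold can be explored with a small sketch like the one below. The country pairs are made-up sample data, `count_matches` is a hypothetical helper, and `difflib.SequenceMatcher` stands in for the Snap's comparator, so the numbers it prints will not correspond to the Snap's confidence values.

```python
from difflib import SequenceMatcher

# Hypothetical candidate pairs: (value from first dataset, value from second dataset)
pairs = [
    ("United States", "United States of America"),
    ("Germany", "Germany"),
    ("Brazil", "Brasil"),
    ("Austria", "Australia"),  # different countries with similar names
]


def score(a: str, b: str) -> float:
    """Stand-in similarity in the range 0..1."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def count_matches(candidates, threshold: float) -> int:
    """Number of pairs whose similarity reaches the threshold."""
    return sum(1 for a, b in candidates if score(a, b) >= threshold)


for threshold in (0.6, 0.7, 0.8, 0.9, 1.0):
    print(threshold, count_matches(pairs, threshold))
```

Lower thresholds accept more pairs, including look-alikes such as Austria and Australia that are not the same entity, while higher thresholds drop genuine variants such as Brazil and Brasil.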

Download this Pipeline.

Downloads

Important steps to successfully reuse Pipelines

- Download and import the Pipeline into SnapLogic.

- Configure Snap accounts as applicable.

- Provide Pipeline parameters as applicable.



© 2017-2024 SnapLogic, Inc.