
Snap type: Write

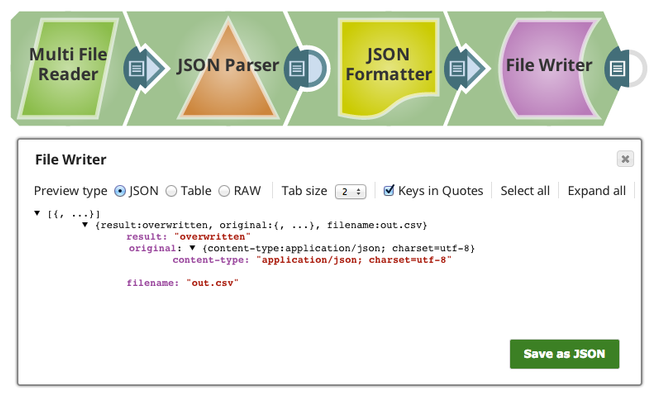

Description: This Snap reads a binary data stream from the input view and writes it to a specified file destination. Possible file destinations include SLDB, HTTP, S3, FTP, SFTP, FTPS, and HDFS. If file permissions are provided, the Snap sets those permissions on the file.

The value of the "result" field in the output view can be "overwritten", "created", "ignored", or "appended". The value "ignored" indicates that the Snap did not overwrite the existing file because the File action property is set to "IGNORE".

Prerequisites:

Support and limitations:

Known Issues: This Snap does not create an output file when its input comes from an SAS Generator Snap configured with only the DELETE SAS permission. The issue does not occur when the target file already exists.

Account: This Snap uses account references created on the Accounts page of SnapLogic Manager to handle access to this endpoint. This Snap supports several account types, as listed in the table below, or no account. See Accounts for information on setting up accounts that work with this Snap.

Account types supported by each protocol are as follows:

Required settings for account types are as follows:

| Views: |

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

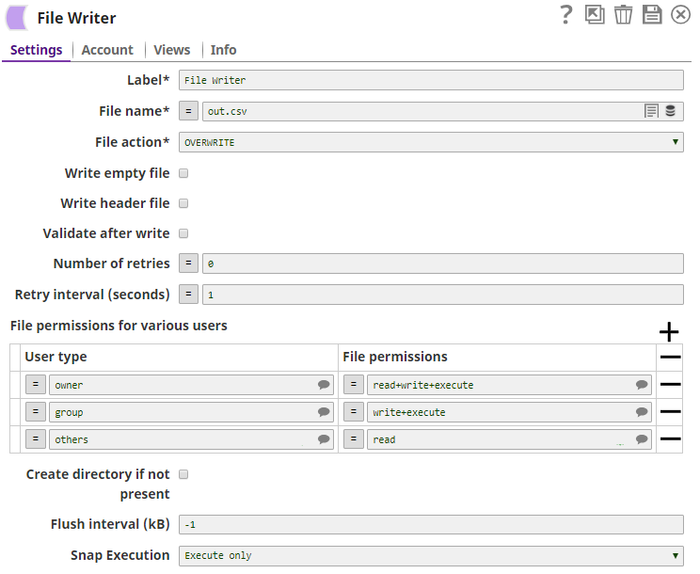

Settings | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Label |

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||||

File name | Required. The URL of the destination file that receives the binary data read from the input view. The URL may start with one of the following protocols:

For SLDB files, if you enter:

The Snap can write a file to its own project directory or to the shared project, but not to another project's directory. For S3, your account must have full access.

Fields in the binary header can also be accessed when computing a file name. For example, if a File Reader Snap is connected directly to a File Writer Snap, you can access the "content-location" header field to get the original path to the file. You can then compute a new file name based on the old one, for instance, to make a backup file:

For the http: and https: protocols, the Snap uses only the HTTP PUT method.

This property should have the syntax:

Note that "://" separates the file protocol from the rest of the URL, and that the host name and port number go between "://" and the next "/". If the port number is omitted, the default port for the protocol is used. The host name and port number are omitted for the sldb and s3 protocols.
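The URL rules above can be illustrated with a small Python sketch that splits a destination URL into protocol, host, port, and path. It is a sketch under stated assumptions and does not reproduce the Snap's parser. The `DEFAULT_PORTS` table lists well-known ports and is an assumption, because the Snap's own default-port table is not documented here. `describe_file_url` is a hypothetical helper.

```python
from urllib.parse import urlsplit

# Assumption: well-known default ports. The Snap's actual defaults are not
# documented on this page, so these values are illustrative only.
DEFAULT_PORTS = {"http": 80, "https": 443, "ftp": 21, "sftp": 22}

# Per the text above, sldb and s3 URLs carry no host name or port.
NO_HOST_SCHEMES = {"sldb", "s3"}


def describe_file_url(url: str) -> dict:
    """Hypothetical helper: split a destination URL into its parts."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    if scheme in NO_HOST_SCHEMES:
        # Everything after "://" is the location; there is no host:port part.
        return {"protocol": scheme, "host": None, "port": None,
                "path": url.split("://", 1)[1]}
    # parts.port is None when the URL omits the port; fall back to a default.
    port = parts.port if parts.port is not None else DEFAULT_PORTS.get(scheme)
    return {"protocol": scheme, "host": parts.hostname, "port": port,
            "path": parts.path}
```

For example, `describe_file_url("sftp://ftp.snaplogic.com/home/mrtest")` yields host `ftp.snaplogic.com`, port 22 (from the assumed default), and path `/home/mrtest`.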

The file:/// protocol is supported only on a Groundplex. In Cloudplex configurations, use sldb or another file protocol. With the file:/// protocol, files are accessed with the permissions of the user under whose name the Snaplex runs (by default, Snapuser). Use file system access with caution: it is the customer's responsibility to ensure that the file system is cleaned up after use.

For HDFS, if you want to be able to suggest information, use the HDFS Writer Snap.

For webhdfs, make sure webhdfs is enabled on your HDFS server and use the file protocol "webhdfs://" instead of "hdfs://". Everything else is the same as for hdfs.

Examples:

Default value: [None]

File action | Required. The action to perform if the file already exists. The available options include:

Default value: Overwrite
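The relationship between File action and the "result" values listed in the Description can be summarized as a small decision function. This is a hypothetical sketch. It assumes the options are Overwrite, Append, and Ignore, because the option list is not reproduced here. It also assumes a target that does not yet exist is reported as "created" whatever the action.

```python
def result_status(file_exists: bool, file_action: str) -> str:
    """Hypothetical mapping from File action to the output "result" value."""
    action = file_action.upper()
    if not file_exists:
        # Assumption: a missing target is simply created, whatever the action.
        return "created"
    if action == "OVERWRITE":
        return "overwritten"
    if action == "APPEND":
        return "appended"
    if action == "IGNORE":
        # The existing file is left untouched.
        return "ignored"
    # Assumption: any other option is outside the scope of this sketch.
    raise ValueError(f"unsupported file action: {file_action}")
```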

Write empty file | If selected, an empty file is written when the incoming binary document has empty data. If there is no incoming document at the input view of the Snap, no file is written, regardless of the value of this property. Default value: Not selected
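The three cases described for Write empty file can be expressed as one decision. The sketch below is hypothetical. It models "no incoming document" as `None` and "empty data" as an empty `bytes` value, and `should_write_file` is an illustrative name rather than a SnapLogic API.

```python
from typing import Optional


def should_write_file(document: Optional[bytes], write_empty_file: bool) -> bool:
    """Hypothetical check: does the Snap write a file for this input?"""
    if document is None:
        # No incoming document: nothing is written, regardless of the setting.
        return False
    if len(document) == 0:
        # Empty data: write an empty file only when the property is selected.
        return write_empty_file
    return True
```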

Write header file | The binary data stream in the input view may contain header information about the binary data, in the form of a document with key-value pair map data. If this property is selected, the Snap writes a header file whose name is generated by appending ".header" to the value of the File name property. The same header information is also included in the output view data, as shown in the "Expected output" section above, under the key "original". If the header has no keys other than Content-Type or Content-Encoding, the .header file is not written. Default value: Not selected
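The naming rule and the Content-Type/Content-Encoding exception can be sketched as follows. This is an illustration under an assumption: header keys are assumed to be compared case-insensitively, which the text above does not state. `header_file_name` is a hypothetical helper.

```python
from typing import Mapping, Optional

# Keys that, on their own, do not cause a .header file to be written.
# Assumption: the comparison is case-insensitive.
CONTENT_ONLY_KEYS = {"content-type", "content-encoding"}


def header_file_name(file_name: str, header: Mapping[str, object]) -> Optional[str]:
    """Return the .header file name, or None when no header file is written."""
    extra_keys = {key.lower() for key in header} - CONTENT_ONLY_KEYS
    if not extra_keys:
        return None
    # The header file name is the File name value with ".header" appended.
    return file_name + ".header"
```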

Validate after write | If selected, the Snap checks whether the file exists after the file write completes. The validation may add a few seconds to the execution. Default value: Not selected

Number of retries | Specifies the maximum number of retry attempts when the Snap fails to write. If the value is greater than 0, the Snap first stores the input data in a temporary local file before writing to the target file.

Example: 3 Minimum value: 0 Default value: 0

Retry interval (seconds) | Specifies the minimum number of seconds the Snap waits before attempting recovery from a network failure. Example: 3 Minimum value: 1 Default value: 1
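Number of retries and Retry interval work together. This minimal Python sketch shows the pattern they describe. When retries are enabled, the input is buffered in a local temporary file so each attempt can replay it from the start. Failed attempts are separated by at least the retry interval. `write_target` is a hypothetical stand-in for the protocol-specific upload. Treating any `OSError` (which includes `ConnectionError`) as retryable is an assumption.

```python
import shutil
import tempfile
import time
from typing import BinaryIO, Callable


def write_with_retries(
    source: BinaryIO,
    write_target: Callable[[BinaryIO], None],  # hypothetical upload callable
    number_of_retries: int = 0,
    retry_interval: float = 1,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Write source via write_target, retrying on OSError; return attempts used."""
    if number_of_retries <= 0:
        # No retries: stream the input straight to the target.
        write_target(source)
        return 1
    # With retries enabled, buffer the input locally first, because a stream
    # that has been partly consumed cannot be replayed.
    with tempfile.TemporaryFile() as buffered:
        shutil.copyfileobj(source, buffered)
        for attempt in range(1, number_of_retries + 2):
            buffered.seek(0)  # every attempt starts from the first byte
            try:
                write_target(buffered)
                return attempt
            except OSError:
                if attempt > number_of_retries:
                    raise  # retries exhausted: surface the last error
                # Wait at least retry_interval seconds before trying again.
                sleep(retry_interval)
    raise AssertionError("unreachable")
```

The `sleep` parameter is injectable so the waiting behavior can be tested without real delays.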

File permissions for various users | File permission sets to be assigned to the file.

User type | The user type to which the permissions are assigned. This field is case-insensitive. Suggestible options are:

File permissions | Specify any combination of {read, write, execute}, separated by a plus sign (+). This field is case-insensitive. Suggestible options are:
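The "read+write+execute" notation maps naturally onto POSIX permission bits. This hypothetical sketch combines (User type, File permissions) pairs into a single mode value. It assumes the user types are named owner, group, and others, because the suggestible options are not listed here. Whether the Snap translates entries to POSIX modes this way is also an assumption.

```python
# Hypothetical user-type names; the Snap's suggestible options are not listed here.
USER_SHIFTS = {"owner": 6, "group": 3, "others": 0}
PERMISSION_BITS = {"read": 4, "write": 2, "execute": 1}


def permissions_to_mode(entries):
    """Convert (user type, "read+write"-style) pairs into a POSIX mode integer."""
    mode = 0
    for user_type, permissions in entries:
        shift = USER_SHIFTS[user_type.strip().lower()]  # case-insensitive
        bits = 0
        for name in permissions.split("+"):
            bits |= PERMISSION_BITS[name.strip().lower()]  # case-insensitive
        mode |= bits << shift
    return mode
```

For example, owner `read+write+execute` combined with group `read` gives mode `0o740`.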


Create directory if not present | When selected, the Snap checks whether the specified directory path exists and, if it does not, creates the directory. This property is not supported for the HTTP, HTTPS, SLDB, and SMB file protocols. Default value: Not selected
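For a local file:/// destination, "create the directory if it is missing" corresponds to creating every missing parent directory of the target path. The sketch below shows only that case. It rejects the protocols listed above as unsupported and leaves every other remote protocol unimplemented. `ensure_parent_directory` is a hypothetical helper, not part of the Snap.

```python
import os
from urllib.parse import urlsplit

# Protocols for which the text above says the option is not supported.
UNSUPPORTED = {"http", "https", "sldb", "smb"}


def ensure_parent_directory(file_url: str) -> None:
    """Hypothetical helper: create the parent directories of a file:/// target."""
    parts = urlsplit(file_url)
    scheme = parts.scheme.lower()
    if scheme in UNSUPPORTED:
        raise ValueError(f"'Create directory if not present' is not supported for {scheme}")
    if scheme != "file":
        raise NotImplementedError("this sketch only handles file:/// URLs")
    parent = os.path.dirname(parts.path)
    # exist_ok=True: nothing happens if the directory already exists.
    os.makedirs(parent, exist_ok=True)
```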

Flush interval (kB) | The flush interval, in kilobytes, during the file upload. The Snap can flush the output stream to the target file server after a given amount of data has been written. If the value is 0, the Snap flushes as often as possible, after each byte block is written. The larger the value, the less frequently the Snap flushes. Leave the property at the default of -1 to disable flushing during the upload. Flushing may help if the file upload experiences intermittent failures; however, more frequent flushes result in a slower upload. Example: 100 Default value: -1
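The three regimes of Flush interval (kB) (-1, 0, and a positive value) can be sketched as a block-copy loop. Two assumptions apply: 1 kB is taken as 1024 bytes, and the block size of 8192 bytes is arbitrary. Neither is stated above, and `copy_with_flush` is a hypothetical helper.

```python
from typing import BinaryIO


def copy_with_flush(source: BinaryIO, target: BinaryIO,
                    flush_interval_kb: int = -1, block_size: int = 8192) -> int:
    """Copy source to target, flushing per a Flush interval (kB) value.

    Returns the number of flush() calls made during the copy.
    """
    flushes = 0
    unflushed = 0
    threshold = flush_interval_kb * 1024  # assumption: 1 kB = 1024 bytes
    while True:
        block = source.read(block_size)
        if not block:
            break
        target.write(block)
        unflushed += len(block)
        # -1 disables flushing; 0 flushes after every block; N flushes once
        # at least N kB have been written since the previous flush.
        if flush_interval_kb >= 0 and unflushed >= threshold:
            target.flush()
            flushes += 1
            unflushed = 0
    return flushes
```

With 40 kB of input and 8 kB blocks, a setting of 0 flushes after each of the 5 blocks, 16 flushes twice, and -1 never flushes. This illustrates the speed trade-off described above.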


Examples

- For SFTP files, if you attempt to write a file to a directory where you do not have write access, the write operation fails with an "access denied" error. When you get an SFTP account credential, it is also important to know where your home directory is, for example:

sftp://ftp.snaplogic.com/home/mrtest for username "mrtest"

- HDFS Example

For HDFS file access, please use a SnapLogic on-premises Groundplex and make sure that its instance is within the Hadoop cluster and SSH authentication has already been established. You can access HDFS files in the same way as other file protocols in File Reader and File Writer Snaps. There is no need to use any account in the Snap. The Snap also supports the webhdfs protocol, which does not require a Groundplex and works for all versions of Hadoop.

Note: HDFS 2.4.0 is supported for the hdfs protocol.

An example for HDFS is:

```
hdfs://<hostname>:<port number>/<path to folder>/<filename>
```

If Cloudera Hadoop Namenode is installed in AWS EC2 and its hostname is "ec2-54-198-212-134.compute-1.amazonaws.com" and its port number is 8020, then you would enter:

```
hdfs://ec2-54-198-212-134.compute-1.amazonaws.com:8020/user/john/input/sample.csv
```
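When the file name is assembled from separate host, port, and path values, the HDFS template can be filled in programmatically. This hypothetical Python helper builds the URL and optionally swaps in the webhdfs scheme. The swap follows the statement above that everything else is the same as for hdfs.

```python
def hdfs_url(hostname: str, port: int, folder: str, filename: str,
             webhdfs: bool = False) -> str:
    """Hypothetical helper: build hdfs://<hostname>:<port>/<folder>/<filename>."""
    scheme = "webhdfs" if webhdfs else "hdfs"
    # Drop empty segments so leading, trailing, or doubled slashes in
    # `folder` do not produce "//" in the result.
    segments = [part for part in folder.split("/") if part] + [filename]
    return f"{scheme}://{hostname}:{port}/" + "/".join(segments)
```

For the EC2 example above, `hdfs_url("ec2-54-198-212-134.compute-1.amazonaws.com", 8020, "/user/john/input", "sample.csv")` reproduces the URL shown.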


Example pipeline file for an SLDB file write, as shown below:

Common Error Messages

| Message | Cause |
|---|---|
| `Reason: Could not evaluate expression: filepath Mismatched input ':' expecting {<EOF>, '\|\|', '&&', '^', '==', '!=', '>', '<', '>=', '<=', '+', '-', '*', '/', '%', '?', '[', PropertyRef}. Resolution: Please check expression syntax` | The expression toggle (=) is selected on the File name field, so the Snap tries to evaluate the file path as an expression. Click the toggle to take the field out of expression mode. |
| `Reason: Failure: Resolution: Please check expression syntax and data types.` | The expression toggle (=) is selected on the File name field, so the Snap tries to evaluate the file name as an expression. Click the toggle to take the field out of expression mode. |
