
| Snap type: | Read |
|---|---|
| Description: | This Snap reads ORC files from SLDB, HDFS, S3, and WASB, and converts the data into documents. |
| Prerequisites: | None |
| Support and limitations: | The Snap works |
| Account: | Depending on the data source, provide valid account information for either an AWS S3 Account or an Azure Storage Account. |
| Views: | |
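ORC stores data column by column, but the Snap's output is documents. The Python sketch below gives one rough way to picture that conversion. It pivots columns that have already been decoded into row documents, with each row becoming a dict keyed by column name. That one-row-per-document mapping is an assumption. This is not the Snap's implementation, and it does not decode an ORC file. `columns_to_documents` and the sample column names are hypothetical.

```python
from typing import Any


def columns_to_documents(columns: dict[str, list[Any]]) -> list[dict[str, Any]]:
    """Pivot column-oriented data into one dict ("document") per row.

    Hypothetical helper: `columns` stands in for values already decoded
    from an ORC file; reading the file itself is not shown.
    """
    # Every column must hold the same number of values (one per row).
    lengths = {len(values) for values in columns.values()}
    if len(lengths) > 1:
        raise ValueError("all columns must have the same number of rows")
    row_count = lengths.pop() if lengths else 0
    # Build row i from the i-th value of every column, keeping column order.
    return [
        {name: values[i] for name, values in columns.items()}
        for i in range(row_count)
    ]
```

For example, `columns_to_documents({"id": [1, 2], "name": ["a", "b"]})` returns `[{"id": 1, "name": "a"}, {"id": 2, "name": "b"}]`.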

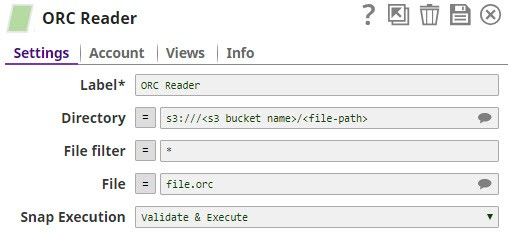

Settings

Label | Required. The name of the Snap. Modify it to be more specific, especially if your pipeline contains more than one instance of the same Snap.

Directory | The path to the directory from which the ORC Reader Snap reads data. All files in the directory must be in ORC format. Basic directory URI structure

When you use the ABFS protocol to connect to an endpoint, the account name and endpoint details provided in the URL override the corresponding values in the Account Settings fields.

The Directory property is not used during pipeline execution or preview; it is used only by the Suggest operation. When you click the Suggest icon, the Snap lists the subdirectories under the given directory, filtered by the value of the Filter property. Example:

Default value: hdfs://<hostname>:<port>/
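The ABFS rule for the Directory property says that values in the URL take precedence over the Account Settings fields. The Python sketch below illustrates that rule. It assumes the Hadoop ABFS URI form `abfs[s]://<file_system>@<account_name>.dfs.core.windows.net/<path>`. The function `effective_abfs_settings` and the keys `account_name`, `endpoint`, `file_system`, and `path` are hypothetical stand-ins, not SnapLogic field names.

```python
from urllib.parse import urlparse


def effective_abfs_settings(url: str, account_settings: dict) -> dict:
    """Return account settings with the account name and endpoint from an
    ABFS URL overriding the configured values (hypothetical helper)."""
    parsed = urlparse(url)
    if parsed.scheme not in ("abfs", "abfss"):
        # Non-ABFS URLs leave the account settings untouched.
        return dict(account_settings)
    # netloc looks like "<file_system>@<account_name>.<endpoint>".
    file_system, sep, host = parsed.netloc.partition("@")
    if not sep:
        raise ValueError(f"ABFS URL has no '<file_system>@' part: {url}")
    account_name, _, endpoint = host.partition(".")
    effective = dict(account_settings)
    effective["account_name"] = account_name  # URL value wins over the account
    if endpoint:
        effective["endpoint"] = endpoint  # e.g. "dfs.core.windows.net"
    effective["file_system"] = file_system
    effective["path"] = parsed.path
    return effective
```

With `abfss://data@myacct.dfs.core.windows.net/orc/input`, the sketch uses `myacct` and `dfs.core.windows.net` even if the account was configured with different values. Both names in that URL are placeholders.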

Filter
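The Suggest lists for Directory (subdirectories) and File (regular files) are both generated by applying the Filter value to a directory listing. The Python sketch below models that step. For illustration only, it assumes that Filter is a glob pattern such as `*.orc` and that matching is case-sensitive. The Snap's actual matching rules may differ, and `suggest` and its inputs are hypothetical.

```python
from fnmatch import fnmatchcase


def suggest(entries: list[tuple[str, bool]], pattern: str = "*",
            directories: bool = True) -> list[str]:
    """Model of a Suggest list (hypothetical).

    entries: (name, is_directory) pairs from one directory listing.
    pattern: the Filter value, assumed here to be a glob such as "*.orc".
    directories: True for the Directory Suggest (subdirectories),
                 False for the File Suggest (regular files).
    """
    # Keep only entries of the requested kind whose names match the pattern.
    return sorted(
        name
        for name, is_dir in entries
        if is_dir == directories and fnmatchcase(name, pattern)
    )
```

For a listing that contains the subdirectories `2023` and `archive` and the files `file.orc` and `notes.txt`, `suggest(listing, "*.orc", directories=False)` returns `["file.orc"]`.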

File | Required for standard mode. The filename, or a relative path to a file under the directory specified in the Directory property. The value must not start with the URL separator "/". The File property can be a JavaScript expression, which is evaluated with values from the input view document. When you click the Suggest icon, the Snap lists the regular files under the directory in the Directory property, filtered by the value of the Filter property.

Default value: [None]
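The File rules combine into one path: a filename or relative path under Directory, never starting with "/", and optionally computed from the input document. The Python helper below sketches that combination. `resolve_file` is hypothetical. A Python callable stands in for a JavaScript expression, which the Snap evaluates itself.

```python
from typing import Any, Callable, Optional, Union

# A File value is either a literal name or, standing in for a JavaScript
# expression, a function of the input document.
FileValue = Union[str, Callable[[dict[str, Any]], str]]


def resolve_file(directory: str, file: FileValue,
                 input_doc: Optional[dict[str, Any]] = None) -> str:
    """Combine a Directory URI and a File value into one full URI (hypothetical helper)."""
    if callable(file):
        if input_doc is None:
            raise ValueError("an expression-based File value needs an input document")
        name = file(input_doc)  # evaluate against the incoming document
    else:
        name = file
    # File is relative to Directory, so a leading "/" is rejected.
    if name.startswith("/"):
        raise ValueError(f'File must not start with "/": {name!r}')
    # Add exactly one separator between the directory and the file.
    base = directory if directory.endswith("/") else directory + "/"
    return base + name
```

For example, `resolve_file("hdfs://localhost:8020/tmp", "file.orc")` yields `hdfs://localhost:8020/tmp/file.orc`. The host and port are placeholders.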


Examples

...


Reading from a Local Instance of HDFS

You can configure the ORC Reader Snap to read from a specific directory in a local HDFS instance. In this example, the Snap reads the file.orc file in the /tmp directory.

...


Reading from a Local Instance of S3

You can configure the ORC Reader Snap to read from a specific directory in a local S3 instance. In this example, the Snap reads the /<file-path>/file.orc file.

Troubleshooting


Related Information

...


See Also


...