
Key Components

There are three key components involved with the Redshift Bulk Snaps.

EC2 Instance

Redshift Cluster

S3

These components can reside in the same AWS account or in different accounts. When the Pipeline executes, they perform the following operations:

EC2: Archives input data and writes the data into the specified S3 bucket/folder.

ABC.csv.gz -> s3://swat-3032/datalake/raw

Redshift Cluster: Copies the data from S3 to a Redshift temporary table using the COPY command.

COPY "public"."swat3032_update_temp_table_XYZ" ("id", "name", "price")

FROM 's3://swat-3032/datalake/raw/Redshift_load_temp/ABC.csv.gz'

CREDENTIALS '...'

S3: Loads the data from the temporary table to a target table. For the Upsert Snap, this is an UPDATE followed by an INSERT operation.
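The final load step can be pictured as an UPDATE of the rows that already exist in the target table, followed by an INSERT of the rows that do not. The sketch below is only an illustration under stated assumptions: it uses Python's built-in sqlite3 module as a stand-in for Redshift, the table names `products` and `products_temp` and the helper `upsert_from_temp` are hypothetical, and the SQL that the Upsert Snap actually issues may differ.

```python
import sqlite3


def upsert_from_temp(conn, target, temp, key, columns):
    """Hypothetical helper: UPDATE matching rows, then INSERT the new ones."""
    non_key = [c for c in columns if c != key]
    # Each non-key column takes its value from the temp row with the same key.
    set_clause = ", ".join(
        f'"{c}" = (SELECT t."{c}" FROM "{temp}" AS t '
        f'WHERE t."{key}" = "{target}"."{key}")'
        for c in non_key
    )
    col_list = ", ".join(f'"{c}"' for c in columns)
    with conn:  # run both statements in one transaction
        # Step 1: update target rows whose key is present in the temp table.
        conn.execute(
            f'UPDATE "{target}" SET {set_clause} '
            f'WHERE "{key}" IN (SELECT "{key}" FROM "{temp}")'
        )
        # Step 2: insert temp rows whose key is not yet in the target.
        conn.execute(
            f'INSERT INTO "{target}" ({col_list}) '
            f'SELECT {col_list} FROM "{temp}" AS t '
            f'WHERE NOT EXISTS (SELECT 1 FROM "{target}" AS d '
            f'WHERE d."{key}" = t."{key}")'
        )


# In-memory database standing in for the Redshift cluster (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")
conn.execute("CREATE TABLE products_temp (id INTEGER, name TEXT, price REAL)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?, ?)", [(1, "pen", 1.0), (2, "ink", 3.5)]
)
conn.executemany(
    "INSERT INTO products_temp VALUES (?, ?, ?)", [(2, "ink", 4.0), (3, "pad", 2.25)]
)

upsert_from_temp(conn, "products", "products_temp", "id", ["id", "name", "price"])
rows = conn.execute("SELECT id, name, price FROM products ORDER BY id").fetchall()
print(rows)
```

Row 1 is untouched, row 2 is updated from the temporary table, and row 3 is inserted, which is the net effect an update-then-insert load is meant to have.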

To see the operation that each component performs, inspect the queries in the Redshift console.

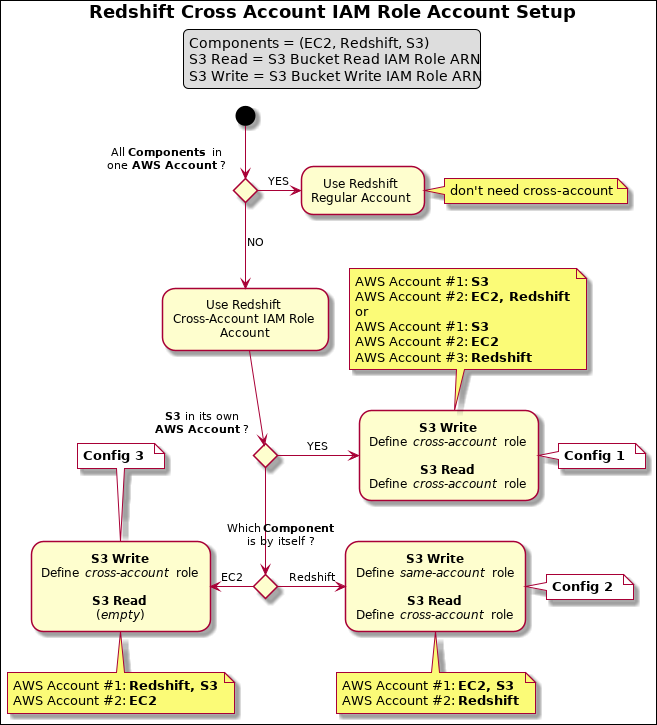

Configuring Redshift Cross Account with IAM Role

The following flow chart illustrates the cross-account roles that you should configure for each key component.


The following are typical Redshift cross-account IAM role configurations when the components are in different AWS accounts:

The legend lists the values that you can use in your account configuration.


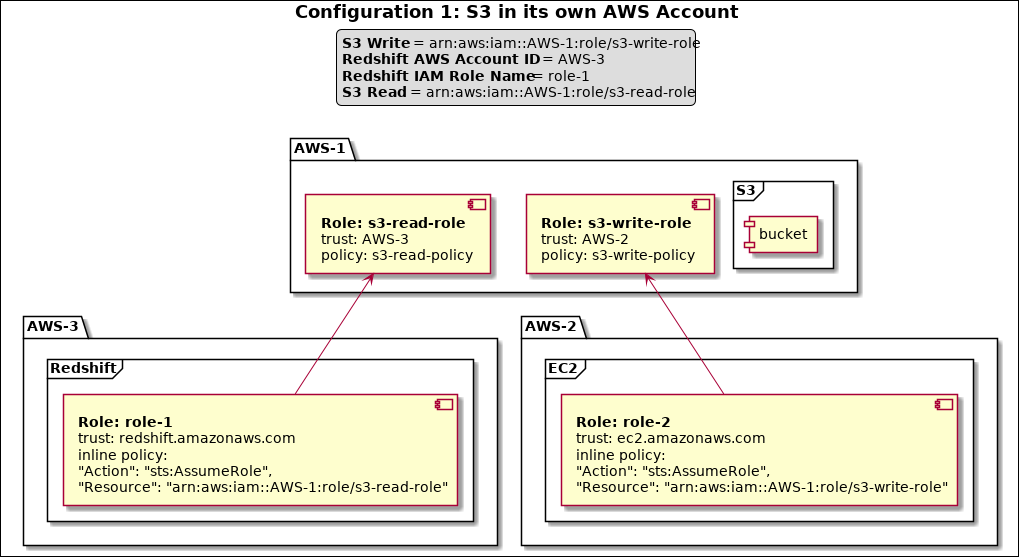

When all the components are in three different AWS accounts, the configuration centers on access to S3. You need Read (read from S3) and Write (write to S3) permissions for S3: EC2 must be able to write, and Redshift must be able to read.


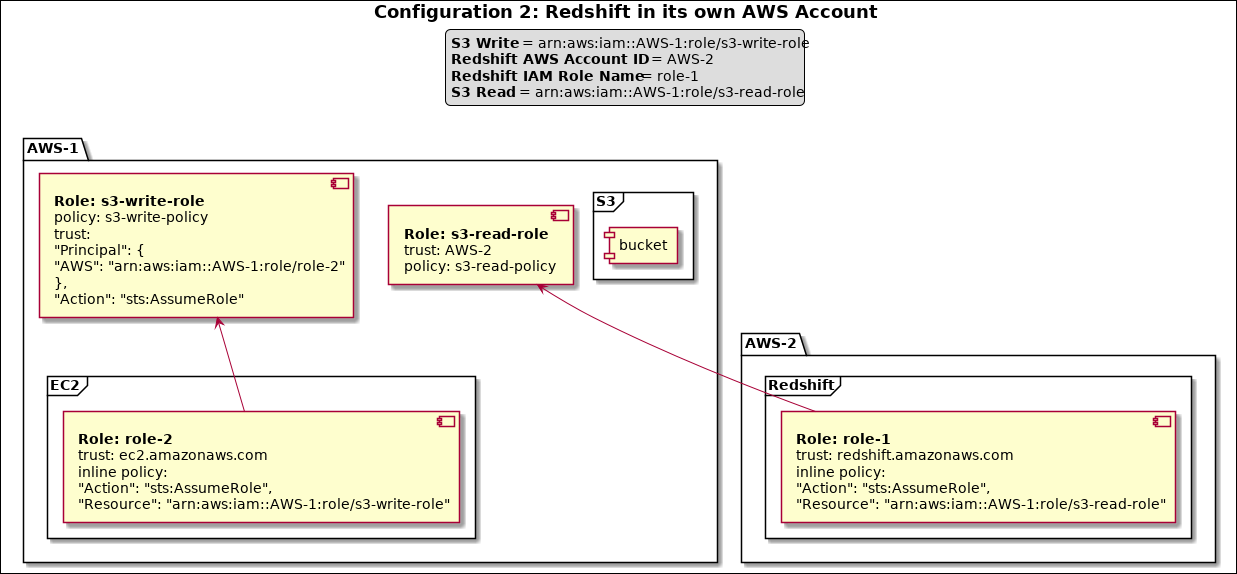

When Redshift is in one account and EC2 and S3 are in a different account, you need to define a role with an S3 write policy. Define another role in the same account for EC2 to assume that role and assign this role to the


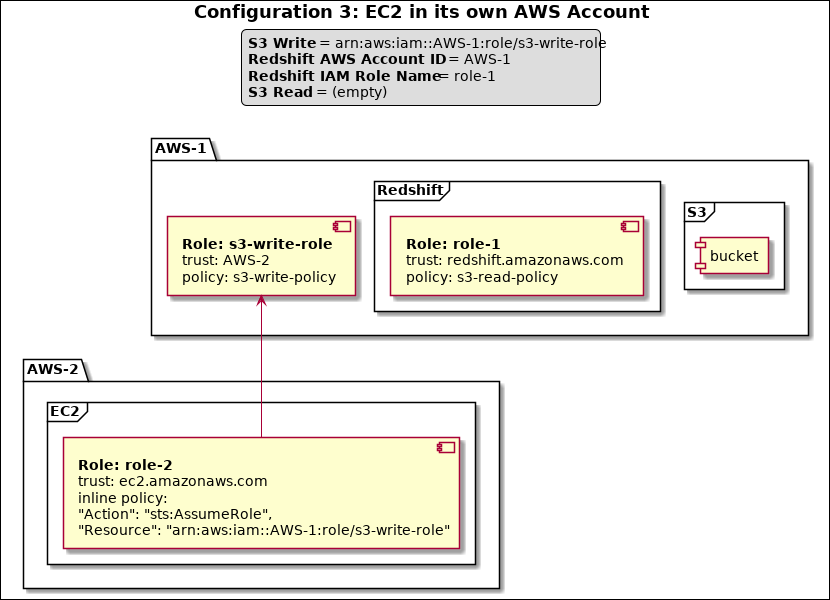

When Redshift and S3 are in the same account and EC2 is in another account, you can define a role (role1) with S3 read permission, and then define another role (role2) in the same account to assume role1. Assign role2 to the Redshift Cluster, and role1 to the S3 read field. Alternatively, leave S3 Read blank and ensure that the role assigned to the Redshift Cluster has S3 read permission.
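For one role to assume another, IAM generally expects the assumed role's trust policy to name the caller as a principal allowed to call `sts:AssumeRole`; the calling role is commonly also given a permissions policy that allows `sts:AssumeRole` on the target role. The following is a minimal sketch of those two documents for role1 and role2. The account ID `111122223333` and the helper names are hypothetical, and your own setup may need additional statements.

```python
import json


def role_arn(account_id: str, role_name: str) -> str:
    """Build an IAM role ARN from an account ID and a role name."""
    return f"arn:aws:iam::{account_id}:role/{role_name}"


def trust_policy(assuming_role_arn: str) -> dict:
    """Trust policy for role1: allows the given role (role2) to assume it."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": assuming_role_arn},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def assume_role_permission(target_role_arn: str) -> dict:
    """Permissions policy for role2: allows it to call AssumeRole on role1."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sts:AssumeRole",
                "Resource": target_role_arn,
            }
        ],
    }


# Hypothetical account ID, used for illustration only.
ACCOUNT_ID = "111122223333"
role1 = role_arn(ACCOUNT_ID, "role1")  # holds the S3 read permission
role2 = role_arn(ACCOUNT_ID, "role2")  # assigned to the Redshift Cluster

role1_trust = trust_policy(role2)
role2_permission = assume_role_permission(role1)

print(json.dumps(role1_trust, indent=2))
print(json.dumps(role2_permission, indent=2))
```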

Read and Write policies

Read policy

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "s3:ListBucket",
                    "s3:GetBucketLocation"
                ],
                "Resource": "arn:aws:s3:::bucket"
            },
            {
                "Effect": "Allow",
                "Action": [
                    "s3:GetObject"
                ],
                "Resource": "arn:aws:s3:::bucket/*"
            }
        ]
    }

Write policy

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject",
                    "s3:DeleteObject"
                ],
                "Resource": "arn:aws:s3:::bucket/*"
            }
        ]
    }
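In the policies above, `bucket` is a placeholder for your own bucket name. As a small convenience sketch, the following generates both documents for a given bucket. The function names are hypothetical, and the bucket name `swat-3032` is borrowed from the example earlier on this page.

```python
import json


def s3_read_policy(bucket: str) -> dict:
    """Read policy: list the bucket and get the objects in it."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                # Bucket-level actions apply to the bucket ARN itself.
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                # Object-level actions apply to the objects under the bucket.
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


def s3_write_policy(bucket: str) -> dict:
    """Write policy: put and delete objects in the bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


read_policy = s3_read_policy("swat-3032")
write_policy = s3_write_policy("swat-3032")
print(json.dumps(read_policy, indent=4))
print(json.dumps(write_policy, indent=4))
```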