
Setting Up Kerberos on Groundplex Nodes

To set up Kerberos on Groundplex nodes:

- Before starting the following setup, make sure the Groundplex is working correctly.

Install Kerberos packages on the Groundplex nodes.

$ sudo yum install krb5-workstation krb5-libs krb5-auth-dialog

Copy the file /etc/krb5.conf from one of the target cluster nodes to /etc/krb5.conf on each Groundplex node.
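After copying the file, it can help to confirm that each Groundplex node ended up with the same default realm as the cluster. The following is a minimal sketch, not part of the SnapLogic tooling: the function name krb5_default_realm is hypothetical, and it assumes the realm is set on a single default_realm = VALUE line.

```shell
# Print the default_realm value from a krb5.conf file (defaults to /etc/krb5.conf).
# Hypothetical helper; assumes a single "default_realm = VALUE" line.
krb5_default_realm() {
    conf="${1:-/etc/krb5.conf}"
    awk -F'=' '/^[ \t]*default_realm[ \t]*=/ {
        gsub(/[ \t]/, "", $2)   # strip whitespace around the value
        print $2
        exit
    }' "$conf"
}

# Example: run on a cluster node and on each Groundplex node, then compare.
# krb5_default_realm /etc/krb5.conf
```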

- Install the JCE extension on each Groundplex node.

- Download the JCE extension zip file: http://www.oracle.com/technetwork/java/javase/downloads/jce8-download-2133166.html

Copy the JCE extension zip file onto each Groundplex node and install the JCE extension with the following command. Restart the node after the installation.

$ unzip -o -j -q jce_policy-8.zip -d /opt/snaplogic/pkgs/jre1.8.0_45/lib/security/

To check that the JCE extension was installed correctly, run the following command. The output should match the line shown after it:

$ zipgrep CryptoAllPermission /opt/snaplogic/pkgs/jre1.8.0_45/lib/security/local_policy.jar

default_local.policy: permission javax.crypto.CryptoAllPermission;

Generate the keytab file for the Kerberos user, put it on each Groundplex node, and give snapuser access to the keytab file.

$ sudo cp /path/to/keytab/file /home/snapuser/<keytab_file_name>

$ sudo chown snapuser:snapuser /home/snapuser/<keytab_file_name>

$ sudo chmod 400 /home/snapuser/<keytab_file_name>
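The keytab placement can be checked before moving on. This is a sketch under stated assumptions: check_keytab is a hypothetical helper, it relies on GNU stat (Linux), and it should be run as snapuser so that the readability test reflects that user's access.

```shell
# Return 0 if the keytab exists, is readable by the current user, and has mode 400.
# Hypothetical helper; assumes GNU stat (Linux). Run it as snapuser.
check_keytab() {
    keytab="$1"
    [ -f "$keytab" ] || { echo "missing: $keytab" >&2; return 1; }
    [ -r "$keytab" ] || { echo "not readable: $keytab" >&2; return 1; }
    mode=$(stat -c '%a' "$keytab")
    [ "$mode" = "400" ] || { echo "unexpected mode $mode: $keytab" >&2; return 1; }
}

# Example:
# check_keytab /home/snapuser/<keytab_file_name>
```

To see which principals the keytab actually contains, klist -k -t followed by the keytab path lists its entries with timestamps.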

To provide additional Hadoop configuration details to the JCC, pass them as a JCC configuration option. For more details on configuration, see the Configuration page.

Update the global.properties file to add the following configuration option. The value of jcc.jvm_options points to the HDFS configuration directory.

jcc.jvm_options=-DHADOOP_CLIENT_CONF_DIR=<PATH_TO_HDFS_CONF_DIRECTORY>

For example:

jcc.jvm_options=-DHADOOP_CLIENT_CONF_DIR=/home/snapuser/remote-hadoop/conf
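A quick way to confirm that the option points at a usable directory is to read the path back out of the properties file and look for the client configuration files. The sketch below uses two hypothetical helpers, and it assumes the directory is expected to hold core-site.xml and hdfs-site.xml; adjust the file names if your distribution differs.

```shell
# Print the -DHADOOP_CLIENT_CONF_DIR value from the jcc.jvm_options line
# of the given properties file (hypothetical helper).
hadoop_conf_dir() {
    sed -n 's/^jcc\.jvm_options=.*-DHADOOP_CLIENT_CONF_DIR=\([^[:space:]]*\).*/\1/p' "$1"
}

# Return 0 if the directory holds the client configuration files.
# The file names are an assumption, not taken from this page.
has_hadoop_client_conf() {
    [ -f "$1/core-site.xml" ] && [ -f "$1/hdfs-site.xml" ]
}

# Example (pass the path to your global.properties file):
# dir=$(hadoop_conf_dir /path/to/global.properties)
# has_hadoop_client_conf "$dir" || echo "incomplete Hadoop client configuration in $dir"
```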

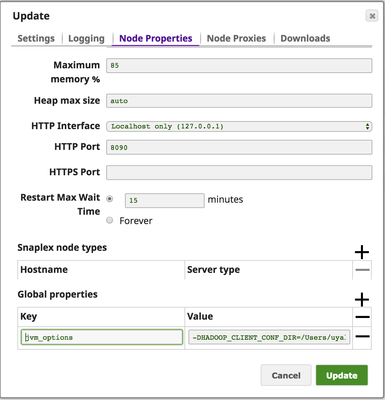

To provide additional Hadoop Configuration details to the JCC from the UI, edit the Snapplex properties in Manager.

Go to the Snapplex Node Properties. Under Global Properties, add a key named "jvm_options" with the value:

-DHADOOP_CLIENT_CONF_DIR=<PATH_TO_HDFS_CONF_DIRECTORY>

For example:

-DHADOOP_CLIENT_CONF_DIR=/home/snapuser/remote-hadoop/conf

Setting Up Edge Node with Kerberos Configurations on Groundplex Nodes

Edge nodes are the interface between the Hadoop cluster and the outside network. An edge node can be part of the Hadoop cluster or outside it. SnapLogic's suggested configuration is to make the edge node part of the HDFS cluster and to run the Groundplex on that edge node.

Different Hadoop distributions follow different steps to configure a node as an edge node. Refer to your distribution's documentation for setting up the edge node.

Testing Kerberos Configurations on Groundplex Nodes

The following commands can be used to test Kerberos configurations on the Groundplex nodes:

$ kinit -k -t /path/to/keytab/file <principal_name>

$ klist

For example, with a keytab file for the principal snaplogic/node1.snaplogic.dev.com@SNAPLOGIC.DEV.COM, you should be able to initialize the ticket cache and see output like this:

$ kinit -k -t /home/snapuser/snaplogic.keytab snaplogic/node1.snaplogic.dev.com@SNAPLOGIC.DEV.COM

$ klist

Ticket cache: FILE:/tmp/krb5cc_5112

Default principal: snaplogic/node1.snaplogic.dev.com@SNAPLOGIC.DEV.COM

Valid starting Expires Service principal

03/27/2017 18:39:59 03/28/2017 18:39:59 krbtgt/node1.snaplogic.dev.com@SNAPLOGIC.DEV.COM

renew until 04/03/2017 18:39:59
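When the same check is repeated on several nodes, the klist output can be compared against the expected principal in a script. The following is a minimal sketch; both function names are hypothetical, and it assumes the "Default principal:" line format shown in the example output.

```shell
# Read klist output on stdin and print the default principal (hypothetical helper).
klist_default_principal() {
    awk -F': ' '/^Default principal:/ { print $2 }'
}

# Return 0 if the klist output on stdin belongs to the expected principal.
ticket_matches() {
    [ "$(klist_default_principal)" = "$1" ]
}

# Example:
# klist | ticket_matches 'snaplogic/node1.snaplogic.dev.com@SNAPLOGIC.DEV.COM' \
#     || echo "ticket cache holds a different principal"
```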

Common Issues

How to Create a New User for Hive with Kerberos

Create a Linux user on each CDH cluster node.

Create a home directory on the CDH cluster's HDFS for the new user.

Create a Kerberos principal for the new user.

Snap fails with error: [Cloudera][HiveJDBCDriver](500051) ERROR processing query/statement.

Error Code: ERROR_STATE, SQL state: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask, Query: ......

In the Snap's account setting:

- If the authentication type is Kerberos, make sure all the steps in How to Create a New User for Hive with Kerberos have been done correctly.

- If the authentication type is User ID or User ID and Password, make sure the first two steps of How to Create a New User for Hive with Kerberos have been done correctly.

- If the authentication type is None, a home directory for an anonymous user should be created on HDFS.

Snap failed with error: Unable to authenticate the client to the KDC using the provided credentials.

This error indicates that Kerberos is not configured properly on the Groundplex nodes. See the section Setting Up Kerberos on Groundplex Nodes.

Snap failed with error: Keytab file does not exist or is not readable.

As the error message says, the keytab file is either missing or snapuser does not have access to it. See the keytab step in Setting Up Kerberos on Groundplex Nodes.

Common errors with HDFS Cluster authenticated with Kerberos

Symptom:

An attempt to access a Kerberos-enabled Hadoop Distributed File System (HDFS) on any cluster host fails with the error message "SIMPLE authentication is not enabled", even though all Kerberos parameters are configured correctly.

Error Message:

The following exception is displayed as the error message for HDFS Snaps that have Kerberos authentication enabled.

java.util.concurrent.ExecutionException: java.io.IOException:

Failed on local exception: java.io.IOException: org.apache.hadoop.security.AccessControlException:

Client cannot authenticate via:[TOKEN, KERBEROS];

Cause:

All hosts that participate in the Kerberos authentication system must have their internal clocks synchronized within a specified maximum amount of time (known as clock skew). This requirement provides another Kerberos security check. If the clock skew is exceeded between any of the participating hosts, client requests are rejected.

Resolution:

Keeping the clocks of the KDCs and the Kerberos clients synchronized is essential. Use Network Time Protocol (NTP) software to synchronize them.
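To confirm that clock skew is the problem, the clock on the Groundplex node can be compared with the clock on the KDC or a cluster host. The sketch below is illustrative: clock_skew_ok is a hypothetical helper, and the 300-second limit is the MIT Kerberos default, which your realm may override.

```shell
# Return 0 if two epoch timestamps differ by no more than max_skew seconds.
# Arguments: local_epoch remote_epoch [max_skew]; max_skew defaults to 300,
# the MIT Kerberos default (an assumption about your realm's setting).
clock_skew_ok() {
    diff=$(( $1 - $2 ))
    [ "$diff" -lt 0 ] && diff=$(( -diff ))
    [ "$diff" -le "${3:-300}" ]
}

# Example (kdc.example.com is a placeholder host name):
# clock_skew_ok "$(date +%s)" "$(ssh kdc.example.com date +%s)" \
#     || echo "clock skew exceeds the allowed maximum"
```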

Symptom:

An attempt to access a Kerberos-enabled Hadoop Distributed File System (HDFS) on any cluster host fails with the error message "Server has invalid Kerberos principal:", even though all Kerberos parameters are configured correctly.

Error Message:

The following exception is displayed as the error message.

Failed on local exception: java.io.IOException:

java.lang.IllegalArgumentException: Server has invalid Kerberos principal:

hdfs/cdhclusterqa-2-1.clouddev.snaplogic.com@CLOUDDEV.SNAPLOGIC.COM;

Host Details : local host is: "<LOCALHOST>/127.0.0.1";

destination host is: "cdh2-1.devsnaplogic.com":8020;

Cause:

When Kerberos authentication is configured on the HDFS server, the following properties are added:

<property>
  <name>dfs.namenode.kerberos.principal</name>
  <value>hdfs/_HOST@YOUR-REALM.COM</value>
</property>
<property>
  <name>dfs.datanode.kerberos.principal</name>
  <value>hdfs/_HOST@YOUR-REALM.COM</value>
</property>

The special string _HOST in the properties is replaced at run-time by the fully-qualified domain name of the host machine where the daemon is running. This requires that reverse DNS is properly working on all the hosts configured this way.

Resolution:

One potential cause of this issue is that the host behind "_HOST" has multiple host names, and the host name in the "Service Principal" of the Snap Kerberos configuration does not match the host name resolved on the NameNode or DataNode. The error message displays the server's service principal. Make sure that the same service principal is provided in the Snap Kerberos configuration.

More details can be found at

You can use the HadoopDNSResolver tool to verify the DNS Names. Details on the usage of the tool are provided in the same page.
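A mismatch of this kind can be spotted by comparing the host component of the principal in the error message with the destination host. The following sketch uses hypothetical helper names and assumes principals of the form service/host@REALM.

```shell
# Print the host component of a service principal of the form service/host@REALM.
principal_host() {
    hostpart="${1#*/}"               # drop the leading "service/"
    printf '%s\n' "${hostpart%@*}"   # drop the trailing "@REALM"
}

# Return 0 if the principal's host component equals the given host name.
principal_matches_host() {
    [ "$(principal_host "$1")" = "$2" ]
}

# Example with the values from the error message above:
# principal_matches_host \
#     'hdfs/cdhclusterqa-2-1.clouddev.snaplogic.com@CLOUDDEV.SNAPLOGIC.COM' \
#     'cdh2-1.devsnaplogic.com' || echo "principal host differs from destination host"
```

With the values from the sample error, the check fails because the principal names cdhclusterqa-2-1.clouddev.snaplogic.com while the destination host is cdh2-1.devsnaplogic.com.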

Symptom:

- The HDFS Reader Snap times out while reading data, even though all the credentials are provided correctly.

- The HDFS Writer Snap times out while writing data, even though all the credentials are provided correctly.

Error Message:

The following exception is displayed as the error message for an HDFS Writer Snap that has Kerberos authentication enabled.

java.lang.Thread.State: WAITING

at java.lang.Object.wait(Object.java:-1)

at org.apache.hadoop.hdfs.DFSOutputStream.waitForAckedSeqno(DFSOutputStream.java:2119)

at org.apache.hadoop.hdfs.DFSOutputStream.flushInternal(DFSOutputStream.java:2101)

at org.apache.hadoop.hdfs.DFSOutputStream.closeImpl(DFSOutputStream.java:2232)

- locked <0x2fca> (a org.apache.hadoop.hdfs.DFSOutputStream)

at org.apache.hadoop.hdfs.DFSOutputStream.close(DFSOutputStream.java:2204)

at org.apache.hadoop.fs.FSDataOutputStream$PositionCache.close(FSDataOutputStream.java:72)

at org.apache.hadoop.fs.FSDataOutputStream.close(FSDataOutputStream.java:106)

at java.io.FilterOutputStream.close(FilterOutputStream.java:159)

Cause:

This can have the following causes:

- The DataNode is not responding to the Groundplex requests.

- Security or firewall settings are blocking access from the Groundplex to the ports on the DataNode.

Resolution:

The edge node on which the Groundplex is running should be able to access all the standard Hadoop ports. Refer to the default port list for your Hadoop distribution.
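Port reachability from the edge node can be tested with a short script. This is a sketch, not a SnapLogic tool: port_open and check_ports are hypothetical helpers, they rely on bash's /dev/tcp redirection and the coreutils timeout command, and the host and port list must come from your own cluster.

```shell
# Return 0 if a TCP connection to host:port succeeds within 3 seconds.
# Uses bash's /dev/tcp redirection and coreutils timeout.
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Report reachability of each listed port on a host.
check_ports() {
    host="$1"; shift
    for port in "$@"; do
        if port_open "$host" "$port"; then
            echo "$host:$port reachable"
        else
            echo "$host:$port NOT reachable"
        fi
    done
}

# Example: 8020 is the NameNode port from the error message earlier on this page.
# Add the DataNode ports that your distribution uses.
# check_ports cdh2-1.devsnaplogic.com 8020
```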