
You can use this Snap to execute arbitrary Snowflake SQL. This Snap works only with single queries.

The Snap replaces the valid JSON paths defined in the WHERE clause of queries or statements with the corresponding values from the incoming document. If the incoming document does not carry a substituting value, the Snap writes the document to the error view. If the Snap executes a SELECT query, it merges the query results into the incoming document and overwrites the values of any existing keys. If the query returns no results, the Snap writes the original document. If an output view is available and the Snap executes an UPDATE, INSERT, MERGE, or DELETE statement, it outputs the original document that was used to create the statement, along with the status of the executed statement.
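The substitution and merge rules above can be sketched in Python as follows. This is a minimal model under stated assumptions, not the Snap's implementation:

* `bind`, `lookup`, `merge_result`, and `MissingValueError` are hypothetical names.
* JSON paths are simplified to dotted keys.
* The `?` placeholders assume a JDBC-style prepared statement.
* A SELECT that returns several rows is assumed to produce one output document per row.

```python
from __future__ import annotations

import re
from typing import Any

# Matches a JSON path such as $id or $people.name (simplified: dotted keys only).
JSON_PATH = re.compile(r"\$([A-Za-z_]\w*(?:\.[A-Za-z_]\w*)*)")


class MissingValueError(KeyError):
    """Raised when the incoming document lacks a value for a JSON path (error-view case)."""


def lookup(doc: dict, path: str) -> Any:
    """Follow a dotted path such as 'people.name' through nested dictionaries."""
    value: Any = doc
    for part in path.split("."):
        if not isinstance(value, dict) or part not in value:
            raise MissingValueError(path)
        value = value[part]
    return value


def bind(sql: str, doc: dict) -> tuple[str, list]:
    """Replace JSON paths after the first WHERE keyword with '?' placeholders
    and collect their values in order (hypothetical prepared-statement model)."""
    where = re.search(r"\bwhere\b", sql, re.IGNORECASE)
    if where is None:
        return sql, []
    head, tail = sql[: where.end()], sql[where.end():]
    params: list = []

    def to_placeholder(match: re.Match) -> str:
        params.append(lookup(doc, match.group(1)))
        return "?"

    return head + JSON_PATH.sub(to_placeholder, tail), params


def merge_result(doc: dict, rows: list[dict]) -> list[dict]:
    """Merge SELECT rows into the input document; row values overwrite existing keys.
    With no rows, the original document is returned unchanged.
    Assumption: one output document per result row."""
    if not rows:
        return [doc]
    return [{**doc, **row} for row in rows]
```

For example, `bind("select * from people where name = $people.name", {"people": {"name": "Ann"}})` returns the statement with `?` in place of the path and `["Ann"]` as its parameter list. With an empty document, the same call raises `MissingValueError`, which corresponds to the document being written to the error view.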


The Snowflake - Execute Snap is a Write-type Snap that executes arbitrary Snowflake SQL.

You need at least the following permissions in your Snowflake account to execute this Snap:

Usage (DB and Schema): Privilege to use the database, role, and schema.

Create table: Privilege to create a table in the database schema for the role.

The following commands grant the minimum privileges in the Snowflake console:

grant usage on database <database_name> to role <role_name>;
grant usage on schema <database_name>.<schema_name> to role <role_name>;
grant create table on schema <database_name>.<schema_name> to role <role_name>;

For more information on Snowflake privileges, refer to Access Control Privileges.

The privileges you must grant, including database usage and table creation, depend on the queries you provide in this Snap.

This Snap works in Ultra Pipelines. However, we recommend that you do not use this Snap in an Ultra Pipeline.

User-defined functions (UDFs) created in the Snowflake console can be executed using the Snowflake - Execute Snap. For an example, see Snowflake Execute Snap supports UDFs.

Updating the JDBC driver to a newer version may break existing Pipelines that use this Snap.

With the updated JDBC driver (version 3.12.3), the Snowflake Execute and Multi-Execute Snaps output a Status of -1 instead of 0, without the Message field, after successfully executing DDL statements. If your Pipelines use these Snaps and downstream Snaps depend on the value of the Status field, you must modify the downstream Snaps to proceed on a status value of -1 instead of 0.

This change in the Snap behavior follows from the change introduced in the Snowflake JDBC driver in version 3.8.1:

"Statement.getUpdateCount() and PreparedStatement.getUpdateCount() return the number of rows updated by DML statements. For all other types of statements, including queries, they return -1."
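As a rough illustration of the downstream change, the following Python sketch shows a success check that accepts the new DDL status of -1. It also accepts the old value of 0, so the same check works before and after the driver upgrade. The `status` key name, the `statement_succeeded` function, and the `is_ddl` flag are hypothetical. In a real Pipeline, the equivalent condition would be an expression in a downstream Snap.

```python
def statement_succeeded(output_doc: dict, is_ddl: bool) -> bool:
    """Hypothetical downstream success check for a Snowflake Execute output document.

    Assumes the statement status is stored under a "status" key.
    """
    status = output_doc.get("status")
    if not isinstance(status, int) or isinstance(status, bool):
        return False
    if is_ddl:
        # JDBC driver 3.8.1+ returns -1 from getUpdateCount() for non-DML statements;
        # the pre-upgrade value 0 is also accepted so the check spans driver versions.
        return status in (-1, 0)
    # For DML, the status is the number of rows updated, so any count >= 0 is valid.
    return status >= 0
```

Accepting both values is a design choice for a migration window. Once every environment runs the newer driver, you can narrow the DDL branch to `status == -1`.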


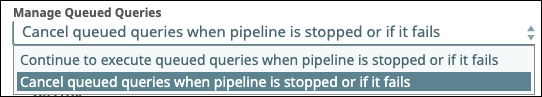

Because of performance issues, all Snowflake Snaps now ignore the Cancel queued queries when pipeline is stopped or if it fails option for Manage Queued Queries, even when selected. Snaps behave as though the default Continue to execute queued queries when the Pipeline is stopped or if it fails option were selected.

In 4.26, when stored procedures were called using the Database Execute Snaps, the queries were treated as write queries instead of read queries, so the output displayed the message and status keys after the stored procedure ran.

In 4.27, all the Database Execute Snaps run stored procedures correctly; that is, the queries are treated as read queries. The output now displays the message key and the OUT parameters of the procedure (if any). The status key is not displayed.

If the stored procedure has no OUT parameters, only the message key is displayed, with the value success.
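The following Python sketch shows how downstream logic might read the 4.27 output shape. It splits the message from the OUT parameters and flags a leftover status key, which indicates the older output. The function name is hypothetical. The code also assumes that every key other than message is an OUT parameter.

```python
from __future__ import annotations

from typing import Any


def read_procedure_output(doc: dict[str, Any]) -> tuple[Any, dict[str, Any]]:
    """Split a stored-procedure output document (4.27 and later) into its
    message and its OUT parameters.

    Assumption: every key other than "message" is an OUT parameter.
    """
    if "status" in doc:
        # A status key indicates the 4.26-era output, where the call was treated
        # as a write query; mappings built on that shape need to be revisited.
        raise ValueError("unexpected status key: output uses the pre-4.27 shape")
    out_params = {key: value for key, value in doc.items() if key != "message"}
    return doc.get("message"), out_params
```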

From the 4.30 release, the Snowflake Execute Snap writes the output value as-is for FLOAT or DOUBLE columns whose value is NaN (Not a Number). Earlier, the Snap displayed an exception error when a FLOAT or DOUBLE column had the value NaN. This behavior is not backward compatible.
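Because NaN values now reach downstream logic, equality checks against NaN need care. The Python sketch below assumes that a NaN column value arrives as an IEEE floating-point NaN, which is `float("nan")` in Python. The function and column names are hypothetical.

```python
from __future__ import annotations

import math


def nan_columns(row: dict, float_columns: list[str]) -> list[str]:
    """Return the FLOAT/DOUBLE columns whose value is NaN.

    NaN never compares equal to anything, including itself, so a check such as
    value == float("nan") is always False; math.isnan() is the reliable test.
    """
    return [
        column
        for column in float_columns
        if isinstance(row.get(column), float) and math.isnan(row[column])
    ]
```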

If you have existing Pipelines that map the status key or rely on the previous output format, those Pipelines will fail, so you might need to revisit your Pipeline design.


| Type | Format | Number of Views | Examples of Upstream and Downstream Snaps | Description |
|---|---|---|---|---|
| Input | Document | | | Each incoming document supplies the values that the Snap substitutes for the JSON paths (for example, $id) in the SQL statement. |
| Output | Document | | | If an output view is available, the output document contains the results of a SELECT query merged into the input document. For an UPDATE, INSERT, MERGE, or DELETE statement, it contains the original input document with the status of the executed statement. |
| Error | | | | Error handling is a generic way to handle errors without losing data or failing the Snap execution. You can handle the errors that the Snap might encounter when running the Pipeline by choosing an option from the When errors occur list under the Views tab. Learn more about Error handling in Pipelines. |

Field Name | Field Type | Description | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

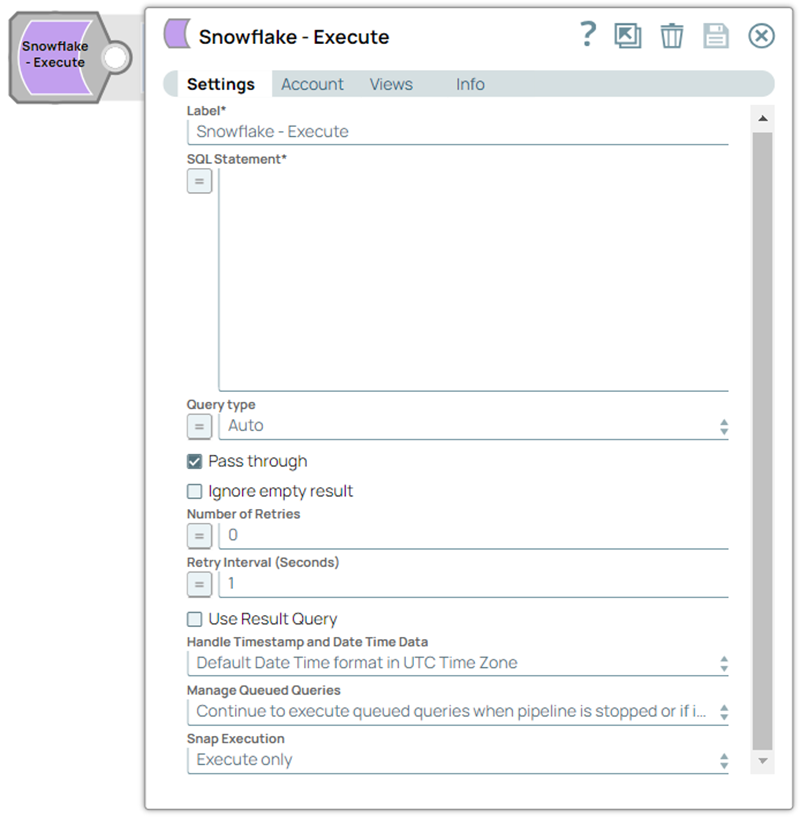

Label* Default Value: Snowflake - Execute | String | Specify the name for the Snap. You can make the name more specific, especially if your Pipeline has more than one of the same Snap. | |||||||||||

SQL Statement* Default Value: N/A Example: INSERT into SnapLogic.book (id, book) VALUES ($id,$book) | String/Expression | Specify the Snowflake SQL statement to execute on the server. We recommend you to add a single query in the SQL Statement field. We recommend you to add a single query in the SQL Statement field. Document value substitution is performed on literals starting with '$', for example, $people.name is substituted with its value available in the incoming document. In DB Execute Snaps, if the Snowflake SQL statement is not an expression, the JSON path, such as $para, is allowed in the WHERE clause only. If the query statement starts with SELECT (case-insensitive), the Snap regards it as a select-type query and executes once per input document. If not, the Snap regards it as a write-type query and executes in batch mode. This Snap does not allow you to inject Snowflake SQL, for example, select * from people where $columnName = abc. Without using expressions

Using expressions

| |||||||||||

Query type

Default Value: Auto | Dropdown list/Expression | Select the type of query for your SQL statement (Read or Write). When Auto is selected, the Snap tries to determine the query type automatically. | |||||||||||

Pass through Default Value: Selected | Checkbox | Select this checkbox to enable the Snap to pass the input document to the output view under the key named | |||||||||||

Ignore empty result Default Value: Deselected | Checkbox | Select this checkbox to not write any document to the output view when a SELECT operation does not produce any result. If this checkbox is not selected and the Pass-through checkbox is selected, the input document is passed through to the output view. | |||||||||||

Number of Retries Default Value: 0 | Integer | Specify the maximum number of attempts to be made to receive a response. The request is terminated if the attempts do not result in a response. If the value is larger than 0, the Snap first downloads the target file into a temporary local file. If any error occurs during the download, the Snap waits for the time specified in the Retry interval and attempts to download the file again from the beginning. When the download is successful, the Snap streams the data from the temporary file to the downstream Pipeline. All temporary local files are deleted when they are no longer needed. Ensure that the local drive has sufficient free disk space to store the temporary local file. Ensure that the local drive has sufficient free disk space to store the temporary local file. Minimum value: 0 | |||||||||||

Retry Interval (seconds) Default Value: 1 | Integer | Specify the time interval between two successive retry requests. A retry happens only when the previous attempt resulted in an exception. | |||||||||||

Use Result Query Default Value: Deselected | Checkbox | Select this checkbox to write the query execution result to the Snap's output view after the successful execution. The output of the Snap will be enclosed within the key This option allows users to effectively track the query's execution by clearly indicating the successful execution and the number of records affected, if any, after the execution. | |||||||||||

Handle Timestamp and Date Time Data Default value: Default Date Time format in UTC Time Zone Example: SnapLogic Date Time format in Regional Time Zone | Dropdown list | Specify how the Snap must handle timestamp and date time data. The available options are:

| |||||||||||

Manage Queued Queries Default value: Continue to execute queued queries when pipeline is stopped or if it fails Example: Cancel queued queries when the Pipeline is stopped or if it fails | Dropdown list | Select an option from the list to determine whether the Snap should continue or cancel the execution of the queued Snowflake Execute SQL queries when you stop the Pipeline. The available options are:

If you select Cancel queued queries when pipeline is stopped or if it fails, the read queries under execution are canceled, whereas the write type of queries under execution are not canceled. Snowflake internally determines which queries are safe to be canceled and cancels those queries. If you select Cancel queued queries when pipeline is stopped or if it fails, the read queries under execution are canceled, whereas the write type of queries under execution are not canceled. Snowflake internally determines which queries are safe to be canceled and cancels those queries. | |||||||||||

Default Value: Execute only | Dropdown list |
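The SQL Statement and Query type fields describe a simple classification rule: a statement starting with SELECT is read-type and runs once per input document, and anything else is write-type and runs in batches. The Python sketch below models that rule. It is an approximation, not the Snap's actual detection logic. `classify_query`, `plan_executions`, and the `batch_size` parameter are hypothetical, and ignoring leading whitespace is an assumption.

```python
from __future__ import annotations

import re

# Read-type if the statement starts with SELECT (case-insensitive).
# Assumption: leading whitespace is ignored; \b keeps words such as "selection" from matching.
_SELECT = re.compile(r"\s*select\b", re.IGNORECASE)


def classify_query(sql: str) -> str:
    """Approximate the documented Auto rule for the Query type field."""
    return "read" if _SELECT.match(sql) else "write"


def plan_executions(sql: str, docs: list[dict], batch_size: int) -> list[list[dict]]:
    """Group input documents into executions: one per document for read-type
    queries, fixed-size batches for write-type queries (batch_size is hypothetical)."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    if classify_query(sql) == "read":
        return [[doc] for doc in docs]
    return [docs[i : i + batch_size] for i in range(0, len(docs), batch_size)]
```

A literal prefix rule has an obvious blind spot: a read-only query that begins with WITH is classified as write-type in this sketch. For statements like that, selecting Read explicitly in the Query type field avoids depending on automatic detection.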

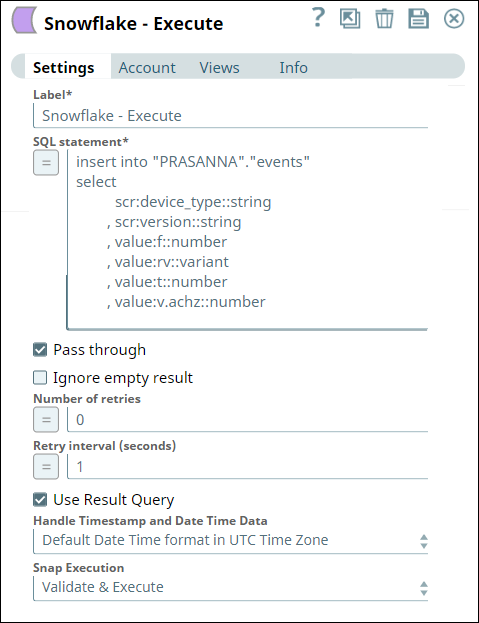

This example Pipeline demonstrates how to insert data into a table using the Snowflake Execute Snap.

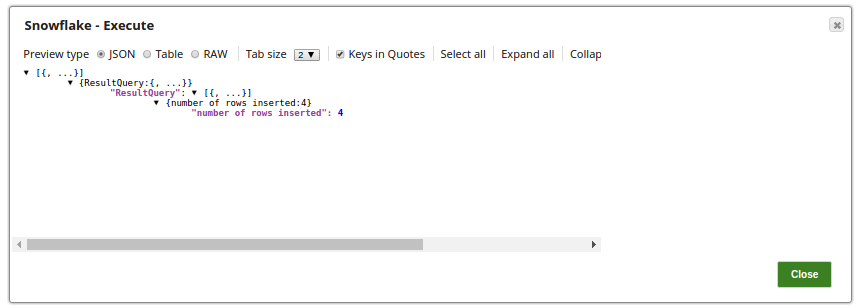

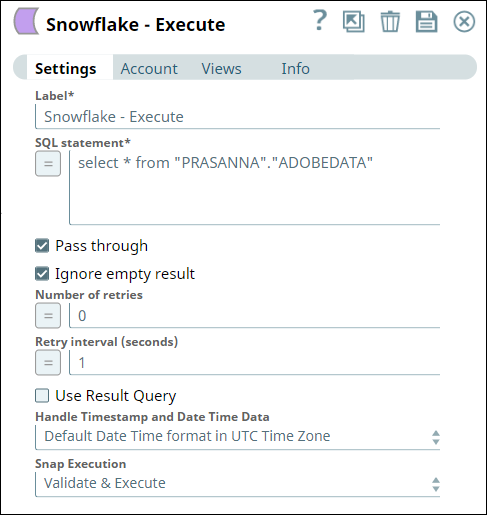

First, we configure the Snowflake Execute Snap as follows. Note that we select the Use Result Query checkbox to view the statement result output.

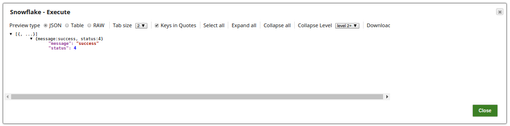

Upon execution, we see the following output enclosed within the key Result Query.

The following screenshot displays the output preview when we disable the Use Result Query checkbox.

The following example demonstrates the execution of a Snowflake SQL query using the Snowflake Execute Snap.

First, we configure the Execute Snap with the query select * from "PRASANNA"."ADOBEDATA", which returns the data from ADOBEDATA.

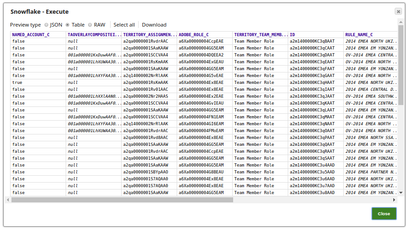

Upon successful execution, the Snap displays the following output in its data preview.

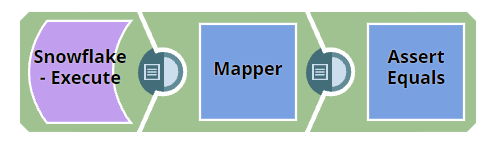

User-defined functions (UDFs) created in the Snowflake console can be executed using the Snowflake - Execute Snap. In the following example, the SQL statement that calls the UDF is defined, and then the Snap is executed with it.

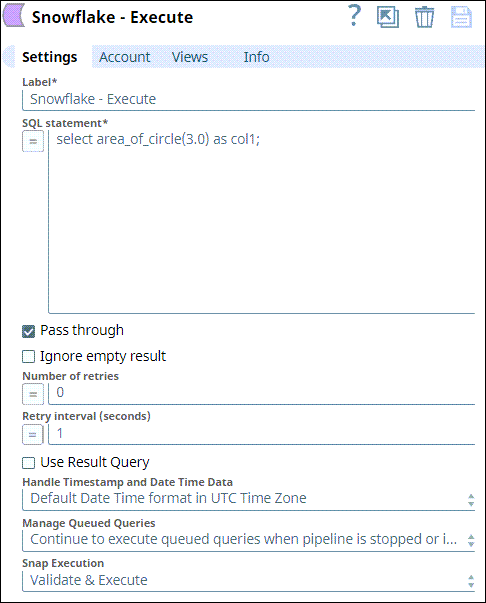

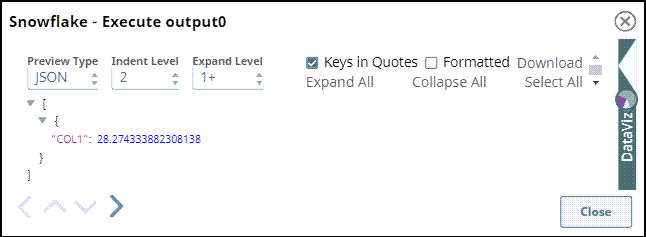

First, the Snowflake Execute Snap is configured with the SQL statement that calls the user-defined function; here, area_of_circle(3.0) is the UDF. The Snap settings and the output view are as follows:

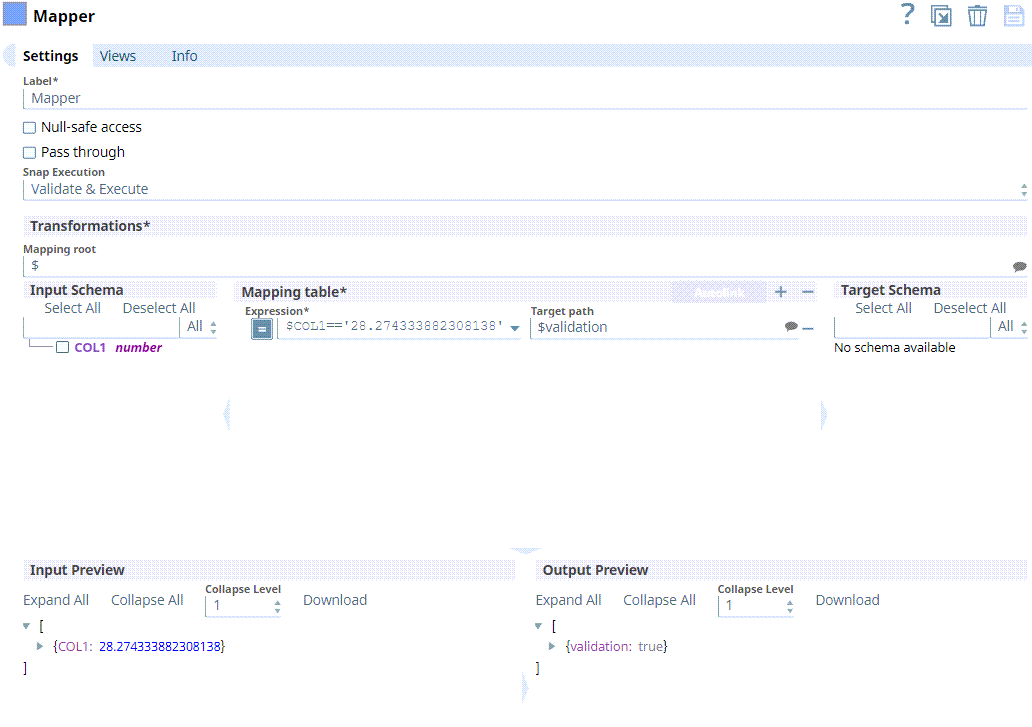

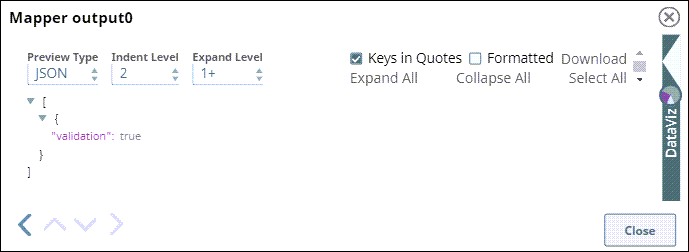

Then, the Mapper Snap is used to define the columns to pick up from the output of the Snowflake Execute Snap.
