Overview

You can use this Snap to implement the Slowly Changing Dimension Type 2 (SCD2) function in your Snowflake, Redshift, Azure Synapse, Databricks Lakehouse Platform, or BigQuery table. Configure the database type and the access to corresponding tables in the Configuring ELT Database Accounts for the Snap.

Switching the Snap behaviorThe ELT SCD2 Snap performs a MERGE INTO-based SCD2 operation as opposed to its initial INSERT-and-then-UPDATE approach. If you prefer to use the INSERT-and-then-UPDATE approach for your SCD2 operation, define the Pipeline parameter SCD2_INSERTUPDATE_FALLBACK_MODE with a value greater than zero (0), before running your Pipeline. |

Snap Type

The ELT SCD2 Snap is a write-type Snap that writes SCD2 data from a source location to a supported CDW destination.

Prerequisites

Valid accounts and access permissions to connect to the following:

Source: AWS S3, Azure Cloud Storage, or Google Cloud Storage.

Target: Snowflake, Redshift, Azure Synapse, Databricks Lakehouse Platform, or BigQuery.

Limitation

ELT Snap Pack does not support Legacy SQL dialect of Google BigQuery. We recommend that you use only the BigQuery's Standard SQL dialect in this Snap. |

Known Issues

|

When using the ELT SCD2 Snap to load your source data into a Redshift target instance, the Snap fails if you use constant values such as ‘2022-01-01’ or SQL expressions such as GETDATE() and current_time() that evaluate to constant values instead of the target table column names in the Target Table Sort Fields. |

In the case of Azure Synapse, if you are configuring the Start_Date column as a Target Table Natural Key, the Snap fails in each of the following scenarios:

|

|

|

Snap Views

Type | Format | Number of Views | Examples of Upstream and Downstream Snaps | Description |

|---|---|---|---|---|

Input | Document |

|

| A document containing an SQL query that yields the historization data to load. |

Output | Document |

|

| A document containing the status of the SCD2 Load operation along with the SQL query that you can use in downstream ELT Snaps if any. |

Error | Error handling is a generic way to handle errors without losing data or failing the Snap execution. You can handle the errors that the Snap might encounter when running the Pipeline by choosing one of the following options from the When errors occur list under the Views tab:

Learn more about Error handling in Pipelines. | |||

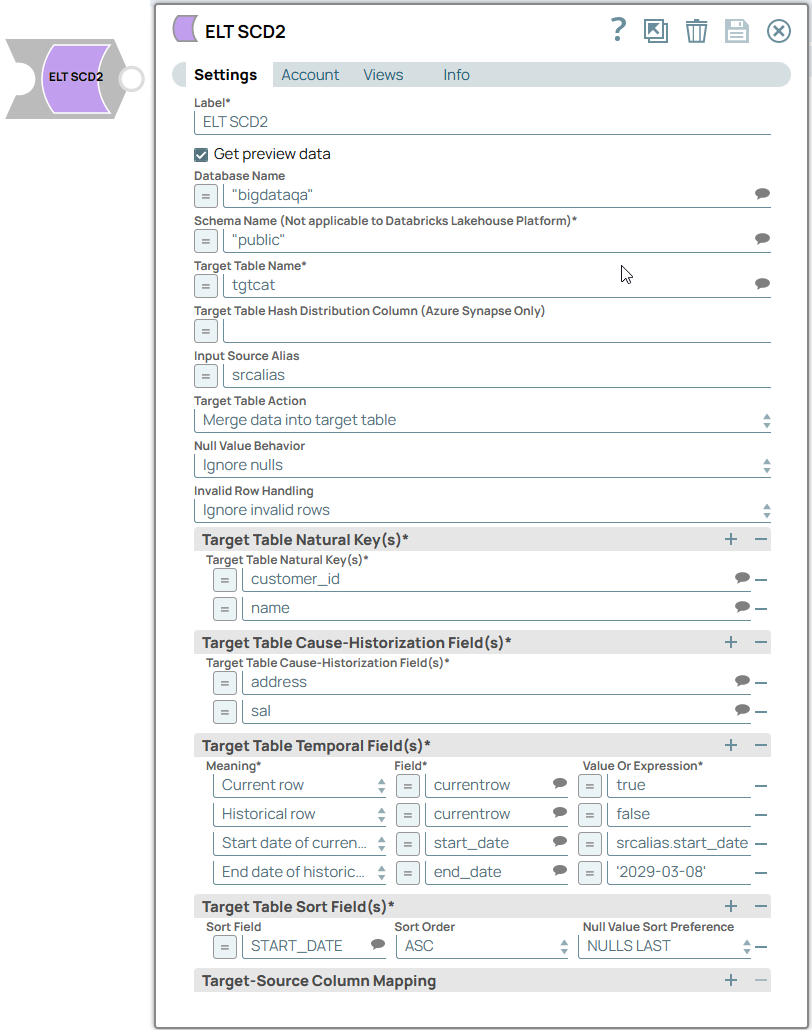

Snap Settings

|

Field Name | Field Type | Field Dependency | Description | |||

|---|---|---|---|---|---|---|

Label* Default Value: N/A | String | None. | Specify a name for the Snap. You can modify this to be more specific, especially if you have more than one of the same Snap in your Pipeline. | |||

Get preview data Default Value: Not selected | Checkbox | None. | Select this checkbox to include a preview of the query's output. The Snap performs limited execution and generates a data preview during Pipeline validation.

The number of records displayed in the preview (upon validation) is the smaller of the following:

| |||

Database Name Default Value: N/A | String/Expression | None. | Specify the name of the database in which the target table exists. Leave this field blank if you want to use the database name specified in the Default Database Name field of your Snap Account settings. | |||

Schema Name (Not applicable to Databricks Lakehouse Platform)* Default Value: N/A | String/Expression/Suggestion | None. | Specify the name of the database schema. Alternatively, choose from the suggested list of schemas associated with the database.

| |||

Target Table Name* Default Value: N/A | String/Expression/Suggestion | None. | Specify the name of the SCD2 table or view in which you want to load the data. Alternatively, choose from the suggested list of table names associated with the schema.

| |||

Input Source Alias Default Value: N/A | String/Expression | None. | Specify an alias name for your source data table. | |||

Target Table Action Default Value: Merge data into target table | Drop-down list | None. | Select the SCD2 action to perform on the target table. Available options are:

| |||

Table Columns | Field set | Target Table Action is Drop and Create table or Alter table. | This field set enables you to configure the schema of the target table. You can use this field set to create the target table or to add or drop columns from it. This field set consists of the following fields:

| |||

Column Default Value: N/A | String/Suggestion | Same as Table Columns. | Specify the name of the column that you want to load in the target table. You can also specify the columns to drop if you select the Alter table option in the Target Table Action field. You can also specify the columns to drop if you select the Alter table option in the Target Table Action field. | |||

Data Type Default Value: N/A | String | Same as Table Columns. | Specify the data type for the values in the specified column. | |||

Modifier Default Value: Add | Drop-down list | Target Table Action is Alter table. | Select whether you want to add/drop the specified column. Available options are:

| |||

Null Value Behavior Default Value: Honor nulls as distinct values | Drop-down list | None. | Select an option to specify how you want the Snap to treat rows with null values in Natural Key or Cause-Historization fields of the source table. The available options are:

Refer to the ELT SCD2 scenarios to learn more about the impact of the selection you make in this field. | |||

Invalid Row Handling Default Value: Ignore invalid rows Example: Insert duplicate invalid rows | Drop-down list | None. | Select an option to specify how you want the Snap to treat invalid rows, if any, in the source data. A row is considered invalid when it does not result in a new, valid, and coherent SCD2 entry/row in the target table. The available options are:

Refer to the ELT SCD2 scenarios to learn more about the impact of the selection you make in this field. | |||

Target Table Natural Key(s)* | This field set enables you to configure the names of fields that identify a unique row in the target table. The identity key cannot be used as the Natural key, since a current row and its historical rows cannot have the same identity value. Specify the value in the following field for each new row added in this field set.

| |||||

Target Table Natural Key(s) Default Value: N/A Example: CUSTOMER_ID | String/Expression/Suggestion | Specify the name of the Natural Key column from the target table. | ||||

Target Table Cause-Historization Field(s)* | This field set enables you to configure the names of fields where any change in value causes the historization of an existing row and the insertion of a new 'current' row. Specify the value in the following field for each new row added in this field set.

| |||||

Target Table Cause-Historization Field(s)* Default Value: N/A Example: LAST_ORDER_AMT | String/Expression/Suggestion | None. | Specify the name of the historization-causing column from the target table. | |||

Target Table Temporal Field(s)* | This field set enables you to configure the historical and updated information for the Cause-historization field. Click to add SCD fields. Specify the values in the following fields for each SCD field row in the field set.

| |||||

Meaning* Default Value: Five rows with one list item in each row Example: Start date of current row | Drop-down list | None. | Select one from the following six permitted values for this field in each row. At the minimum, ensure that you configure appropriate values for the first three meanings, only one of the end dates, and the flag in this list.

| |||

Field* Default Value: N/A Examples: Start_Date End_Date Current_Row Historical_Row | String/Expression/Suggestion | None. | Associate with each Meaning selected the field name from the target table that would contain the historical information.

See the Troubleshooting section in this document and ELT SCD2 Scenarios to ensure that you have configured these fields in your Snap's settings properly.

| |||

Value or Expression* Default Value: N/A Examples: For row fields: | String/Expression | None. | Configure the value for each of the fields selected in the Field field.

Here are a few more examples that you can use to define the expressions in this field based on your target CDW:

| |||

Target Table Sort Field(s)* | This field set enables you to sort the records in the target table, by one or more historization fields (for example, by natural key). This mapping is for columns that are not part of the Target Table Temporal Fields. Specify the values in the following fields for each sort order row.

| |||||

Sort Field Default Value: N/A Example: start_date GENDER | String/Expression/Suggestion | Specify the column by which to sort the target table data set. | ||||

Sort Order Default Value: ASC Example: DESC | Drop-down list | Choose either of the sort orders—ASC (ascending) or DESC (descending)—for the target table data set. | ||||

Null Value Sort Preference Default Value: NULLS LAST Example: NULLS FIRST | Drop-down list | Choose the position for the null values, if any, in the selected column while sorting—NULLS FIRST (at the beginning) or NULLS LAST (at the end).

| ||||

Target-Source Column Mapping | This field set enables you to define a one-to-one mapping between the columns in the source and target tables for inserting new rows into the target table. This field set contains the following fields:

| |||||

Target Table Column Name Default Value: N/A | String/Expression/Suggestion | None. | Choose a column from the target table's schema to map with a column from the source data set (schema).

| |||

Source Column Mapping Expression Default Value: N/A | String/Expression | None. | Choose a column from the source data set (schema) that corresponds to the respective column in the target table's schema. You can alternatively specify an expression for the source column name.

| |||

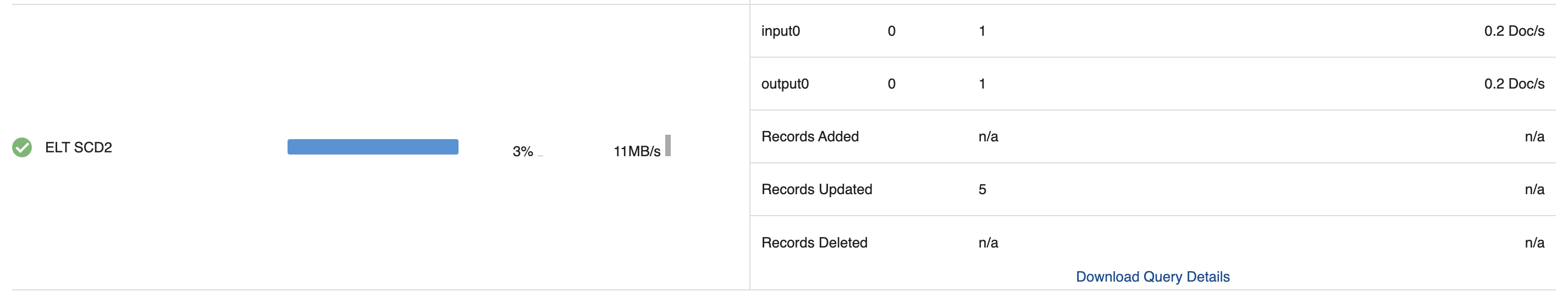

Pipeline Execution Statistics

As a Pipeline executes, the Snap shows the following statistics updating periodically. You can monitor the progress of the Pipeline as each Snap performs execution.

Records Added

Records Updated

Records Deleted

You can view more information by clicking the Download Query Details link. This downloads a JSON file that contains the count of rejected records besides the above counts.

The statistics are also available in the output view of the child ELT Pipeline. |

Troubleshooting

|

|

|

Examples

Loading Historical Data From an S3 Bucket to a Snowflake Table

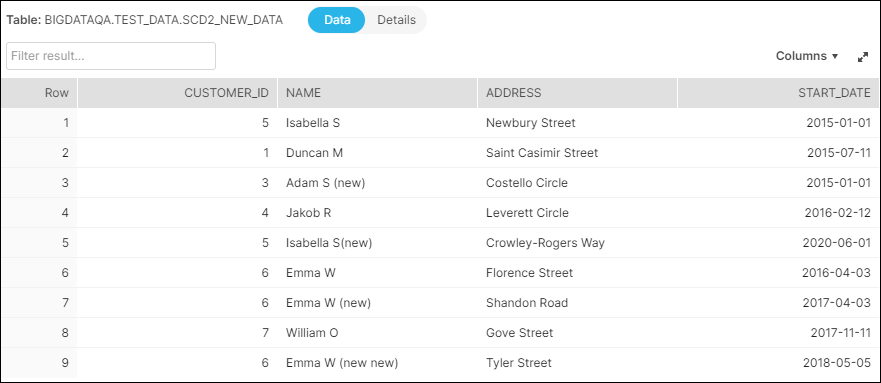

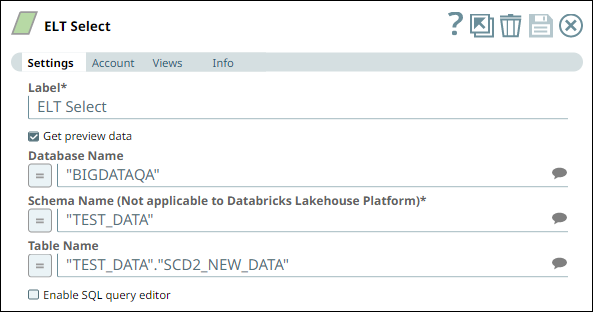

The following Pipeline is designed to view and capture Type 2 SCD data from a folder in S3 bucket and load it into the target table in Snowflake database.

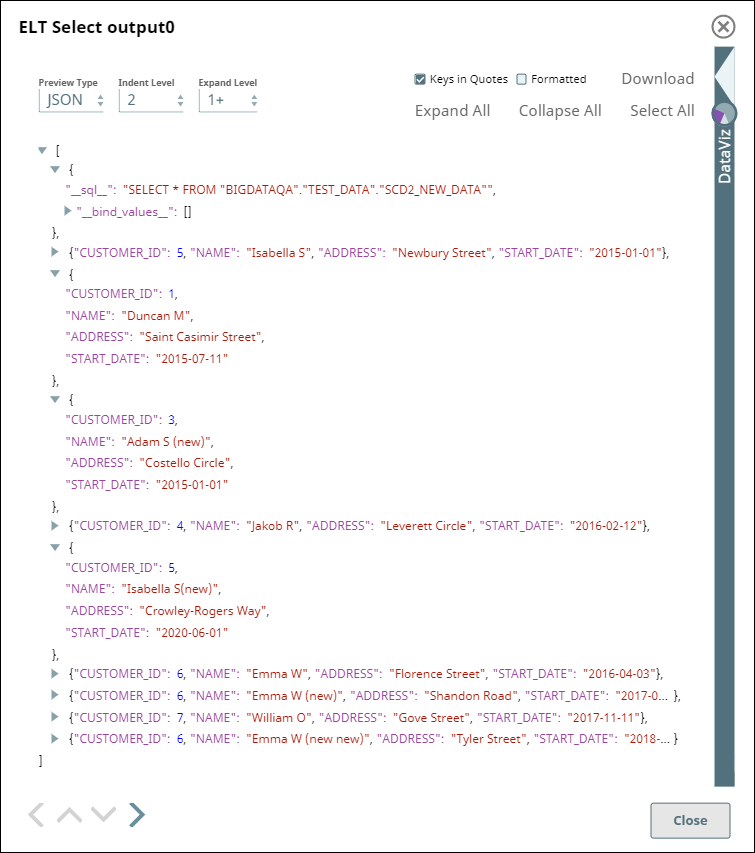

We configure an ELT Select Snap to capture SCD2 data from the source table SCD2_NEW_DATA in S3. The image below depicts the data available in the source table.

ELT Select Snap |

|---|

|

Snap Output |

|

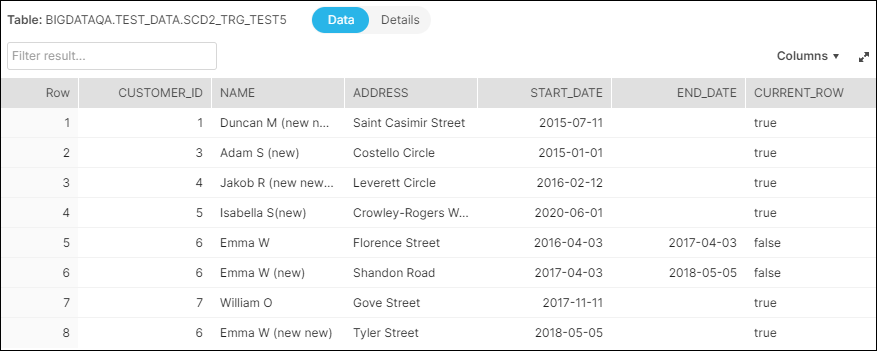

We use this Snap's output as the input to an ELT SCD2 Snap and configure this Snap to update the table SCD2_TRG_TEST5 residing in the Snowflake database.

The image below depicts the data available in the target table, before the data load.

Existing Data in Target Table |

|---|

|

ELT SCD2 Snap |

.png?version=5&modificationDate=1683730806625&cacheVersion=1&api=v2) |

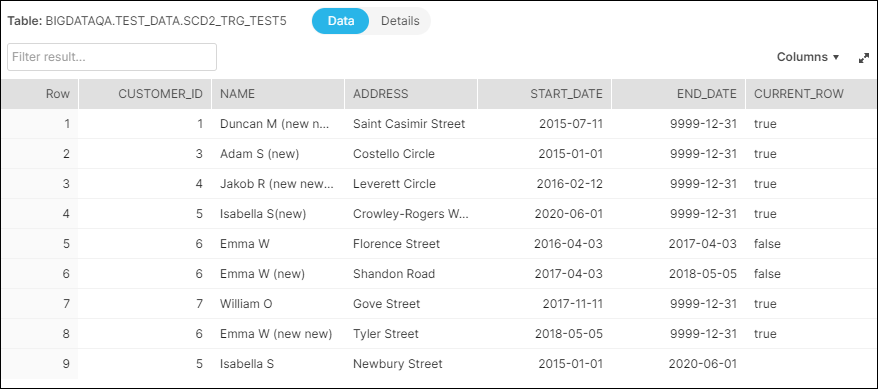

Target Table after SCD2 operation |

|

Notice the difference in the number of rows and the values in the END_DATE and the CURRENT_ROW columns. There is only one row with CURRENT_ROW = true in the updated table for each unique CUSTOMER_ID.

Downloads

Important Steps to Successfully Reuse Pipelines

|

Edit the Excerpt Include macro below to link to the Snap Pack page for this Snap page. Ensure that the heading Snap Pack History is not within the Snap Pack's history Excerpt.

Snap Pack History

Refer to the Snap Pack History table in ELT Snap Pack.

Related Content

Provide links to the endpoint's official documentation, any relevant or related internal documentation, and any other links you have referred to on this page. Care should be taken when referencing external sites/documentation that it is an official site/documentation. Do not refer to forums such as stackoverflow.