
Overview

Use the HDFS ZipFile Read Snap to extract and read archive files in HDFS directories and produce a stream of unzipped documents in the output.

For the HDFS protocol, use a SnapLogic on-premises Groundplex. Also, ensure that the instance is within the Hadoop cluster and that SSH authentication is established.

This Snap supports the HDFS 2.4.0 and ABFS (Azure Data Lake Storage Gen2) protocols.
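As a rough mental model, the Snap opens each ZIP file and emits the unzipped entries as binary documents. The following Python sketch models that behavior with the standard `zipfile` module. It is not SnapLogic's implementation: it reads an archive from in-memory bytes rather than from HDFS or ABFS, and `iter_unzipped_documents` is a hypothetical name.

```python
import io
import zipfile
from typing import Iterator, Tuple


def iter_unzipped_documents(zip_bytes: bytes) -> Iterator[Tuple[str, bytes]]:
    """Yield (entry_name, content) for every file entry in a ZIP archive.

    Hypothetical helper: a conceptual model of the Snap's output stream,
    reading from in-memory bytes instead of an HDFS or ABFS directory.
    """
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for info in archive.infolist():
            if info.is_dir():  # directory entries carry no file content
                continue
            yield info.filename, archive.read(info)


if __name__ == "__main__":
    # Build a small archive in memory so the example is self-contained.
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("foo.txt", "hello")
        archive.writestr("sub/bar.csv", "a,b\n1,2\n")
    for name, data in iter_unzipped_documents(buffer.getvalue()):
        print(name, len(data))
```

Entry names keep any subdirectories inside the archive (for example, `sub/bar.csv`), which matches the note on content-location headers later on this page.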

Expected Input and Output

- Expected Input: Documents containing information that identifies the directory and ZIP files that must be read.

- Expected Output: A binary stream containing unzipped documents from the specified ZIP files.

- Expected Upstream Snaps: Required. Any Snap that offers a list of ZIP files in its output view. Examples: HDFS ZipFile Writer, ZipFile Read.

- Expected Downstream Snaps: Any Snap that accepts binary data in its input view. Examples: CSV Parser, HDFS Writer, File Writer.

Prerequisites

The user executing the Snap must have Read permissions on the relevant Hadoop directory.

Configuring Accounts

This Snap uses account references created on the Accounts page of SnapLogic Manager to handle access to this endpoint. See Configuring Hadoop Accounts for information on setting up this type of account.

Configuring Views

| View | Description |
|---|---|
| Input | This Snap has at most one document input view. |
| Output | This Snap has exactly one binary output view. |
| Error | This Snap has at most one document error view. |

Troubleshooting

None at this time.

Limitations and Known Issues

None at this time.

Modes

- Ultra Pipelines: Works in Ultra Pipelines.

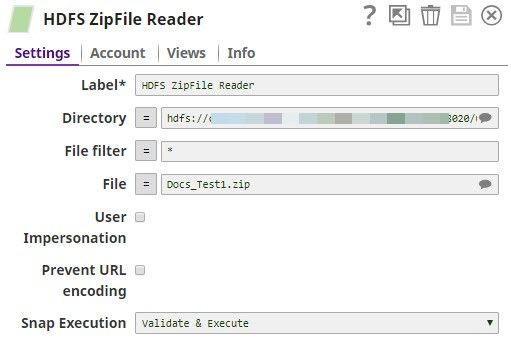

Snap Settings

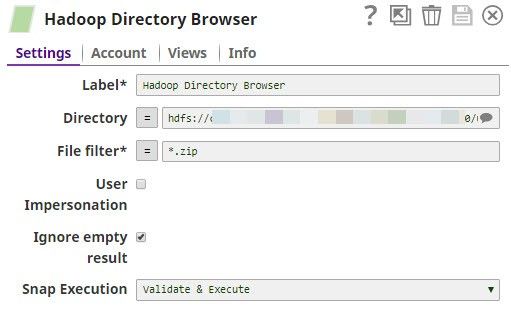

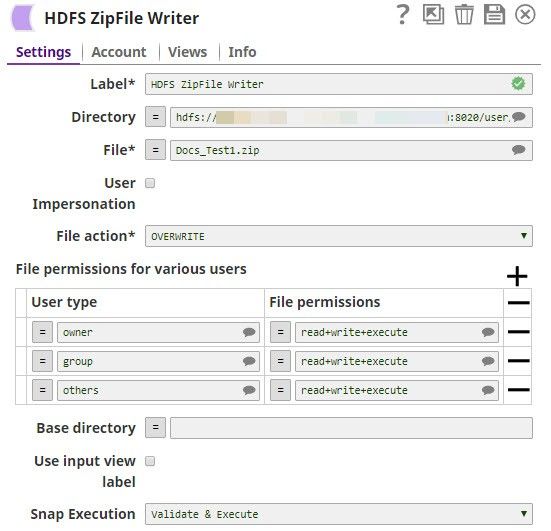

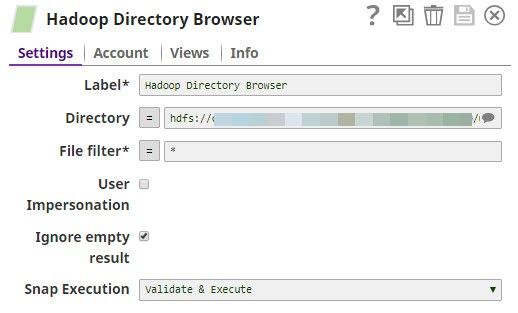

| Parameter | Description |
|---|---|
| Label | Required. The name for the Snap. Modify this to be more specific, especially if there is more than one of the same Snap in the pipeline. |
| Directory | The URL for the data source (directory). The Snap supports both HDFS and ABFS(S) protocols.<br>Syntax for a typical HDFS URL:<br>Syntax for typical ABFS and ABFSS URLs:<br>When you use the ABFS protocol to connect to an endpoint, the account name and endpoint details provided in the URL override the corresponding values in the Account Settings fields.<br>Default value: [None] |
| File Filter | |
| File | The relative path and name of the file that must be read. Example:<br>Default value: [None] |
| User Impersonation | |
| Prevent URL Encoding | |
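The override rule described for the Directory setting can be illustrated with a short Python sketch. It assumes the standard Hadoop ABFS URL form `abfs[s]://<container>@<account>.<endpoint>/<path>`. The function names and the `account_name` and `endpoint` keys are hypothetical and do not correspond to SnapLogic's account fields or internals.

```python
from urllib.parse import urlsplit


def parse_abfs_url(url: str) -> dict:
    """Split an ABFS(S) URL into container, account name, endpoint, and path.

    Assumes the Hadoop ABFS form: abfs[s]://<container>@<account>.<endpoint>/<path>
    """
    parts = urlsplit(url)
    if parts.scheme not in ("abfs", "abfss") or not parts.username or not parts.hostname:
        raise ValueError(f"not an ABFS(S) URL: {url}")
    # The first host label is the storage account; the rest is the endpoint.
    account, _, endpoint = parts.hostname.partition(".")
    return {
        "container": parts.username,
        "account_name": account,
        "endpoint": endpoint,
        "path": parts.path,
    }


def effective_account_settings(account_settings: dict, url: str) -> dict:
    """Return account settings with the URL's account name and endpoint taking precedence."""
    parsed = parse_abfs_url(url)
    merged = dict(account_settings)  # copy so the caller's dict is not mutated
    merged["account_name"] = parsed["account_name"]
    merged["endpoint"] = parsed["endpoint"]
    return merged
```

Only the account name and endpoint are overridden here; any other account values (such as credentials) pass through unchanged, which mirrors the rule as stated for the Directory setting.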

The content-location header of binary documents sent to the HDFS ZipFile Writer is the name of the entry within the ZIP file (for example, foo.txt). It does not include the base directory, although it can include subdirectories. In contrast, the content-location header of binary documents output by the HDFS ZipFile Reader combines the name of the ZIP file, the base directory, and the content location originally provided to the Writer. As a result, although each Snap works well on its own, you currently cannot chain Reader > Writer > Reader in a pipeline unless intermediate Snaps rewrite the binary document header.
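The header rewrite that an intermediate step would need can be sketched in Python. This is a minimal sketch under a loud assumption: the exact layout of the Reader's content-location value is not specified here, so the helper takes the leading part (ZIP file name plus base directory) as an input. `to_writer_content_location` is a hypothetical name, not a SnapLogic function.

```python
def to_writer_content_location(reader_location: str, prefix: str) -> str:
    """Strip a known prefix from a Reader content-location header.

    Hypothetical helper: the Reader header layout is an assumption, so the
    caller supplies whatever prefix precedes the entry name in their pipeline.
    """
    if not reader_location.startswith(prefix):
        raise ValueError(f"{reader_location!r} does not start with {prefix!r}")
    # Keep subdirectories inside the archive, but drop any leading separator.
    return reader_location[len(prefix):].lstrip("/")
```

Raising on a mismatched prefix, rather than passing the value through, keeps a wrong assumption about the header layout from silently producing misnamed ZIP entries.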

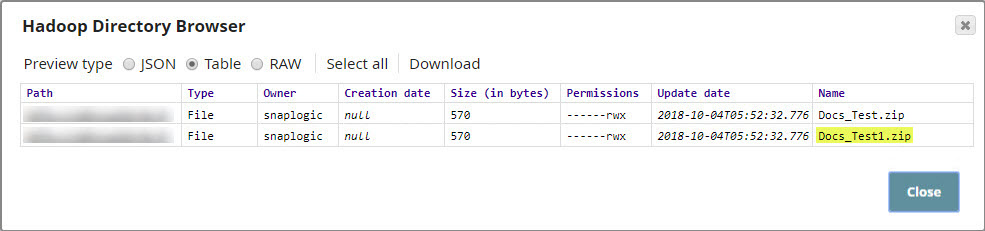

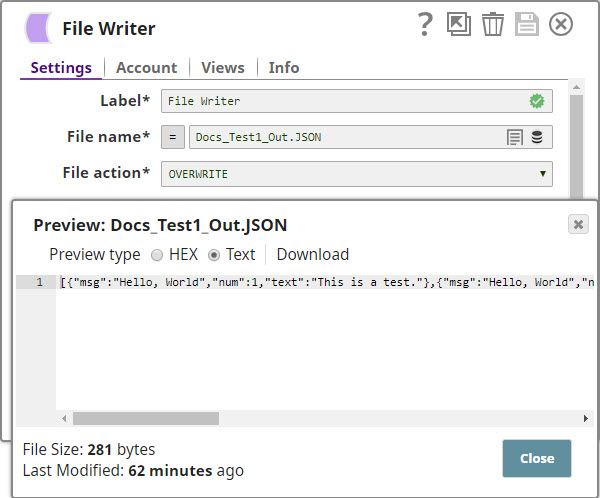

Examples

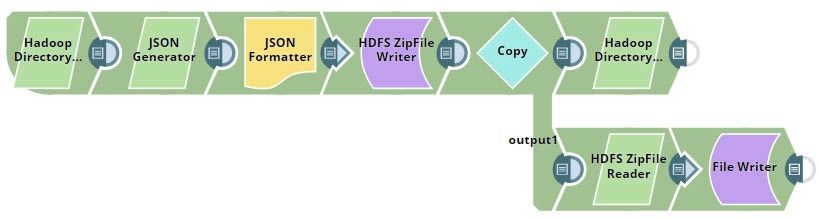

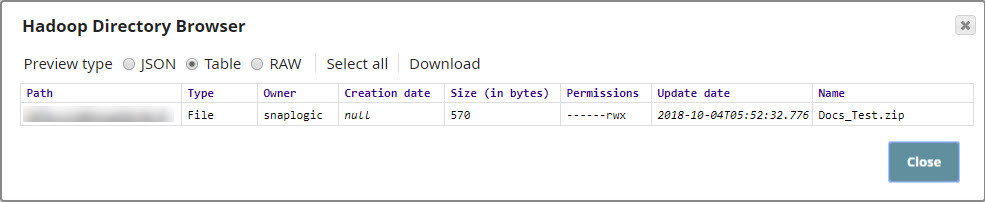

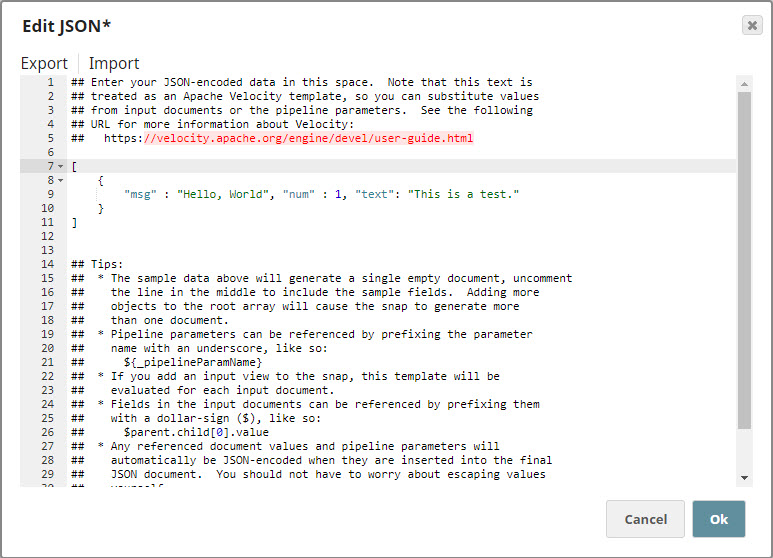

Writing and Reading a ZIP File in HDFS

The first part of this example demonstrates how you can use the HDFS ZipFile Writer Snap to zip and write a new file into HDFS. The second part demonstrates how you can unzip and check the contents of the newly created ZIP file. You can download this pipeline from the Downloads section below.



Downloads