
Overview

You can use this Snap to execute SQL queries in the target database—Snowflake, Redshift, Azure Synapse, Databricks Lakehouse Platform (DLP), or BigQuery. You can run the following types of queries using this Snap:

- Data Definition Language (DDL) queries
- Data Manipulation Language (DML) queries
- Data Control Language (DCL) queries

Prerequisites

Valid accounts and access permissions to connect to the following:

- Source: AWS S3, Azure Cloud Storage, or Google Cloud Storage
- Target: Snowflake, Redshift, Azure Synapse, DLP, or BigQuery

| Note |

|---|

If you want to use the COPY INTO command to load data into the target database, you must pass (expose) these account credentials inside the SQL statement. We therefore recommend that you consider using the ELT Load Snap as an alternative. |

Limitations

This Snap does not support multi-statement transaction rollback of any of the DDL, DCL or DML statements specified.

Each statement is auto-committed upon successful execution. In the event of a failure, the Snap can roll back only the updates corresponding to the failed statement. All previous statements that ran successfully during that Pipeline execution are not rolled back.
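The auto-commit behavior can be pictured with two rows in the SQL Statements field set. This is a minimal sketch: the table and column names are hypothetical, and the second statement is assumed to fail because its table does not exist.

```sql
-- Hypothetical table names, for illustration only.
-- Row 1: succeeds and is committed immediately.
INSERT INTO sales_backup SELECT * FROM sales;

-- Row 2: assumed to fail because the table does not exist.
-- Only this statement's changes are rolled back; the rows inserted
-- by row 1 remain in sales_backup.
UPDATE sales_archive_missing SET status = 'archived';
```

If the statements must succeed or fail together, the cleanup of earlier statements has to be handled separately, because the Snap does not undo them.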

You cannot run Data Query Language (DQL) queries using this Snap. For example, SELECT and WITH query constructs.

Use this Snap either at the beginning or at the end of the Pipeline.

This Snap executes the SQL query only during Pipeline Execution. It does NOT perform any action (including showing a preview) during Pipeline validation.
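The DQL restriction listed above can be illustrated with a short sketch. The table names are hypothetical. That a SELECT embedded in a DML statement is acceptable is inferred from the INSERT ... SELECT statement in this page's sample queries, not from a separate statement of the rule.

```sql
-- Hypothetical table names, for illustration only.

-- Standalone DQL statements such as these are not allowed in this Snap:
-- SELECT * FROM orders;
-- WITH recent AS (SELECT * FROM orders) SELECT * FROM recent;

-- A DML statement that reads from another table, similar to the
-- INSERT ... SELECT sample query on this page:
INSERT INTO orders_backup SELECT * FROM orders;
```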


Known Issues

When you specify an SQL statement in the SQL Statement Editor of an ELT Snap as an expression, the dynamic validation for the expression displays inline errors when there is more than one incoming document and without the '__sql__' key to the current Snap, when you select the Get Preview Data checkbox in the previous Snap, and when Preview Document Count in your user settings is set to a value greater than 1.

To prevent this error and similar ones, do not select the Get Preview Data checkbox in the previous Snap, set the Preview Document Count in your user settings to 1, or append the condition where 1 = 0 to the SQL statement with the Get Preview Data checkbox selected.

Due to an issue with BigQuery table schema management (the time travel feature), an ALTER TABLE action (Add or Update column) that you attempt after deleting a column (DROP action) in your BigQuery target table causes the table to break and the Snap to fail.

As a workaround, you can consider either avoiding ALTER TABLE actions on your BigQuery instance or creating (CREATE) a temporary copy of your table and deleting (DROP) it after you use it.

- When your Databricks Lakehouse Platform instance uses Databricks Runtime Version 8.4 or lower, ELT operations involving large amounts of data might fail due to the smaller memory capacity of 536870912 bytes (512MB) allocated by default. This issue does not occur if you are using Databricks Runtime Version 9.0.

- ELT Pipelines created prior to 4.24 GA release using one or more of the ELT Insert Select, ELT Merge Into, ELT Load, and ELT Execute Snaps may fail to show expected preview data due to a common change made across the Snap Pack for the current release (4.26 GA). In such a scenario, replace the Snap in your Pipeline with the same Snap from the Asset Palette and configure the Snap's Settings again.

The Snap’s preview data (during validation) contains a value with precision higher than that of the actual floating point value (float data type) stored in the Delta. For example, 24.123404659344 instead of 24.1234. However, the Snap reflects the exact values during Pipeline executions.


Snap Input and Output

| Input/Output | Type of View | Number of Views | Examples of Upstream and Downstream Snaps | Description |
|---|---|---|---|---|
| Input | Document | | | An upstream Snap is not mandatory. Use the input view to connect the Snap as the terminating Snap in the Pipeline. |
| Output | Document | | | A downstream Snap is not mandatory. Use the output view to connect the Snap as the first Snap in the Pipeline. |

Snap Settings


| Field Name | Type | Field Dependency | Description |
|---|---|---|---|
| Label* | String | None. | The name for the Snap. You can modify this to be more specific, especially if you have more than one of the same Snap in your Pipeline. Default Value: NA Example: ELT Execute for SF |
| SQL Statements* | Fieldset | None. | Use this field set to define your SQL statements, one in each row. Click to add a new row. You can add as many SQL statements as you need. |
| SQL Statement Editor* | String/Expression | None. | Enter the SQL statement to run in this field. The SQL statement must follow the SQL syntax stipulated by the target database (Snowflake, Redshift, Azure Synapse, Databricks Lakehouse Platform, or BigQuery). Default Value: NA Example: drop table base_01_oldcodes; |

Pipeline Execution Statistics

As a Pipeline executes, the Snap shows the following statistics, which update periodically. You can monitor the progress of the Pipeline as each Snap performs its action. In case of DLP, the Snap captures and displays these details for DML statements only.

- Records Added
- Records Updated
- Records Deleted

You can view more information as follows, by clicking the Download Query Details link to download a JSON file. In case of DLP, the Snap captures and depicts additional information (extraStats) on DML statement executions.

| Info |

|---|

The statistics are also available in the output view of the child ELT Pipeline. |

Troubleshooting

| Error | Reason | Resolution |
|---|---|---|
| Failure: DQL statements are not allowed. | The ELT Execute Snap does not support Data Query Language (DQL) and hence statements containing | Remove any DQL statements (containing |
| Multiple source rows are attempting to update or delete the same target row. | When you configure an ELT Execute Snap with a MERGE INTO statement that performs an Update or Delete operation on a Databricks Lakehouse Platform cluster, it may return an error if multiple source rows attempt to update or delete the same target row. | To prevent such errors, preprocess the source table so that it has only unique rows. |
| No matching signature for operator = for argument types: INT64. (Target CDW: BigQuery) | BigQuery treats the values of Pipeline parameters as String, by default. Passing a value with any other data type causes this error (INT64 in this example). | Cast any non-String Pipeline parameter used in your SQL statement to its target data type for the Snap to work as expected. Ex: Consider using |
| Keyword RANGE is not acceptable as a column name. (CDW: Databricks Lakehouse Platform) | This can happen with any reserved keyword if it is used as a column/field name in the table to be created. | Ensure that you enclose such column names (reserved keywords) between backticks (`). For example: `RANGE` STRING. |
| [Simba][SparkJDBCDriver](500051) ERROR processing query/statement. Error Code: 0 Cannot create table (' (Target CDW: Databricks Lakehouse Platform) | The specified location contains one of the following: So, the Snap/Pipeline cannot overwrite this table with the target table as needed. | Ensure that you take appropriate action (mentioned below) on the existing table before running your Pipeline again (to create another Delta table at this location). Move or drop the existing table from the schema manually using one of the following commands: Access the DBFS through a terminal and run: OR Use a Python notebook and run: |
| [Simba][SparkJDBCDriver](500051) ERROR processing query/statement. Error Code: 0 Cannot create table (' (CDW: Databricks Lakehouse Platform) | A non-Delta table that currently exists is corrupted and needs to be dropped from the schema before creating a Delta-formatted table. However, this corrupted table can only be dropped manually, by accessing the DBFS through a terminal. The Pipeline cannot perform this operation. | Drop the corrupted table and then try creating the new table in Delta format (using the Pipeline). To drop the corrupted table, from the terminal, access the DBFS and run the following command: |
| Syntax error when database/schema/table name contains a hyphen (-) such as in (CDW: Azure Synapse) | Azure Synapse expects any object name containing hyphens to be enclosed between double quotes as in "<object-name>". | Ensure that you use double quotes for every object name that contains a hyphen when your target database is Azure Synapse. For example: default."schema-1"."order-details". |
| Cannot execute bigger SQL queries. (CDW: Azure Synapse) | The SQL query should not exceed the maximum allowed limit of 32500 Literals per Query. | Execute two or more SQL queries in place of one bigger query. See http://aka.ms/dwsoftlimits for more details. |
| Fail to populate 1000 rows. Not enough resources for query planning - too many subqueries or query is too complex. (CDW: BigQuery) | The Snap fails to execute if the query is too complex. | Split one large SQL query into two or more simple ones or request a higher quota limit from Google BigQuery. |
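Three of the resolutions in the table above are easier to follow with a statement in hand. The sketch below is illustrative only: the table, dataset, and column names are hypothetical, each statement targets a different database as marked in its comment, and the string literal in the BigQuery statement merely stands in for a Pipeline parameter value.

```sql
-- Databricks Lakehouse Platform: enclose a reserved keyword that is
-- used as a column name in backticks.
CREATE TABLE sample_ranges (`RANGE` STRING);

-- Azure Synapse: enclose object names that contain hyphens in double quotes.
TRUNCATE TABLE "schema-1"."order-details";

-- BigQuery: cast a String value to the column's data type before comparing.
-- The literal '1001' stands in for a Pipeline parameter value.
DELETE FROM sample_dataset.orders WHERE order_id = CAST('1001' AS INT64);
```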

Sample Queries for the ELT Execute Snap

    CREATE OR REPLACE WAREHOUSE me_wh WITH warehouse_size='X-LARGE';
    CREATE OR REPLACE TABLE "TEST_DATA".NEW_DATA(VARCHAR_DATA VARCHAR(10));
    CREATE OR REPLACE TABLE "TEST_DATA".DT_EXECUTE_01(VARCHAR_DATA VARCHAR(100),TIME_DATA TIME,FLOAT_DATA FLOAT,BOOLEAN_DATA BOOLEAN,NUMBER_DATA NUMBER(38,0),DATE_DATA DATE);
    TRUNCATE TABLE IF EXISTS "public".simple_data_02;
    INSERT OVERWRITE INTO "BIGDATAQA"."TEST_DATA"."OUT_ELT_EXECUTE_SF_003" SELECT * FROM ( SELECT * FROM "BIGDATAQA"."TEST_DATA"."DT_EXECUTE_03" );

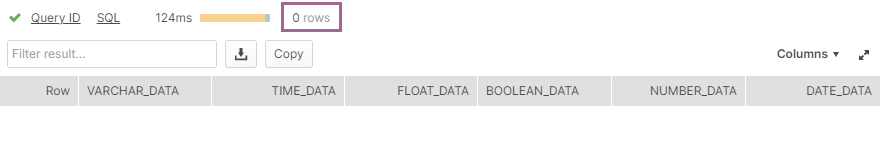

Example 1: Updating a Target Table Based on Updates to Another Table

The following Pipeline periodically updates a target (backup) table, OUT_ELT_EXECUTE_SF_003, based on the updates to another table, DT_EXECUTE_03 (source). These tables are present in a Snowflake database, and we use data views from this database to present the updates that the Pipeline makes in the target table.

There are two steps to achieve this functionality using the ELT Execute Snap:

Create a Pipeline with only the ELT Execute Snap for performing the periodic update.

Create a Scheduled Task from this Pipeline to trigger a job at specific times of the day, as needed. See Tasks Page for information on creating Scheduled Tasks from Pipelines.

This task regularly looks into the DT_EXECUTE_03 table for updates and inserts the latest data from this table into the target (backup) table.

Before we create the Pipeline:

| Source Table: DT_EXECUTE_03 |

|---|

| Target Table: OUT_ELT_EXECUTE_SF_003 |

We configure the ELT Execute Snap to run a DML query, as follows.
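The Snap configuration screenshot is not reproduced here. A statement along the following lines, taken from the sample queries on this page, would perform the update described; treat it as a sketch and not necessarily the exact statement used in the original Pipeline.

```sql
-- Snowflake: replace the contents of the target (backup) table with
-- the current contents of the source table.
INSERT OVERWRITE INTO "BIGDATAQA"."TEST_DATA"."OUT_ELT_EXECUTE_SF_003"
SELECT * FROM (
    SELECT * FROM "BIGDATAQA"."TEST_DATA"."DT_EXECUTE_03"
);
```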

Once we save this Pipeline and create the Scheduled Task, the task runs as scheduled. The ELT Execute Snap then copies the data from the source table and inserts it into the target (backup) table.

After the Scheduled Task/Pipeline is run:

| Target Table: OUT_ELT_EXECUTE_SF_003 |

|---|

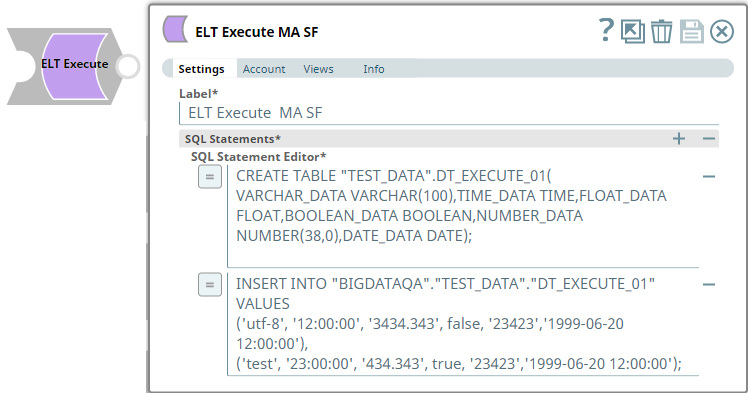

Example 2: Using one ELT Execute Snap to Create and Fill a Table

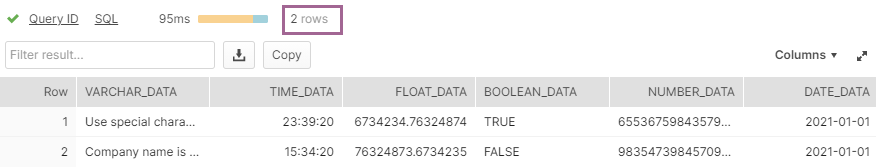

In this example Pipeline, we create a new table in the Redshift database and fill data into this table using an ELT Execute Snap. We later read the data from this table using an ELT Select Snap.

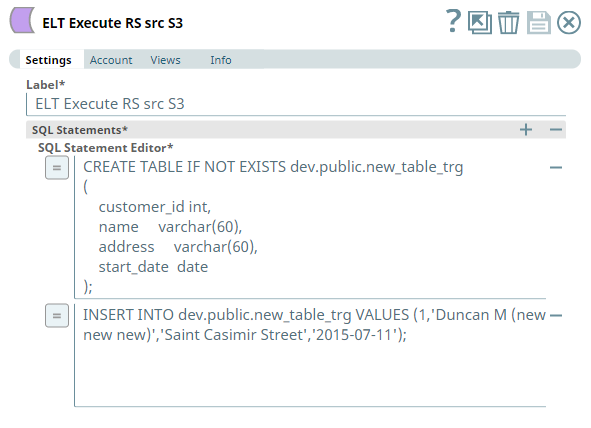

Let us observe the configuration of the ELT Execute Snap (first Snap in the above Pipeline).

| ELT Execute Snap |

|---|

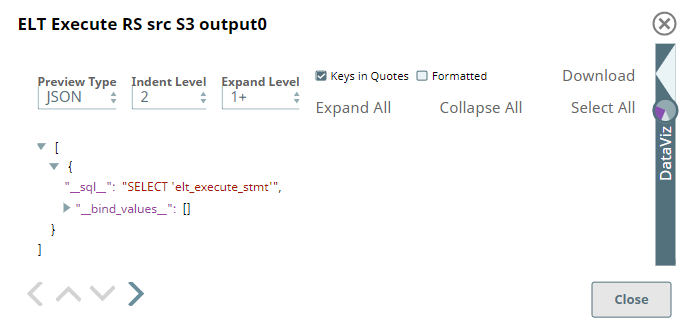

| Snap Output |

We have added two SQL statements to the SQL Statements field set: one for creating/overwriting a table and another for inserting a row into the same table. The ELT Execute Snap does not have a data preview except for the placeholder SQL statement that indicates the Snap is validated successfully. The Snap executes the SQL queries in real time when we run the Pipeline.
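The exact statements appear only in the screenshot, so the following is a hedged sketch of what such a configuration could look like on Redshift. The table name dev.public.new_table_trg comes from this example; the column names and values are hypothetical. Because Redshift has no CREATE OR REPLACE TABLE, the create/overwrite step is split here into a DROP and a CREATE, which is an assumption about how the overwrite would be expressed.

```sql
-- Hypothetical columns and values, for illustration only.
-- Rows 1 and 2: create the table, replacing any earlier version of it.
DROP TABLE IF EXISTS dev.public.new_table_trg;
CREATE TABLE dev.public.new_table_trg (
    id   INTEGER,
    name VARCHAR(50)
);

-- Row 3: insert a single row into the new table.
INSERT INTO dev.public.new_table_trg (id, name) VALUES (1, 'sample');
```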

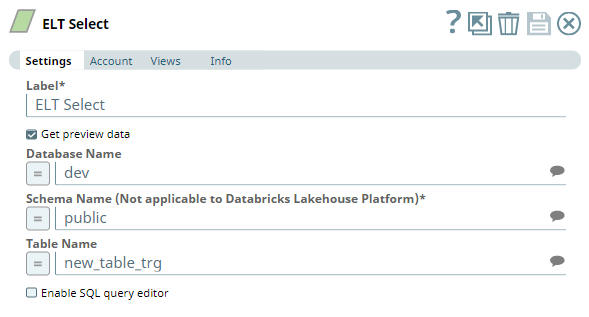

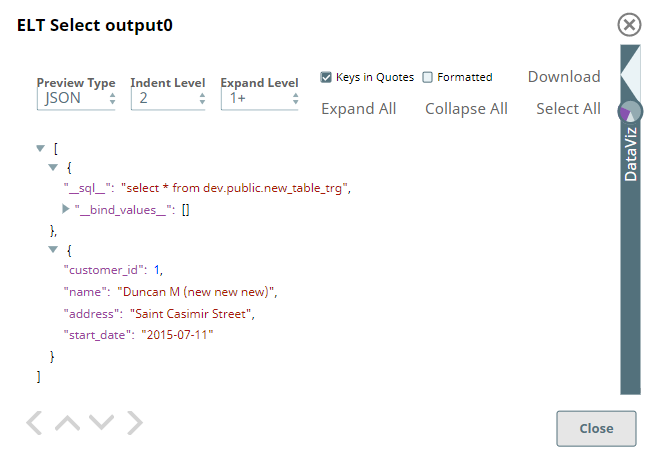

We connect an ELT Select Snap to the ELT Execute Snap to read the data from the newly-created table in the Redshift database. In this Snap:

- We use the same ELT Database account that we use for the previous Snap.
- We define or select the values for the database, schema, and table name to identify the table that the previous Snap is configured to create.
- Alternatively, we can enable the SQL query editor and include the select * from dev.public.new_table_trg; statement.

It is also important to note here that we cannot run this DQL query using the ELT Execute Snap.

| ELT Select Snap |

|---|

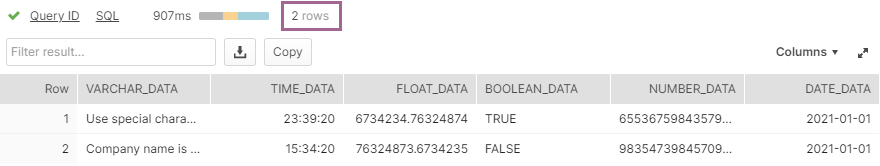

| Snap Output |
