
...

To solve this big data problem, we have designed Pipelines that run on Snowflake, Microsoft Azure Databricks Lakehouse Platform, AWS Redshift, Microsoft Azure Synapse, and Google BigQuery.

Example Pipelines - Snowflake

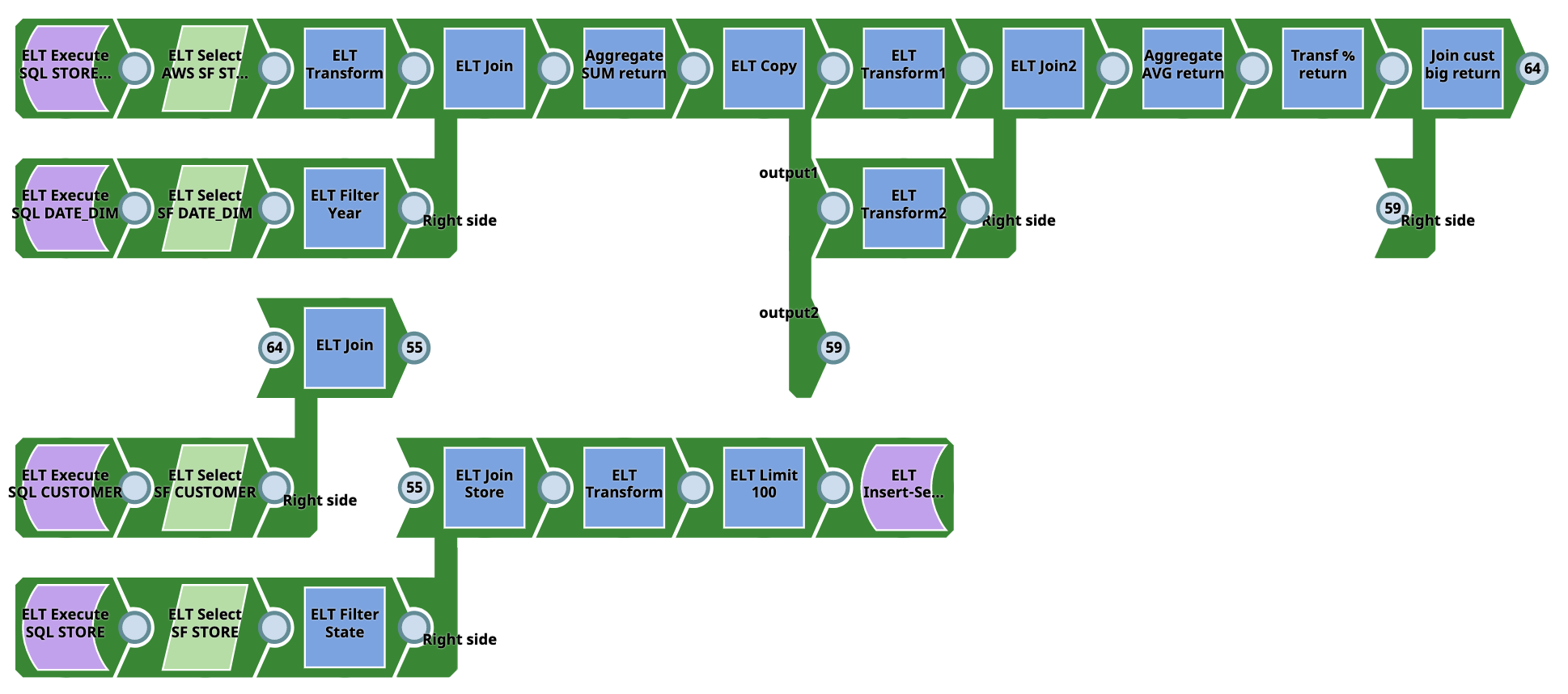

Pipeline 1 (SQL to SF)


This Pipeline does not require source tables, because they are created on Snowflake on the fly from SQL. Output target tables are also created on Snowflake. The Pipeline writes from the Snowflake database to the Snowflake database. Users do not need Snowflake accounts, but they do need SQL experience.

You can download the Pipeline from here.
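To make the "SQL to target" flow concrete, here is a minimal Python sketch that uses the standard-library `sqlite3` module as a local stand-in for Snowflake. It is not how the downloadable Pipeline is built; the function name, table names, columns, and values are hypothetical. It only shows the two steps described above: a source table is created on the fly from SQL, and the output target table is produced by a transformation that runs inside the same database.

```python
import sqlite3


def run_sql_to_target(conn: sqlite3.Connection) -> list[tuple]:
    """Hypothetical ELT sketch: SQLite stands in for the cloud data warehouse."""
    cur = conn.cursor()
    # Step 1: create the source table "on the fly" from SQL, so no pre-existing
    # source table or raw file is needed. Names and values are made up.
    cur.execute(
        "CREATE TABLE src_orders AS "
        "SELECT 1 AS order_id, 'EAST' AS region, 120.0 AS amount "
        "UNION ALL SELECT 2, 'WEST', 80.0 "
        "UNION ALL SELECT 3, 'EAST', 45.5"
    )
    # Step 2: transform inside the database (the "T" in ELT) and write the
    # result to an output target table in the same database.
    cur.execute(
        "CREATE TABLE tgt_region_totals AS "
        "SELECT region, SUM(amount) AS total FROM src_orders GROUP BY region"
    )
    conn.commit()
    return cur.execute(
        "SELECT region, total FROM tgt_region_totals ORDER BY region"
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(run_sql_to_target(conn))  # [('EAST', 165.5), ('WEST', 80.0)]
    conn.close()
```

Because everything, including the source data, is expressed in SQL, this pattern suits demos and simple tasks, which matches the SQL experience requirement noted for this Pipeline.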

Pipeline 2 (Snowflake to Snowflake)

The Pipeline writes from the Snowflake database to the Snowflake database. However, the source tables must already be present in the Snowflake database before the Pipeline runs. An output target table is created on Snowflake. The Pipeline converts data from CSV to database tables and can be used for a wide variety of complex tasks. It requires a table schema setup and an AWS/Snowflake account. SQL experience is not required for this Pipeline.

You can download the Pipeline from here.
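Unlike Pipeline 1, this Pipeline expects its source tables to exist before execution. The Python sketch below illustrates a fail-fast pre-check of that requirement, again with `sqlite3` as a hypothetical local stand-in for Snowflake. The function names and the `SELECT DISTINCT` transformation are placeholders chosen for illustration, not what the downloadable Pipeline does.

```python
import sqlite3


def missing_tables(conn: sqlite3.Connection, required: list[str]) -> list[str]:
    """Return the names in `required` that do not exist as tables yet."""
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table'"
    ).fetchall()
    present = {name for (name,) in rows}
    return [name for name in required if name not in present]


def run_table_to_table(conn: sqlite3.Connection, source: str, target: str) -> int:
    """Build `target` from `source` inside the database; return its row count."""
    # Fail fast: this kind of Pipeline expects the source table to exist already.
    missing = missing_tables(conn, [source])
    if missing:
        raise RuntimeError(f"source table(s) not found: {missing}")
    # Table names cannot be bound as SQL parameters, so they are interpolated;
    # they are assumed to come from trusted configuration, not user input.
    # SELECT DISTINCT is a placeholder for the real transformation.
    conn.execute(f"CREATE TABLE {target} AS SELECT DISTINCT * FROM {source}")
    conn.commit()
    (count,) = conn.execute(f"SELECT COUNT(*) FROM {target}").fetchone()
    return count
```

Checking for the source tables up front gives a clear error message instead of a failure partway through the transformation.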

Pipeline 3 (Snowflake (S3 location) to Snowflake)

This Pipeline does not require source tables, because they are created on Snowflake on the fly using the ELT Load Snap, and the output target tables are also created on Snowflake. The Pipeline writes from the Snowflake database to the Snowflake database. The Pipeline converts data from CSV (S3 location) to database tables and can be used for a wide variety of complex tasks. It requires a table schema setup and an AWS/Azure account. SQL experience is not required for this Pipeline.

You can download the Pipeline from here.
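The "table schema setup" requirement can be illustrated with a short Python sketch: the schema is declared first, the target table is created from it, and the CSV rows are then loaded. This is only an analogy for what the ELT Load Snap does. Here `sqlite3` stands in for Snowflake, an in-memory string stands in for the CSV file in S3, and the schema, table name, and sample values are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical table schema (column name -> SQL type). As with the Pipeline,
# the schema has to be set up before the CSV data can be loaded.
STORE_SCHEMA = {"store_id": "INTEGER", "store_name": "TEXT", "city": "TEXT"}


def load_csv(conn: sqlite3.Connection, table: str, schema: dict, csv_file) -> int:
    """Create `table` from `schema`, insert rows from `csv_file`, return count."""
    columns = ", ".join(f"{name} {sql_type}" for name, sql_type in schema.items())
    conn.execute(f"CREATE TABLE IF NOT EXISTS {table} ({columns})")
    names = list(schema)
    # DictReader treats the first CSV line as the header row; a CSV that lacks
    # a column named in the schema raises KeyError.
    reader = csv.DictReader(csv_file)
    rows = [tuple(record[name] for name in names) for record in reader]
    placeholders = ", ".join("?" for _ in names)
    conn.executemany(
        f"INSERT INTO {table} ({', '.join(names)}) VALUES ({placeholders})", rows
    )
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    # In-memory text stands in for a CSV object in S3; the values are made up.
    sample = io.StringIO(
        "store_id,store_name,city\n1,Store A,Springfield\n2,Store B,Riverside\n"
    )
    conn = sqlite3.connect(":memory:")
    print(load_csv(conn, "store", STORE_SCHEMA, sample))  # 2
    conn.close()
```

CSV values arrive as text; declaring `store_id` as `INTEGER` lets SQLite's type affinity store it as an integer, which is one reason the schema is defined before loading.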

Example Pipelines - Microsoft Azure Databricks Lakehouse Platform

Pipeline 1 (SQL to DLP)

This Pipeline does not require source tables or raw data, because they are created on Microsoft Azure Databricks Lakehouse Platform on the fly from SQL. Output target tables are also created on Microsoft Azure Databricks Lakehouse Platform. The Pipeline writes from the Microsoft Azure Databricks Lakehouse Platform database to the Microsoft Azure Databricks Lakehouse Platform database. Users need SQL experience. It can be used only for ELT demos or simple tasks.

You can download the Pipeline from here.

Pipeline 2 (DLP to DLP)

The Pipeline writes from the Microsoft Azure Databricks Lakehouse Platform to the Microsoft Azure Databricks Lakehouse Platform. However, the source tables must be present in the Microsoft Azure Databricks Lakehouse Platform before execution. An output target table is created on Microsoft Azure Databricks Lakehouse Platform. SQL experience is not required. The Pipeline can be used for a wide variety of complex tasks.

You can download the Pipeline from here.

Example Pipelines - AWS Redshift

Pipeline 1 (SQL to RS)

This Pipeline does not require source tables or raw data, because they are created on AWS Redshift on the fly from SQL. The output target tables are also created on AWS Redshift. SQL experience is needed. It can be used only for ELT demos or simple tasks.

Pipeline 2 (S3 to RS)

This Pipeline does not require source tables, because they are created on AWS Redshift on the fly using the ELT Load Snap, and the output target tables are also created on AWS Redshift. The Pipeline converts data from CSV (S3 location) to database tables and can be used for a wide variety of complex tasks. It requires a table schema setup and an AWS Redshift account. SQL experience is not needed for this Pipeline.

Pipeline 3 (RS to RS)

The Pipeline writes from AWS Redshift to AWS Redshift. The source tables should be present in AWS Redshift before execution. SQL experience is not needed for this Pipeline. The Pipeline can be used for a wide variety of complex tasks.

Example Pipelines - Microsoft Azure Synapse

Pipeline 1 (SQL to SY)

This Pipeline does not require source tables or raw data as they are created on Azure Synapse on the fly from SQL. Output target tables are also created on Azure Synapse. SQL experience is needed. It can be used only for ELT demo or simple tasks.

Pipeline 2 (ADLS to SY)

This Pipeline does not require source tables, because they are created on Azure Synapse on the fly using the ELT Load Snap, and the output target tables are also created on Azure Synapse. The Pipeline converts data from CSV (ADLS Gen 2 location) to database tables and can be used for a wide variety of complex tasks. It requires a table schema setup and an Azure Synapse account. SQL experience is not needed for this Pipeline.

Pipeline 3 (SY to SY)

The Pipeline writes from Azure Synapse to Azure Synapse. The source tables must be present in Azure Synapse before execution. SQL experience is not needed for this Pipeline. The Pipeline can be used for a wide variety of complex tasks.

Example Pipelines - Google BigQuery

Pipeline 1 (SQL to BQ)

This Pipeline does not require source tables or raw data as they are created on Google BigQuery on the fly from SQL. Output target tables are also created on Google BigQuery. SQL experience is needed. It can be used only for ELT demo or simple tasks.

Pipeline 2 (S3 to BQ)

This Pipeline does not require source tables, because they are created on Google BigQuery on the fly using the ELT Load Snap, and the output target tables are also created on Google BigQuery. The Pipeline converts data from CSV (S3 location) to database tables and can be used for a wide variety of complex tasks. It requires a table schema setup and a Google BigQuery account. SQL experience is not needed for this Pipeline.

Pipeline 3 (BQ to BQ)

The Pipeline writes from Google BigQuery to Google BigQuery. However, the source tables must be present in Google BigQuery before execution. SQL experience is not needed for this Pipeline. The Pipeline can be used for a wide variety of complex tasks.

Source Data Set Details

| File Name | Volume | Rows |
|---|---|---|
| STORE_RETURNS_DEMO_3.csv | 128K | 1000 |
| STORE_DEMO_3.csv | 128K | 1000 |
| CUSTOMER_DEMO_3.csv | 707K | 5000 |
| STORE_DEMO_3.csv | 271K | 1000 |
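To confirm that local copies of the source data sets match the Rows column in the table above, a quick check could look like the following Python sketch. It assumes, without confirmation from the table, that each file has a single header row, is UTF-8 encoded, and sits in one local folder; the function names are hypothetical. Blank lines are ignored, and a quoted field containing a line break is counted as part of one record because the `csv` module parses it that way. The Volume column is not checked, since its unit is not stated.

```python
import csv
from pathlib import Path

# Row counts from the Source Data Set Details table. STORE_DEMO_3.csv is listed
# twice there, both times with 1000 rows, so it appears once in this dict.
EXPECTED_ROWS = {
    "STORE_RETURNS_DEMO_3.csv": 1000,
    "STORE_DEMO_3.csv": 1000,
    "CUSTOMER_DEMO_3.csv": 5000,
}


def count_data_rows(path, has_header: bool = True) -> int:
    """Count non-blank CSV records, excluding one header row if present."""
    with open(path, newline="", encoding="utf-8") as f:
        total = sum(1 for row in csv.reader(f) if row)
    if has_header and total > 0:
        total -= 1  # assumption: exactly one header row per file
    return total


def check_row_counts(folder, expected=EXPECTED_ROWS, has_header: bool = True) -> dict:
    """Return {file name: (expected, actual)} for files that do not match.

    `actual` is None when the file is missing from `folder`.
    """
    mismatches = {}
    for name, want in expected.items():
        path = Path(folder) / name
        if not path.exists():
            mismatches[name] = (want, None)
            continue
        got = count_data_rows(path, has_header)
        if got != want:
            mismatches[name] = (want, got)
    return mismatches
```

An empty result from `check_row_counts("path/to/folder")` (the folder path is a placeholder) means every listed file was found with the expected number of rows.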