In this article

Overview

You can use this Snap to pass (switch) case arguments that define how the source data should be treated in different scenarios. The Snap includes these cases accordingly in the incremental SQL statement.

Prerequisites

Valid accounts and access permissions to connect to the following:

Source: AWS S3, Azure Cloud Storage, or Google Cloud Storage

Target: Snowflake, Redshift, Azure Synapse, Databricks Lakehouse Platform, or BigQuery

Limitations & Known Issues

None.

Snap Input and Output

Input/Output | Type of View | Number of Views | Examples of Upstream and Downstream Snaps | Description |

|---|---|---|---|---|

Input | Document |

|

| A document containing the SQL query that yields the data required to perform the SQL case operations. |

Output | Document |

|

| A document containing the incremental SQL query that includes the SQL case expressions defined in the Snap. |

Snap Settings

Click the = (Expression) button in the Snap's configuration, if available, to define the corresponding field value using expression language and Pipeline parameters.

Field names marked with an asterisk ( * ) in the table below are mandatory.

Field Name | Type | Description |

|---|---|---|

Label* | String | Specify a unique label for the Snap. Default Value: ELT Case Expression |

Get preview data | Checkbox | Select this checkbox to include a preview of the query's output. The Snap performs limited execution and generates a data preview during Pipeline validation. In the case of ELT Pipelines, only the SQL query flows through the Snaps but not the actual source data. Hence, the preview data for a Snap is the result of executing the SQL query that the Snap has generated in the Pipeline. The number of records displayed in the preview (upon validation) is the smaller of the following:

Rendering Complex Data Types in Databricks Lakehouse Platform Based on the data types of the fields in the input schema, the Snap renders the complex data types like map and struct as object data type and array as an array data type. It renders all other incoming data types as-is except for the values in binary fields are displayed as a base64 encoded string and as string data type. Default Value: Not selected |

Pass through | Checkbox | Select this checkbox to specify that the Snap must include the incoming document (SQL query) in its output document. If this checkbox is selected and there are no Case expressions defined in the Case Arguments fieldset below, Subquery Pushdown Optimization logically ignores this Snap—sends the input SQL query coming from the immediately upstream Snap as its output SQL query. |

Alias | String | Specify the alias name to use for the case arguments specified. Default Value: None. |

Case Arguments | Specify your case arguments using this fieldset. A case argument is essentially an action to perform on the source data when a specific condition is met. Ensure that you specify mutually exclusive case arguments. Specify each value in a separate row. Click This field set consists of the following fields:

| |

When | String/Expression | Specify the case (condition to be met). Default Value: None. |

Then | String/Expression | Specify the action to be performed when the corresponding case (condition) is met. |

Else | String/Expression | Specify the action to be taken if none of the case arguments defined are satisfied. Default Value: None. |

Troubleshooting

Error | Reason | Resolution |

|---|---|---|

Invalid placement of ELT Case Expression snap | ELT Case Expression Snap cannot be used at the start of a Pipeline. | Move the ELT Case Expression Snap to either the middle or the end of your Pipeline. |

The Snap has not been configured properly. | THEN clause expression can not be empty | Ensure that you provide all the THEN clause expressions. |

Examples

Updating a Dataset with New Values Based on Existing Values

We want to read a dataset from a file in S3 and define a new (alias) column for the target table to contain transformed values based on the values in the id column. We can use the following Pipeline for this purpose.

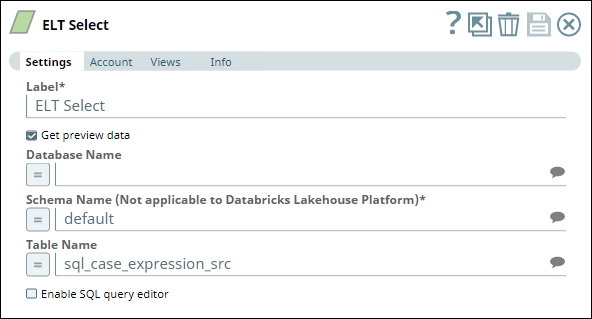

We begin with an ELT Select Snap to read the data from the source table (default.sql_case_expression_src) in S3. We associate a corresponding ELT Database Account (connecting to S3 location that contains the source file) for this Snap. In this example, the account is configured to write transformed data into a Snowflake database.

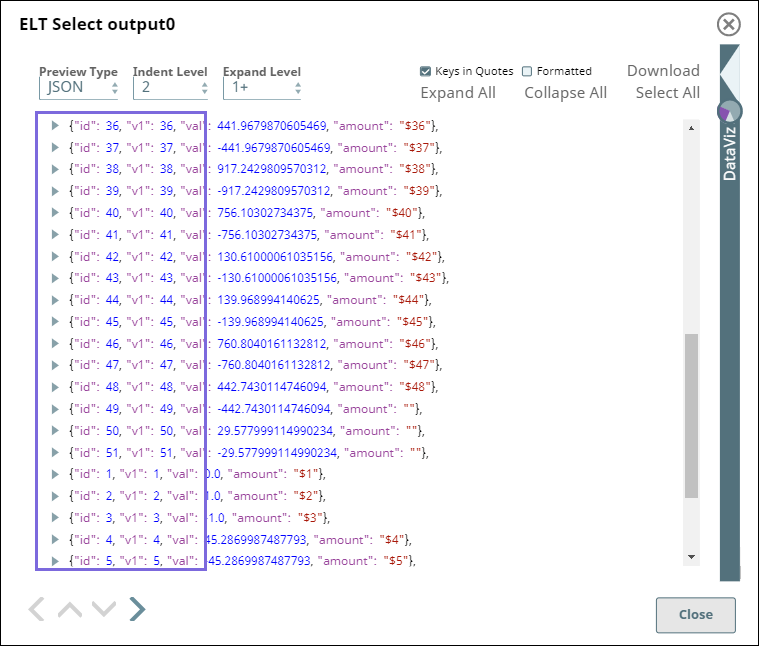

This Snap produces a preview output as shown below:

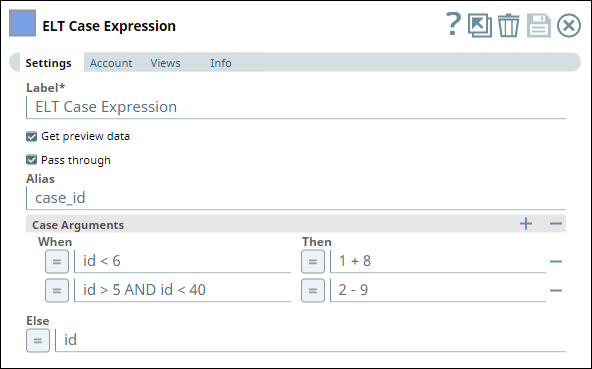

Next, we configure an ELT Case Expression Snap with the following settings:

Get preview data (to allow comparison of row values in this example).

Pass through (to allow comparison of row values in this example).

Alias as case_id.

The following Case Arguments:

If id < 6, then value of case_id is 1 + 8 (= 9).

If id > 5 AND id < 40, then value of case_id is 2 - 9 (= -7).

And the Else condition as the value in id column as is.

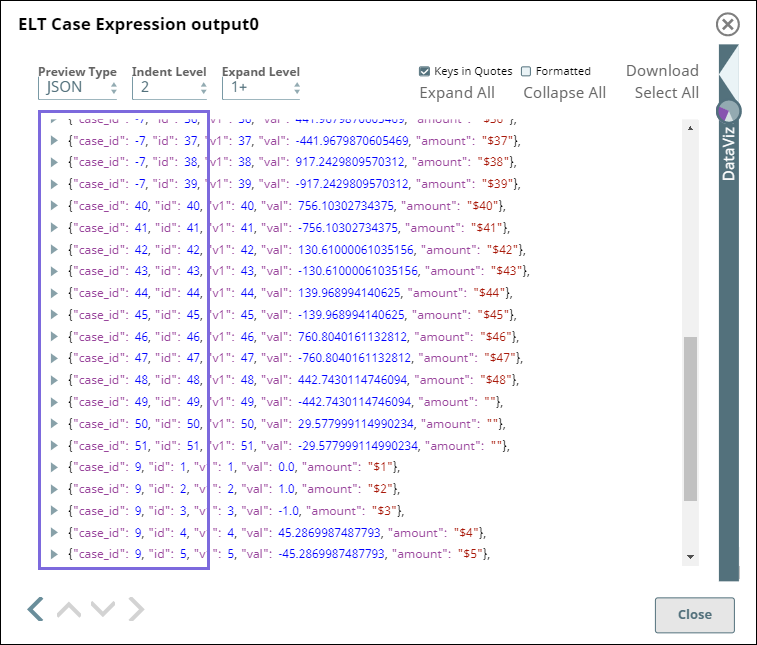

If you compare the highlighted areas in the above images (the two Snap output previews), you can identify that the values for case_id are calculated based on the case arguments we passed.

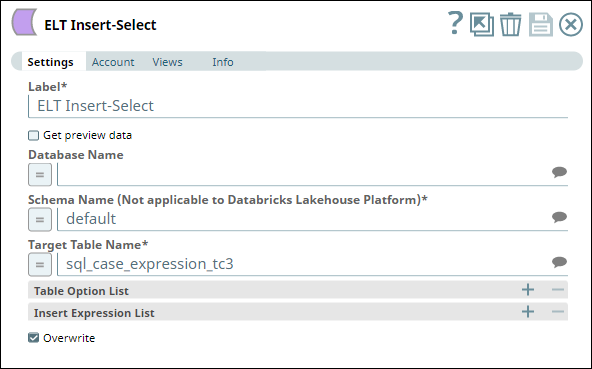

Then, to conclude this example, we write the transformed data to a table (default.sql_case_expression_tc3) in Snowflake database using an ELT Insert Select Snap.

Downloads

Important Steps to Successfully Reuse Pipelines

Download and import the Pipeline into SnapLogic.

Configure Snap accounts as applicable.

Provide Pipeline parameters as applicable.