On this Page

...

Snap type | Read | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

Description | This Snap browses a given directory path in the Hadoop file system (using the HDFS protocol) and generates a list of all the files in the directory and subdirectories. Use this Snap to identify the contents of a directory before you run any command that uses this information.

For example, if you need to iteratively run a specific command on a list of files, this Snap can help you view the list of all available files.

Input and Output

| |||||||||||||

| Prerequisites | The user executing the Snap must have at least Read permissions on the concerned directory. | |||||||||||||

| Support and limitations | Works in Ultra Task Pipelines. | |||||||||||||

| Account | This Snap uses account references created on the Accounts page of the SnapLogic Manager to handle access to this endpoint. This Snap supports Azure Data Lake Gen2 OAuth2 and Kerberos accounts. | |||||||||||||

| Views |

| |||||||||||||

Settings | ||||||||||||||

Label | Required. The name for the Snap. You can modify this to be more specific, especially if you have more than one of the same Snap in your pipeline. | |||||||||||||

Directory | The URL for the data source (directory). The Snap supports both HFDS and ABFS(S) protocols. Syntax for a typical HDFS URL:

Syntax for a typical ABFS and an ABFSS URL:

When you use the ABFS protocol to connect to an endpoint, the account name and endpoint details provided in the URL override the corresponding values in the Account Settings fields. Default value: [None] | |||||||||||||

| File filter | Required. The GLOB pattern to be applied to select the contents (files/sub-folders) of the directory. You cannot recursively navigate the directory structures. The File filter property can be a JavaScript expression, which will be evaluated with the values from the input view document. Example:

Default value: [None]

| |||||||||||||

| User Impersonation | Select this check box to enable user impersonation. For more information on working with user impersonation, click the link below.

| |||||||||||||

| Ignore empty result | If selected, no document will be written to the output view when the result is empty. If this property is not selected and the Snap receives an input document, the input document is passed to the output view. If this property is not selected and there is no input document, an empty document is written to the output view. Default value: Selected | |||||||||||||

|

| |||||||||||||

Troubleshooting

| Excerpt |

|---|

Writing to S3 files with HDFS version CDH 5.8 or laterWhen running HDFS version later than CDH 5.8, the Hadoop Snap Pack may fail to write to S3 files. To overcome this, make the following changes in the Cloudera manager:

|

Example

| Expand | ||||||||||||||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ||||||||||||||||||||||||||||||||||||||||

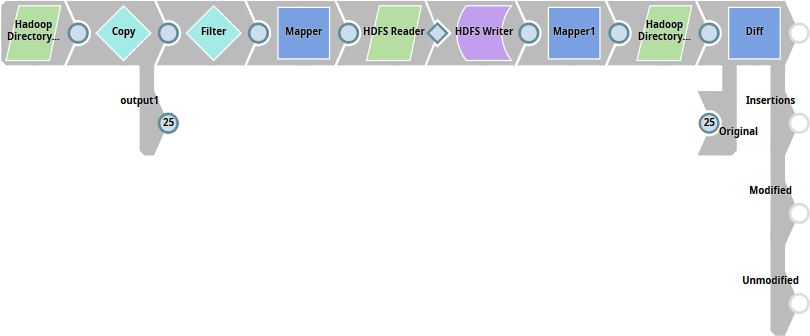

Hadoop Directory Browser in ActionThe Hadoop Directory Browser Snap lists out the contents of a Hadoop file system directory. In this example, we shall:

If the pipeline executes successfully, the second execution of the Hadoop Directory Browser will list out one additional file: the one we created using the output of the first execution of the Hadoop Directory Browser Snap. How This WorksThe table below lists the tasks performed by each Snap and documents the configuration details required for each Snap.

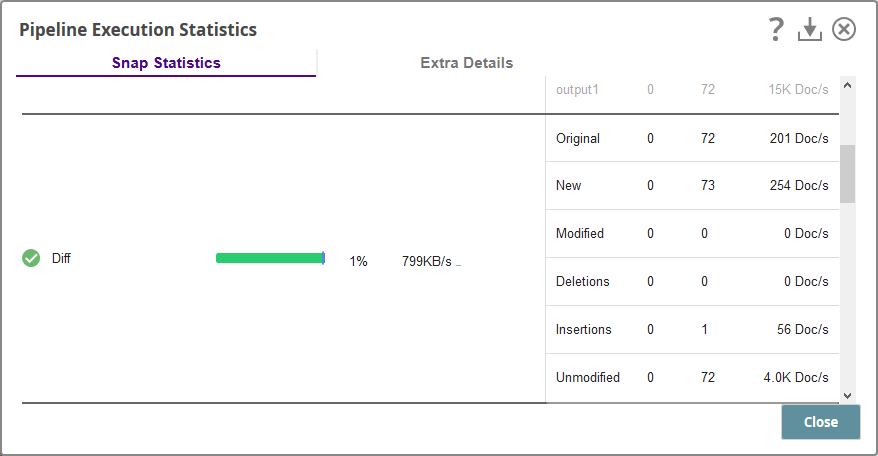

Run the pipeline. Once execution is done, click the Check Pipeline Statistics button to check whether it has worked. You should find the following changes:

|

...