
Snap type:

Write

Description:

This Snap executes a Snowflake bulk load, writing data into an Amazon S3 bucket or a Microsoft Azure Storage Blob.


Overview

You can use the Snowflake - Bulk Load Snap to load data from input sources or files stored on external object stores, such as Amazon S3, Google Cloud Storage, and Azure Storage Blob, into the Snowflake data warehouse.

Snap Type

The Snowflake - Bulk Load Snap is a Write-type Snap that performs a bulk load operation.

Prerequisites

You must have minimum permissions on the database to execute Snowflake Snaps. To check whether you already have them, retrieve the current set of permissions with the SHOW GRANTS commands: SHOW GRANTS ON DATABASE <database_name>, SHOW GRANTS ON SCHEMA <schema_name>, and SHOW GRANTS TO USER <user_name>.

You must enable the Snowflake account to use private preview features for creating the Iceberg table.

You must create the external volume in a Snowflake worksheet or with the Snowflake Execute Snap. Learn more about creating an external volume.

Security Prerequisites

You must have the following permissions in your Snowflake account to execute this Snap:

Usage (DB and Schema): Privilege to use the database, role, and schema.

Create table: Privilege to create a temporary table within this schema.

The following commands enable minimum privileges in the Snowflake console:

| Code Block |

|---|

grant usage on database <database_name> to role <role_name>;

grant usage on schema <database_name>.<schema_name> to role <role_name>;

grant "CREATE TABLE" on database <database_name> to role <role_name>;

grant "CREATE TABLE" on schema <database_name>.<schema_name> to role <role_name>; |

Learn more about Snowflake privileges: Access Control Privileges.

Internal SQL Commands

This Snap uses the following Snowflake commands internally:

COPY INTO - Enables loading data from staged files to an existing table.

PUT - Enables staging the files internally in a table or user stage.

| Info |

|---|

Requirements for External Storage Location

The following are mandatory when using an external staging location:

When using an Amazon S3 bucket for storage:

When using a Microsoft Azure storage blob:

When using Google Cloud Storage:

|

Support for Ultra Pipelines

Works in Ultra Pipelines. However, we recommend that you do not use this Snap in an Ultra Pipeline.

Limitations

The special character '~' is not supported in the temp directory name on Windows. It is reserved for the user's home directory.

Snowflake provides the option to use Cross Account IAM for external staging. You can adopt cross-account access through the Storage Integration option. With this setup, you do not need to pass any credentials around, and you access the storage using only the named stage or integration object. For more details: Configuring Cross Account IAM Role Support for Snowflake Snaps

The Snowflake Bulk Load Snap expects the column order of the documents from upstream Snaps to match the column order of the target table; otherwise, data validation fails.

If a Snowflake Bulk Load operation fails due to inadequate memory space on the JCC node when the Data source is Input View and the Staging location is Internal Stage, you can store the data on an external staging location (S3, Azure Blob or GCS).

When the bulk load operation fails due to an invalid input and the input does not contain the default columns, the error view does not display the erroneous columns correctly. This is a bug in Snowflake and is being tracked under JIRA SNOW-662311 and JIRA SNOW-640676.

This Snap does not support creating an Iceberg table with an external catalog in Snowflake because the endpoint currently allows only read-only access, without any write capabilities, for tables that are created using an external catalog. Learn more about Iceberg catalog options.

Snowflake does not support cross-cloud and cross-region Iceberg tables when you use Snowflake as the Iceberg catalog. If the Snap displays an error message such as External volume <volume_name> must have a STORAGE_LOCATION defined in the local region ..., ensure that the External volume field uses an active storage location in the same region as your Snowflake account.

Behavior change

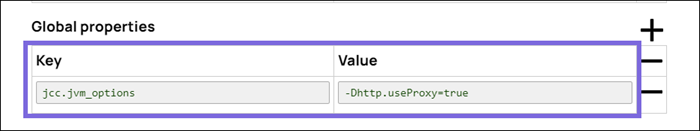

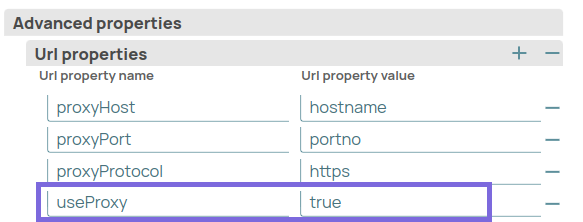

Starting from 4.35 GA, if your Snaplex is behind a proxy and the Snowflake Bulk Load Snap uses the default Snowflake driver (3.14.0 JDBC driver), then the Snap might encounter a failure. There are two ways to prevent this failure:

1️⃣ Add the following key-value pair in the Global properties section of the Node Properties tab:

Key: jcc.jvm_options

Value: -Dhttp.useProxy=true

2️⃣ Add the following key-value pairs in the URL properties of the Snap under Advanced properties.

We recommend you use the second approach to prevent the Snap’s failure.

Data source

The source from which the data is loaded. The available options are Input view and Staged files.

| Note |

|---|

When the 'Input View' option is selected, leave the Table Columns field empty. When the 'Staged files' option is selected, provide in Table Columns the names of the columns to which the records are to be added. |

Default value: Input view

Preserve case sensitivity

If selected, the case sensitivity of the column names is preserved.

Default value: Not selected

Load empty strings

If selected, empty string values in the input documents are loaded as empty strings into the string-type fields. Otherwise, empty string values in the input documents are loaded as null. Null values are loaded as null regardless.

Default value: Not selected
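The empty-string handling described above can be pictured as a small function. This is only an illustrative sketch of the documented behavior, not the Snap's implementation; the function name `prepare_value` and the `load_empty_strings` flag are hypothetical.

```python
from typing import Optional


def prepare_value(value: Optional[str], load_empty_strings: bool) -> Optional[str]:
    """Return the value that would be loaded into a string-type field."""
    if value is None:
        # Null values are loaded as null regardless of the setting.
        return None
    if value == "" and not load_empty_strings:
        # Setting deselected (the default): empty strings become null.
        return None
    return value


doc = {"name": "", "city": None, "zip": "94105"}
print({k: prepare_value(v, load_empty_strings=False) for k, v in doc.items()})
print({k: prepare_value(v, load_empty_strings=True) for k, v in doc.items()})
```

With the setting deselected, both `name` and `city` end up as null; with it selected, only `city` does.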

Staging Location

The type of staging location that is used for data loading.

The options available include:

- External: Location that is not managed by Snowflake. The location should be an AWS S3 Bucket or Microsoft Azure Storage Blob. These credentials are mandatory while validating the Account.

- Internal: Location that is managed by Snowflake.

Default value: Internal

Target

An internal or external location where data can be loaded. If you selected External in the Staging Location field above, a staging area is created in S3 or Azure as required; else a staging area is created in Snowflake's internal location.

The staging field accepts the following input:

- Named Stage: The name for user-defined named stage. This should be used only when a Staging location is set as Internal.

Format: @<Schema>.<StageName>[/path]

- Internal Path: A staging location expressed as a path under the internal home path (@~).

Format: @~/[path]

- S3 Url: The external S3 URL that specifies an S3 storage.

Format: s3://[path]

- Microsoft Azure Storage Blob URL: The external URL required to connect to the Microsoft Azure Storage.

- Folder Name: Anything else, including no input. This is regarded as a folder name under the internal home path (@~) if using internal staging, or under the S3 bucket and folder specified in the Snowflake account.
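The input formats listed above can be told apart by their prefixes. The following is a rough sketch of that idea, not the Snap's actual parsing logic; the function name `classify_target` and the returned labels are hypothetical. The Azure URL format is not given on this page, so the sketch does not try to recognize it separately.

```python
def classify_target(value: str) -> str:
    """Classify a Target field value by the documented prefixes."""
    text = (value or "").strip()
    if text.startswith("@~"):
        return "internal_path"   # Format: @~/[path]
    if text.startswith("@"):
        return "named_stage"     # Format: @<Schema>.<StageName>[/path]
    if text.startswith("s3://"):
        return "s3_url"          # Format: s3://[path]
    # Anything else, including empty input. An Azure Storage Blob URL would
    # also land here because its format is not documented on this page.
    return "folder_name_or_azure_url"


for example in ["@~/loads/daily", "@public.my_stage/in", "s3://my-bucket/in", ""]:
    print(repr(example), "->", classify_target(example))
```

The `@~` check comes before the generic `@` check because an internal path also starts with `@`.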

| Note |

|---|

The value for the expression has to be provided as a pipeline parameter and cannot be provided from the Upstream Snap for performance reasons when the property is used as an expression parameter. |

Default value: [None]

Storage Integration

The pre-defined storage integration that is used to authenticate the external stages.

| Note |

|---|

The value for the expression has to be provided as a pipeline parameter and cannot be provided from the Upstream Snap for performance reasons when the property is used as an expression parameter. |

Default value: None

Staged file list

List of the staged file(s) to be loaded to the target table.

File name pattern

A regular expression pattern string, enclosed in single quotes, specifying the file names and/or paths to match.

Default value: [None]

File format object

Specify an existing file format object to use for loading data into the table. The specified file format object determines the format type, such as CSV, JSON, XML, and AVRO, or other format options for data files.

Default value: [None]

File Format type

Specify a predefined file format object to use for loading data into the table. The available file formats include CSV, JSON, XML and AVRO.

Default value: NONE

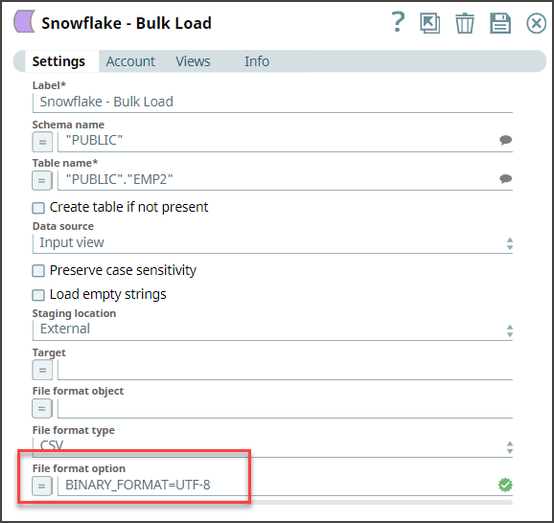

File Format option

Specify the file format option. Separate multiple options by using blank spaces and commas.

| Info |

|---|

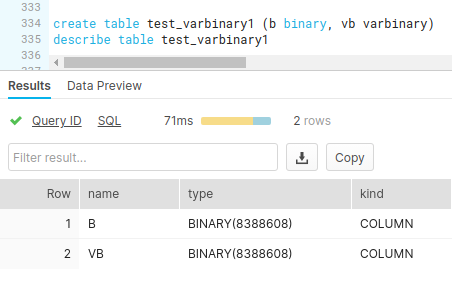

You can use various file format options including binary format which passes through in the same way as other file formats. See File Format Type Options for additional information. Before loading binary data into Snowflake, you must specify the binary encoding format, so that the Snap can decode the string type to binary types before loading into Snowflake. This can be done by specifying the following binary file format:

However, the file you upload and download must be in similar formats. For instance, if you load a file in HEX binary format, you should specify the HEX format for download as well. |

Example: BINARY_FORMAT=UTF-8

Default value: [None]


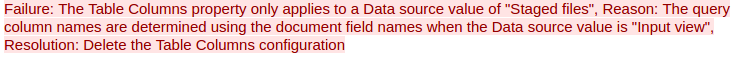

Table Columns

Conditional. Specifies the table columns to use in the Snowflake COPY INTO query. This applies only when the Data source is 'Staged files'. This configuration is useful when the staged files contain a subset of the columns in the Snowflake table. For example, if the Snowflake table contains columns A, B, C, and D and the staged files contain columns A and D, then the Table Columns field would have two entries with values A and D. The order of the entries should match the order of the data in the staged files.

Default value: [None]

| Note |

|---|

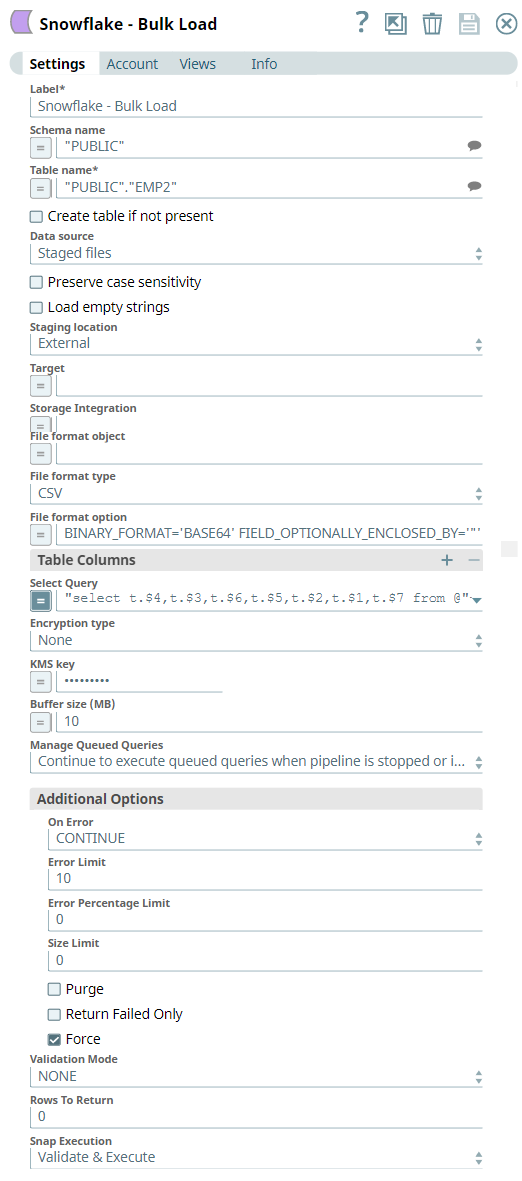

If the Data source is set to 'Input view', the Snap throws the error shown above. |

Select Query

Activates when you select Staged files in the Data source field.

Specify the SELECT query to transform data before loading it into the Snowflake database.

The SELECT statement transform option enables querying the staged data files by either reordering the columns or loading a subset of table data from a staged file. For example:

select $1:location, $1:dimensions.sq_ft, $1:sale_date, $1:price from @mystage/sales.json.gz t

This query loads the file sales.json from the internal stage mystage (which stores the data files internally), where location, dimensions.sq_ft, and sale_date are the objects.

(OR)

select substr(t.$2,4), t.$1, t.$5, t.$4 from @mystage t

This query reorders the column data from the internal stage mystage before loading it into a table. The SUBSTR (SUBSTRING) function removes the first few characters of a string before inserting it.

| Note |

|---|

We recommend that you do not use a temporary stage while loading your data. |

Default value: N/A

Encryption type

Specifies the type of encryption to be used on the data. The available encryption options are:

- None: Files are not encrypted.

- Server Side Encryption: The output files on Amazon S3 are encrypted with server-side encryption.

- Server-Side KMS Encryption: The output files on Amazon S3 are encrypted with an Amazon S3-generated KMS key.

Default value: No default value.

| Note |

|---|

The KMS Encryption option is available only for S3 Accounts (not for Azure Accounts) with Snowflake. |

| Note |

|---|

If Staging Location is set to Internal and Data source is Input view, the Server Side Encryption and Server-Side KMS Encryption options are not supported for Snowflake Snaps. This is because Snowflake encrypts the data being loaded in its internal staging area and does not allow the user to specify the type of encryption in the PUT API (see Snowflake PUT Command Documentation). |

The KMS key that you want to use for S3 encryption. For more information about the KMS key, see AWS KMS Overview and Using Server Side Encryption.

Default value: No default value.

| Note |

|---|

This property applies only when you select Server-Side KMS Encryption in the Encryption Type field above. |

Additional Options

Buffer size (MB)

This property is required when bulk loading to Snowflake using AWS S3 as the external staging area. It specifies the amount of data, in MB, to be loaded into the S3 bucket at a time.

Minimum value: 5 MB

Maximum value: 5000 MB

Default value: 10 MB

| Note |

|---|

S3 allows a maximum of 10000 parts to be uploaded so this property must be configured accordingly to optimize the bulk load. Refer to Upload Part for more information on uploading to S3. |
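The relationship between the buffer size and the 10000-part limit can be worked out with simple arithmetic. The sketch below assumes that each buffer of data is uploaded as one part of a single multipart upload; that mapping is an assumption for illustration, not something this page states. The function names are hypothetical.

```python
import math

MAX_S3_PARTS = 10_000   # part limit stated in the note above
MIN_BUFFER_MB = 5       # minimum Buffer size accepted by the Snap
MAX_BUFFER_MB = 5_000   # maximum Buffer size accepted by the Snap


def max_upload_mb(buffer_mb: int) -> int:
    """Largest upload (in MB) that fits in MAX_S3_PARTS parts of buffer_mb each."""
    return buffer_mb * MAX_S3_PARTS


def min_buffer_mb(upload_size_mb: float) -> int:
    """Smallest allowed buffer size (in MB) that keeps the upload within the part limit."""
    needed = math.ceil(upload_size_mb / MAX_S3_PARTS)
    if needed > MAX_BUFFER_MB:
        raise ValueError("upload is too large even with the maximum buffer size")
    return max(needed, MIN_BUFFER_MB)


print(max_upload_mb(10))        # default 10 MB buffer -> 100000 MB
print(min_buffer_mb(250_000))   # 250000 MB upload -> 25 MB buffer
```

Under this assumption, the default 10 MB buffer covers uploads up to 100000 MB, and larger uploads need a proportionally larger buffer.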

Select this property to decide whether the Snap should continue or cancel the execution of the queued Snowflake Execute SQL queries when you stop the pipeline.

| Note |

|---|

If you select Cancel queued queries when pipeline is stopped or if it fails, then the read queries under execution are cancelled, whereas the write type of queries under execution are not cancelled. Snowflake internally determines which queries are safe to be cancelled and cancels those queries. |

Default value: Continue to execute queued queries when pipeline is stopped or if it fails

On Error

Specifies an action to be performed when errors are encountered in a file. The available actions include:

- ABORT_STATEMENT: Abort the COPY statement if any error is encountered. The error will be thrown from the snap or routed to the error view.

- CONTINUE: Continue loading the file. The error will be shown as a part of the output document.

- SKIP_FILE: Skip the file if any errors are encountered in it.

- SKIP_FILE_*error_limit*: Skip file when the number of errors in the file exceeds the number specified in Error Limit.

- SKIP_FILE_*error_percent_limit*%: Skip file when the percentage of errors in the file exceeds the percentage specified in Error percentage limit.

Default value: ABORT_STATEMENT
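These actions correspond to the values of Snowflake's ON_ERROR copy option for COPY INTO. How the Snap builds its statement internally is not documented here, so the following is only a sketch of how the On Error, Error Limit, and Error percentage limit settings could map to that option. The function name and the action labels passed to it are hypothetical.

```python
def on_error_option(action: str, error_limit: int = 0, error_percent_limit: int = 0) -> str:
    """Build an ON_ERROR copy option string from the selected action."""
    if action in ("ABORT_STATEMENT", "CONTINUE", "SKIP_FILE"):
        return f"ON_ERROR = {action}"
    if action == "SKIP_FILE_error_limit":
        # Snowflake form: SKIP_FILE_<num>
        return f"ON_ERROR = SKIP_FILE_{error_limit}"
    if action == "SKIP_FILE_error_percent_limit%":
        # Snowflake form: 'SKIP_FILE_<num>%' (quoted because of the percent sign)
        return f"ON_ERROR = 'SKIP_FILE_{error_percent_limit}%'"
    raise ValueError(f"unknown On Error action: {action}")


print(on_error_option("ABORT_STATEMENT"))
print(on_error_option("SKIP_FILE_error_limit", error_limit=10))
print(on_error_option("SKIP_FILE_error_percent_limit%", error_percent_limit=5))
```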

Error Limit

Specifies the error limit. The file is skipped when the number of errors in it exceeds this limit. Applies when SKIP_FILE_number is selected for On Error.

Default value: 0

Error percentage limit

Specifies the error percentage limit. The file is skipped when the percentage of errors in it exceeds this limit. Applies when SKIP_FILE_number% is selected for On Error.

Default value: 0

Size limit

Specifies the maximum size (in bytes) of data to be loaded.

| Note |

|---|

At least one file is loaded regardless of the value specified for SIZE_LIMIT unless there is no file to be loaded. A null value indicates no size limit. |

Default value: 0

Purge

Specifies whether to purge the data files from the location automatically after the data is successfully loaded.

Default value: Not Selected

Return Failed Only

Specifies whether to return only files that have failed to load while loading.

Default value: Not Selected

Force

Specifies to load all files, regardless of whether they have been loaded previously and have not changed since they were loaded.

Default value: Not Selected

Validation mode

This mode is useful for visually verifying the data before loading it. The available options are:

NONE

RETURN_n_ROWS

RETURN_ERRORS

RETURN_ALL_ERRORS

Default value: NONE

Rows to return

Specifies the number of rows to validate and return rather than load into the corresponding table. The data is validated instead of being loaded, and results are returned based on the selected validation option, which can be one of the following values: RETURN_n_ROWS | RETURN_ERRORS | RETURN_ALL_ERRORS

Default value: 0
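The validation options match the values of Snowflake's VALIDATION_MODE copy option, where the n in RETURN_n_ROWS is a row count. As a sketch only (the Snap's internal statement building is not described on this page, and the function name is hypothetical), the Validation mode and Rows to return settings could be combined like this:

```python
def validation_mode_option(mode: str, rows_to_return: int = 0) -> str:
    """Build a VALIDATION_MODE copy option string, or '' when no validation is requested."""
    if mode == "NONE":
        return ""  # no validation: the data is loaded normally
    if mode == "RETURN_n_ROWS":
        if rows_to_return < 1:
            raise ValueError("RETURN_n_ROWS needs a positive number of rows")
        return f"VALIDATION_MODE = RETURN_{rows_to_return}_ROWS"
    if mode in ("RETURN_ERRORS", "RETURN_ALL_ERRORS"):
        return f"VALIDATION_MODE = {mode}"
    raise ValueError(f"unknown validation mode: {mode}")


print(validation_mode_option("RETURN_n_ROWS", rows_to_return=10))
print(validation_mode_option("RETURN_ERRORS"))
```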


| Note |

|---|

Instead of building multiple Snaps with interdependent DML queries, we recommend that you use the Stored Procedure or the Multi Execute Snap. For example, when performing create, insert, and delete operations sequentially in a pipeline, a Script Snap helps create a delay between the insert and the delete. Otherwise, the delete may be triggered before the records are inserted into the table. |

Examples

Loading Binary Data Into

Known Issues

Known Issue: The create table operation fails if it contains a geospatial data type column.

Because of performance issues, all Snowflake Snaps now ignore the Cancel queued queries when pipeline is stopped or if it fails option for Manage Queued Queries, even when selected. Snaps behave as though the default Continue to execute queued queries when the Pipeline is stopped or if it fails option were selected.

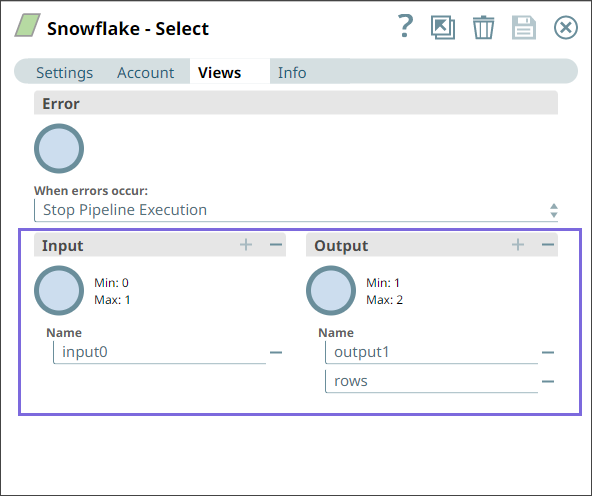

Snap Views

Type | Format | Number of Views | Examples of Upstream and Downstream Snaps | Description | ||

|---|---|---|---|---|---|---|

Input | Document |

|

| Documents containing the data to be uploaded to the target location.

| ||

Output | Document |

|

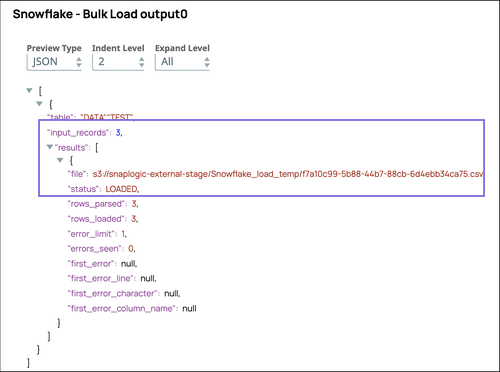

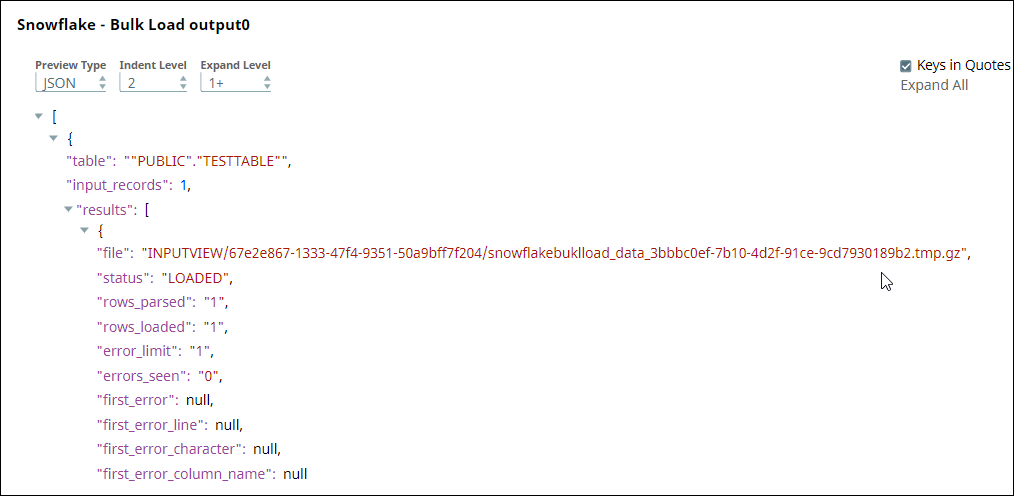

| If an output view is available, then the output document displays the number of input records and the status of the bulk upload as follows: | ||

Error | Error handling is a generic way to handle errors without losing data or failing the Snap execution. You can handle the errors that the Snap might encounter when running the Pipeline by choosing one of the following options from the When errors occur list under the Views tab:

Learn more about Error handling in Pipelines. | |||||

Snap Settings


Field Name | Field Type | Field Dependency | Description | |||||

|---|---|---|---|---|---|---|---|---|

Label*

| String | N/A | Specify the name for the instance. You can modify this to be more specific, especially if you have more than one of the same Snap in your Pipeline. | |||||

Schema Name Default Value: N/A | String/Expression/Suggestion | N/A | Specify the database schema name. In case it is not defined, then the suggestion for the Table Name retrieves all tables names of all schemas. The property is suggestible and will retrieve available database schemas during suggest values. The values can be passed using the Pipeline parameters but not the upstream parameter. | |||||

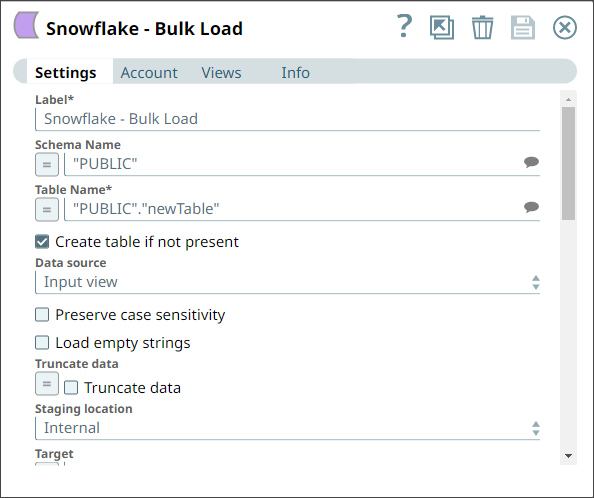

Table Name* Default Value: N/A | String/Expression/Suggestion | N/A | Specify the name of the table to execute bulk load operation on. The values can be passed using the Pipeline parameters but not the upstream parameter. | |||||

Create table if not present Default Value: Deselected | Checkbox | N/A | Select this checkbox to automatically create the target table if it does not exist.

| |||||

Iceberg table Default Value: Deselected | Checkbox | Appears when you select Create table if not present. | Select this checkbox to create an Iceberg table with the Snowflake catalog. Learn more about how to create an Iceberg table with Snowflake as the Iceberg catalog. | |||||

External volume* Default Value: N/A | String/Expression/Suggestion | Appears when you select the Iceberg table checkbox. | Specify the external volume for the Iceberg table. Learn more about how to configure an external volume for Iceberg tables. | |||||

Base location*

Default Value: N/A | String/Expression | Appears when you select the Iceberg table checkbox. | Specify the Base location for the Iceberg table. The base location is the relative path from the external volume. | |||||

Data source Default Value: Input view | Dropdown list | N/A | Specify the source from where the data should load. The available options are Input view and Staged files. When the option 'Input View' is selected, leave the Table Columns field empty, and if the 'Staged files' option is selected, provide the column names for the Table Columns to which the records are to be added. | |||||

Preserve case sensitivity Default Value: Deselected | Checkbox | N/A | Select this check box to preserve the case sensitivity of the column names.

| |||||

Load empty strings Default Value: Deselected | Checkbox | N/A | Select this check box to load empty string values in the input documents as empty strings to the string-type fields. Else, empty string values in the input documents are loaded as null. Null values are loaded as null regardless. | |||||

Truncate data Default Value: Deselected | Checkbox | N/A | Select this checkbox to truncate existing data before performing data load. With the Bulk Update Snap, instead of doing truncate and then update, a Bulk Insert would be faster. | |||||

Staging location

| Dropdown list/Expression | N/A | Select the type of staging location that is to be used for data loading:

| |||||

- Expected input: Documents containing the data to be uploaded to the target location.

- Expected output: A document containing the original document provided as input and the status of the bulk upload

- Expected upstream Snaps: Any Snap that offers documents. For example, JSON Generator or Binary to Document Snap.

- Expected downstream Snaps: Any Snap that accepts document. For example, Mapper or Snowflake Execute Snap.

Prerequisites:

You should have minimum permissions on the database to execute Snowflake Snaps. To understand if you already have them, you must retrieve the current set of permissions. The following commands enable you to retrieve those permissions.

| Code Block |

|---|

SHOW GRANTS ON DATABASE <database_name>

SHOW GRANTS ON SCHEMA <schema_name>

SHOW GRANTS TO USER <user_name> |


The following are mandatory when using an external staging location:

When using an Amazon S3 bucket for storage:

- The Snowflake account should contain S3 Access-key ID, S3 Secret key, S3 Bucket and S3 Folder.

- The Amazon S3 bucket where Snowflake writes the output files must reside in the same region as your cluster.

When using a Microsoft Azure storage blob:

- A working Snowflake Azure database account.

This Snap uses the following Snowflake commands internally:

- Ultra Pipelines: Works in Ultra Pipelines if batching is disabled.

- Special character '~': Not supported in the temp directory name on Windows. It is reserved for the user's home directory.

Snowflake provides the option to use Cross Account IAM for external staging. You can adopt cross-account access through the Storage Integration option. With this setup, you do not need to pass any credentials around, and you access the storage using only the named stage or integration object. For more details: Configuring Cross Account IAM Role Support for Snowflake Snaps

- The Snowflake Bulk Load Snap expects the column order of the documents from upstream Snaps to match the column order of the target table; otherwise, data validation fails.

This Snap uses account references created on the Accounts page of SnapLogic Manager to handle access to this endpoint. See Snowflake Account for information on setting up this type of account.

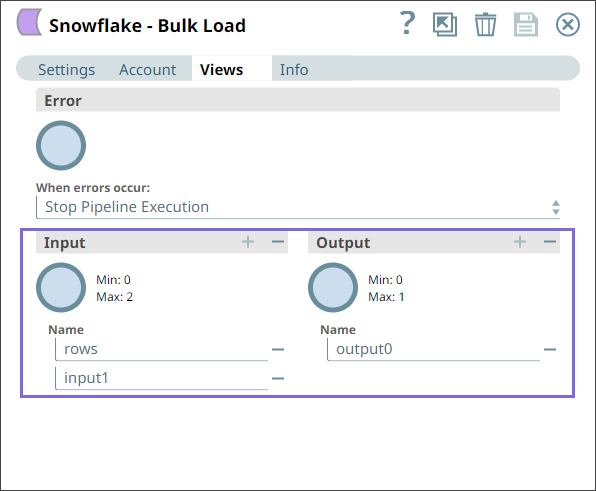

This Snap has one document input view by default.

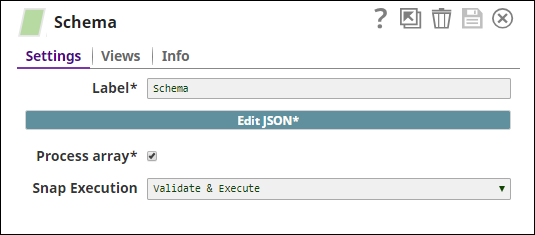

A second input view can be added to pass the table metadata as a document, so that the target table, if absent, can be created in the database with a schema similar to that of the source table. This schema usually comes from the second output of a database Select Snap. If the schema is from a different database, there is no guarantee that all the data types will be handled properly.

This Snap has at most one output view. If an output view is available, then the original document that was used to create the statement will be output with the status of the insert executed.

This Snap has at most one error view and produces zero or more documents in the view.

Settings

Label

Schema name

Required. The database schema name. In case it is not defined, then the suggestion for the Table Name will retrieve all tables names of all schemas. The property is suggestible and will retrieve available database schemas during suggest values.

| Note |

|---|

The values can be passed using the pipeline parameters but not the upstream parameter. |

Default value: [None]

Table name

Required. The name of the table on which to execute the bulk load operation.

| Note |

|---|

The values can be passed using the pipeline parameters but not the upstream parameter. |

Default value: [None]

Create table if not present

Whether the table should be automatically created if not already present.

Default value: Not selected


| Warning |

|---|

This should not be used in production since there are no indexes or integrity constraints on any column and the default varchar() column is over 30k bytes. |

| ||||||||

Flush chunk size (in bytes) | String/Expression | Appears when you select Input view for Data source and Internal for Staging location. | When using internal staging, data from the input view is written to a temporary chunk file on the local disk. When the size of a chunk file exceeds the specified value, the current chunk file is copied to the Snowflake stage and then deleted. A new chunk file simultaneously starts to store the subsequent chunk of input data. The default size is 100,000,000 bytes (100 MB), which is used if this value is left blank. | |||||

Target Default Value: N/A | String/Expression | N/A | Specify an internal or external location to load the data. If you select External for Staging Location, a staging area is created in Azure, GCS, or S3 as configured. Otherwise, a staging area is created in Snowflake's internal location. This field accepts the following input:

The value for the expression has to be provided as a Pipeline parameter and cannot be provided from the Upstream Snap for performance reasons when you use expression values. | |||||

Storage Integration Default Value: N/A | String/Expression | Appears when you select Staged files for Data source and External for Staging location. | Specify the pre-defined storage integration that is used to authenticate the external stages. The value for the expression has to be provided as a Pipeline parameter and cannot be provided from the Upstream Snap for performance reasons when you use expression values. | |||||

Staged file list | Use this field set to define the staged file(s) to be loaded to the target table. | |||||||

Staged file | String/Expression | Appears when you select Staged files for Data source. | Specify the staged file to be loaded to the target table. | |||||

File name pattern Default Value: N/A Example: .length | String/Expression | Appears when you select Staged files for Data source. | Specify a regular expression pattern string, enclosed in single quotes with the file names and /or path to match. | |||||

File format object Default Value: None Example: jsonPath() | String/Expression | N/A | Specify an existing file format object to use for loading data into the table. The specified file format object determines the format type such as CSV, JSON, XML, AVRO, or other format options for data files. | |||||

File format type Default Value: None | String/Expression/Suggestion | N/A | Specify a predefined file format object to use for loading data into the table. The available file formats include CSV, JSON, XML, and AVRO. This Snap supports only CSV and NONE as file format types when the Datasource is Input view. | |||||

File format option Default value: N/A | String/Expression | N/A | Specify the file format option. Separate multiple options by using blank spaces and commas.

When using external staging locations

| |||||

Table Columns | Use this field set to specify the columns to be used in the COPY INTO command. This only applies when the Data source is Staged files. | |||||||

Columns | String/Expression/Suggestion | N/A | Specify the table columns to use in the Snowflake COPY INTO query. This configuration is valid when the staged files contain a subset of the columns in the Snowflake table. For example, if the Snowflake table contains columns A, B, C, and D, and the staged files contain columns A and D then the Table Columns field would have two entries with values A and D. The order of the entries should match the order of the data in the staged files. If the Data source is Input view, the snap displays the following error: | |||||

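Select Query Default Value: N/A | String/Expression | Appears when the Data source is Staged files. | Specify the SELECT query to transform data before loading it into the Snowflake database. The SELECT statement transform option enables querying the staged data files by either reordering the columns or loading a subset of table data from a staged file. For example, select $1:location, $1:dimensions.sq_ft, $1:sale_date, $1:price from @mystage/sales.json.gz t (OR) select substr(t.$2,4), t.$1, t.$5, t.$4 from @mystage t

We recommend that you do not use a temporary stage while loading your data. | |||||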

Encryption type Default Value: None | Dropdown list | N/A | Specify the type of encryption to be used on the data. The available encryption options are:

The KMS Encryption option is available only for S3 Accounts (not for Azure Accounts and GCS) with Snowflake. If Staging Location is set to Internal, and when Data source is Input view, the Server Side Encryption and Server-Side KMS Encryption options are not supported for Snowflake snaps: This happens because Snowflake encrypts loading data in its internal staging area and does not allow the user to specify the type of encryption in the PUT API. Learn more: Snowflake PUT Command Documentation. | |||||

KMS key Default Value: N/A | String/Expression | N/A | Specify the KMS key that you want to use for S3 encryption. Learn more about the KMS key: AWS KMS Overview and Using Server Side Encryption. | |||||

Buffer size (MB) Default Value: 10MB | String/Expression | N/A | Specify the amount of data, in MB, to be loaded into the S3 bucket at a time. This property is required when bulk loading to Snowflake using AWS S3 as the external staging area. Minimum value: 5 MB. Maximum value: 5000 MB. S3 allows a maximum of 10000 parts to be uploaded, so this property must be configured accordingly to optimize the bulk load. Refer to Upload Part for more information on uploading to S3. | |||||

Manage Queued Queries Default Value: Continue to execute queued queries when the Pipeline is stopped or if it fails | Dropdown list | N/A | Select this property to determine whether the Snap should continue or cancel the execution of the queued Snowflake Execute SQL queries when you stop the pipeline. If you select Cancel queued queries when the Pipeline is stopped or if it fails, then the read queries under execution are canceled, whereas the write type of queries under execution are not canceled. Snowflake internally determines which queries are safe to be canceled and cancels those queries. | |||||

Additional Options | ||||||||

On Error Default Value: ABORT_STATEMENT | Dropdown list | N/A | Select an action to perform when errors are encountered in a file. The available actions are:

| |||||

Error Limit Default Value: 0 | Integer | Appears when you select SKIP_FILE_*error_limit* for On Error. | Specify the error limit for skipping a file. The file is skipped when the number of errors in it exceeds the specified limit and SKIP_FILE_number is selected for On Error. | |||||

Error Percentage Limit Default Value: 0 | Integer | Appears when you select SKIP_FILE_*error_percent_limit*% for On Error. | Specify the error percentage limit for skipping a file. The file is skipped when the percentage of errors in it exceeds the specified percentage and SKIP_FILE_number% is selected for On Error. | |||||

Size Limit Default Value: 0 | Integer | N/A | Specify the maximum size (in bytes) of data to be loaded. At least one file is loaded regardless of the value specified for SIZE_LIMIT unless there is no file to be loaded. A null value indicates no size limit. | |||||

Purge Default value: Deselected | Checkbox | Appears when the Staging location is External. | Specify whether to purge the data files from the location automatically after the data is successfully loaded. | |||||

Return Failed Only Default Value: Deselected | Checkbox | N/A | Specify whether to return only the files that failed to load. | |||||

Force Default Value: Deselected | Checkbox | N/A | Specify if you want to load all files, regardless of whether they have been loaded previously and have not changed since they were loaded. | |||||

Truncate Columns Default Value: Deselected | Checkbox | N/A | Select this checkbox to truncate column values that are larger than the maximum column length in the table. | |||||

Validation Mode Default Value: None | Dropdown list | N/A | Select the validation mode for visually verifying the data before loading it. The available options are:

| |||||

Validation Errors Type Default Value: Full error | Dropdown list | Appears when you select NONE for Validation Mode. | Select one of the following methods for displaying the validation errors:

| |||||

Rows to Return Default Value: 0 | Integer | Appears when you select RETURN_n_ROWS, RETURN_ERRORS, or RETURN_ALL_ERRORS for Validation Mode. | Specify the number of rows to return. These rows are not loaded into the corresponding table. Instead, the data is validated and the results are returned based on the validation option specified, which can be one of the following values: RETURN_n_ROWS, RETURN_ERRORS, RETURN_ALL_ERRORS. | |||||

Snap Execution

| Dropdown list | N/A | Select one of the three modes in which the Snap executes. Available options are:

| |||||
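The Buffer size (MB) guidance in the table above comes down to simple arithmetic: if S3 accepts at most 10000 parts per upload, the buffer size caps how much data a single upload can hold. The following Python sketch works through that arithmetic. The function names are hypothetical, and the sketch assumes that one buffer corresponds to one uploaded part, which the field description suggests but does not state outright.

```python
import math

S3_MAX_PARTS = 10_000   # part limit quoted in the Buffer size description
MIN_BUFFER_MB = 5       # minimum Buffer size listed for the Snap
MAX_BUFFER_MB = 5_000   # maximum Buffer size listed for the Snap


def max_upload_mb(buffer_mb: int) -> int:
    """Largest upload (in MB) that fits into 10000 parts of buffer_mb each."""
    return buffer_mb * S3_MAX_PARTS


def min_buffer_mb(data_mb: float) -> int:
    """Smallest allowed Buffer size (in MB) that fits data_mb into 10000 parts."""
    needed = math.ceil(data_mb / S3_MAX_PARTS)
    buffer_mb = max(MIN_BUFFER_MB, needed)
    if buffer_mb > MAX_BUFFER_MB:
        raise ValueError("data does not fit in one upload at the maximum buffer size")
    return buffer_mb
```

Under this assumption, the default 10 MB buffer allows a single upload of up to 100000 MB, and a 250000 MB load would need a buffer of at least 25 MB.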

Instead of building multiple Snaps with interdependent DML queries, we recommend you use the Stored Procedure or the Multi Execute Snaps.

In a scenario where the downstream Snap depends on the data processed on an upstream database Bulk Load Snap, use the Script Snap to add delay for the data to be available.

For example, when performing create, insert, and delete operations sequentially in a pipeline, the Script Snap helps create a delay between the insert and delete operations. Otherwise, the delete operation may be triggered before the records are inserted into the table.
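One way to picture the delay described above is a polling loop that waits until the loaded data is visible before the next operation runs. The Python sketch below is generic and is not the Script Snap's API: `wait_until`, `is_ready`, and the timing parameters are hypothetical names used only for illustration, and in practice `is_ready` would be whatever check suits your data.

```python
import time
from typing import Callable


def wait_until(is_ready: Callable[[], bool],
               timeout_s: float = 60.0,
               interval_s: float = 2.0,
               sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll is_ready() until it returns True or timeout_s has been waited.

    Returns True if the condition was met, False on timeout.
    The sleep function is injectable so the loop can be tested without waiting.
    """
    waited = 0.0
    while True:
        if is_ready():
            return True
        if waited >= timeout_s:
            return False
        sleep(interval_s)
        waited += interval_s
```

A fixed sleep also works, but polling with a timeout avoids waiting longer than necessary and fails visibly if the data never arrives.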

Troubleshooting

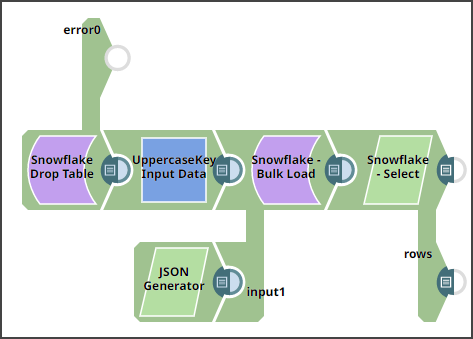

Error | Reason | Resolution |

|---|---|---|

Data can only be read from Google Cloud Storage (GCS) with the supplied account credentials (not written to it). | Snowflake Google Storage Database accounts do not support external staging when the Data source is the Input view. Data can only be read from GCS with the supplied account credentials (not written to it). | Use internal staging if the data source is the input view or change the data source to staged files for Google Storage external staging. |

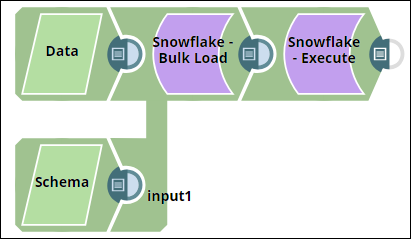

Examples

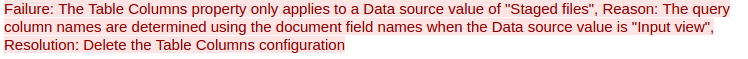

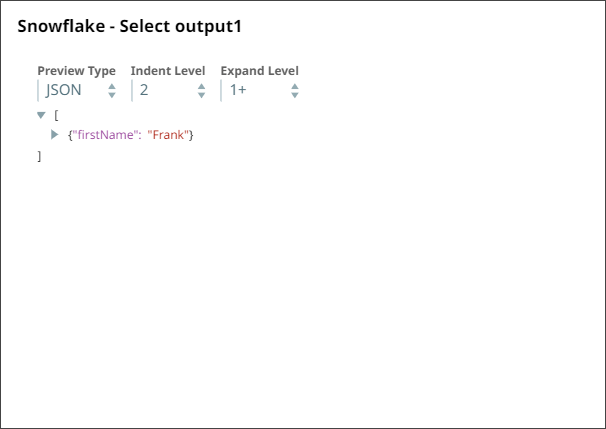

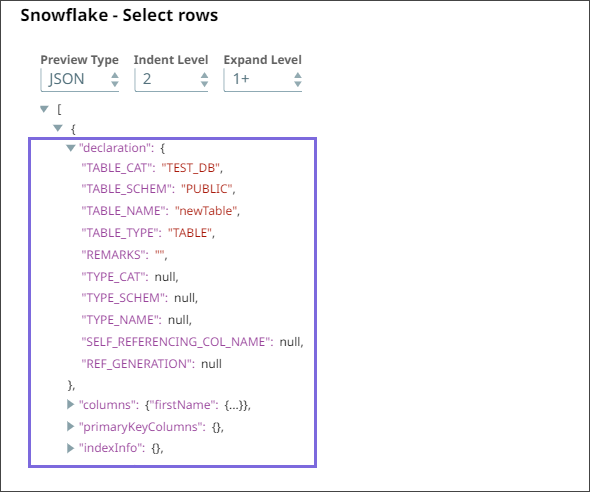

Provide metadata in the table using the second input view

This example Pipeline demonstrates how to provide metadata for the table definition through the second input view, to enable the Bulk Load Snap to create a table according to the definition.

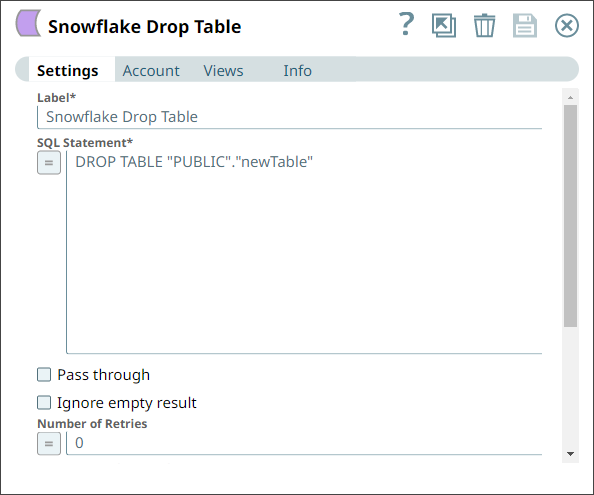

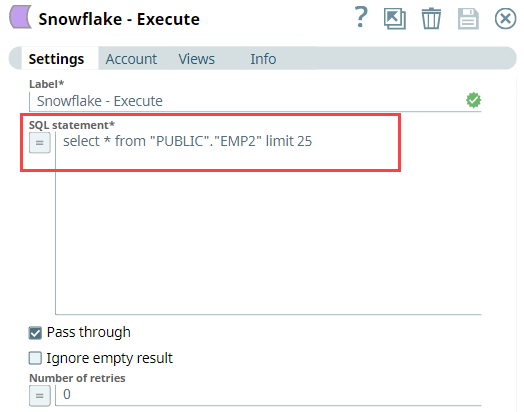

1. Configure the Snowflake Execute Snap as follows to drop the newTable with the DROP TABLE query.

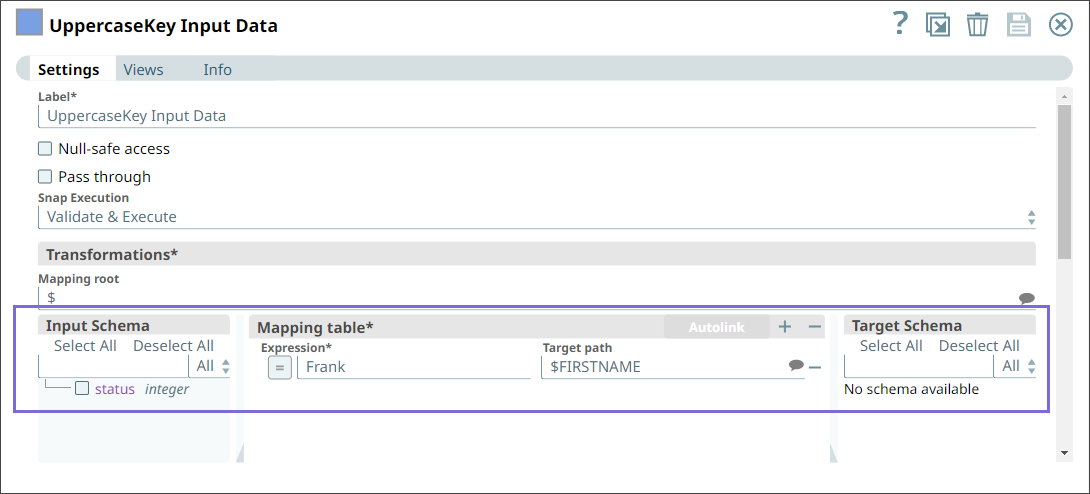

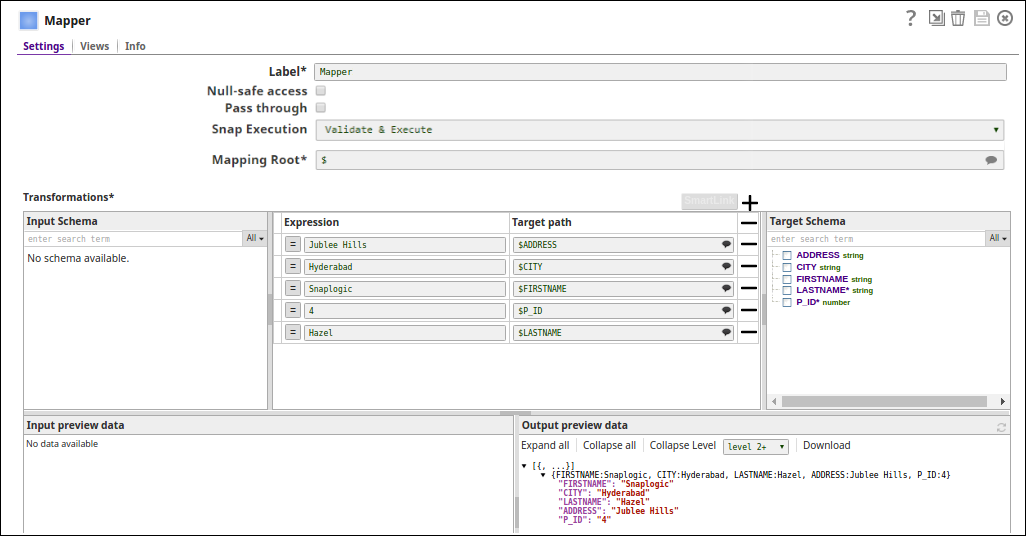

2. Configure the Mapper Snap as follows to pass the input data.

3. Configure the Snowflake Bulk Load Snap with two input views:

a. First input view: Input data from the upstream Mapper Snap.

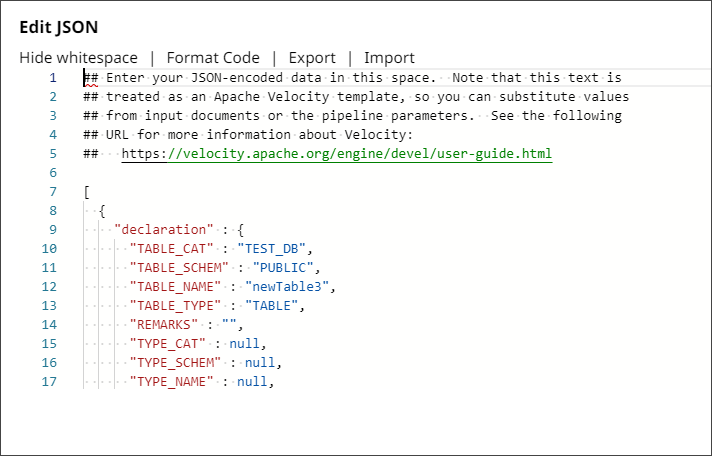

b. Second input view: Table metadata from JSON Generator. If the target table is not present, a table is created in the database based on the schema from the second input view.

JSON Generator Configuration: Table metadata to pass to the second input view.

4. Finally, configure the Snowflake Select Snap with two output views.

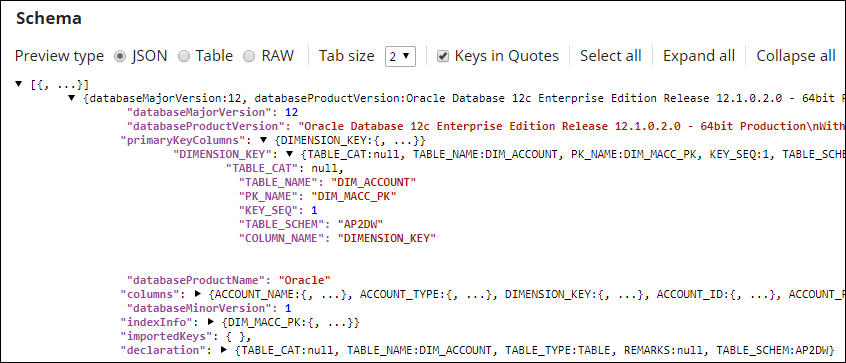

Output from the first output view. | Output from the second output view: The schema of the target table comes from the second output view (rows) of the Snowflake Select Snap. |

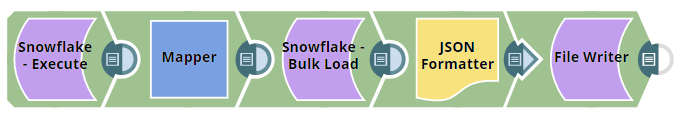

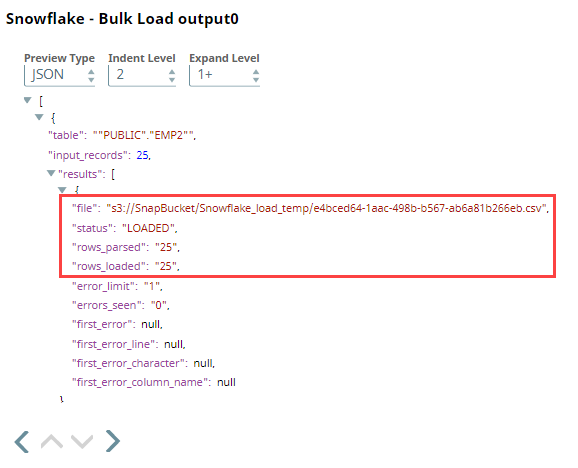

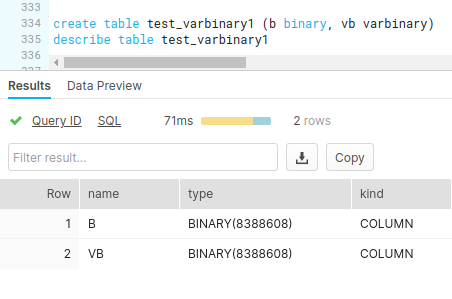

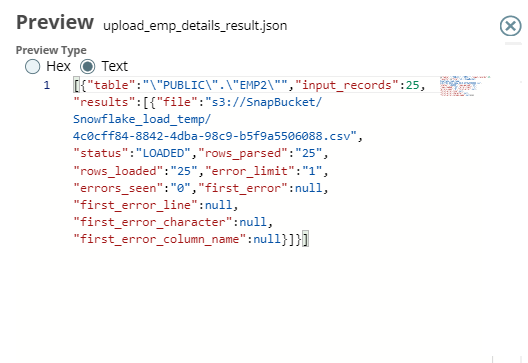

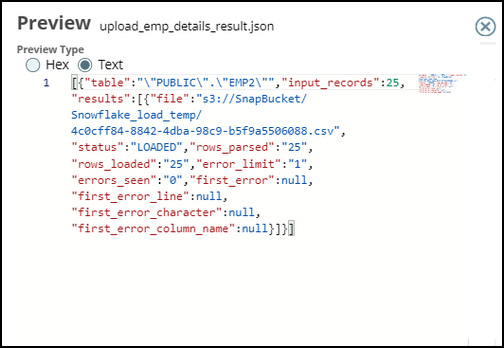

Load binary data into Snowflake

The following example Pipeline demonstrates how you can convert the staged data into binary data using the binary file format before loading it into the Snowflake database.

To begin with, configure the Snowflake Execute Snap with this query: select * from "PUBLIC"."EMP2" limit 25. This query reads 25 records from the Emp2 table.

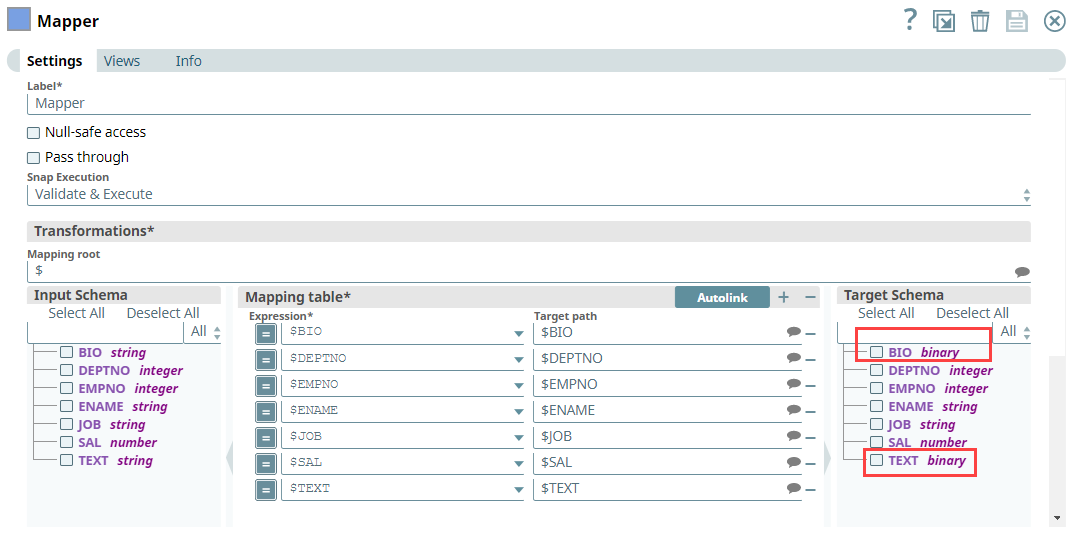

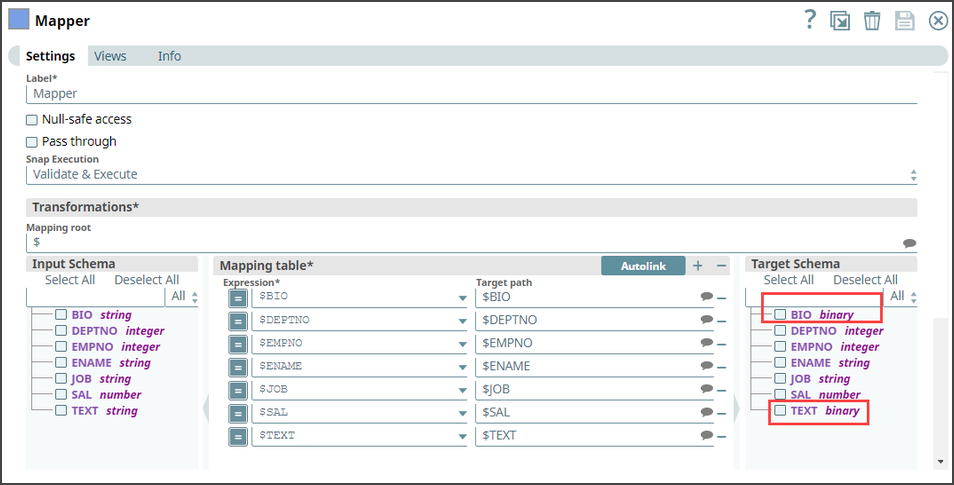

Next, configure the Mapper Snap with the output from the upstream Snap by mapping the employee details to the columns in the target table. Note that the Bio column is of the binary data type and the Text column is of the varbinary data type. Upon validation, the Mapper Snap passes the output with the given mappings (employee details) in the table.

Next,

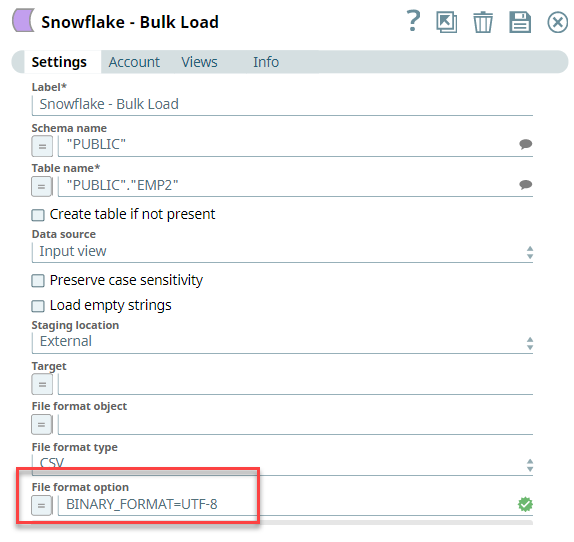

configure the Snowflake - Bulk Load Snap to load the records into Snowflake. We set the File format option as BINARY_FORMAT=UTF-8 to enable the Snap to encode the binary data before loading.

Upon validation, the Snap loads the database with 25 employee records.
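To make the role of the binary format option more concrete, the following Python sketch shows how the same text value would be written in a staged file under the three encodings that Snowflake's BINARY_FORMAT file format option accepts (HEX, BASE64, and UTF8). The function name is hypothetical, and the sketch only illustrates the encodings; it does not reproduce what the Snap does internally.

```python
import base64


def encode_for_binary_column(text: str, binary_format: str) -> str:
    """Return text as it would appear in a staged file for the given format."""
    raw = text.encode("utf-8")
    # Accept both "UTF8" and the "UTF-8" spelling used in the example above.
    fmt = binary_format.upper().replace("-", "")
    if fmt == "UTF8":
        return text                      # plain text, read as UTF-8 bytes
    if fmt == "HEX":
        return raw.hex().upper()         # two hex digits per byte
    if fmt == "BASE64":
        return base64.b64encode(raw).decode("ascii")
    raise ValueError(f"unsupported binary format: {binary_format}")
```

With the UTF-8 setting, the value in the file stays human-readable, while HEX and BASE64 represent the same bytes in encoded form.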

Output Preview | Data in Snowflake |

|---|

Finally, connect the JSON Formatter Snap to the Snowflake - Bulk Load Snap to transform the binary data to JSON format, and write this output to S3 using the File Writer Snap.

Transform data using a select query before loading into Snowflake

The following example Pipeline demonstrates how you can reorder the columns using the SELECT statement transform option before loading data into the Snowflake database. We use the Snowflake - Bulk Load Snap to accomplish this task.

Prerequisite: You must create an internal or external stage in Snowflake before you transform your data. This stage is used for loading data from source files into the tables of Snowflake database.

Snowflake supports both internal (Snowflake) and external (Microsoft Azure and AWS S3) stages for this transformation.

To begin with, we create a stage using a query in the following format:

"CREATE STAGE IF NOT EXISTS "+_stageName+" url='"+_s3Location+"' CREDENTIALS = (AWS_KEY_ID='string' AWS_SECRET_KEY='string') "

This query creates an external stage in Snowflake that points to an S3 location with AWS credentials (Key ID and Secret Key).

We recommend that you do not use a temporary stage, to prevent issues while loading and transforming your data.

Now,

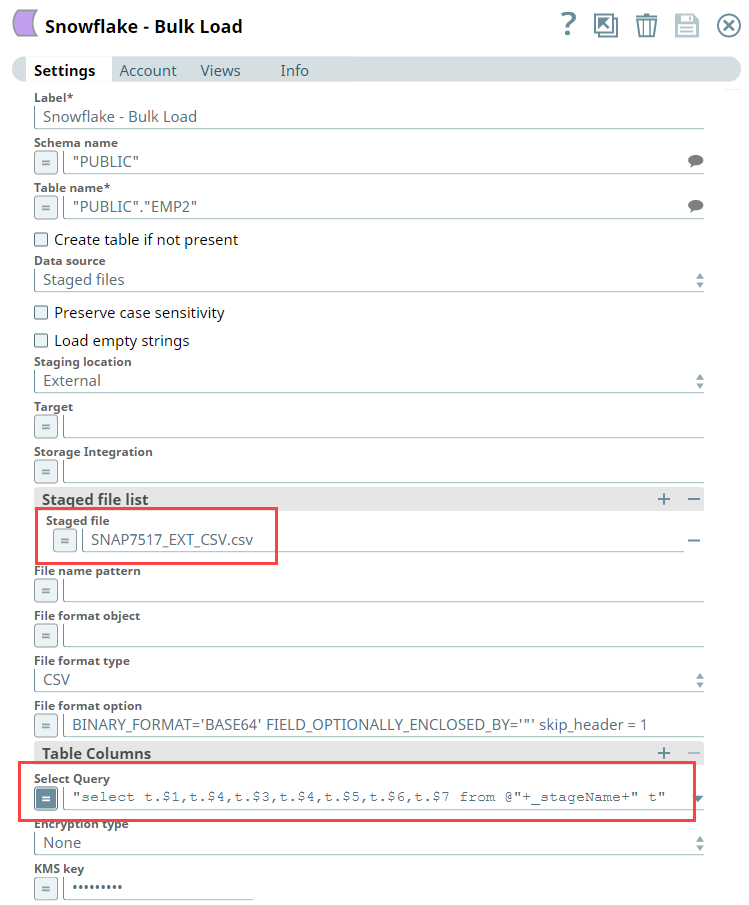

add the Snowflake - Bulk Load Snap to the canvas and configure it to transform the data in the staged file SNAP7517_EXT_CSV.csv by providing the following query in the Select Query field:

"select t.$1,t.$4,t.$3,t.$4,t.$5,t.$6,t.$7 from @"+_stageName+" t"

You must provide the stage name along with the schema name in the Select Query; otherwise, the Snap displays an error. For instance:

"SELECT t.$1,t.$4,t.$3,t.$4,t.$5,t.$6,t.$7 from @mys3stage t" displays an error.

"SELECT t.$1,t.$4,t.$3,t.$4,t.$5,t.$6,t.$7 from @<Schema Name>.<stagename> t" executes correctly.
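The schema-qualification rule above is easy to enforce if the Select Query is assembled programmatically. The following Python sketch builds a query of the same shape from a schema name, a stage name, and a list of column positions, and refuses to build one without a schema. The function name and the sample values (`PUBLIC`, `mys3stage`) are hypothetical and used only for illustration.

```python
from typing import Sequence


def build_select_query(schema: str, stage: str,
                       positions: Sequence[int], alias: str = "t") -> str:
    """Build a transform query that reads positional columns from a stage."""
    if not schema or not stage:
        raise ValueError("both the schema name and the stage name are required")
    if not positions:
        raise ValueError("at least one column position is required")
    # Positional columns in a staged file are referenced as <alias>.$<n>.
    columns = ",".join(f"{alias}.${p}" for p in positions)
    return f"select {columns} from @{schema}.{stage} {alias}"
```

For example, `build_select_query("PUBLIC", "mys3stage", [1, 4, 3])` returns `select t.$1,t.$4,t.$3 from @PUBLIC.mys3stage t`.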

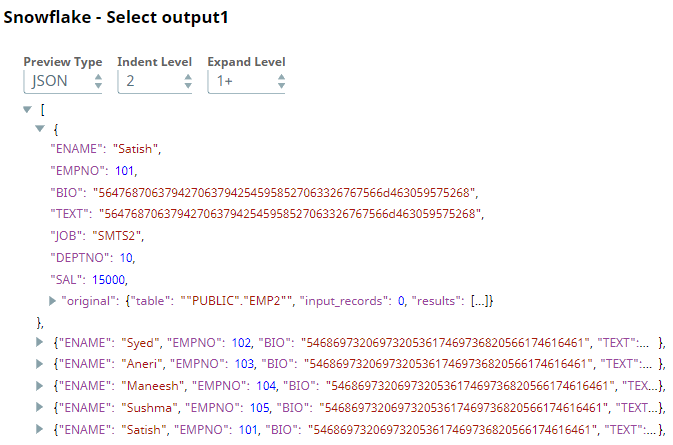

Next, connect a Snowflake Select Snap with the Snowflake - Bulk Load Snap to select the data from the Snowflake database. Upon validation, you can view the transformed data in the output view.

| Expand | ||

|---|---|---|

|

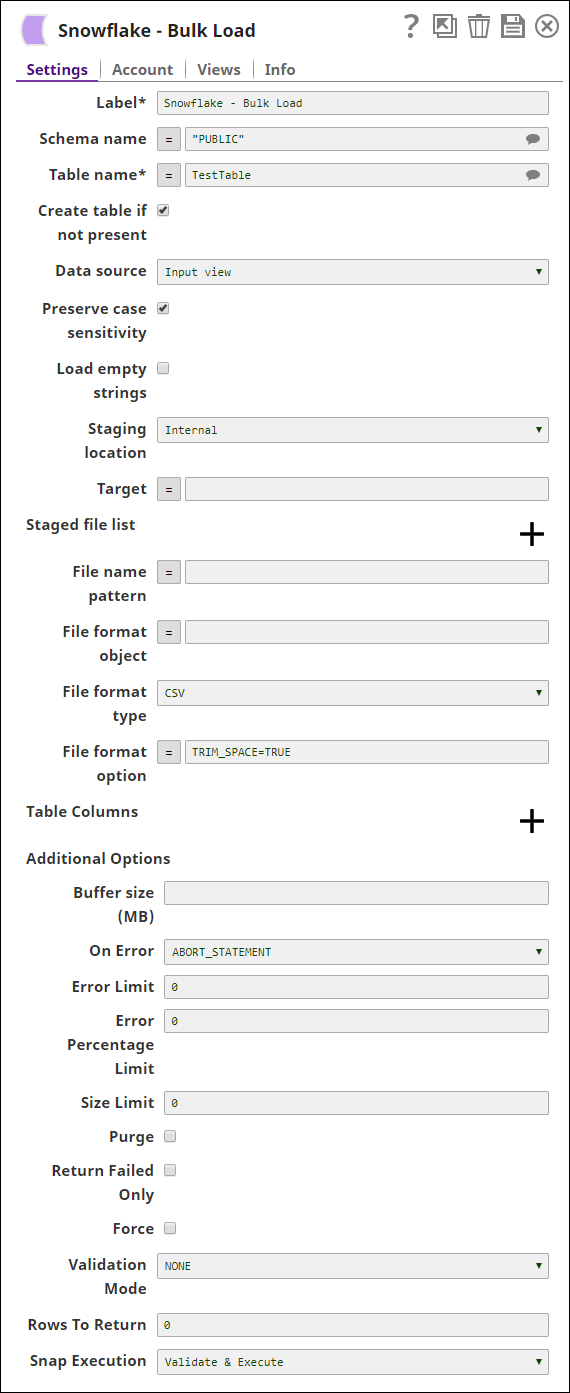

You can remove empty spaces from input documents. When you select Input view as Data Source, enter TRIM_SPACE=TRUE in the File Format Option field to remove empty spaces, if any. |

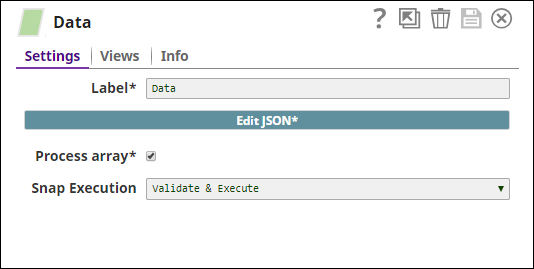

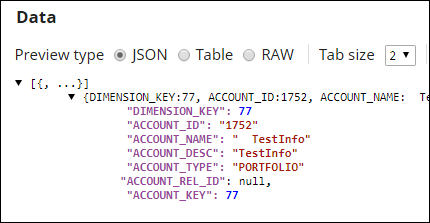

Download this pipeline. In this example, the Pipeline uses the following Snaps:

Data streams from your database source, and you do not necessarily need a Snap to provide input documents. In this example, however, we use the JSON Generator Snap to provide the input document. Input: Output: As you can see, the value listed against the key ACCOUNT_NAME has empty spaces in it.

Input: |

Notice that the File Format Option is TRIM_SPACE=TRUE. Output:

Table schema is taken from your database source, and you do not necessarily need a Schema Snap to provide the table schema. In this example, however, we use the JSON Generator Snap to provide the table schema. Input: Output:

Input: Output: As you can see, the data no longer contains any spaces. |

| Expand | ||

|---|---|---|

| ||

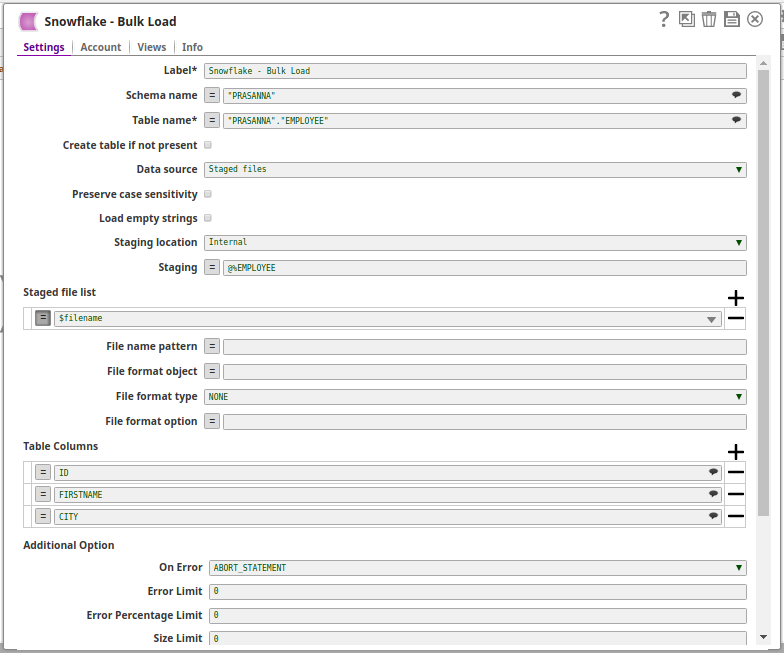

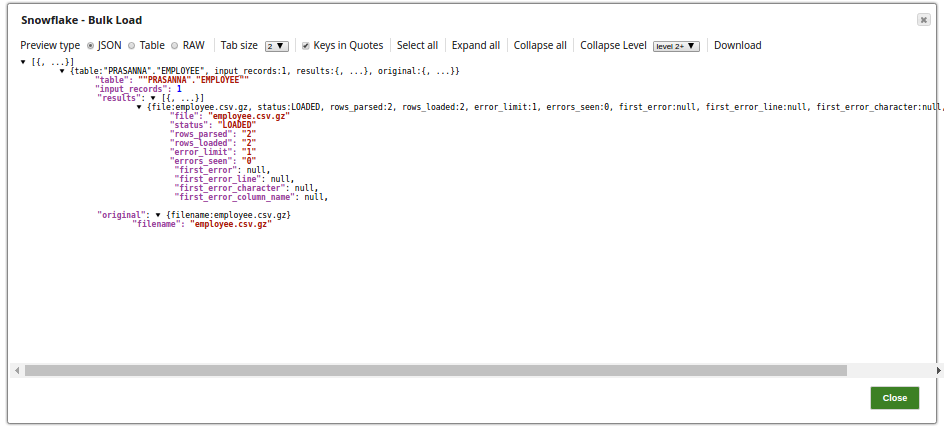

In this pipeline, the Snowflake Bulk Load Snap loads the records from a staged file 'employee.csv content' into a table on Snowflake. The staged file 'employee.csv content' is passed via the upstream Snap. The Snowflake Bulk Load Snap is configured with Data source as Staged files and with the Table Columns ID, FIRSTNAME, and CITY, to be loaded into the table "PRASANNA"."EMPLOYEE" on Snowflake. |

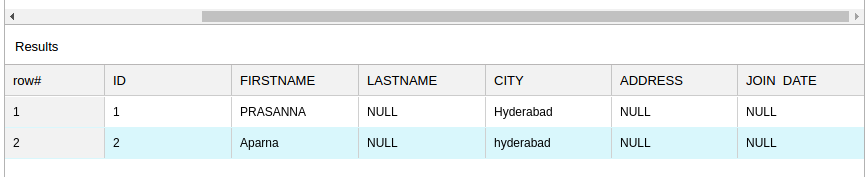

The successful execution of the pipeline displays the below output preview. If the 'employee.csv content' (staged file) has the details 1,PRASANNA,Hyderabad and the Table Columns added are ID, FIRSTNAME, and CITY, then the table "PRASANNA"."EMPLOYEE" on Snowflake is as below: |

The Snowflake table "PRASANNA"."EMPLOYEE"

Note that the columns ID, FIRSTNAME, and CITY are populated as provided, and the columns LASTNAME, ADDRESS, and JOIN DATE are null. |
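The effect of the Table Columns setting in this example can be sketched in a few lines of Python: values from the staged row are assigned to the listed columns in order, and every other column in the table is left null. The function name is hypothetical, and the column order of the target table is an assumption made for illustration.

```python
import csv
import io
from typing import Dict, List, Optional

# Column order of the target table is assumed for this sketch.
TABLE_COLUMNS = ["ID", "FIRSTNAME", "LASTNAME", "ADDRESS", "CITY", "JOIN DATE"]


def map_row(line: str, load_columns: List[str],
            table_columns: List[str]) -> Dict[str, Optional[str]]:
    """Map one CSV line onto load_columns; other table columns stay None."""
    unknown = [c for c in load_columns if c not in table_columns]
    if unknown:
        raise ValueError(f"columns not in table: {unknown}")
    values = next(csv.reader(io.StringIO(line)))
    if len(values) != len(load_columns):
        raise ValueError("number of values does not match Table Columns")
    row: Dict[str, Optional[str]] = dict.fromkeys(table_columns)  # all None
    row.update(zip(load_columns, values))
    return row
```

Calling `map_row("1,PRASANNA,Hyderabad", ["ID", "FIRSTNAME", "CITY"], TABLE_COLUMNS)` fills ID, FIRSTNAME, and CITY and leaves LASTNAME, ADDRESS, and JOIN DATE as `None`, mirroring the nulls described above.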

| Expand | ||

|---|---|---|

| ||

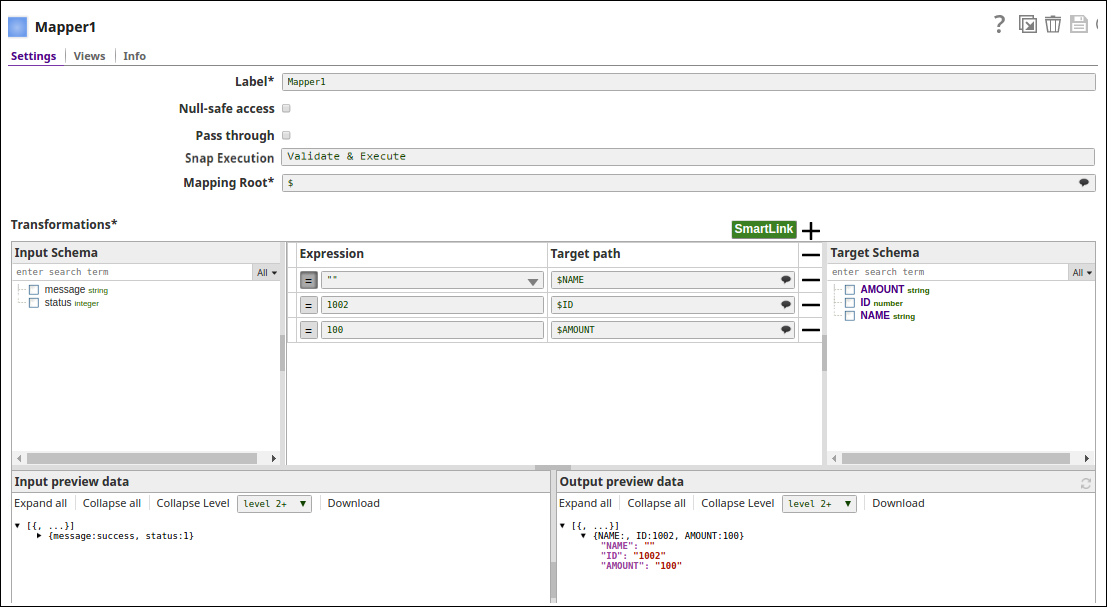

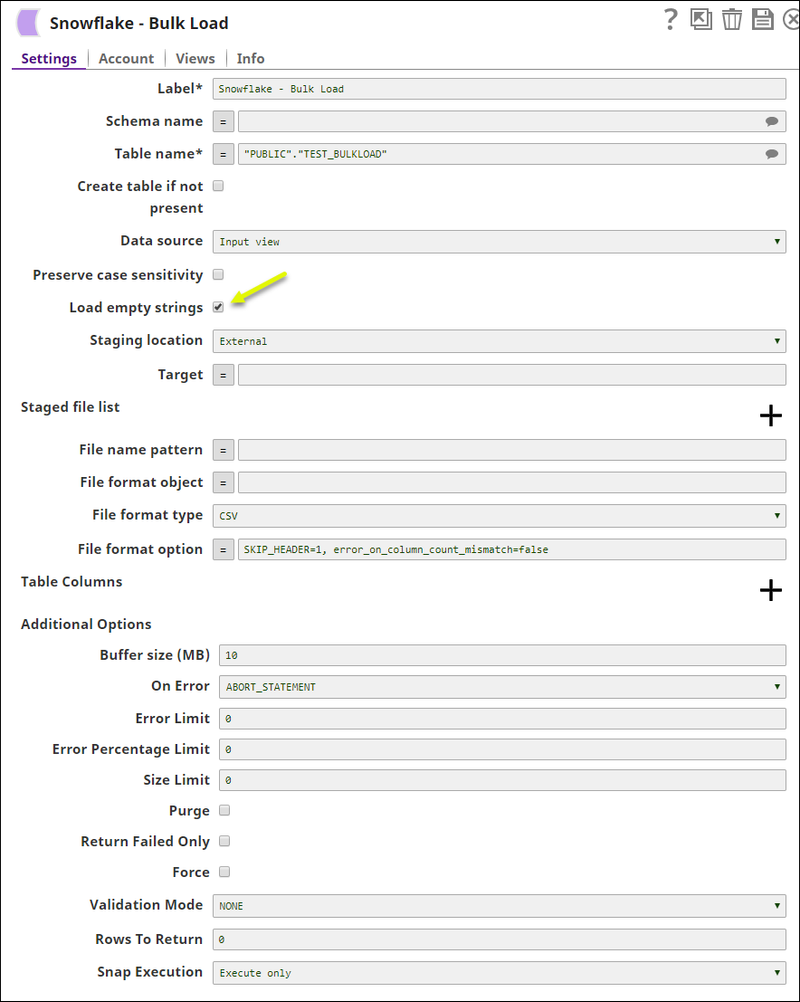

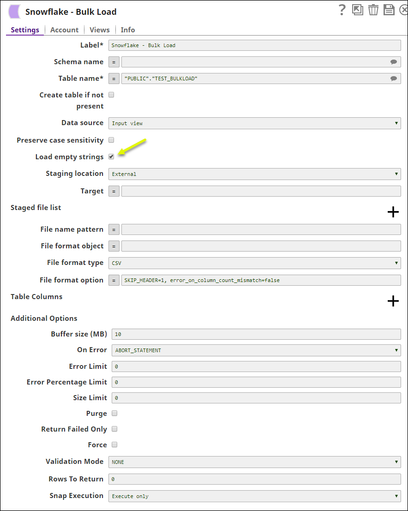

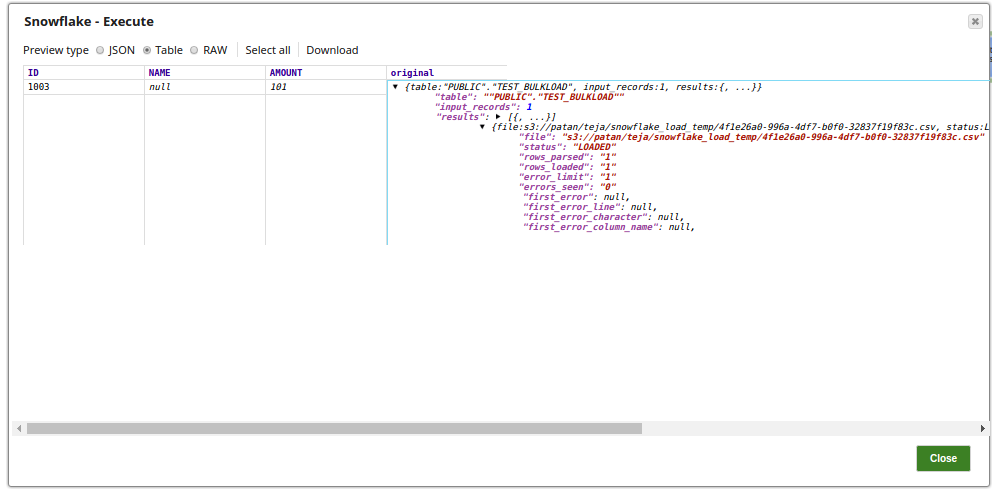

In this pipeline, the Snowflake Bulk Load Snap loads the input document with empty string values as empty strings to the string-type fields. The values to be updated are passed via the upstream Mapper Snap and the Snowflake Execute Snap displays the output view of the table records. The Mapper Snap passes the values to be updated to the table on Snowflake: The Snowflake Bulk Load Snap with Load empty strings property selected: |

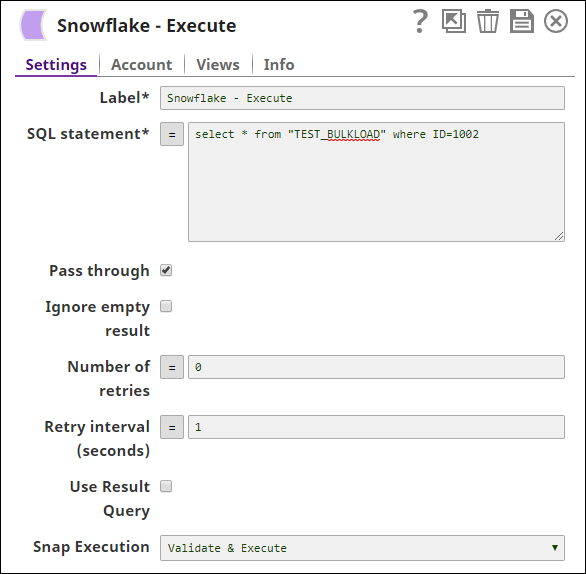

The Snowflake Execute Snap runs the query to select the table 'TEST BULKLOAD' for the 'ID=1002': The successful execution of the pipeline displays the below output preview: |

The Name field is left empty, which means that empty strings are loaded as empty strings. The below screenshot displays the output preview (on a table with an ID=1003), wherein the Load empty strings property is not selected, and the Name field has a value 'Null' in it: |

| Expand | ||

|---|---|---|

| ||

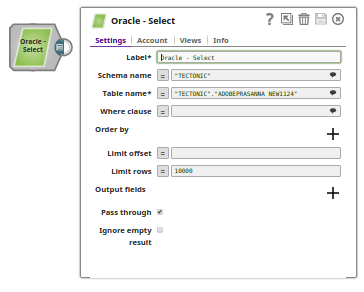

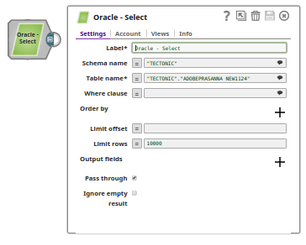

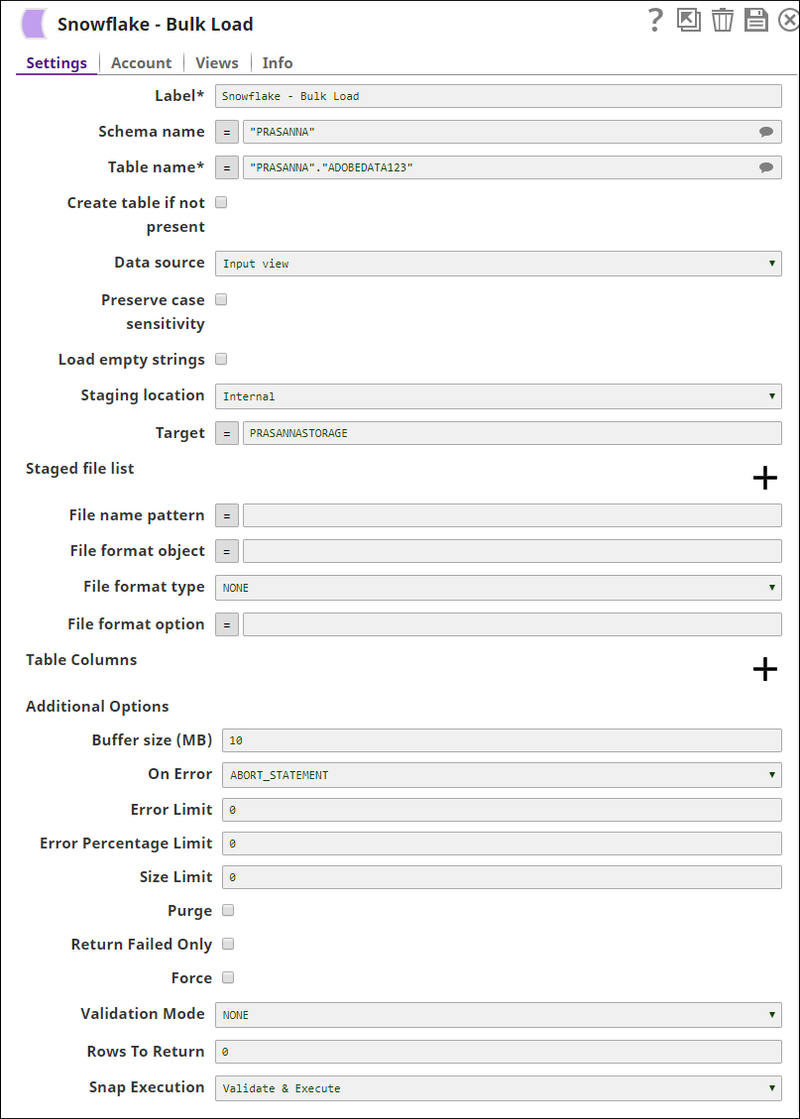

In this example, the data is loaded from the table "TECTONIC"."ADOBEPRASANNA_NEW1124" using Oracle Select Snap to the target table "PRASANNA"."ADOBEDATA123" using Snowflake Bulk Load Snap. The Pipeline: The Oracle Select Snap gets records in the table "TECTONIC"."ADOBEPRASANNA_NEW1124" and passes them to the Snowflake Bulk Load Snap: |

The Snowflake Bulk Load Snap loads records to table "PRASANNA"."ADOBEDATA123":

The output of the Snowflake Bulk Load Snap after executing the pipeline: |

| Expand | ||

|---|---|---|

| ||

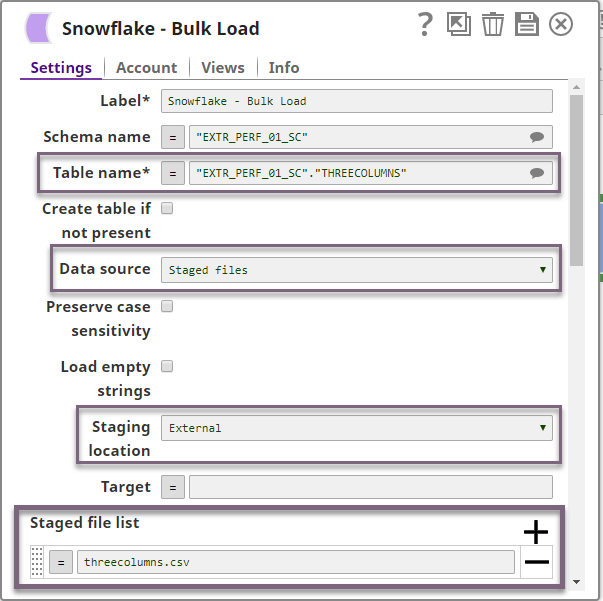

This example demonstrates how you can use the Snowflake Bulk Load Snap to load files from an external staging location such as S3. It further shows the configuration required when loading numeric data. Download this Pipeline. |

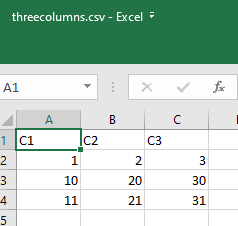

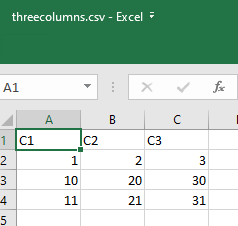

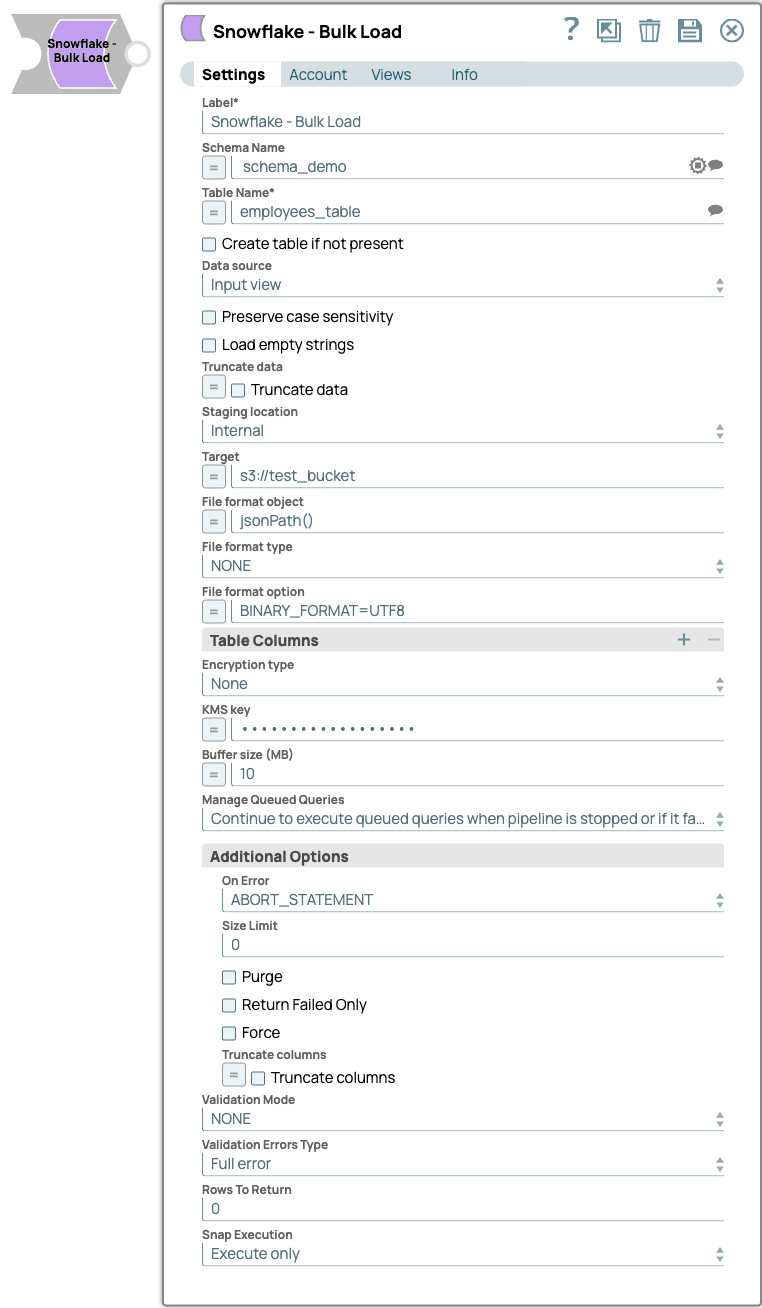

The Snowflake Bulk Load Snap has a minimum of one Input view, which is useful when the data source is Input view. Even though the Snap does not require any input from an upstream Snap within the scope of this Pipeline, the view cannot be disabled. Data is to be loaded from the staged file threecolumns.csv, present in an S3 folder, into the table "EXTR_PERF_01_SC"."THREECOLUMNS". Below is a screenshot of the data in threecolumns.csv: |

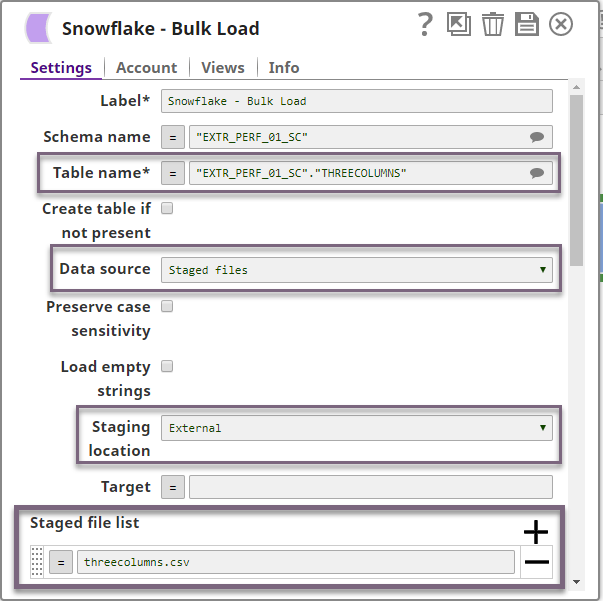

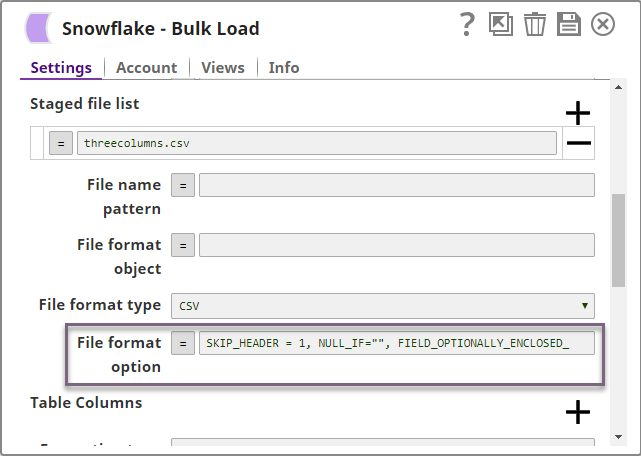

The Snowflake Bulk Load Snap is configured accordingly as follows: |

Furthermore, since this data has numeric values, the Snowflake Bulk Load Snap is configured with the following file format options to handle any string/NULL values that may be present in the dataset:

See Format Type Options for a detailed explanation of the above file format options. |

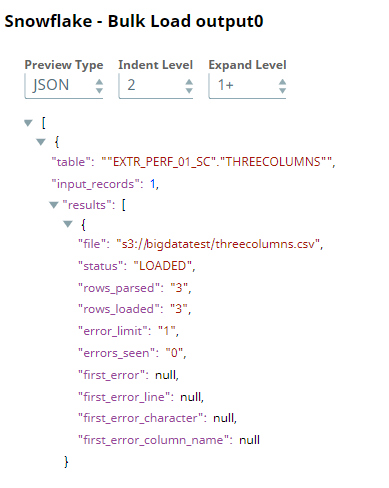

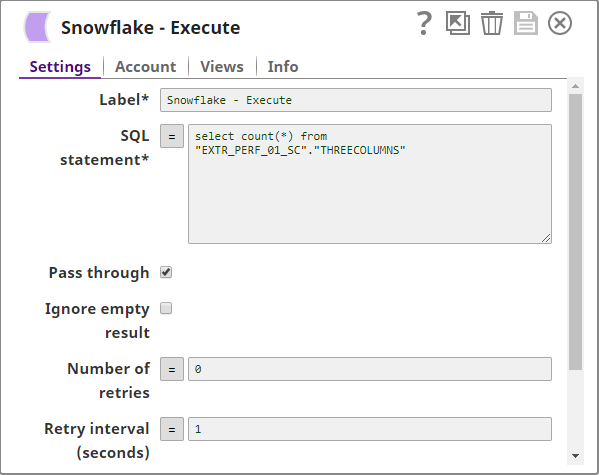

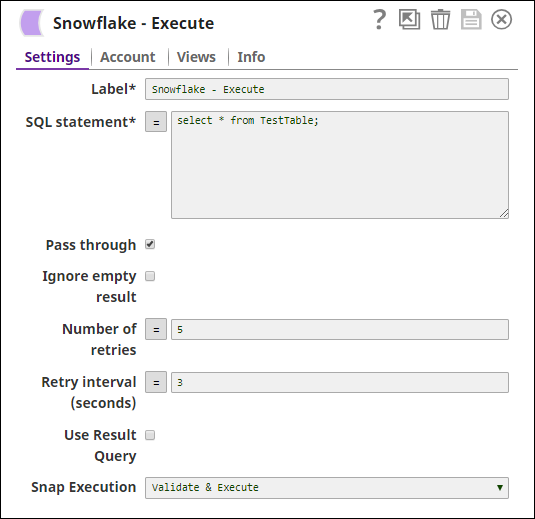

Upon execution, the Snowflake Bulk Load Snap loads three rows: To confirm that three rows were loaded, we use a Snowflake Execute Snap configured to count the number of rows in the target table: |

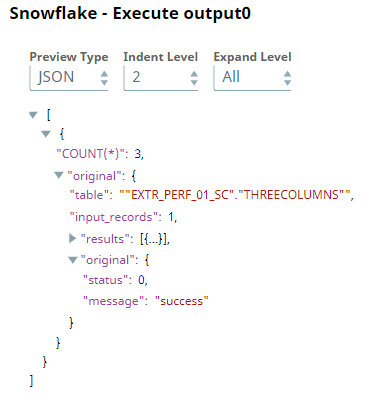

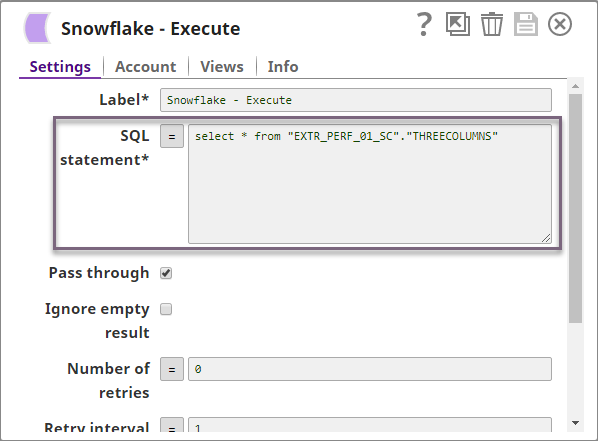

Below is a preview of the output from the Snowflake Execute Snap. We can see that the count is 3, thereby confirming a successful bulk load. You can also modify the SELECT query in the Snowflake Execute Snap to read the data in the table and thus verify the data loaded into the target table.

|

Downloads


Snap Pack History


Related Content
