In this article

Overview

Use this account type to connect ELT Snaps with data sources that use ELT Database accounts. This account type enables you to write transformed data to target databases hosted in the following cloud locations. The JDBC URL you define for your target database indicates the respective cloud location where the database is hosted.

| Target Database | Supported Cloud Location | Cloud Location Indicated in JDBC URL |

|---|---|---|

| Snowflake | AWS | jdbc:snowflake://<account_name>.snowflakecomputing.com |

| Microsoft Azure | jdbc:snowflake://<account_name>.<region>.azure.snowflakecomputing.com | |

| Google Cloud Platform (GCP) | jdbc:snowflake://<account_name>.<region>.gcp.snowflakecomputing.com | |

| Redshift | AWS | jdbc:redshift://<redshift-cluster-name>.<region>.redshift.amazonaws.com |

| Azure Synapse | Microsoft Azure | jdbc:sqlserver://<yourserver>.database.windows.net |

The Snap Pack does not support mixed accounts from different database-types in the same Pipeline. For example, a Pipeline in which some Snaps are connecting to the Snowflake database cannot have other Snaps connecting to the Redshift database.

Prerequisites

A valid Snowflake/Redshift/Azure Synapse database account.

Limitations

- Supports connecting only to the Snowflake, Redshift, and Azure Synapse databases.

- In case of Azure Synapse database, this account supports:

- Only provisioned clusters of Azure Synapse, but not in Serverless mode.

- The following external locations:

- Blob Storage: https://.blob.core.windows.net//

- ADLS Gen2: https://.dfs.core.windows.net//

Known Issue

Page lookup error: page "Configuring Spark SQL 2.x Accounts" not found.

If you're experiencing issues please see our Troubleshooting Guide.

Account Settings

| Parameter | Data Type | Description | Default Value | Example |

|---|---|---|---|---|

| Label | String | Required. Unique user-provided label for the account. | N/A | ELT Account |

| Account Properties | Required. Use this field set to configure the information required to establish a JDBC connection with the account. This field set consists of the following fields:

| |||

| Database Type | String | Required. Select the target data warehouse into which the queries must be loaded. Available options are:

| N/A | Snowflake |

| Download JDBC Driver Automatically | Check box | Select this check box to allow the Snap account to download the certified JDBC Driver based on the Database Type you have selected. The following fields are disabled when this check box is selected.

To use a JDBC Driver of your choice, deselect this check box, upload (to SLDB), and choose the required JAR files in the JDBC JARs: JDBC Driver field. Use of Custom JDBC JAR version You can use different JAR file versions than those listed here. We recommend that you use the listed JAR file versions. See the latest Release Notes for more details. | Not Selected | Selected |

| JDBC JARs: JDBC Driver | String | Required when you do not select the Download JDBC Driver Automatically check box. Upload the JDBC driver and other JAR files that you want to use into SLDB. Click to add a new row. Add each JDBC JAR file in a separate row. See Downloading the JDBC Driver for more information about JDBC drivers and downloading the appropriate driver for your account. | N/A | snowflake-jdbc-3.9.1.jar |

| JDBC Driver Class | String | Required when you do not select the Download JDBC Driver Automatically check box. Specify the driver class to use for your application. We recommend that you use the following classes to suit your database type as other classes and methods may change due to future enhancements:

| N/A | net.snowflake.client.jdbc.SnowflakeDriver |

| JDBC URL | URL | Required. Enter the JDBC driver connection string that you want to use, based on the Database you are connecting to. Use the appropriate syntax provided below.

Alternatively, for Redshift and Azure Synapse, you can make use of the Username, Password, and Database Name fields, along with the Advanced Properties > URL Properties field set to provide the parameters required for building your JDBC URL. See Passing your JDBC URL for more information. Avoid passing Password inside the JDBC URL If you specify the password inside the JDBC URL, it is saved as it is and is not encrypted. We recommend passing your password using the Password field provided, instead, to ensure that your password is encrypted. | N/A | jdbc:snowflake://xy12345.us-east-2. jdbc:snowflake://xy12345.east-us-2. |

| Username | String | Required. Enter the username provided for your database account. | N/A | Bigdatasnaplogic |

| Password | String | Required. Enter the password for the username specified above. | N/A | <Encrypted> |

| Warehouse | String | Required. Activates when you select the Snowflake option in the Database Type field. Enter the name of the Snowflake warehouse that you want to use. | N/A | 12345abcdefghikj1km2345no |

| Default Database Name | String | Required. Enter the name of the database to use by default. This database is used if you do not specify one in the ELT Select, ELT Insert-Select, or ELT Merge Into Snaps. | N/A | EMPLOYEEDB |

| Source Location | String | Required. Activates when you select the Snowflake or Redshift option in the Database Type field. Select the source data warehouse from which to load data into the target database. You must configure this field if you want to use the ELT Load Snap. Available options are:

| None | S3 Azure Google Cloud Storage |

| Authentication | String | Activates when you select S3, Azure or Google Cloud Storage in the Source Location field. Select the authentication method to use for the loaded data. Available options are:

| Source Location Credentials | Storage Integration |

| Storage Integration | String | Activates when you select the S3 or Google Cloud Storage in the Source Location field and Storage Integration option in the Authentication field. Enter the name of the storage integration in your Snowflake database. Navigate to one of the following links for more information on setting up your Storage Integration in Snowflake. Storage integration is not applicable to the Redshift and Azure Synapse databases. | N/A | my_s3_integration my_azure_integration my_sf_gcs_integration |

| IAM Role | String | Activates when you select the S3 option in the Source Location field and IAM Role option in the Authentication field. Enter the IAM role associated with the target Redshift cluster. See Create an IAM Role for details on creating and associating IAM Role with your Redshift cluster. Applicable to the Redshift database only. | N/A | myiamrole |

| Access Key | String | Activates when you select the S3 option in the Source Location field and Source Location Credentials option in the Authentication field. Enter the access key ID associated with your AWS S3 account. | N/A | ABCDEFGHIJKL1MNOPQRS |

| Secret Key | String | Activates when you select the S3 option in the Source Location field and Source Location Credentials option in the Authentication field. Enter the client secret key associated with your AWS S3 account. | N/A | aBcdeFGhiJKLM/N1OPQRS/t UvWxyZABCDEFGHIJKLMN |

| SAS Token | String | Activates when you select the Azure option in the Source Location field and Source Location Credentials option in the Authentication field. Enter the SAS token part of the SAS URI associated with your Azure storage account. See Getting Started with SAS for details. | N/A | ?sv=2020-08-05&st=2020-08-29T22%3A18%3A26Z&se=2020- 08-30T02%3A23%3A26Z&sr=b& sp=rw&sip=198.1.2.60-198.1.2.70&spr=https&sig= A%1DEFGH1Ijk2Lm3noI3OlW TjEg2tYkboXr1P9ZUXDtkk%3D |

| Storage Account | String | Enter the name of your Azure storage account. Not applicable to the Redshift database. | N/A | employeedata |

| S3 Bucket | String | Activates when you select the S3 option in the Source Location field. Enter the name of the bucket from which to load the data. | N/A | employeedata |

| S3 Bucket Region | String | Activates when you select Redshift as the Database Type and S3 option in the Source Location field. Select the region of your Redshift cluster if it is different from the region of the S3 bucket. Available options are:

| Default | us-east-1 us-east-2 |

| S3 Folder | String | Activates when you select the S3 option in the Source Location field. Enter the name of the folder in the S3 bucket specified above where the source files are located. | N/A | hrdepartment |

| External Location (For Azure Synapse only) | Drop-down list | Activates when you select the Azure Synapse option in the Database Type field. Select the source data location from which to load data into the target database. You must configure this field if you want to use the ELT Load Snap. Available options are:

| Blob Storage | Azure Data Lake Gen2 |

| External Storage Endpoint (For Azure Synapse only) | String | Activates when you select the Azure Synapse option in the Database Type field. Enter the end point path for the selected external location. You must configure this field if you want to use the ELT Load Snap. | N/A | .blob.core.windows.net (for Blob Storage) .dfs.core.windows.net (for ADLS Gen2) |

| Storage Account (For Azure Synapse only) | String | Activates when you select the Azure Synapse option in the Database Type field. Enter your Account name to access the selected Azure external storage location. You must configure this field if you want to use the ELT Load Snap. | N/A | adlsgen2v02 |

| Azure Container | String | Activates when you select the Azure Synapse option in the Database Type field. Also when you choose to use Azure as Source Location with the Snowflake Database Type. Enter the name of the container in the Azure storage account. Not applicable to the Redshift database. | N/A | hrdepartment |

| Azure Folder | String | Activates when you select the Azure Synapse option in the Database Type field. Also when you choose to use Azure as Source Location with the Snowflake Database Type. Enter the relative path for the folder within the container specified above. Leave this field blank to use all the folders in the specified container. Not applicable to the Redshift database. | N/A | salaries |

| Google Cloud Storage (GCS) Bucket | String | Activates when you select Google Cloud Storage in the Source Location field and Storage Integration option in the Authentication field. Enter the name of the GCS bucket from which to load the data to your Snowflake database. | N/A | elt_gcs_bucket_1 |

| Google Cloud Storage (GCS) Folder | String | Activates when you select Google Cloud Storage in the Source Location field and Storage Integration option in the Authentication field. Enter the name of the folder in the GCS bucket where the source files are located. | N/A | elt_gcs_bucket_1_CSV_Files |

| Azure Auth Type (For Azure Synapse only) | Drop-down list | Activates when you select the Azure Synapse option in the Database Type field. Select the authentication type to use for accessing the selected Azure external storage location. Then, provide the values for Azure Identity, Azure Secret, Storage Key fields for the selected authentication type. See COPY INTO (Transact-SQL) in Microsoft Docs for more information on these authentication types. Available options are:

| Storage Account Key | AAD User |

| Azure Identity (For Azure Synapse only) | String | Activates when you select an option other than AAD User in the Azure Auth Type field. Enter the Azure Identity (the constant value) corresponding to the selected authentication type. See COPY INTO (Transact-SQL) in Microsoft Docs for more information on the suggested values for this field. | N/A | Storage Account Key |

| Azure Secret (For Azure Synapse only) | String | Activates when you select Storage Account Key, Shared Access Signature, or Service Principals option in the Azure Auth Type field. Enter the Azure Secret corresponding to the selected authentication type. See COPY INTO (Transact-SQL) in Microsoft Docs for more information on the expected values for this field. | N/A | UvhVF65Bukk9hVF65BuUvhVF |

| Storage Key (For Azure Synapse only) | String | Activates when you select Shared Access Signature, Managed Identity, or Service Principals option in the Azure Auth Type field. Enter the storage access key value corresponding to the Azure account mentioned in Storage Account field. See COPY INTO (Transact-SQL) in Microsoft Docs for more information on the expected values for this field. This field is needed only when you want to define the File Name Pattern in the ELT Load Snap to locate and read the file/s from the Azure Folder path. Else, leave it blank. | N/A | ufv!befDIuf#fnb$KH&_hweuf |

| Client ID | String | Activates when you select AAD User option in the Azure Auth Type field. Enter the Client ID of the application created in the Azure Active Directory portal - https://portal.azure.com. This field is needed only when you want to define the File Name Pattern in the ELT Load Snap to locate and read the file/s from the Azure Folder path. Else, leave it blank. | N/A | hewr42ap-32jd-pd95-ms38-b342bnasdh80 |

| Client Secret | String | Activates when you select AAD User option in the Azure Auth Type field. Enter the Client ID of the application created in the Azure Active Directory portal - https://portal.azure.com. This field is needed only when you want to define the File Name Pattern in the ELT Load Snap to locate and read the file/s from the Azure Folder path. Else, leave it blank. | N/A | jhweufv!befDIufwb*dsfwujfnb$KH&_ |

| Tenant ID | String | Activates when you select AAD User option in the Azure Auth Type field. Enter the Tenant ID of the application created in the Azure Active Directory portal - https://portal.azure.com. This field is needed only when you want to define the File Name Pattern in the ELT Load Snap to locate and read the file/s from the Azure Folder path. Else, leave it blank. | N/A | b342bnas-he38-apms-dhjd-pd95wr423280 |

| Advanced Properties | Other parameters that you want to specify as URL properties. See the following resources for a list of parameters that can be specified in this field set.

In case of Snowflake, DO NOT configure parameters that are already present in the Account Properties field set. For example:

This field set consists of the following fields:

| |||

| URL Properties | String/Integer | The account parameter's name and its corresponding value. Click + to add more rows. Add each URL property-value pair in a separate row. Specify the name of the parameter in the URL Property Name field and its value in the URL Property Value field. | N/A | MAX_CONCURRENCY_LEVEL: 3 |

Click Validate after entering the required details to ensure that all fields have been filled accurately. Click Apply to save the settings.

Passing your JDBC URL

Order of Precedence

The parameter values for the URL used to connect to your target database are governed by the following order of precedence:

- JDBC URL field

- Snap Properties (including Advanced Properties)

- Default values

Default Properties set internally

The following properties are passed in the URL internally, by default:

| Target Database | Parameter | Value |

|---|---|---|

| Snowflake | None | None |

| Redshift | tcpKeepAlive | true |

| Azure Synapse | encrypt | true |

trustServerCertificate | false | |

hostNameInCertificate | *.database.windows.net | |

loginTimeout | 300 |

Specific Scenarios

When setting default values for the Database Name, Username, and Password fields:

As a best practice, ensure that the Default Database Name provided in the Snap's properties and the database name in the JDBC URL field match.

Else, make sure that both the database names exist.

However, the username and password from the JDBC URL take precedence over the values provided in the respective fields.

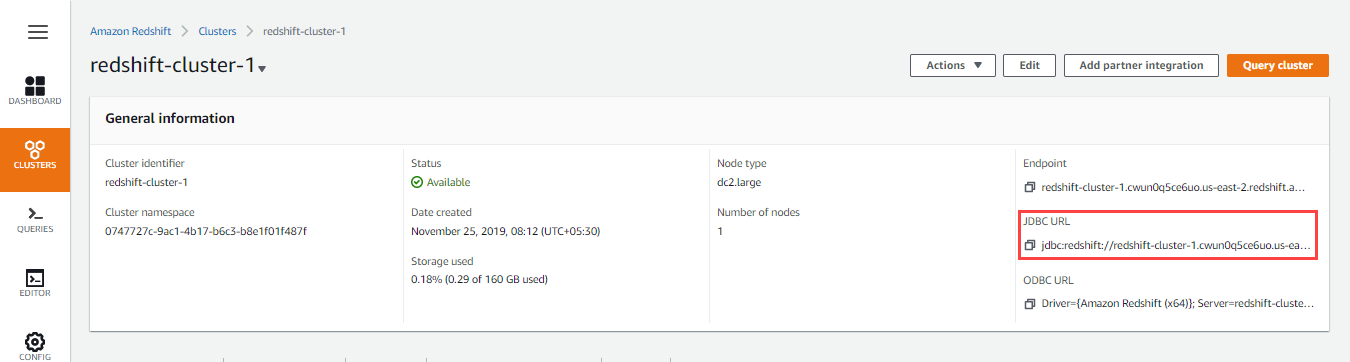

Locating the JDBC URL for Redshift database

Follow the steps below to locate and use the JDBC URL for your Redshift database instance.

- Log into your AWS Console.

- Click Amazon Redshift.

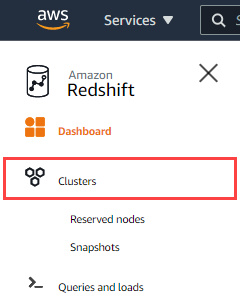

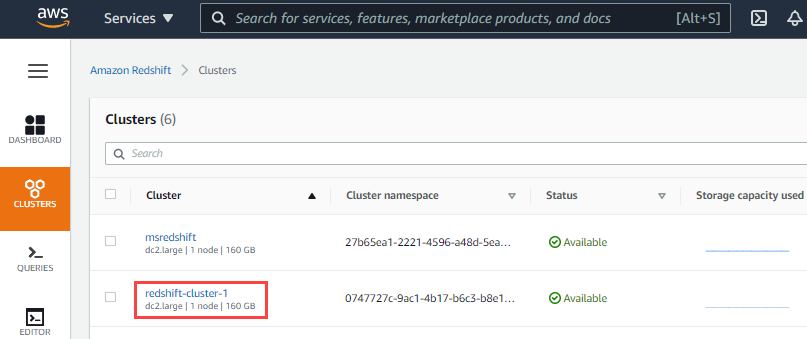

- Click Clusters in the left navigation menu. Ensure that your admin has provided access to the Redshift cluster that you want to use.

- Click the Cluster name hyperlink in the list of clusters provided.

- Click next to the JDBC URL to copy the JDBC URL displayed. Paste this URL in your Snap's account settings.

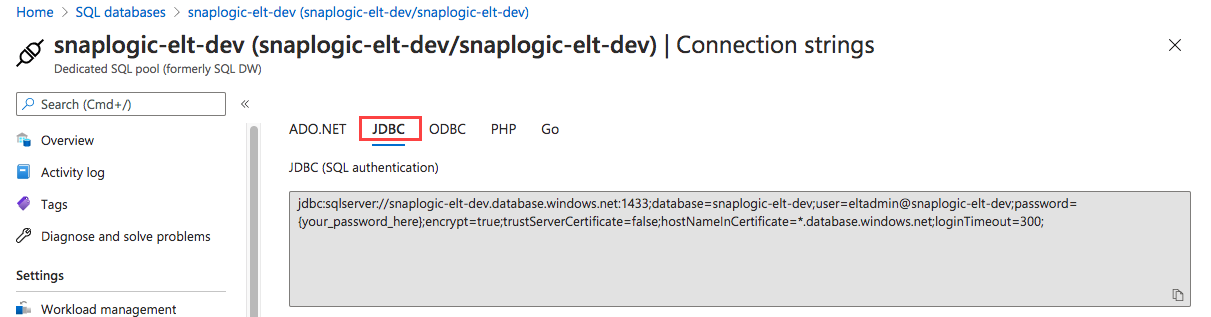

Locating the JDBC URL for Azure Synapse database

Follow the steps below to locate and use the JDBC URL for your Azure Synapse database instance.

- Log into portal.azure.com.

- Click SQL Databases.

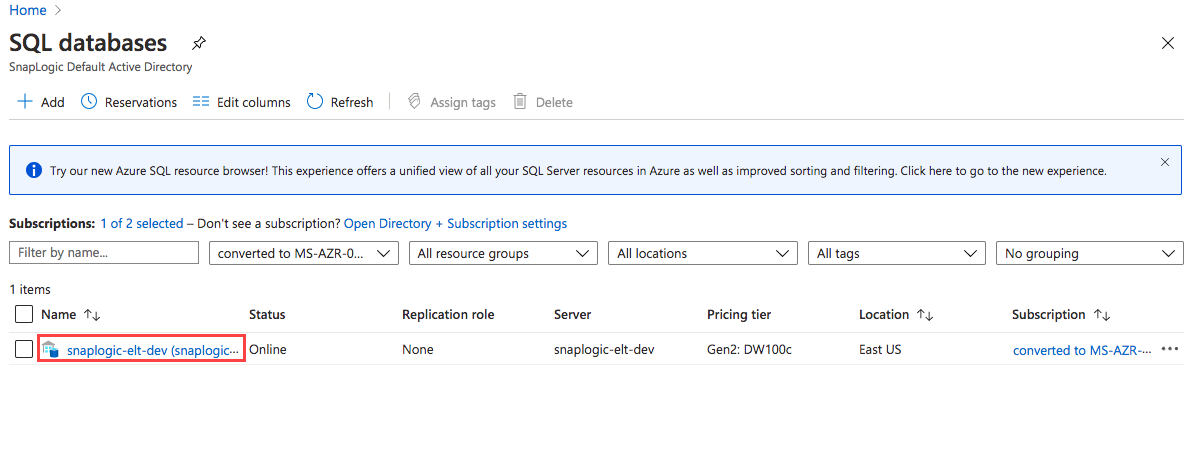

- Click on the Database configured for your organization.

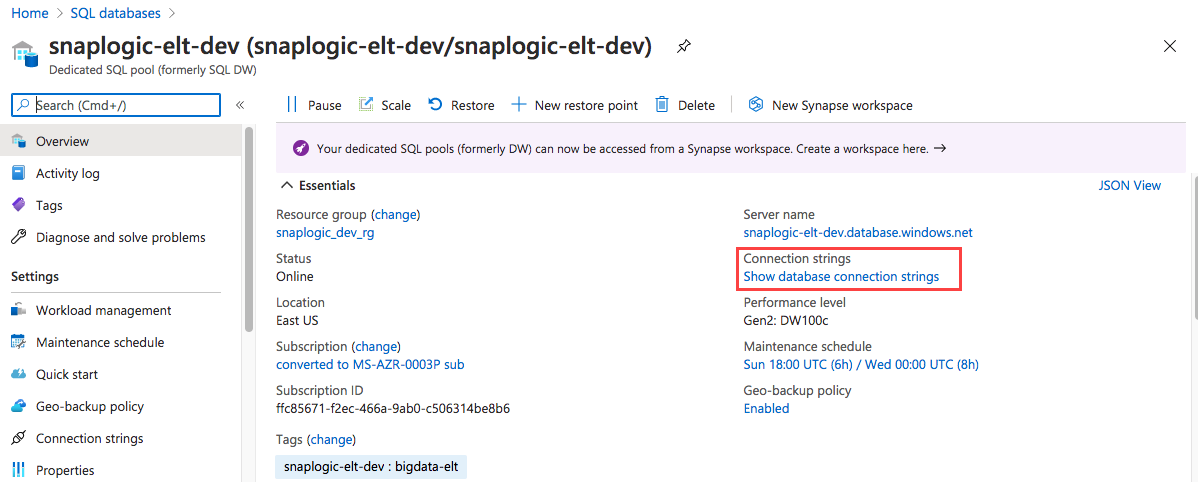

- Click Show Database Connection Strings and click JDBC to get the actual connection string.

- Click to copy the JDBC URL displayed. Paste this URL in your Snap's account settings.

Alternatively, you can separately pass the base URL in the JDBC URL field and the remaining parameters as a combination of the Snap's account fields and Advanced Properties > URL Properties.

Troubleshooting

None.

Example

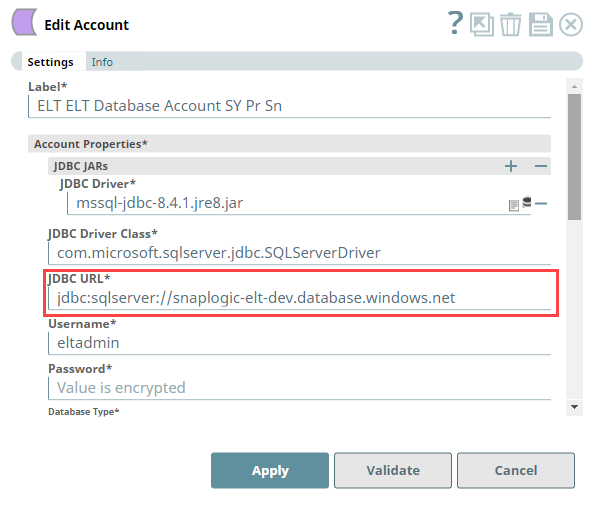

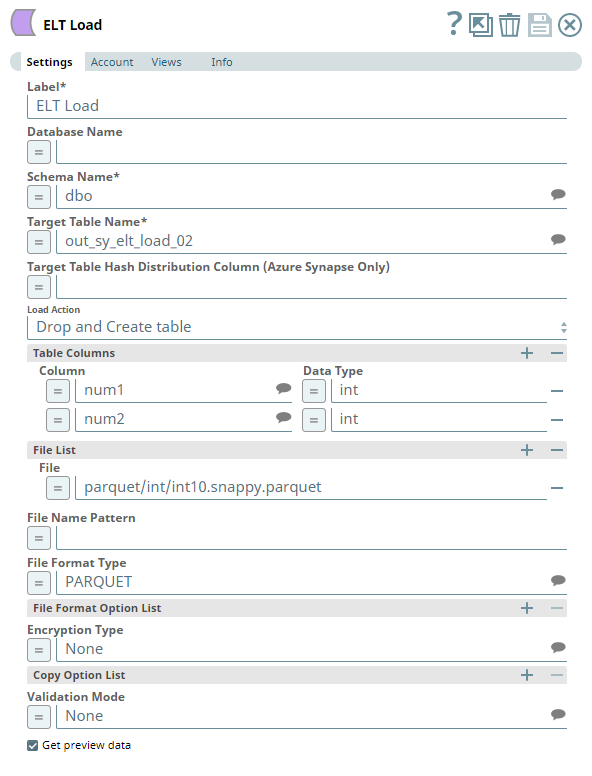

Configuring the ELT Database Account for connecting to a Azure Synapse Database

The following Pipeline is designed to extract data from a Parquet file residing in the ADLS Gen2 storage and load it into a new table in the Azure Synapse database.

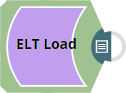

Snap Settings

The Pipeline uses an ELT Load Snap to define the source Parquet file in the Azure Data Lake Storage and is configured to perform the following functions.

- Create a new table out_sy_elt_load_02 in the specified target database (Azure Synapse).

- Load the data from Parquet file to this newly-created target table based on the table columns specified.

Account Settings

The Pipeline accesses the Parquet file using the Storage Account Key mode of authentication.