On this page

List of articles in this section

Overview

You can configure your Groundplex nodes through the Create Snaplex or Update Snaplex dialogs in SnapLogic Manager or directly through the Snaplex properties file. Additionally, you can customize your Groundplex configuration through the global.properties fields.

For defining network settings in your Grounplex, refer to Groundplex Network Setup.

Node Property Configuration

Do not make changes to your configuration without consulting your SnapLogic CSM.

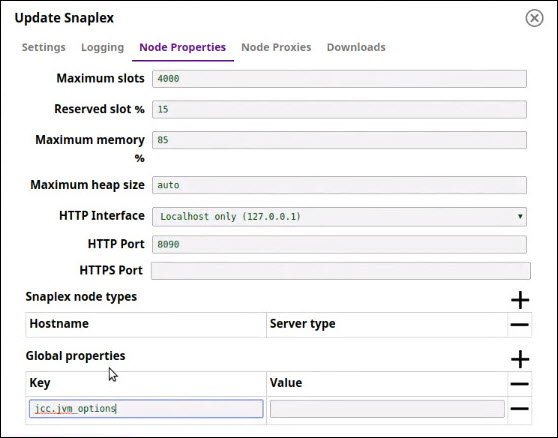

Various configuration options can be added, or the existing values updated, in the Node Properties tab on the Update Snaplex dialog in SnapLogic Manager.

Making Snaplex Configuration Changes

Snaplex nodes are typically configured using the slpropz configuration file located in the $SL_ROOT/etc folder.

If you use the slpropz file as your Snaplex configuration, then you can expect the following:

After a Snaplex node is started with the slpropz configuration, subsequent configuration updates are applied automatically.

Changing the Snaplex properties in Manager causes each Snaplex node to download the updated

slpropzand do a rolling restart with no downtime on Snaplexes with more than one node.Some configuration changes, such as an update to the logging properties, do not require a restart and are applied immediately.

Some configuration properties, like the Environment value, cannot be changed without doing manual updates for the

slpropzfiles on the Snaplex nodes.

The Groundplex nodes must be set up to use a slpropz configuration file before changes to these properties take effect. If you make changes that affect the software configuration, but there are nodes in the Snaplex that are not set up to use a slpropz configuration file, a warning dialog appears with a listing of the unmanaged nodes. Learn more about setting up a node to use this configuration mechanism when you add a node to your Snaplex. for more information on setting up a node to use this configuration mechanism.

Restarting the Groundplex Nodes

To restart the Snaplex process on your Groundplex nodes, run the following commands, depending on your OS:

Linux:

/opt/snaplogic/bin/jcc.sh restart

Windows:

c:\opt\snaplogic\bin\jcc.bat restart.

Managing Older Groundplex Installations

If you have an older Snaplex installation with a configuration defined in the global.properties file, then the Environment value must match the jcc.environment value In the JCC global.properties file. To migrate your Snaplex configuration to the slpropz mechanism, see Migrating Older Snaplex Nodes.

You should always configure your Snaplex instances using the slpropz file because you do not have to edit the configuration files manually. Changes to the Snaplex done through Manager are applied automatically to all nodes in that Snaplex.

Temporary Folder

The temporary folder stores unencrypted data. These temporary files are deleted when the Snap/Pipeline execution completes. You can update your Snaplex to point to a different temporary location in the Global properties table by entering the following:

jcc.jvm_options = -Djava.io.tmpdir=/new/tmp/folder

The following Snaps write to temporary files on your local disk:

Anaplan: Upload, Write

Binary: Sort, Join

Box: Read, Write

Confluent Kafka: All Snaps that use either Kerberos and SSL accounts

Database: When using local disk staging for read-type Snaps

Data Science (Machine Learning) Snaps: Profile, AutoML, Sample, Shuffle, Deduplicate, Match

Email: Sender

Hadoop: Read, Write (Parquet and ORC formats)

JMS: When the user provides a JAR file

Salesforce: Bulk Query, Snaps that process CSV data

Script: PySpark

Snowflake: When using internal staging

Teradata: TPT FastExport

Transform: Aggregate, Avro Parser, Excel Parser, Join, Unique, Sort

Vertica: Bulk Load

Workday Prism Analytics: Bulk Load

Heap Space

To change the maximum heap space used by your Snaplex, edit the Maximum Heap Size field setting in the Snaplex dialog.

Default Auto Setting Behavior

The default is auto, meaning that SnapLogic automatically sets the maximum heap size based on the available memory. The auto setting uses the optimum fraction of physical memory available to the system and leaves sufficient memory for operating system usage, as follows:

For RAM up to 4 GB, 75% is allocated to Java Virtual Machine (JVM).

For RAM over 4 GB and up to 8 GB, 80% is allocated to JVM.

For RAM over 8 GB and up to 32 GB, 85% is allocated to JVM.

For RAM over 32 GB, 90% is allocated to JVM.

Custom Heap Setting

If you enter your own heap space value, one method is to set the value to approximately 1 GB less than the amount of RAM available on the machine.

We recommend that you appropriately set the heap space for optimum Snaplex performance.

If the heap space value is excessively high, this can cause the machine to swap memory to disk, degrading performance.

If the heap space value is excessively low, this can cause pipelines that require higher memory to fail or degrade in performance.

Compressed Class Space

In Java, objects are instantiations of classes. Object data is stored on the heap, and class data is stored in nonheap space for memory allocation.

The default compressed class space size is automatically raised to 2 GB to prevent pipeline preparation errors when the JVM heap space size is more than 10 GB.

If a Pipeline Failed to Prepare error displays related to compressed class space, you can customize this setting in the Snaplex Global Properties.

To override the default, add the following global property, where N is the custom size of the compressed class space.

Key | Value |

|---|---|

|

|

Configure Snaplex Memory for Dynamic Workloads

If you either expect the Snaplex workload to be ad-hoc or cannot plan for the memory requirements, then the default heap memory settings on the Snaplex might not be appropriate. For example, these scenarios might require customizing the settings:

The Pipeline data volume to process varies significantly based on input data.

The Pipeline development or execution is performed by multiple teams, which makes capacity planning difficult.

The resources available in your test or development environment are lower than the production Snaplex.

In all these scenarios, setting the maximum heap size to a lower value than the available memory can result in memory issues. Because the workload is dynamic, if your existing Pipelines that are running use additional memory, then the JCC node might hit your configured maximum memory limit. When this limit is reached, the JCC displays an OutOfMemory exception error and restarts, causing all Pipelines that are currently running to fail.

To allow the JCC to manage memory limits optimally, you can use the swap configuration on the Snaplex nodes. Doing so allows your JCC node to operate with the configured memory in normal conditions, and if memory is inadequate, the JCC node can use the swap memory with minimal degradation in Pipeline performance.

For Linux-based Groundplexes, we recommend that you use the following swap values:

Node Name | Node Type | Minimum Swap | Recommended Swap |

|---|---|---|---|

Medium Node | 2 vCPU 8 GB memory, 40 GB storage | 8 GB | 8 GB |

Large Node | 4 vCPU 16 GB memory, 60 GB storage | 8 GB | 8 GB |

X-Large Node | 8 vCPU 32 GB memory, 300 GB storage | 8 GB | 16 GB |

2X-Large Node | 16 vCPU 64 GB memory, 400 GB storage | 8 GB | 32 GB |

Medium M.O. Node | 2 vCPU 16 GB memory, 40 GB storage | 8 GB | 8GB |

Large M.O. Node | 4 vCPU 32 GB memory, 60 GB storage | 8 GB | 16 GB |

X-Large M.O. Node | 8 vCPU 64 GB memory, 300 GB storage | 8 GB | 32 GB |

2X-Large M.O. Node | 16 vCPU 128 GB memory, 400 GB storage | 8 GB | 64 GB |

Linux gives you two options for allocating swap space: a swap partition or a swap file. Configure a swap file, and you do not have to restart the node. Learn more about using a swap file on Linux machines and configuring swap in AWS.

Performance Implications

When enabling the swap, the IO performance of the volume is critical to achieve an acceptable performance when the swap is utilized.

On AWS, we recommend that you use Instance Store instead of EBS volume to mount the swap data. Refer to Instance store swap volumes for details.

When your workload exceeds the available physical memory and the swap is utilized, the JCC node can become slower because of additional IO overhead caused by swapping. Therefore, configure a higher timeout for

jcc.status_timeout_secondsandjcc.jcc_poll_timeout_secondsfor the JCC node health checks.Even after configuring the swap, the JCC process can still run out of resources if all the available memory is exhausted. This scenario triggers the JCC process to restart, and all running Pipelines are terminated. We recommend that you use larger nodes with additional memory for the workload to complete successfully.

We recommend that you limit setting the swap to the maximum swap to be used by the JCC node. Using a larger swap configuration causes performance degradation during the JRE garbage collection operations.

Best Practices

Memory swapping can result in performance degradation because of disk IO, especially if the Pipeline workload also utilizes local disk IO.

When the pipeline workload is dynamic and capacity planning is difficult, we strongly recommend the minimum swap configuration.

Swap Memory Configuration Workflow

To utilize swap for the JCC process, you can use this workflow:

Enable swap on your host machine. The steps depend on your operating system (OS). For example, for Ubuntu Linux, you can use the steps in this tutorial.

Update your Maximum memory Snaplex setting to a lower percentage value, such that the absolute value is lower than the available memory. The load balancer uses this value when allocating Pipelines. The default is to set to 85%, which means that if the node memory usage is above 85% of the maximum heap size, then additional pipelines cannot start on the node.

Add the following two properties in the Global properties section of the Node Properties tab in the Update Snaplex dialog:

jcc_poll_timeout_secondsis the timeout (default value is 10 seconds) for each health check poll request from the Monitor.status_timeout_secondsis the time period (default value is 300 seconds) that the Monitor process waits for before the JCC is restarted if the health check requests continuously fail.

Example:

Key | Value |

|---|---|

|

|

|

|

Ring Buffer

The size of the buffer between Snaps is configured by setting the feature flags on the org com.snaplogic.cc.jstream.view.publisher.AbstractPublisher.DOC_RING_BUFFER_SIZE, where the default value is 1024 and com.snaplogic.cc.jstream.view.publisher.AbstractPublisher.BINARY_RING_BUFFER_SIZE, where the default value is 128.

com.snaplogic.cc.jstream.view.publisher.AbstractPublisher.DOC_RING_BUFFER_SIZE=1024 com.snaplogic.cc.jstream.view.publisher.AbstractPublisher.BINARY_RING_BUFFER_SIZE=128

Clearing Node Cache Files

A clearcache option is added to the jcc.sh/jcc.bat file to clear the cache files from the node.

You must ensure that the JCC is stopped before running the clearcache command on both Windows and Linux systems.

Customizing Version Updates

The Snaplex process automatically updates itself to run the same version as running on the SnapLogic cloud. If there is a mismatch in the versions, the Snaplex cannot be used to run Pipelines.

When the Snaplex service is started, two Java processes are started. First is the Monitor process, which then starts the actual Snaplex process. The monitor keeps track of the Snaplex process state, restarting it in case of failure. The Snaplex by default upgrades itself to run the same binaries that are running on the SnapLogic cloud. The monitor process continues running always, running from the binary which was used when the Monitor was originally started. Running jcc.sh restart or jcc.bat restart restarts both the monitor and the Snaplex processes.

In case a custom Snaplex patch is provided for a customer, it is provided as a jcc.war file. If this is copied into the /opt/snaplogic/run/lib directory and the JCC node is restarted, the JCC again downloads the latest version of the war file. To prevent the Snaplex from going back to the default binaries, you can setup the Snaplex to disable downloading the current version from the SnapLogic cloud. To do this, check the value of build_tag as returned by https://elastic.snaplogic.com/status. For example, if the build tag is mrc27, adding the following line in etc/global.properties prevents the Snaplex from downloading the mrc27 version from the cloud.

jcc.skip_version = mrc27 |

When the next release is available on the cloud and the custom patch is no longer required, the Snaplex automatically downloads the next version.

The download of latest binaries and automatic updates can be disabled on the Snaplex. If so, the next time there is a new version available on the SnapLogic cloud, the Snaplex is no longer be usable since there would be a version mismatch. The automatic download would have to be re-enabled for the Snaplex to be usable again.

To avoid such issues, the skip_version approach above is the recommended method to run with a custom version of the Snaplex binaries.

To prevent the new binaries from being downloaded, set (default is True):

jcc.enable_auto_download = False

To disable the automatic restart of the Snaplex after new binaries are downloaded, set (default is True):

jcc.auto_restart = False

JCC Node Debug Mode

If you are using the Snaplex for Snap development and it needs to be put in debug mode, you can configure the monitor process to start the JVM with debug options enabled. Add the following line in etc/global.properties

jcc.jvm_options = -agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=8000

This starts the Snaplex process with the debug port set to 8000. Otherwise, any Pipeline executions sent to the Snaplex would hit breakpoints set in the Snap code.

Configuring Bouncy Castle as the first Security Provider in your Snaplex

The JCC node contains a list of security providers to enable Snaps to work with SSL or private keys. SUN is the default and the main security provider. However, the SUN provider might not handle some private keys, resulting in an InvalidAlgorithmException error. In such cases, you must ensure that the Bouncy Castle security provider is used as the main security provider; otherwise, the Pipelines fail.

You can enable a flag in the node properties to make Bouncy Castle the first security provider.

In the Node Properties tab of your target Snaplex, add the following key/value pair under Global Properties:

Key:

jcc.jvm_optionsValue:

-Dsnaplogic.security.provider=BC

Click Update, and then restart the Snaplex node.

If you switch to Bouncy Castle as the main security provider, then Redshift accounts working with Amazon Redshift JDBC Driver fail if the driver version is below 2.0.0.4. As a workaround, use the Redshift driver 2.0.0.4 or later and provide the JDBC URL.