| Snap Type: | Write | ||||||

|---|---|---|---|---|---|---|---|

| Description: | This Snap performs bulk load operation into a Google BigQuery database, it does so either by using data from incoming documents or by using existing files in the Google Cloud Storage bucket depending upon the Snap's configuration. The Snap supports all three file types supported by Google BigQuery - CSV, JSON, and AVRO. When using incoming documents: In the case where data from incoming documents is being loaded, the data is first uploaded to a temporary file on Google Cloud Storage and from that temporary file the data is loaded into the destination table. The user can choose to either retain or delete this temporary file after the Snap terminates. When using existing files from Google Cloud Storage: In the case where existing files from Google Cloud Storage are being used, the data is loaded directly from the specified files into the destination table, there are no temporary files created for this operation. However, the user can choose to either retain or delete these existing files after the Snap terminates. ETL Transformations & Data FlowThe Google BigQuery Bulk Load (Cloud Storage) Snap performs a bulk load of the input records into the specified database. If the data is being loaded from incoming documents, it is sent to a temporary file in the cloud storage and from there to the destination table. The temporary file is retained after Snap's execution however the user can choose to delete it if they so wish and configure the Snap accordingly. Input & Output

Modes

The following points must be considered when using this Snap:

| ||||||

| Prerequisites: | Write access to the Google BigQuery Account and Read & Write access from/to the Google Cloud Storage account is required. | ||||||

| Limitations and Known Issues |

| ||||||

| Configurations: | Account & AccessThis Snap uses account references created on the Accounts page of SnapLogic Manager to handle access to this endpoint. See Google BigQuery Account for information on setting up this type of account. Views

| ||||||

| Troubleshooting: | Mismatch in the order of columns/number of columns between incoming document/existing Google Cloud Storage files in CSV format:

| ||||||

Settings | |||||||

Label | Error rendering macro 'excerpt-include' : No link could be created for 'Label'. | ||||||

| Project ID | Required. Project ID of the project containing the table, this is a suggestible field and can be populated based on the Account settings. Example: project1234 Default value: [None] | ||||||

| Dataset ID | Required. Dataset ID of the dataset containing the table, this is a suggestible field and all the datasets in the specified project will be populated. Example: dataset1234 Default value: [None] | ||||||

| Table ID | Required. Name of the target table into which the records are to be loaded, this is a suggestible field and all the tables in the datasets will be listed. Example: table1234 Default value: [None] | ||||||

| Create table if not present | Specifies that the table should be created if not already present in the database. Default value: Not selected When selecting <i>Use existing files</i> in <b>Upload type</b> Ensure that this property is not selected when choosing the Upload exising files on Google Cloud Storage option in Upload type property, else an exception will be thrown and the Snap will not execute. | ||||||

| Bucket name | Name of the Google Cloud Storage bucket to be used for the operation. This is a suggestible field and will list all the buckets within the given account. Example: project1234 Default value: [None] | ||||||

| Upload type | This is a drop-down menu consisting of two options: Upload incoming documents and Upload existing files from Google Cloud Storage. It specifies the data source to the Snap, that is incoming files or existing files in Google Cloud Storage bucket have to be uploaded. To upload data from existing files in Google Cloud Storage bucket select the option Upload existing files from Google Cloud Storage. Default value: Upload existing files from Google Cloud Storage. Based on the selection here, only one of the succeeding sections has to be configured. The succeeding sections being Properties for uploading incoming documents and Properties for uploading existing files from Google Cloud Storage. | ||||||

| Properties for uploading incoming documents | |||||||

| This sub-section has to be configured if the option Upload incoming documents was selected in the Upload type property. | |||||||

| File format | For selecting the file preferred format of the temporary file. This is a drop-down list that has three options: CSV, JSON, and AVRO. Default value: CSV File formats and their limitations The file format should be selected based on the data type they support, for example if the incoming document contains arrays or lists then selecting CSV in the File format property will throw an execption and the Snap will not execute. In order to avoid this, AVRO or JSON must be selected. Similarly, AVRO file format should not be used for Date Time data types. If the incoming documents do not contain all the table columns in the same order as the destination table then do not use CSV. | ||||||

| Temp file name | The name of the temporary file that will be created on the Google Cloud Storage bucket, if not provided then a system generated file name will be used. Default value: [None] | ||||||

| Preserve temp file | Specifies whether the temporary file created for the load operation has to be retained or deleted after the Snap's execution. By default the temporary file will be deleted. Default value: Selected | ||||||

| Properties for uploading existing files from Google Cloud Storage | |||||||

| This sub-section has to be configured if the option "Upload existing files from Google Cloud Storage" was selected in the Upload type property. | |||||||

| File paths | Multiple files can be selected based on the need. When the pipeline is executed the output data will have as many records listed. Based on the number of files added, the Snap will group them into categories (CSV with header & delimiter, CSV with delimiter but without header, JSON, and AVRO) and this distinction will be maintained in the output preview as well (shown distinctly according to File type). | ||||||

| File format | This is a drop-down list that has three options: CSV, JSON, and AVRO. Default value: CSV | ||||||

| File path | The file's location in the Google Cloud Storage bucket. Example: gs://gcs_existingbucket/exisitng_file.csv. Default value: [None] | ||||||

| CSV file contains headers | Specifies that the CSV file contains headers, using this option will enable the Snap in differentiating between the headers and records. Default value: Not selected | ||||||

| CSV delimiter | Specifies the delimiter for the CSV file. This is needed only for CSV file types. Example: | (pipe) Default value: , (comma) Custom delimiters All custom delimiters supported by BigQuery are supported as well. | ||||||

| Delete files upon exit | Similar operation as Preserve temp file, the files from which the data is loaded to the destination table will be deleted after Snap's execution if this option is enabled. Default value: Not selected | ||||||

| Execute during preview | Executes the Snap during a pipeline Save operation so that the output view can produce the preview data. Default value: Not selected | ||||||

Examples

Basic Use Case - 1 (Upload incoming documents)

In this example, incoming documents are used for the bulk load. It will cover two scenarios:

- Temporary file is preserved

- Temporary file is deleted

The following pipeline is executed:

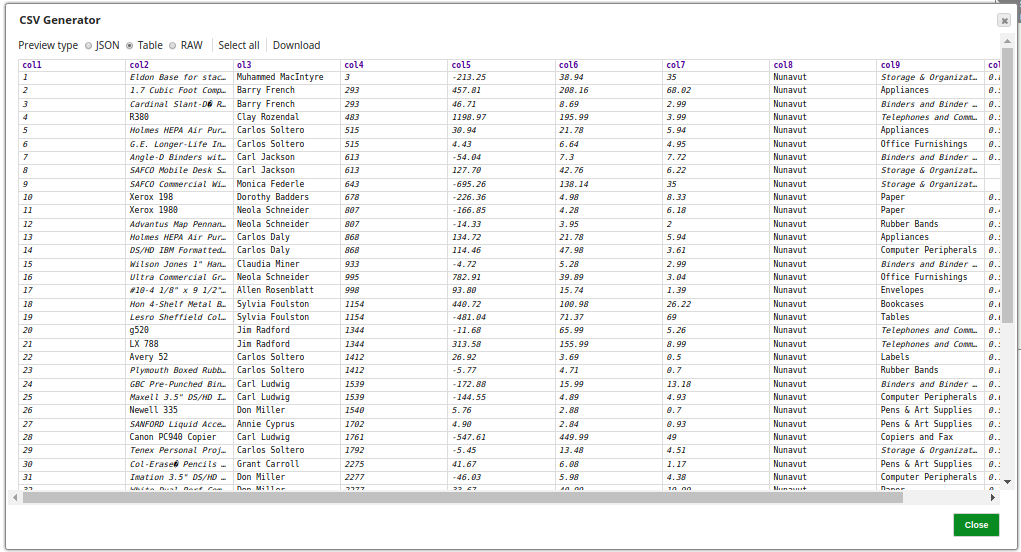

The CSV Generator Snap provides the input data to the Snap, a preview of the sample data from the incoming document is shown below:

Scenario - 1 - Temporary File Preserved

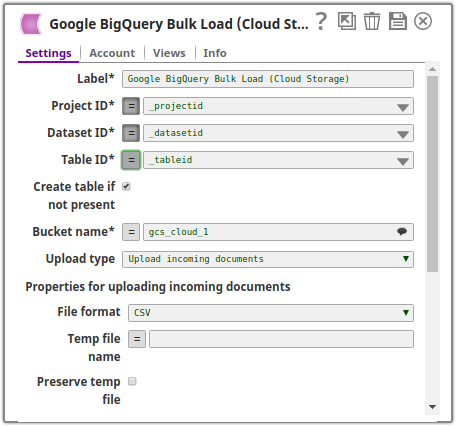

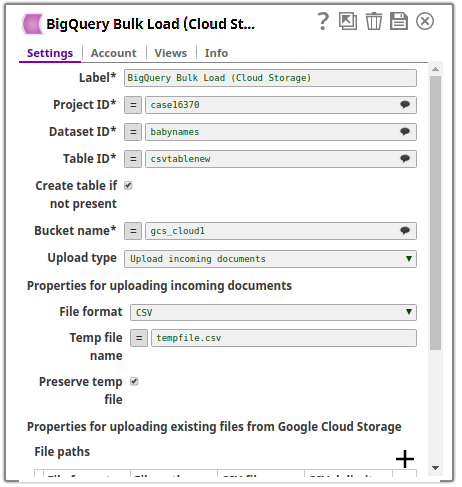

The following image is the configuration of the Google BigQuery Bulk Load (Cloud Storage) Snap:

The Preserve temp file checkbox is selected and the temprary file is specified as tempfile.csv. Upon execution the records from the incoming CSV file will be loaded into the temporary file present in the gcs_cloud_1 bucket and from there it is loaded into the destination table csvtablenew in the babynames dataset within the case16370 project.

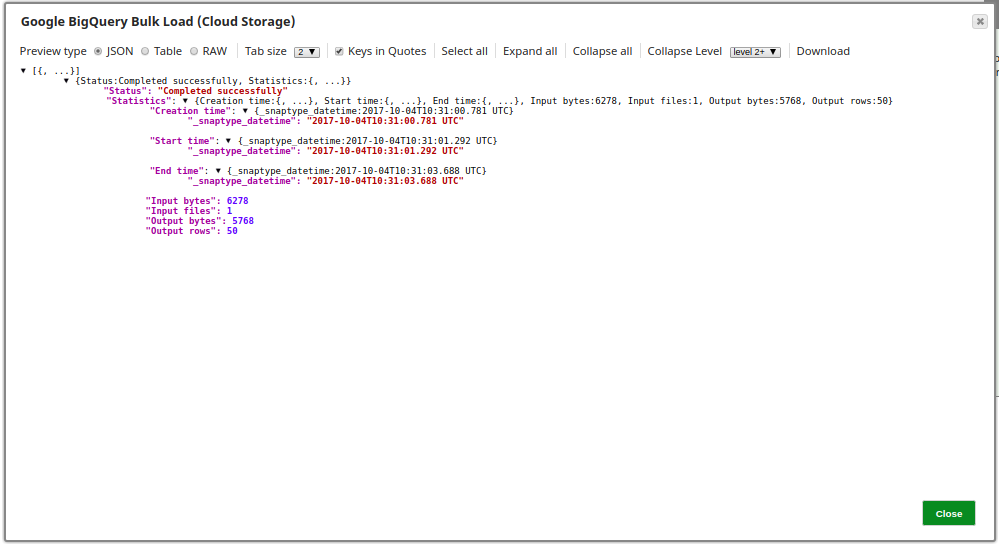

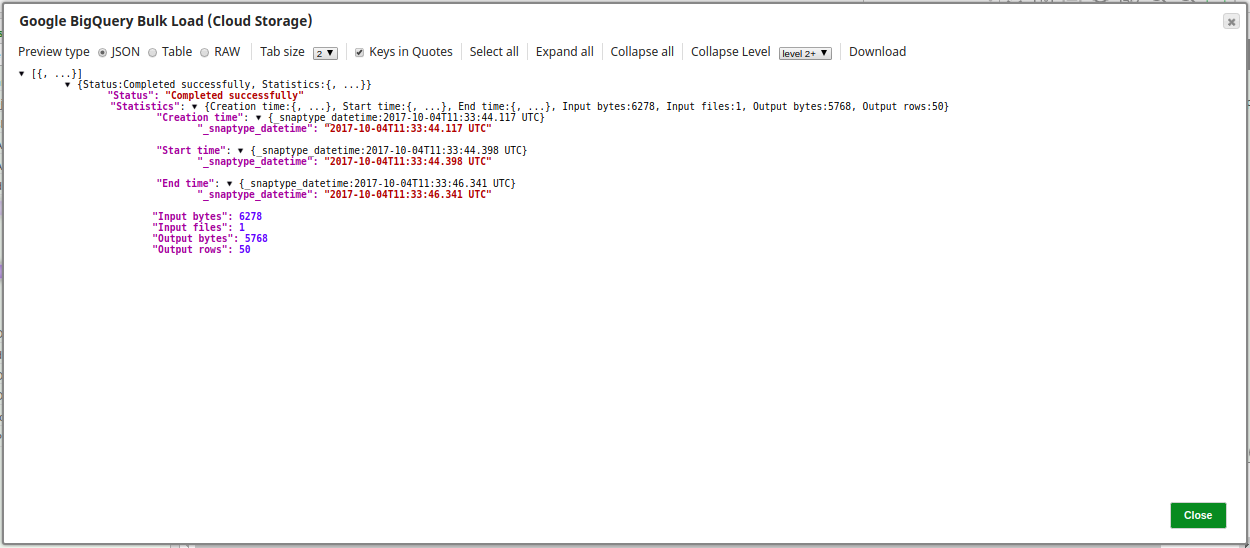

The following is a preview of the data output from the Google BigQuery Bulk Load (Cloud Storage) Snap:

Scenario - 2 - Temporary File Deleted

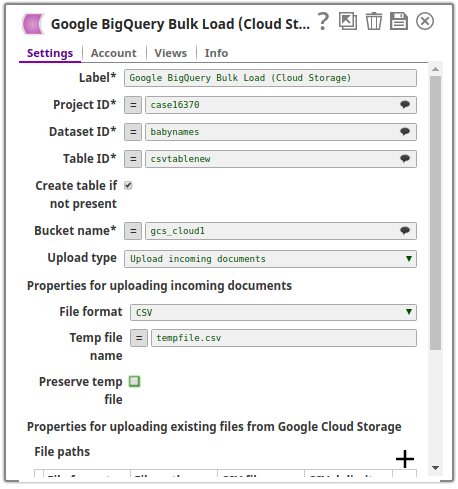

The following image is the configuration of the Google BigQuery Bulk Load (Cloud Storage) Snap:

The Preserve temp file checkbox is not selected and the temprary file is specified as tempfile.csv. Upon execution the records from the incoming CSV file will be loaded into the temporary file present in the gcs_cloud_1 bucket and from there it is loaded into the destination table csvtablenew in the babynames dataset within the case16370 project. The temporary file is deleted.

The following is a preview of the data output from the Google BigQuery Bulk Load (Cloud Storage) Snap:

Basic Use Case - 2 (Upload Existing Files)

In this example, incoming documents are used for the bulk load. It will cover two scenarios:

- Cloud Storage file is preserved

- Cloud Storage file is deleted

The following pipeline is executed:

Scenario - 1 - Cloud Storage File Preserved

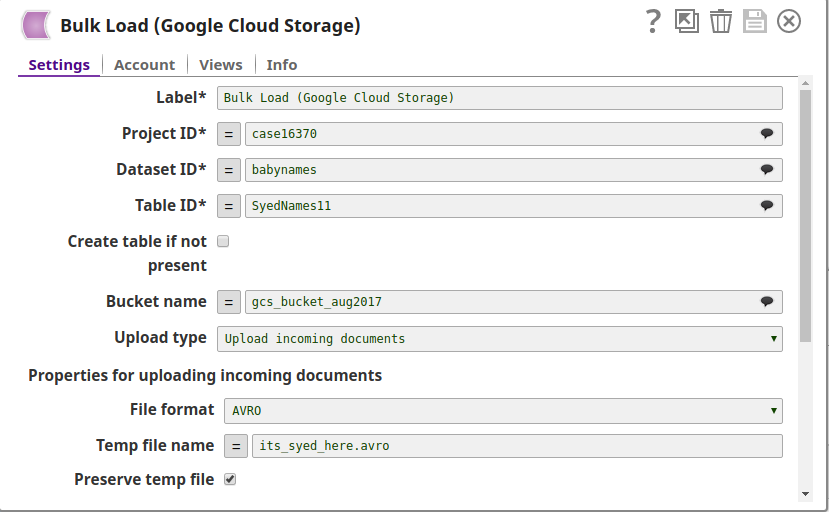

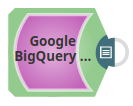

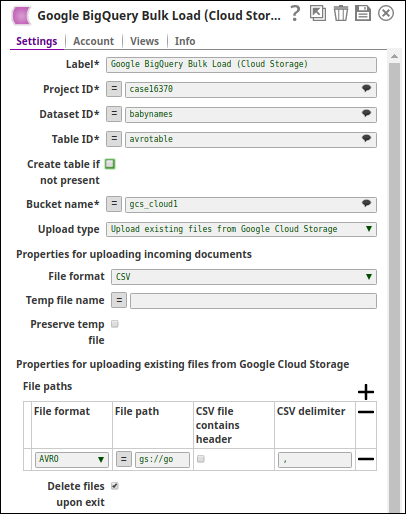

The following image is the configuration of the Google BigQuery Bulk Load (Cloud Storage) Snap:

An AVRO file is chosen as the input file and the Delete files upon exit checkbox is not selected. Upon the Snap's execution, the data from the input file will be loaded into the table named avrotable in the babynames dataset within the case16370 project. The AVRO file is not deleted.

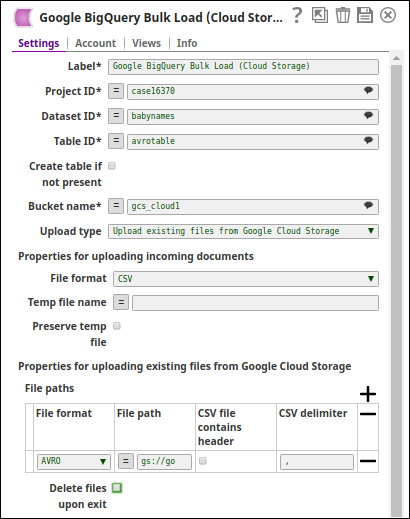

The following is a preview of the Snap's output:

Scenario - 2 - Cloud Storage File Deleted

The following image is the configuration of the Google BigQuery Bulk Load (Cloud Storage) Snap:

The following is a preview of the Snap's output:

Exported pipeline available in Downloads section below.

Typical Snap Configurations

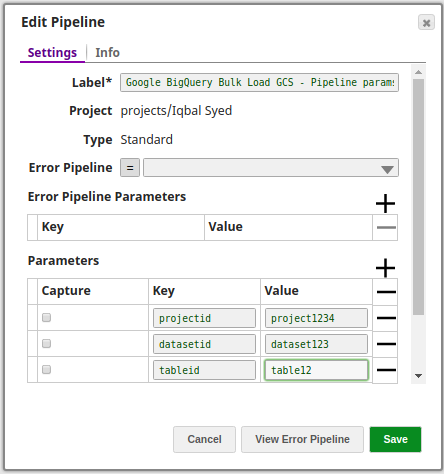

The key configuration of the Snap lies in how the values are passed to the Snap, this can be done in the following ways:

- Without expressions

The values are passed to the Snap directly. - With expressions

- Using pipeline parameters

The values are passed as pipeline parameters (the parameters to be applied have to be selected by enabling the corresponding checkbox under the Capture column).

- Using pipeline parameters

Advanced Use Case

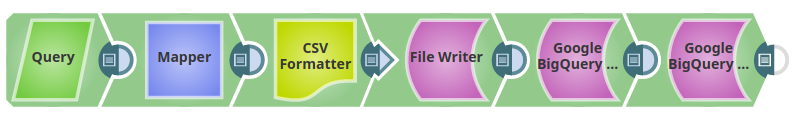

The following describes a pipeline, with a broader business logic involving multiple ETL transformations, that shows how typically in an enterprise environment, Bulk Load functionality can be used.

This pipeline reads social data and writes it into an existing file in the Google Cloud Storage. Bulk load of this social data is then performed from the existing file in the Google Cloud Storage to the destination table.

- Extract: Social data matching a certain criteria is extracted by the Query Snap.

- Transform: The extracted data from the Query Snap is transformed by the CSV Formatter Snap.

- Load: The incoming data from the CSV Formatter Snap is written into a CSV file in Cloud Storage by the File Writer Snap.

- Extract & Load: The Google BigQuery Bulk Load (Cloud Storage) Snap extracts the data from the existing CSV file in Cloud Storage and performs bulk load of this extracted data into the destination table.

- Extract: The Google BigQuery Execute Snap extracts the data inserted into the destination table by the Google BigQuery Bulk Load (Cloud Storage) Snap.

Exported pipeline is available in the Downloads section below.

Downloads

.png?version=1&modificationDate=1510459236359&cacheVersion=1&api=v2)