...

You can use the Snowflake - Bulk Upsert Snap to bulk update existing records or insert new records into a target Snowflake table.

Depending on the account that you configure with the Snap, you can use the Snowflake - Bulk Upsert Snap to upsert data into AWS S3 buckets, Microsoft Azure Storage Blobs, or Google Cloud Storage.

...

SELECT FROM TABLE: SELECT as a statement enables you to query the database and retrieve a set of rows. SELECT as a clause enables you to define the set of columns returned by a query.

COPY INTO <table>: Enables loading data from staged files to an existing table.

CREATE TEMPORARY TABLE: Enables creating a temporary table in the database. See Create Temporary Table Privilege for more information.

MERGE: Enables inserting, updating, and deleting values in a table based on values in a second table or a subquery.
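To make the privileges above more concrete, here is a minimal Python sketch that builds the kind of SQL statements a staged upsert could run. The temporary table name, the stage path, and the CSV file format are assumptions made for illustration; the statements the Snap actually issues may differ.

```python
def staged_upsert_statements(target, key_columns, columns, stage="@~/bulk_upsert"):
    """Return SQL strings that sketch a staged upsert.

    Hypothetical names: the "<target>_TMP" temporary table and the default
    stage path are illustrative only, not taken from the Snap.
    """
    non_key = [c for c in columns if c not in key_columns]
    if not key_columns or not non_key:
        raise ValueError("need at least one key column and one non-key column")

    temp = f"{target}_TMP"
    on_clause = " AND ".join(f"t.{c} = s.{c}" for c in key_columns)
    set_clause = ", ".join(f"t.{c} = s.{c}" for c in non_key)
    col_list = ", ".join(columns)
    val_list = ", ".join(f"s.{c}" for c in columns)

    return [
        # Needs the CREATE TEMPORARY TABLE privilege.
        f"CREATE TEMPORARY TABLE {temp} LIKE {target}",
        # Needs COPY INTO <table>: load the staged files into the temporary table.
        f"COPY INTO {temp} FROM {stage} FILE_FORMAT = (TYPE = CSV)",
        # Needs MERGE (and SELECT on the temporary table): update matches, insert the rest.
        f"MERGE INTO {target} t USING {temp} s ON {on_clause} "
        f"WHEN MATCHED THEN UPDATE SET {set_clause} "
        f"WHEN NOT MATCHED THEN INSERT ({col_list}) VALUES ({val_list})",
    ]


for statement in staged_upsert_statements("EMPLOYEES", ["P_ID"], ["P_ID", "FIRSTNAME"]):
    print(statement)
```

The function only produces strings, so you can inspect the statements without a Snowflake connection.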

Support for Ultra Pipelines

Works in Ultra Pipelines. However, we recommend that you not use this Snap in an Ultra Pipeline.

Limitations

The tilde character (~) is not supported in the temp directory name on Windows because it is reserved for the user's home directory.

Known Issues

Because of performance issues, all Snowflake Snaps now ignore the "Cancel queued queries when pipeline is stopped or if it fails" option for Manage Queued Queries, even when it is selected. The Snaps behave as though the default option, "Continue to execute queued queries when the Pipeline is stopped or if it fails", were selected.

...

Behavior Change

In the 4.31 main18944 release and later, the Snowflake - Bulk Upsert Snap no longer supports values from an upstream input document in the Key columns field when the expression button is enabled.

To avoid breaking your existing Pipelines that use a value from the input schema in the Key columns field, update the Snap settings to use an input value from Pipeline parameters instead.

Snap Views

| Type | Format | Number of Views | Examples of Upstream and Downstream Snaps | Description |
|---|---|---|---|---|
| Input | Document | Min: 1, Max: 1 | JSON Generator, Binary to Document | Incoming documents are first written to a staging file on Snowflake's internal staging area. A temporary table is created on Snowflake with the contents of the staging file. An update operation is then run to update existing records in the target table and/or an insert operation is run to insert new records into the target table. |
| Output | Document | Min: 0, Max: 1 | Mapper, Snowflake Execute | If an output view is available, the output document displays the number of input records and the status of the bulk upload. |
| Error | | | | Error handling is a generic way to handle errors without losing data or failing the Snap execution. You can handle the errors that the Snap might encounter when running the Pipeline by choosing one of the following options from the When errors occur list under the Views tab: Stop Pipeline Execution (stops the current pipeline execution if the Snap encounters an error); Discard Error Data and Continue (ignores the error, discards that record, and continues with the remaining records); Route Error Data to Error View (routes the error data to an error view without stopping the Snap execution). Learn more about Error handling in Pipelines. |

Snap Settings

| Field Name | Field Type | Field Dependency | Description |
|---|---|---|---|
| Label* Default Value: Snowflake - Bulk Upsert Example: Load Employee Tables | String | N/A | Specify the name for the instance. You can modify this to be more specific, especially if you have more than one of the same Snap in your Pipeline. |
| Schema Name Default Value: N/A Example: schema_demo | String/Expression/Suggestion | N/A | Specify the database schema name. If it is not defined, the suggestions for Table Name retrieve the table names of all schemas. This property is suggestible and retrieves the available database schemas when you request suggestions. The values can be passed using Pipeline parameters but not upstream parameters. |
| Table Name* Default Value: N/A Example: employees_table | String/Expression/Suggestion | N/A | Specify the name of the table on which to execute the bulk load operation. The values can be passed using Pipeline parameters but not upstream parameters. |
| Staging location Default Value: Internal Example: External | Dropdown list/Expression | N/A | Select the type of staging location to use for data loading: ... External: Location that is not managed by Snowflake. The location should be an AWS S3 bucket, a Microsoft Azure Storage Blob, or Google Cloud Storage. These credentials are mandatory while validating the Account. |

Requirements for External Storage Location

The following are mandatory when using an external staging location:

When using an Amazon S3 bucket for storage:

When using a Microsoft Azure storage blob:

When using Google Cloud Storage:

Vector support:

| Field Name | Field Type | Field Dependency | Description |
|---|---|---|---|
| Flush chunk size (in bytes) | String/Expression | Appears when you select Input view for Data source and Internal for Staging location. | When using internal staging, data from the input view is written to a temporary chunk file on the local disk. When the size of a chunk file exceeds the specified value, the current chunk file is copied to the Snowflake stage and then deleted. A new chunk file simultaneously starts to store the subsequent chunk of input data. The default size is 100,000,000 bytes (100 MB), which is used if this value is left blank. |
| File format object Default Value: None | String/Expression | N/A | Specify an existing file format object to use for loading data into the table. The specified file format object determines the format type, such as CSV, JSON, XML, or AVRO, and other format options for data files. |
| File format type Default Value: None | String/Expression/Suggestion | N/A | Specify a predefined file format object to use for loading data into the table. The available file formats include CSV, JSON, XML, and AVRO. This Snap supports only the CSV and NONE file format types. |
| File format option Default Value: N/A | String/Expression | N/A | Specify the file format option. Separate multiple options by using blank spaces and commas. |
| Encryption type Default Value: None | Dropdown list | N/A | Specify the type of encryption to be used on the data. The KMS Encryption option is available only for S3 Accounts (not for Azure Accounts) with Snowflake. If Staging location is set to Internal and Data source is Input view, the Server Side Encryption and Server-Side KMS Encryption options are not supported for Snowflake Snaps. This is because Snowflake encrypts loading data in its internal staging area and does not allow the user to specify the type of encryption in the PUT API. Learn more: Snowflake PUT Command Documentation. |
| KMS key Default Value: N/A | String/Expression | N/A | Specify the KMS key that you want to use for S3 encryption. Learn more about the KMS key: AWS KMS Overview and Using Server Side Encryption. This property applies only when you select Server-Side KMS Encryption in the Encryption type field above. |
| Buffer size (MB) Default Value: 10 MB | String/Expression | N/A | Specify the amount of data, in MB, to be loaded into the S3 bucket at a time. This property is required when bulk loading to Snowflake using AWS S3 as the external staging area. Minimum value: 6 MB. Maximum value: 5000 MB. S3 allows a maximum of 10000 parts to be uploaded, so this property must be configured accordingly to optimize the bulk load. Refer to Upload Part for more information on uploading to S3. |
| Key columns* Default Value: None | | | Specify the column used to identify existing entries in the target table. |
| Delete Upsert Condition Default Value: N/A | String | N/A | Specify a condition. When the condition evaluates to true, the Snap deletes the matching records in the target table instead of updating them. |
| Preserve case sensitivity Default Value: Deselected | Checkbox | N/A | Select this check box to preserve the case sensitivity of the column names. |
| Manage Queued Queries Default Value: Continue to execute queued queries when the Pipeline is stopped or if it fails | Dropdown list | N/A | Choose an option to determine whether the Snap should continue or cancel the execution of the queued queries when the pipeline stops or fails. If you select Cancel queued queries when the Pipeline is stopped or if it fails, the read queries under execution are canceled, whereas the write queries under execution are not canceled. Snowflake internally determines which queries are safe to cancel and cancels those queries. |
| Load empty strings Default Value: Selected | Checkbox | N/A | If selected, empty string values in the input documents are loaded as empty strings to the string-type fields. Otherwise, empty string values in the input documents are loaded as null. Null values are loaded as null regardless. |
| Additional Options | | | |
| On Error Default Value: ABORT_STATEMENT | Dropdown list | N/A | Select an action to perform when errors are encountered in a file. |
| Error Limit Default Value: 0 | Integer | Appears when you select SKIP_FILE_*error_limit* for On Error. | Specify the error limit for skipping a file. The file is skipped when the number of errors in it exceeds the specified limit and SKIP_FILE_number is selected for On Error. |
| Error Percentage Limit Default Value: 0 | Integer | Appears when you select SKIP_FILE_*error_percent_limit*% for On Error. | Specify the percentage of errors for skipping a file. The file is skipped when its error percentage exceeds the specified limit and SKIP_FILE_number% is selected for On Error. |
| Snap Execution Default Value: Execute only | Dropdown list | N/A | Select one of the three modes in which the Snap executes. |
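The Buffer size (MB) description in the settings above combines three numbers: a 6 MB minimum, a 5000 MB maximum, and the S3 limit of 10000 uploaded parts. The following Python sketch shows the arithmetic under the assumption that each buffer is uploaded as one part; the function names are hypothetical and are not part of the Snap.

```python
import math

S3_MAX_PARTS = 10_000   # part limit cited in the Buffer size (MB) description
MIN_BUFFER_MB = 6       # minimum value accepted by the field
MAX_BUFFER_MB = 5_000   # maximum value accepted by the field


def max_upload_mb(buffer_size_mb):
    """Largest upload (in MB) that fits in 10000 parts of the given buffer size."""
    return buffer_size_mb * S3_MAX_PARTS


def min_buffer_size_mb(upload_size_mb):
    """Smallest allowed buffer size (in MB) that keeps the upload within 10000 parts."""
    needed = math.ceil(upload_size_mb / S3_MAX_PARTS)
    if needed > MAX_BUFFER_MB:
        raise ValueError("upload is too large for the maximum buffer size")
    return max(MIN_BUFFER_MB, needed)


# With the default 10 MB buffer, 10000 parts cover at most 100,000 MB.
print(max_upload_mb(10))
# A 200,000 MB upload needs a buffer of at least 20 MB.
print(min_buffer_size_mb(200_000))
```

In practice a somewhat larger buffer than this lower bound leaves headroom, at the cost of more memory per upload.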

Troubleshooting

| Error | Reason | Resolution |
|---|---|---|
| Cannot lookup a property on a null value. | The value referenced in the Key columns field is null. This Snap does not support values from an upstream input document in the Key columns field when the expression button is enabled. | Update the Snap settings to use an input value from pipeline parameters and run the pipeline again. |
| Data can only be read from Google Cloud Storage (GCS) with the supplied account credentials (not written to it). | Snowflake Google Storage Database accounts do not support external staging when the Data source is the Input view. Data can only be read from GCS with the supplied account credentials (not written to it). | Use internal staging if the data source is the input view, or change the data source to staged files for Google Storage external staging. |

Example

...

Upsert records

This example Pipeline demonstrates how you can efficiently update and delete data (rows) using the Key columns field and the Delete Upsert Condition field. We use the Snowflake - Bulk Upsert Snap to accomplish this task.

...

First, we configure the Mapper Snap with the required details to pass them as inputs to the downstream Snap.

...

After validation, the Mapper Snap prepares the output as shown below to pass to the Snowflake - Bulk Upsert Snap.

...

Next, we configure the Snowflake - Bulk Upsert Snap to:

Upsert the existing row based on the P_ID column (so we provide P_ID in the Key columns field).

Delete the rows in the target table where FIRSTNAME is snaplogic (so we specify FIRSTNAME = 'snaplogic' in the Delete Upsert Condition field).
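The configuration above can be illustrated with a small in-memory Python simulation. This is a sketch of the intended semantics only, not the Snap's implementation. It assumes that the delete condition is evaluated against the incoming record and that records without a match in the target are always inserted; `bulk_upsert` and its parameters are hypothetical names.

```python
def bulk_upsert(target, incoming, key, delete_when=None):
    """Return a new table (dict keyed by the key column value) after an upsert.

    Assumed semantics, for illustration only:
    - an incoming record that matches an existing key and satisfies
      delete_when removes the existing row;
    - any other matching record replaces the existing row;
    - a record with no matching key is inserted.
    """
    result = dict(target)
    for record in incoming:
        k = record[key]
        if k in result and delete_when is not None and delete_when(record):
            del result[k]
        else:
            result[k] = record
    return result


existing = {
    1: {"P_ID": 1, "FIRSTNAME": "old"},
    2: {"P_ID": 2, "FIRSTNAME": "keep"},
}
incoming = [
    {"P_ID": 1, "FIRSTNAME": "snaplogic"},  # matches and meets the delete condition
    {"P_ID": 2, "FIRSTNAME": "new"},        # matches, so the row is updated
    {"P_ID": 3, "FIRSTNAME": "added"},      # no match, so the row is inserted
]
updated = bulk_upsert(existing, incoming, "P_ID",
                      delete_when=lambda r: r["FIRSTNAME"] == "snaplogic")
print(sorted(updated))
```

Here P_ID plays the role of the Key columns value and the lambda stands in for the FIRSTNAME = 'snaplogic' condition.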

...

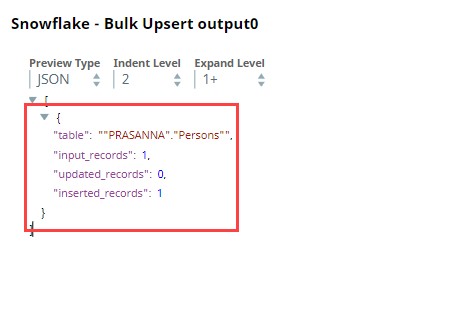

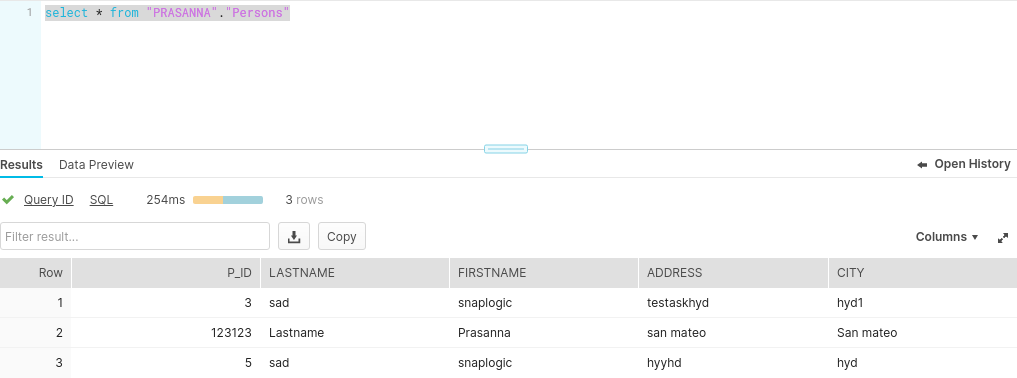

After execution, the Snap updates the existing row that matches the P_ID key column in Snowflake with the new record.

Inserted Records Output in JSON | Inserted Records in Snowflake |

|---|---|

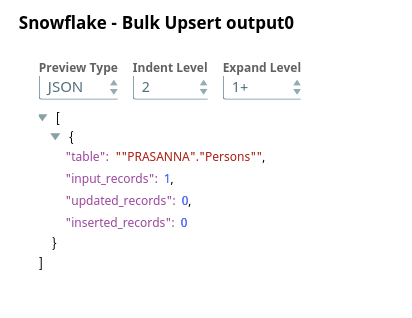

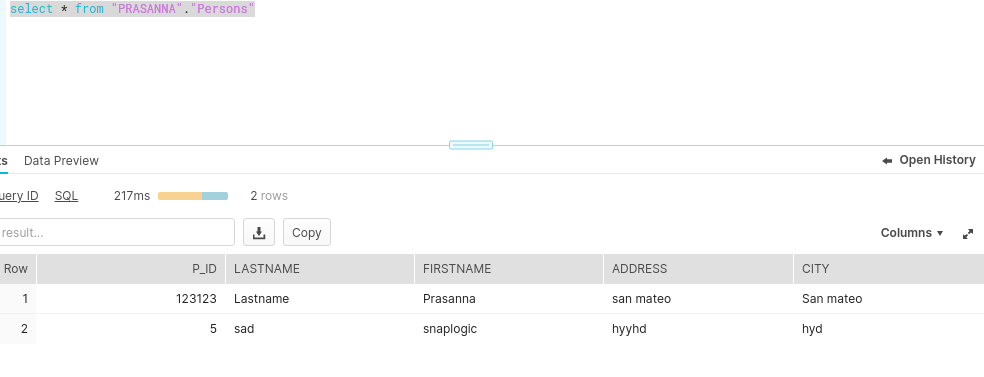

Upon execution, if the Delete Upsert condition is true, the Snap deletes the records in the target table as shown below.

Output in JSON | Deleted Record in Snowflake |

|---|---|

Bulk load records

In the following example, we update a record using the Snowflake - Bulk Upsert Snap. Invalid records that cannot be inserted are routed to an error view.

...