In this article

Overview

Use this Snap to run multiple child Pipelines through one parent Pipeline.

Key Features

The Pipeline Execute Snap enables you to do the following:

- Structure complex Pipelines into smaller segments through child Pipelines.

- Initiate parallel data processing using the Pooling option.

- Orchestrate data processing across nodes, within the Snaplex or across Snaplexes.

- Distribute global values through Pipeline parameters across a set of child Pipeline Snaps.

Supported Modes for Pipelines

- Standard mode (default): In the standard mode, a new child Pipeline is started per input document. If you set the pool size to n (where n is any number with the default setting value being 1), then n number of concurrent child Pipelines can run; each child Pipeline processes one document from the parent and then completes.

Reuse mode: In reuse mode, child Pipelines are started and each child Pipeline instance can process multiple input documents from the parent. If you set the pool size to n (default 1), then n number of child pipelines are started and they will process input document in a streaming manner. The child pipeline needs to have an unlinked input view for use in reuse mode.

Reuse mode is more performant, but reuse mode has the restriction that the child pipeline has to be a streaming pipeline.Replaces Deprecated Snaps

This Snap replaces the ForEach and Task Execute Snaps, as well as the Nested Pipeline mechanism.

Resumable Child Pipeline: Resumable Pipeline does not support the Pipeline Execute Snap. A regular mode pipeline can use the Pipeline Execute Snap to call a Resumable mode pipeline. If the child pipeline is a Resumable pipeline, then the batch size cannot be greater than one.

Works in Ultra Task Pipelines with the following exception: Reusing a runtime on another Snaplex is not supported. See Snap Support for Ultra Pipelines.

Without reuse enabled, the one-in-one-out requirement for Ultra means batching will not be supported. There will be a runtime check which will fail the parent pipeline if the batch size is set greater than 1. This would be similar to the current behavior in DB insert and other snaps in ultra mode.- ELT Mode: A Pipeline can use the Pipeline Execute Snap to call another Pipeline with ELT Snaps only in the standard mode.

Pooling Enabled: The pool size and batch size can both be set to greater than one, in which case the input documents are spread across the child pipeline in a round-robin fashion to ensure that if the child Pipeline is doing any external calls that are slow, then the processing is spread across the children in parallel. The limitation of this option is that the document order is not maintained.

Prerequisites

None.

Limitations

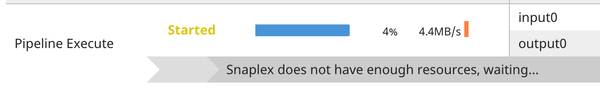

If there are not enough Snaplex nodes to execute the Pipeline on the Snaplex, then the Snap waits until Snaplex resources are available. When this situation occurs, the following message appears in the execution statistics dialog:

Because a large number of Pipeline runtimes can be generated by this Snap, only the last 100 completed child Pipeline runs are saved for inspection in the Dashboard.

- The Pipeline Execute Snap cannot exceed a depth of 64 child Pipelines before they begin failing.

- Child Pipelines do not display data preview details. You can view the data preview for any child Pipeline when the Pipeline Execute Snap completes execution in the parent Pipeline.

Ultra Pipelines do not support batching.

Unlike the Group By N Snap, when you configure the Batch field, the documents are processed one by one by the Pipeline Execute Snap and then transferred to the child Pipeline as soon as the parent Pipeline receives it. When the batch is over or the input stream ends, the child Pipeline is closed.

Snap Input and Output

The Input/Output views on the Views tab are configurable.

| Input/Output | Type of View | Number of Views | Examples of Upstream and Downstream Snaps | Description |

|---|---|---|---|---|

| Input | Binary or Document |

|

| The document or binary data to send to the child Pipeline. |

| Output | Binary or Document |

|

|

For Pool Size Usage If the value in the Pool Size field is greater than one, then the order of records in the output is not guaranteed to be the same as that of the input. |

| Error | N/A | N/A | N/A | This Snap has at most one document error view and produces zero or more documents in the view. If the Pipeline execution does not complete successfully, a document is written to this error view. |

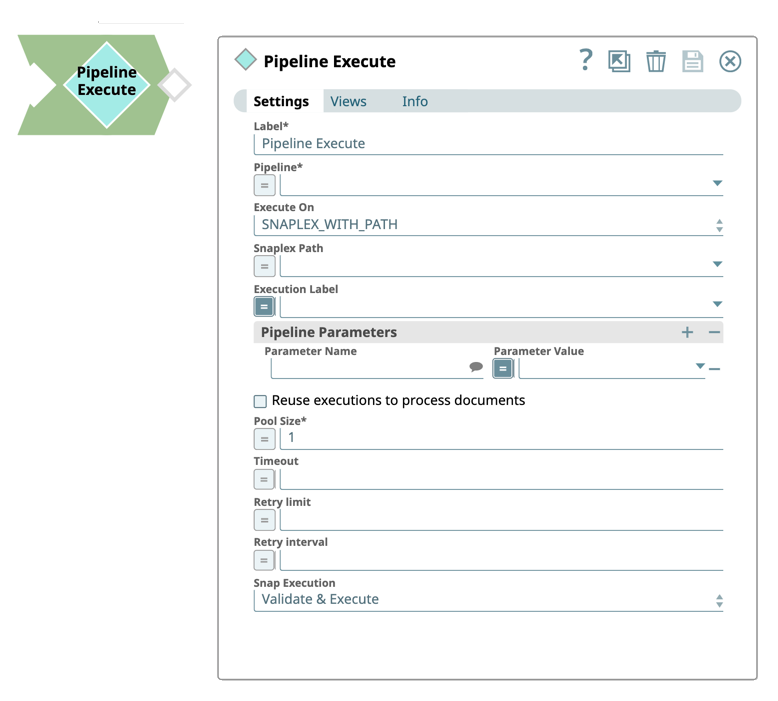

Snap Settings

| Parameter Name | Data Type | Description | Default Value | Example |

|---|---|---|---|---|

| Label | String | Specify a name for the Snap. You can modify this to be more specific, especially if you have more than one of the same Snap in your pipeline. | N/A | ExecuteCustomerUpdatePipeline |

| Pipeline | String | Required. Enter the absolute or relative (expression-based) path to the child Pipeline to run. If you only enter the Pipeline name, then the Snap searches for the Pipeline in the following folders in this order:

You can specify the absolute path to the target project using the Org/project_space/project notation. You can also dynamically choose the Pipeline to run by entering an expression in this field when Expression enabled. For example, to run all of the Pipelines in a project, you can connect the SnapLogic List Snap to this Snap to retrieve the list of Pipelines in the project and run each one. | N/A | NetSuite-Create-Credit-Memo |

| Execute On | String | Select the one of the following Snaplex options to specify the target Snaplex for the child Pipeline:

Best PracticesWhen choosing which Snaplex to run, always consider first the LOCAL_NODE option. With this selection, the child Pipeline runtime occurs on the same Snaplex node as that of the parent Pipeline. Due to absence of network traffic required to start the child Pipeline on another node, having the runtime on the same node makes the execution faster and more reliable. In both of these cases, you do not need to set an Org-specific property. This scenario facilitates usage for the user importing the Pipeline. However, if you do need to run the child Pipeline on a different Snaplex than the parent Pipeline, then select SNAPLEX_WITH_PATH and enter the target Snaplex name. In this case, you can take advantage of the following strategies:

Ultra Pipelines We do not recommend using the | SNAPLEX_WITH_PATH | groundplex4-West |

| Snaplex Path | String | Enter the name of the Snaplex on which you want the child Pipeline to run. Click to select from the list of Snaplex instances available in your Org. If you do not provide a Snaplex Path, then by default, the child Pipeline runs on the same node as the parent Pipeline. | N/A | DevPlex-1 |

| Execution label | String | The label to display in the Pipeline view of the Dashboard. You can use this field to differentiate one Pipeline execution from another. | The label for the child Pipeline. | NetSuite-Create-Credit-Memo |

Pipeline Parameters | Specify the Pipeline Parameters for the Pipeline selected in the Pipeline field. Click to add a row and enter key and value pairs for the following fields:

The Parameter Value can be an expression that you configure based on incoming documents, or the value can be a constant. When you select Reuse executions to process documents, you cannot change parameter values from one Pipeline invocation to the next. | |||

| Parameter name | String | Enter the name of the parameter. You can select the Pipeline Parameters defined for the Pipeline selected in the Pipeline field. | N/A | Postal_Code |

| Parameter value | String | Enter the value for the Pipeline Parameter, which can be an expression based on incoming documents or a constant. If you configure the value as an expression based on the input, then each incoming document or binary data is evaluated against that expression when invoking the Pipeline. The result of the expression is JSON-encoded if it is not a string. The child Pipeline then needs to use the When Reuse executions to process documents is enabled, the parameter values cannot change from one invocation to the next. | N/A | 94402 |

| Reuse executions to process documents | Checkbox | Select this option to start a child Pipeline and pass multiple inputs to the Pipeline. Reusable executions continue to live until all of the input documents to this Snap have been fully processed. When you enable this option and the Pipeline parameters use expressions, the expressions are evaluated using the first document. The parameter value in the child Pipeline does not change across documents. If you do not select this option, then a new Pipeline execution is created for each input document. The Reuse mode does not support the Batch Size option. | Not selected | Selected |

| Batch Size | Enter the number of documents in the batch size. If Batch Size is set to N, then N input documents are sent to each child Pipeline that is started. After N documents, the child Pipeline input view is closed until that the child pipeline completes. The output of the child Pipeline (one or more documents) passes to the Pipeline Execute output view. New child Pipelines are started after the original Pipeline completes. The Batch Size field is not displayed if Reuse executions to process documents is selected. Backward compatibilityFor existing Pipeline Execute Snap instances, null and zero values for the property are treated as a Batch size of 1. The behavior for existing Pipelines should not change, the child pipeline should get one document per execution. This feature is incompatible with reusable executions. | |||

| Number of Retries | String | Enter the maximum number of retry attempts that the Snap must make in case of a network failure. If the child Pipeline does not execute successfully, an error document is written to the error view. This feature is incompatible with reusable executions. | 0 | 3 |

| Retry Interval | String | Enter the minimum number of seconds for which the Snap must wait between two successive retry requests. A retry happens only when the previous attempt resulted in an error. | 1 | 10 |

| Pool size | String | Enter multiple input documents or binary data to be processed concurrently by specifying an execution pool size. When the pool size is greater than one, then the Snap starts Pipeline executions as needed for up to the specified pool size. When you select Reuse executions to process documents, the Snap starts a new execution only if all executions are busy working on documents or binary data and the total number of executions is below the pool size. | 1 | 4 |

| Timeout | String | Enter the maximum number of seconds for which the Snap must wait for the child Pipeline to complete the runtime. If the child Pipeline does not complete the runtime before the timeout, the execution process stops and is marked as failed. | No timeout is the default | 10 |

| Snap execution | Page lookup error: page "Anaplan Read" not found. If you're experiencing issues please see our Troubleshooting Guide. | Execute and Validate | Disabled | |

Guidelines for Child Pipelines

No unlinked binary input or output views. When you enter an expression in the Pipeline field, the Snap needs to contact the SnapLogic cloud servers to load the Pipeline runtime information. Also, if Reuse executions to process documents is enabled, the result of the expression cannot change between documents.

- When reusing Pipeline executions, there must be one unlinked input view and zero or one unlinked output view.

- If you do not enable Reuse executions to process documents, then use at most one unlinked input view and one unlinked output view.

- If the child Pipeline has an unlinked input view, make sure the Pipeline Execute Snap has an input view because the input document or binary data is fed into the child Pipeline.

- If you rename the child Pipeline, then you must manually update the reference to it in the Pipeline field; otherwise, the connection between child and parent Pipeline breaks.

- The child Pipeline is executed in preview mode when the Pipeline containing the Pipeline Execute Snap is saved. Consequently, any Snaps marked not to execute in preview mode are not executed and the child Pipeline only processes 50 documents.

- You cannot have a Pipeline call itself: recursion is not supported.

Schema Propagation Guidelines

- If the child Pipeline has a Snap that supports schema suggest, such as the JSON Formatter Snap or MySQL Insert Snap, then the schema is back propagated to the parent Pipeline. This configuration is useful in mapping input values in the parent Pipeline to the corresponding fields in the child Pipeline using a Mapper Snap in the parent Pipeline.

- For the schema suggest to work, the parent Pipeline must be validated first; only then is the schema from the child Pipeline visible in the parent Pipeline.

- The Pipeline Execute Snap is capable of propagating schema in both directions: upstream as well as downstream. See the example Schema Propagation in Parent Pipeline – 3.

Ultra Mode Compatibility

- If you selected Reuse executions to process documents for this Snap in an Ultra Pipeline, then the Snaps in the child Pipeline must also be Ultra-compatible.

- If you need to use Snaps that are not Ultra-compatible in an Ultra Pipeline, you can create a child Pipeline with those Snaps and use a Pipeline Execute Snap with Reuse executions to process documents disabled to invoke the Pipeline. Since the child Pipeline is executed for every input document, the Ultra Pipeline restrictions do not apply.

For example, if you want to run an SQL Select operation on a table that would return more than one document, you can put a Select Snap followed by a Group By N Snap with the group size set to zero in a child Pipeline. In this configuration, the child Pipeline performs the select operation during execution, and then the Group By Snap gathers all of the outputs into a single document or as binary data. That single output document or binary data can then be used as the output of the Ultra Pipeline.

Creating a Child Pipeline by Accessing Pipelines in the Pipeline Catalog in Designer

You can now browse the Pipeline Catalog for the target child Pipeline, and then select, drag and drop it in the Canvas. The SnapLogic Designer automatically adds the child Pipeline using a Pipeline Execute Snap.

Likewise, you can preview a child Pipeline by hovering over a Pipeline Execute Snap while the parent Pipeline is open on the Designer Canvas.

Returning Child Pipeline Output to the Parent Pipeline

A common use case for the Pipeline Execute Snap is to run a child Pipeline whose output is immediately returned to the parent Pipeline for further processing. You can achieve this return with the following Pipeline design for the child Pipeline.

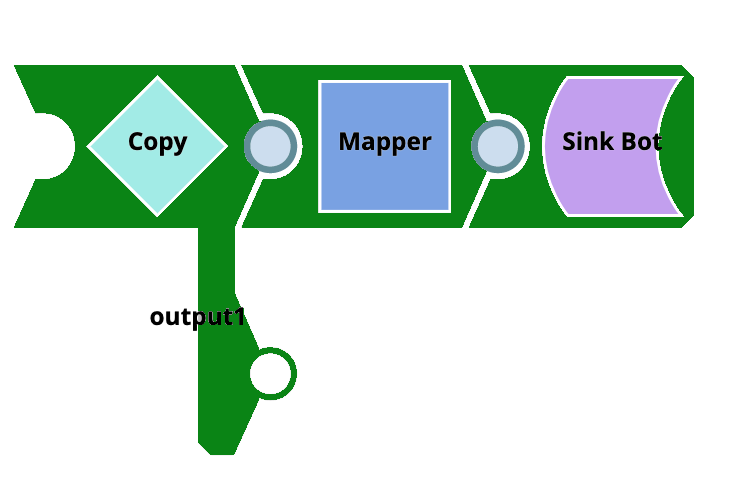

In this example, the document in this child Pipeline is sent to the parent Pipeline through output1 of the Copy Snap. Any unconnected output view is returned to the parent Pipeline. You can use any Snap that completes execution in this way.

Examples

Run a Child Pipeline Multiple Times

The PE_Multiple_Executions project demonstrates how you can configure the Pipeline Execute Snap to execute a child Pipeline multiple times. The project contains the following Pipelines:

- PE_Multiple_Executions_Child: A simple child Pipeline that writes out a document with static string and the number of input documents received by the Snap.

- PE_Multiple_Executions_NoReuse_Parent: A parent Pipeline that executes the PE_Multiple_Executions_Child Pipeline five times. You can save the Pipeline to examine the output documents. Note that the output contains a copy of the original document and the $inCount field is always set to one because the Pipeline was separately executed five times.

- PE_Multiple_Executions_Reuse_Parent: A parent Pipeline that executes the PE_Multiple_Executions_Child Pipeline once and feeds the child Pipeline execution five documents. You can save the Pipeline to examine the output documents. Note that the output does not contain a copy of the original document and the $inCount field goes up for each document since the same Snap instance is being used to process each document.

- PE_Multiple_Executions_UltraSplitAggregate_Parent: A parent Pipeline that is an example of using Snaps that are not Ultra-compatible in an Ultra Pipeline. This Pipeline can be turned into an Ultra Pipeline by removing the JSON Generator Snap at the head of the Pipeline and creating an Ultra Task.

- PE_Multiple_Executions_UltraSplitAggregate_Child: A child Pipeline that splits an array field in the input document and sums the values of the $num field in the resulting documents.

Propagate a Schema Backward –

The project, PE_Backward_Schema_Propagation_Contacts, demonstrates the schema suggest feature of the Pipeline Execute Snap. It contains the following files:

- PE_Backward_Schema_Propagation_Contacts_Parent

- PE_Backward_Schema_Propagation_Contacts_Child

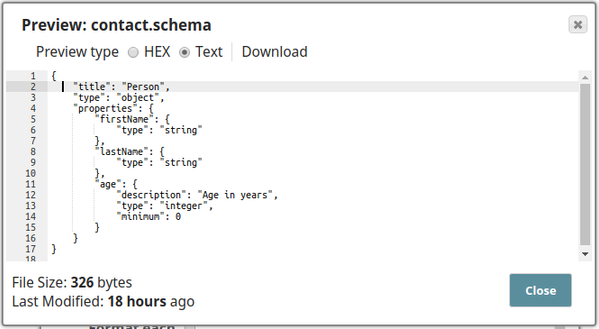

- contact.schema (Schema file)

- test.json (Output file)

The parent Pipeline is shown below:

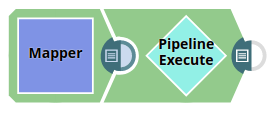

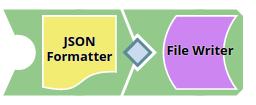

The child Pipeline is as shown below:

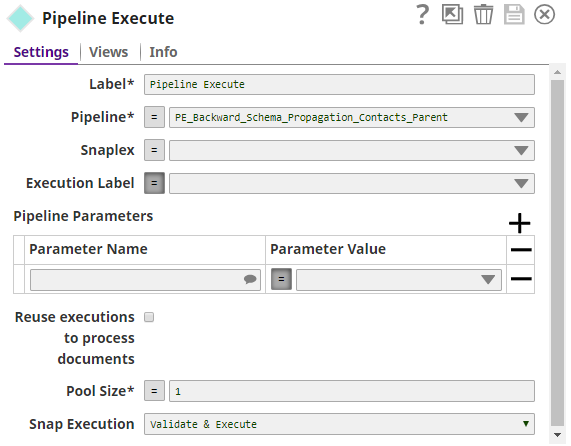

The Pipeline Execute Snap is configured as:

The following schema is provided in the JSON Formatter Snap. It has three properties - $firsName, $lastName, and $age. This schema is back propagated to the parent Pipeline.

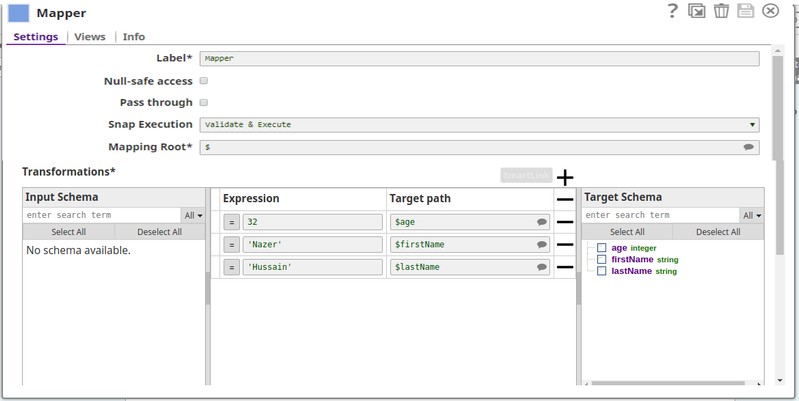

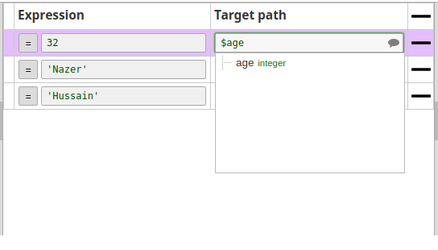

The parent Pipeline must be validated in order for the child Pipeline's schema to be back-propagated to the parent Pipeline. Below is the Mapper Snap in the parent Pipeline:

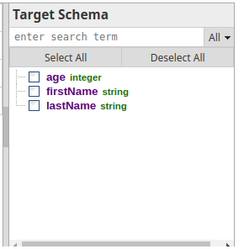

Notice that the Target Schema section shows the three properties of the schema in the child Pipeline:

Upon execution the data passed in the Mapper Snap will be written into the test.json file in the child Pipeline. The exported project is available in the Downloads section below.

Propagate Schema Backward and Forward

- PE_Backward_Forward_Schema_Propagation_Parent

- PE_Backward_Forward_Schema_Propagation_Child

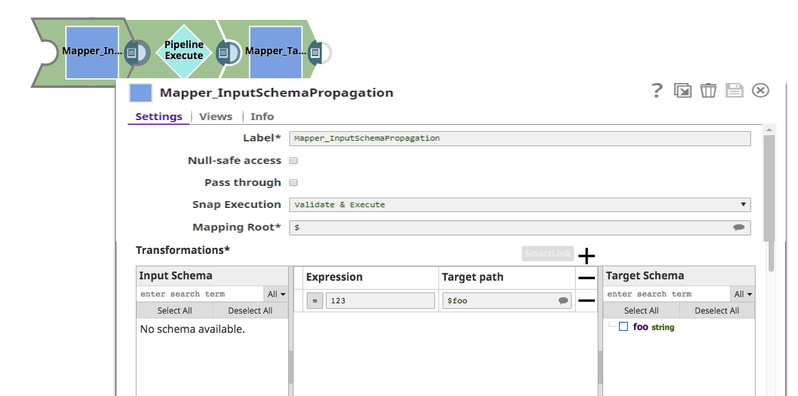

- The string expression $foo is propagated from the child Pipeline to the Pipeline Execute Snap.

- The Pipeline Execute Snap propagates it to the upstream Mapper Snap (Mapper_InputSchemaPropagation), as visible in the Target Schema section. Here it is assigned the value 123.

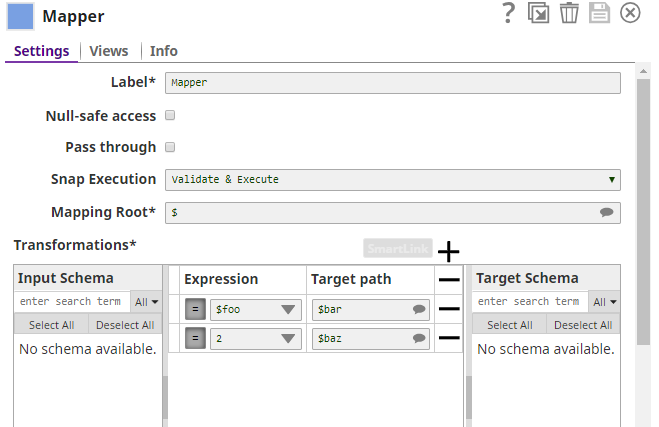

- This is passed from the Mapper to the Pipeline Execute Snap that internally passes the value to the child Pipeline. Here $foo is mapped to $bar. $baz is another string expression in the child Pipeline (assigned the value 2).

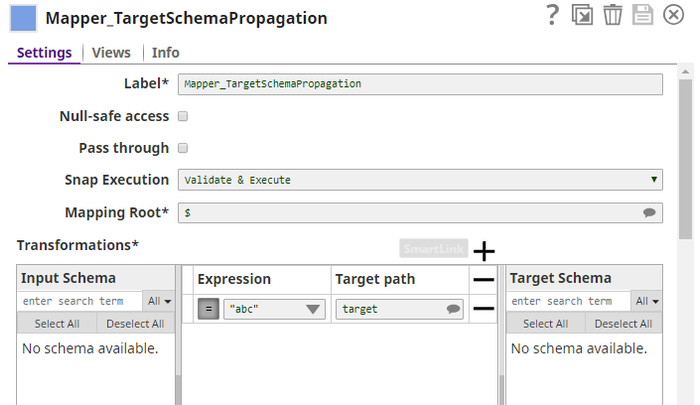

- $bar, and $baz are propagated to the Pipeline Execute Snap and propagated forward to the downstream Mapper Snap (Mapper_TargetSchemaPropagation). This can be seen in the Input Schema section of the Mapper Snap.

Migrating from Legacy Nested Pipelines

The Pipeline Execute Snap can replace some uses of the Nested Pipeline mechanism as well as the ForEach and Task Execute Snaps. For now, this Snap only supports child Pipelines with unlinked document views (binary views are not supported). If these limitations are not a problem for your use case, read on to find out how you can transition to this Snap and the advantages of doing so.

Nested Pipeline

Converting a Nested Pipeline will require the child Pipeline to be adapted to have no more than a single unlinked document input view and no more than a single unlinked document output view. If the child Pipeline can be made compatible, then you can use this Snap by dropping it on the canvas and selecting the child Pipeline for the Pipeline property. You will also want to enable the Reuse property to preserve the existing execution semantics of Nested Pipelines. The advantages of using this Snap over Nested Pipelines are:

- Multiple executions can be started to process documents in parallel.

- The Pipeline to execute can be determined dynamically by an expression.

- The original document or binary data is attached to any output documents or binary data if reuse is not enabled.

ForEach

Converting a ForEach Snap to the Pipeline Execute Snap is pretty straightforward since they have a similar set of properties. You should be able to select the Pipeline you would like to run and populate the parameter listing. The advantages of using this Snap over the ForEach Snap are:

- Documents or binary data fed into the Pipeline Execute Snap can be sent to the child Pipeline execution through an unlinked input view in the child.

Documents or binary data sent out of an unlinked output view in the child Pipeline execution is written out of the Pipeline Execute's output view. - The execution label can be changed.

- The Pipeline to execute can be determined dynamically by an expression.

- Executing a Pipeline does not require communication with the cloud servers.

Task Execute

Converting a Task Execute Snap to the Pipeline Execute Snap is also straightforward since the properties are similar. To start, you only need to select the Pipeline you would like to use, you no longer have to create a Triggered Task. If you set the Batch Size property in the Task Execute to one, then you will not want to enable the Reuse property. If the Batch Size was greater than one, then you should enable Reuse. The Pipeline parameters should be the same between the Snaps. The advantages of using this Snap over the Task Execute Snap are:

- A task does not need to be created.

- Multiple executions can be started to process documents in parallel.

- There is no limit on the number of documents that can be processed by a single execution (that is, no batch size).

- The execution label can be changed.

- The Pipeline to execute can be determined dynamically by an expression.

- The original document will be attached to any output documents if reuse is not enabled.

- Executing a Pipeline does not require communication with the cloud servers.

"Auto" Router

Converting a Pipeline that uses the Router Snap in "auto" mode can be done by moving the duplicated portions of the Pipeline into a new Pipeline and then calling that Pipeline using a Pipeline Execute. After refactoring the Pipeline, you can adjust the "Pool Size" of the Pipeline Execute Snap to control how many operations are done in parallel. The advantages of using this Snap over an "Auto" Router are:

- De-duplication of Snaps in the Pipeline.

- Adjusting the level of parallelism is trivially done by changing the Pool Size value.

Downloads

Important Steps to Successfully Reuse Pipelines

- Download the zip files, extract the files, and import the Pipelines into SnapLogic.

- Configure Snap accounts as applicable.

- Provide Pipeline parameters as applicable.