Cassandra - Execute


Snap type: | Write | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|

Description: | This Snap executes arbitrary Cassandra Query Language (CQL) statements supported by the JDBC driver. It works only with single queries. Because the Snap runs whatever statement you supply, including one that drops your database, use it with care.

Snaps in this Snap Pack display an exception (raised by the JDBC driver) when you query a map column that has a timestamp as the key.

The Cassandra Execute Snap is intended for simple DML statements (SELECT, INSERT, UPDATE, DELETE). | |||||||||

| Prerequisites: | A validated Cassandra account, verified network connectivity to the Cassandra server and port, and a running Cassandra server. | |||||||||
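One way to verify network connectivity before configuring the Snap is a plain TCP check from the machine that runs the pipeline. The following is a minimal sketch, not part of the Snap: the function name `can_reach` and the host name in the usage note are hypothetical, and the check confirms only that the port accepts connections, not that the account credentials are valid.

```python
import socket


def can_reach(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

For example, `can_reach("cassandra.example.com", 9042)` checks Cassandra's default native transport port. Substitute the host and port configured in your account.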

| Support and limitations: |

| |||||||||

| Account: | This Snap uses account references created on the Accounts page of SnapLogic Manager to handle access to this endpoint. See Configuring Cassandra Accounts for information on setting up this type of account. | |||||||||

| Views: |

| |||||||||

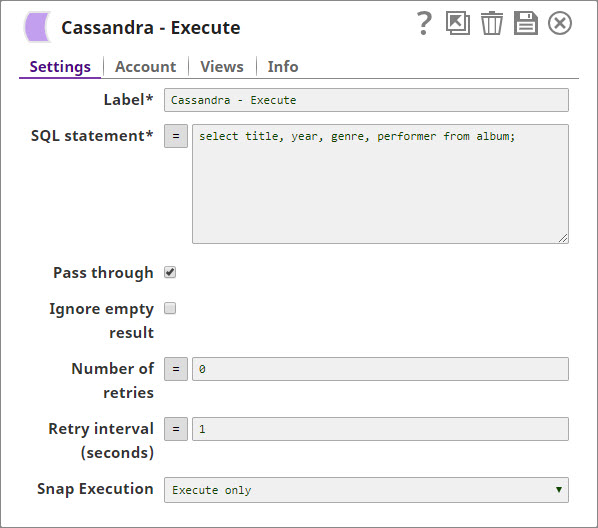

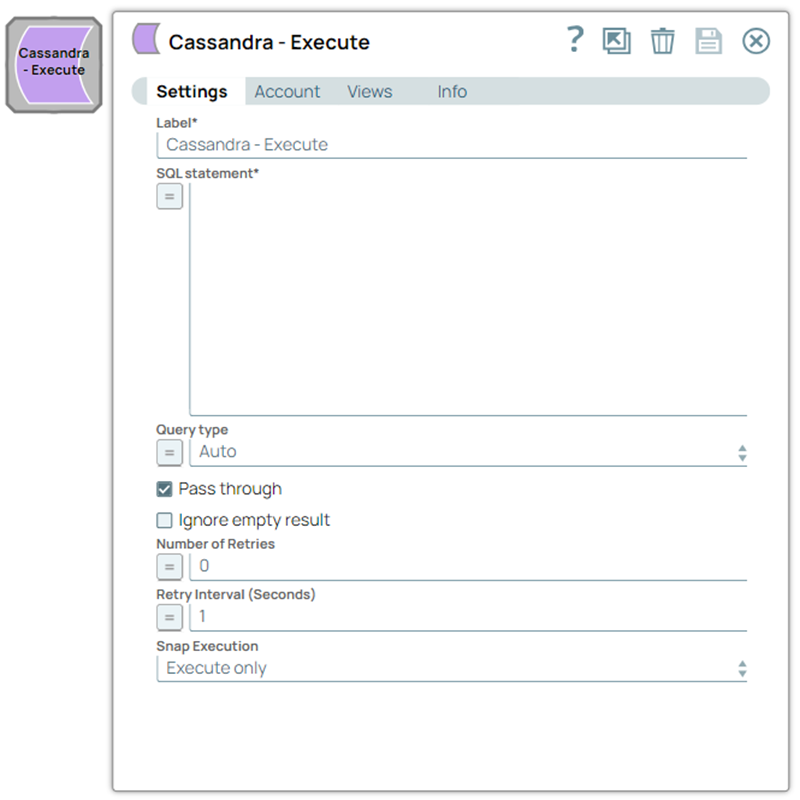

Settings | ||||||||||

Label | Required. The name for the Snap. You can modify this to be more specific, especially if you have more than one of the same Snap in your pipeline. | |||||||||

| SQL statement | Required. Specifies the SQL statement to execute on the server. We recommend that you add only a single query in the SQL statement field. Cassandra Snaps do not support batch operations, which is why this field does not support SQL bind variables.

You can encounter two scenarios when working with SQL statements in SnapLogic. Understanding both helps you execute your SQL statements successfully:

Scenario 1: Executing SQL statements without expressions

Examples:

Additionally, the JSON path is allowed only in the WHERE clause. If the SQL statement starts with SELECT (case-insensitive), the Snap treats it as a select-type query and executes it once per input document. Otherwise, the Snap treats it as a write-type query and executes it in batch mode.

Scenario 2: Executing SQL queries with expressions

Examples:

Caution: Using expressions that concatenate strings to build SQL queries or conditions carries a potential SQL injection risk and is therefore unsafe. Ensure that you understand all implications and risks involved before concatenating strings with the '=' Expression enabled.

Single quotes in values must be escaped

Any relational database (RDBMS) treats single quotes ( For example:
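As a minimal sketch of the escaping rule, assuming the standard SQL and CQL convention of writing two consecutive single quotes for one literal quote: the helper name `escape_single_quotes` and the `id` and `name` columns are hypothetical and used only for illustration.

```python
def escape_single_quotes(value: str) -> str:
    """Double every single quote so the value can sit inside a '...' literal."""
    return value.replace("'", "''")


name = "O'Brien"
# Hypothetical column names, for illustration only.
statement = (
    f"INSERT INTO people (id, name) VALUES (1, '{escape_single_quotes(name)}')"
)
print(statement)  # INSERT INTO people (id, name) VALUES (1, 'O''Brien')
```

Escaping covers only the quote character. It does not remove the injection risk of building statements by string concatenation that the caution above describes.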

Default value: [None]

This Snap does not allow you to inject SQL, such as select * from people where $columnName = "abc". Only values can be substituted because the Snap uses prepared statements for execution, which results in, for example, select * from people where address = ?.

The '$' sign and identifier characters, such as double quotes ("), single quotes ('), or back quotes (`), are reserved characters and should not be used in comments or for purposes other than their originally intended purpose. | |||||||||
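The value-only substitution described above can be illustrated with a small sketch. This is not the Snap's implementation: `to_prepared` is a hypothetical helper that assumes each `$field` reference names a top-level key of the input document, replaces it with a `?` placeholder, and collects the value to bind.

```python
import re

# Matches $field references such as $address (simple top-level names only).
_REF = re.compile(r"\$([A-Za-z_][A-Za-z0-9_]*)")


def to_prepared(statement: str, document: dict) -> tuple:
    """Replace each $field reference with '?' and collect the bound values."""
    values = []

    def bind(match: re.Match) -> str:
        values.append(document[match.group(1)])
        return "?"

    return _REF.sub(bind, statement), values


sql, params = to_prepared(
    "select * from people where address = $address",
    {"address": "1 Main St'; drop table people; --"},
)
print(sql)  # select * from people where address = ?
```

Because the value travels separately from the statement text, the quote and the trailing text in `params[0]` are bound as a single string and are never parsed as SQL. The same mechanism is why a column name cannot be supplied this way.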

| Query type | Select the type of query for your SQL statement (Read or Write). When Auto is selected, the Snap tries to determine the query type automatically. Default Value: Auto | |||||||||
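The rule stated under SQL statement (a statement that starts with SELECT, case-insensitively, is a select-type query) can be sketched as follows. This is an assumption about how Auto might behave, not the Snap's actual code. The function name `detect_query_type`, the return values, and the tolerance for leading whitespace are all hypothetical.

```python
def detect_query_type(statement: str) -> str:
    """Return 'read' for statements that start with SELECT, else 'write'."""
    # Ignoring leading whitespace is an assumption, not documented behavior.
    if statement.lstrip().upper().startswith("SELECT"):
        return "read"
    return "write"
```

If a statement is classified differently from what you intend, setting Query type to Read or Write explicitly avoids relying on detection.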

Pass through | If selected, the input document is passed through to the output view under the key 'original'. This property applies only to Execute Snaps with a SELECT statement. Default value: True | |||||||||

Ignore empty result | If selected, no document will be written to the output view when a SELECT operation does not produce any result. If this property is not selected and the Pass through property is selected, the input document will be passed through to the output view. | |||||||||
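The interaction between Pass through and Ignore empty result can be sketched as a small function. This is an illustrative model under stated assumptions, not the Snap's implementation: `build_output` is a hypothetical name, rows are modeled as dictionaries, and the case where both options are off and the SELECT returns nothing is not described above, so emitting no document there is a guess.

```python
def build_output(input_doc: dict, rows: list, *, pass_through: bool,
                 ignore_empty: bool) -> list:
    """Return the documents a SELECT would write to the output view."""
    if rows:
        if pass_through:
            # Attach the input document to every row under 'original'.
            return [{**row, "original": input_doc} for row in rows]
        return [dict(row) for row in rows]
    # The SELECT produced no rows.
    if ignore_empty:
        return []
    if pass_through:
        return [{"original": input_doc}]
    # Not specified in the text above; emitting nothing is an assumption.
    return []
```

For example, with `pass_through=True` and `ignore_empty=False`, an empty result still yields one document that carries the input under 'original', which keeps downstream Snaps running for every input.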

| Number of retries | Specify the maximum number of retry attempts the Snap makes when a network failure prevents it from reading the target file. The request is terminated if the attempts do not result in a response.

Ensure that the local drive has sufficient free disk space to store the temporary local file. | |||||||||

| Retry interval (seconds) | Number of seconds between retries. | |||||||||
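How Number of retries and Retry interval work together can be sketched as a simple retry loop. This is a generic illustration, not the Snap's code: `run_with_retries` is a hypothetical helper, and treating `ConnectionError` as the network failure is an assumption.

```python
import time


def run_with_retries(operation, retries: int, interval_seconds: float):
    """Call operation(); on ConnectionError retry up to `retries` more times."""
    attempt = 0
    while True:
        try:
            return operation()
        except ConnectionError:
            if attempt >= retries:
                raise  # Retries exhausted: surface the last failure.
            attempt += 1
            # Wait for the configured interval before the next attempt.
            time.sleep(interval_seconds)
```

With `retries=3` and `interval_seconds=5`, a persistent failure is reported after four attempts and roughly 15 seconds of waiting.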

Snap execution | Select one of the three modes in which the Snap executes. Available options are:

| |||||||||

Troubleshooting

- Run the query through the Cassandra JDBC driver using another JDBC tool to verify its syntax and results.

Example

Snap Pack History


© 2017-2025 SnapLogic, Inc.
