PolyBase Bulk Load


Snap type: Write


Description: This Snap performs a bulk load operation from the input view document stream to the target table. The Snap supports SQL Server databases with the PolyBase feature, which includes SQL Server 2016 (on-premises) and Azure SQL Data Warehouse. It first formats the input view document stream into a temporary CSV file in Azure Blob storage and then sends a bulk load request to the database to load the temporary blob file into the target table.

ETL Transformations & Data Flow

This Snap enables the following ETL operations/flows:

- Loads data into a temporarily created Azure blob.

- Executes the SQL Server command to load the above blob into the target table.
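The two-step flow above can be sketched as the T-SQL sequence that a PolyBase load from Azure Blob storage generally follows on SQL Server 2016: create an external data source and file format, expose the blob as an external table, copy its rows, then clean up. This is not the Snap's documented implementation. The helper `build_polybase_load`, the temporary object names (`tmp_src`, `tmp_fmt`, `tmp_ext`) and the `wasbs://` location format are assumptions for illustration.

```python
from typing import List, Tuple


def build_polybase_load(
    target: str,
    columns: List[Tuple[str, str]],
    container: str,
    account: str,
    blob_path: str,
    credential: str,
) -> List[str]:
    """Return the ordered T-SQL statements for one staged PolyBase load (sketch only)."""
    col_defs = ", ".join(f"[{name}] {sql_type}" for name, sql_type in columns)
    location = f"wasbs://{container}@{account}.blob.core.windows.net"
    return [
        # 1. Point PolyBase at the container that holds the temporary CSV file.
        f"CREATE EXTERNAL DATA SOURCE tmp_src WITH (TYPE = HADOOP, "
        f"LOCATION = '{location}', CREDENTIAL = {credential});",
        # 2. Describe the layout of the staged CSV file.
        "CREATE EXTERNAL FILE FORMAT tmp_fmt WITH (FORMAT_TYPE = DELIMITEDTEXT, "
        "FORMAT_OPTIONS (FIELD_TERMINATOR = ','));",
        # 3. Expose the blob file as a queryable external table.
        f"CREATE EXTERNAL TABLE tmp_ext ({col_defs}) WITH (LOCATION = '{blob_path}', "
        "DATA_SOURCE = tmp_src, FILE_FORMAT = tmp_fmt);",
        # 4. Copy the rows from the external table into the target table.
        f"INSERT INTO {target} SELECT * FROM tmp_ext;",
        # 5. Remove the temporary objects in reverse order of creation.
        "DROP EXTERNAL TABLE tmp_ext;",
        "DROP EXTERNAL FILE FORMAT tmp_fmt;",
        "DROP EXTERNAL DATA SOURCE tmp_src;",
    ]


if __name__ == "__main__":
    for stmt in build_polybase_load(
        "[dbo].[users]", [("id", "INT"), ("name", "NVARCHAR(100)")],
        "staging", "myaccount", "/tmp/users.csv", "tmp_cred",
    ):
        print(stmt)
```

Each statement would be executed in order over the database connection. Error handling, and guaranteeing that the DROP statements run even after a failure, are omitted for brevity.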

Input & Output


Prerequisites: Bulk load requires SQL Server 2016 or later to work properly. The PolyBase feature must be enabled on the database.
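A minimal sketch of checking these prerequisites on an on-premises SQL Server instance, assuming you run the query below and pass its two result values to the hypothetical helper `meets_prerequisites`. `SERVERPROPERTY('ProductVersion')` and `SERVERPROPERTY('IsPolyBaseInstalled')` are standard SQL Server properties; the latter reports whether PolyBase is installed. Azure SQL services use their own version numbering, so the version check does not apply to them.

```python
PREREQ_QUERY = (
    "SELECT SERVERPROPERTY('ProductVersion') AS version, "
    "SERVERPROPERTY('IsPolyBaseInstalled') AS polybase;"
)

# SQL Server 2016 reports a ProductVersion whose major component is 13.
SQL_SERVER_2016_MAJOR = 13


def meets_prerequisites(product_version: str, is_polybase_installed: int) -> bool:
    """Return True when the instance is SQL Server 2016 or later and PolyBase is installed."""
    major = int(product_version.split(".")[0])
    return major >= SQL_SERVER_2016_MAJOR and is_polybase_installed == 1


if __name__ == "__main__":
    print(meets_prerequisites("13.0.1601.5", 1))
```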

Support and limitations:

Account: This Snap uses account references created on the Accounts page of SnapLogic Manager to handle access to this endpoint. See Configuring Azure SQL Accounts for information on setting up this type of account.

Views:

Troubleshooting:

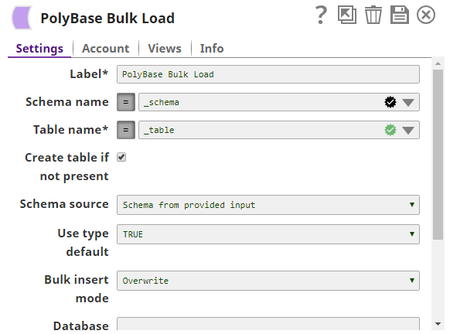

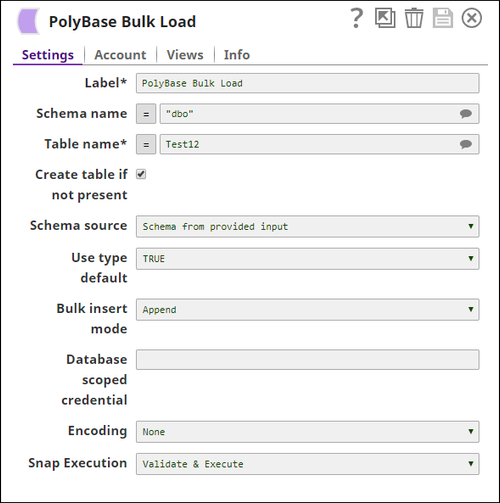

Settings

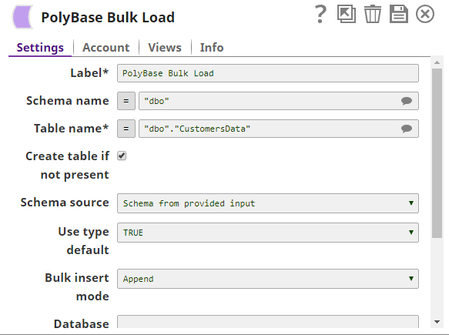

Label | Required. The name for the Snap. You can modify this to be more specific, especially if you have more than one of the same Snap in your pipeline.

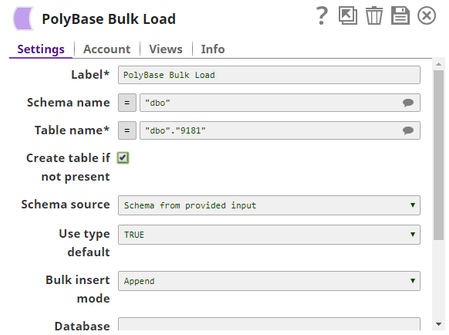

Schema Name | The database schema name. If it is not defined, the suggestion list for the table name retrieves the table names from all schemas. This property is suggestible: it retrieves the available database schemas when you request suggested values. You can pass values using pipeline parameters but not upstream parameters. Example: SYS. Default value: None

Table Name | Required. The target table to load the incoming data into. You can pass values using pipeline parameters but not upstream parameters. Example: users. Default value: None
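When the schema and table names are combined into a T-SQL object name, a name such as `9181` (used in the Basic Use Case) must be delimited. A minimal sketch, assuming bracket delimiters in the style of T-SQL's `QUOTENAME` function; the helpers `quote_identifier` and `qualified_table_name` are hypothetical and not part of the Snap. When no schema is given, SQL Server resolves an unqualified name against the user's default schema.

```python
from typing import Optional


def quote_identifier(name: str) -> str:
    """Bracket-delimit a T-SQL identifier, doubling any ']' as QUOTENAME does."""
    return "[" + name.replace("]", "]]") + "]"


def qualified_table_name(table: str, schema: Optional[str] = None) -> str:
    """Build [schema].[table], or just [table] when no schema is configured."""
    if schema:
        return f"{quote_identifier(schema)}.{quote_identifier(table)}"
    return quote_identifier(table)


if __name__ == "__main__":
    print(qualified_table_name("9181", "dbo"))
```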

Create table if not present | Select this property to create the target table if it does not exist; otherwise, the system throws a table-not-found error. Example: table1. Default value: None
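The check-then-create behavior can be expressed in T-SQL with `OBJECT_ID`, which returns NULL when no user table (object type `U`) has the given name. A sketch under that assumption: the helper `create_if_missing` is hypothetical, and it expects an already-delimited table name plus trusted column names and types.

```python
from typing import List, Tuple


def create_if_missing(qualified_name: str, columns: List[Tuple[str, str]]) -> str:
    """Return a T-SQL batch that creates the table only when it does not exist."""
    col_defs = ", ".join(f"[{name}] {sql_type}" for name, sql_type in columns)
    # Single quotes in the name are doubled so the N'...' literal stays valid.
    literal = qualified_name.replace("'", "''")
    return (
        f"IF OBJECT_ID(N'{literal}', N'U') IS NULL "
        f"CREATE TABLE {qualified_name} ({col_defs});"
    )


if __name__ == "__main__":
    print(create_if_missing("[dbo].[users]", [("id", "INT"), ("name", "NVARCHAR(100)")]))
```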

Schema source | Specifies whether the schema is fetched from the input document or from the existing table when data is loaded into the temporary blob during the bulk upload. The available options are Schema from provided input and Schema from existing table. Default value: Schema from provided input
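The two options differ in where the column list comes from. A minimal sketch, assuming that "Schema from provided input" infers column types from an input document and that "Schema from existing table" reads the table's columns from `INFORMATION_SCHEMA.COLUMNS`. The Python-to-SQL type mapping, the `?` placeholders (ODBC style) and the helper `schema_from_input` are hypothetical choices, not the Snap's documented behavior.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical type mapping. bool is listed before int because bool is a subclass of int.
_TYPE_MAP = [(bool, "BIT"), (int, "BIGINT"), (float, "FLOAT")]
_FALLBACK_TYPE = "NVARCHAR(4000)"  # strings, None and anything else


def schema_from_input(doc: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Infer (column, SQL type) pairs from one input document, in field order."""
    columns = []
    for name, value in doc.items():
        sql_type = _FALLBACK_TYPE
        for py_type, candidate in _TYPE_MAP:
            if isinstance(value, py_type):
                sql_type = candidate
                break
        columns.append((name, sql_type))
    return columns


# "Schema from existing table": read the column list from the catalog instead.
EXISTING_SCHEMA_QUERY = (
    "SELECT COLUMN_NAME, DATA_TYPE FROM INFORMATION_SCHEMA.COLUMNS "
    "WHERE TABLE_SCHEMA = ? AND TABLE_NAME = ? ORDER BY ORDINAL_POSITION;"
)

if __name__ == "__main__":
    print(schema_from_input({"id": 1, "active": True, "name": "Ann"}))
```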

Use type default | Specifies how to handle missing values in input documents. The available options are TRUE and FALSE. If you select TRUE, the Snap replaces every missing value in the input document with its default value in the external table. Supported data types and their default values are:

If you select FALSE, the Snap replaces every missing value in the input document with a null value in the external table. Default value: TRUE
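This setting appears to correspond to PolyBase's `USE_TYPE_DEFAULT` format option, which controls whether missing values in a delimited text file load as the column type's default or as NULL. That correspondence is an assumption, and the helper `external_file_format` is hypothetical.

```python
def external_file_format(name: str, use_type_default: bool) -> str:
    """Return CREATE EXTERNAL FILE FORMAT DDL for the staged CSV file."""
    # TRUE: a missing value takes its column type's default; FALSE: it loads as NULL.
    flag = "TRUE" if use_type_default else "FALSE"
    return (
        f"CREATE EXTERNAL FILE FORMAT {name} WITH (FORMAT_TYPE = DELIMITEDTEXT, "
        f"FORMAT_OPTIONS (FIELD_TERMINATOR = ',', USE_TYPE_DEFAULT = {flag}));"
    )


if __name__ == "__main__":
    print(external_file_format("tmp_fmt", True))
```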

Bulk insert mode | Specifies whether the incoming data is appended to the target table or overwrites the existing data in that table. The available options are Append and Overwrite. If you select Overwrite, the Snap overwrites the existing table and schema with the input data. Example: Append, Overwrite. Default value: Append
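A sketch of how the two modes could translate into statements, assuming Overwrite is implemented as drop-and-recreate because it replaces the schema as well as the data. The helper `load_statements` and its parameters are hypothetical; `create_ddl` stands for whatever CREATE TABLE statement matches the input schema.

```python
from typing import List


def load_statements(target: str, external_table: str, create_ddl: str, mode: str = "Append") -> List[str]:
    """Return the statements that move staged rows into the target table."""
    if mode not in ("Append", "Overwrite"):
        raise ValueError(f"unsupported bulk insert mode: {mode}")
    statements: List[str] = []
    if mode == "Overwrite":
        # Replace both data and schema: drop the old table (if any) and recreate it.
        statements.append(f"IF OBJECT_ID(N'{target}', N'U') IS NOT NULL DROP TABLE {target};")
        statements.append(create_ddl)
    # Both modes finish by copying the staged rows into the target table.
    statements.append(f"INSERT INTO {target} SELECT * FROM {external_table};")
    return statements


if __name__ == "__main__":
    ddl = "CREATE TABLE [dbo].[users] ([id] INT);"
    for stmt in load_statements("[dbo].[users]", "tmp_ext", ddl, "Overwrite"):
        print(stmt)
```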

Database scoped credential | The scoped credential used to execute the queries in the bulk load operation. A bulk load through blob storage requires external database resources to be created, which in turn requires a database scoped credential. Refer to https://msdn.microsoft.com/en-us/library/mt270260.aspx for additional information. If a scoped credential already exists on the database, provide it; otherwise, the Snap creates a temporary scoped credential and deletes it once the operation is complete. Default value: None
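The create-if-absent, drop-afterwards lifecycle described above can be sketched as follows. `CREATE DATABASE SCOPED CREDENTIAL ... WITH IDENTITY = ..., SECRET = ...` and `DROP DATABASE SCOPED CREDENTIAL` are standard T-SQL, and a database master key must already exist. The helper `credential_statements`, the `tmp_cred_` naming scheme and the `polybase_user` identity string are assumptions, with the storage account key used as the secret.

```python
import uuid
from typing import List, Optional, Tuple


def credential_statements(existing: Optional[str], storage_key: str) -> Tuple[str, List[str], List[str]]:
    """Return (credential name, setup statements, cleanup statements)."""
    if existing:
        # Reuse the credential configured in the Snap; nothing to create or drop.
        return existing, [], []
    name = "tmp_cred_" + uuid.uuid4().hex[:8]
    secret = storage_key.replace("'", "''")  # escape quotes for the T-SQL literal
    setup = [
        f"CREATE DATABASE SCOPED CREDENTIAL {name} "
        f"WITH IDENTITY = 'polybase_user', SECRET = '{secret}';"
    ]
    cleanup = [f"DROP DATABASE SCOPED CREDENTIAL {name};"]
    return name, setup, cleanup


if __name__ == "__main__":
    name, setup, cleanup = credential_statements(None, "<storage-account-key>")
    print(name, setup, cleanup, sep="\n")
```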

Encoding | The encoding standard of the input data to be loaded into the database. The available options are: None: Select this option only when using the PolyBase Bulk Load Snap with SQL Server 2016. UTF-8: Select this option when the input data is UTF-8 encoded and you use the Snap with an Azure database. UTF-16: Select this option when the input data is UTF-16 encoded and you use the Snap with an Azure database. Default value: None
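PolyBase's delimited-text file format accepts an `ENCODING` format option with the values `'UTF8'` and `'UTF16'`. A minimal sketch, assuming the Snap's options map onto it as shown and that None simply omits the clause; the mapping and the helper `format_options` are hypothetical.

```python
from typing import Dict, Optional

# Snap option -> PolyBase ENCODING value (assumed mapping; None omits the clause).
ENCODING_OPTIONS: Dict[str, Optional[str]] = {"None": None, "UTF-8": "UTF8", "UTF-16": "UTF16"}


def format_options(encoding_choice: str) -> str:
    """Build the FORMAT_OPTIONS clause for the selected Encoding setting."""
    if encoding_choice not in ENCODING_OPTIONS:
        raise ValueError(f"unsupported encoding: {encoding_choice}")
    parts = ["FIELD_TERMINATOR = ','"]
    value = ENCODING_OPTIONS[encoding_choice]
    if value is not None:
        parts.append(f"ENCODING = '{value}'")
    return "FORMAT_OPTIONS (" + ", ".join(parts) + ")"


if __name__ == "__main__":
    print(format_options("UTF-8"))
```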

Snap execution | Select one of the three modes in which the Snap executes. Available options are:


Basic Use Case

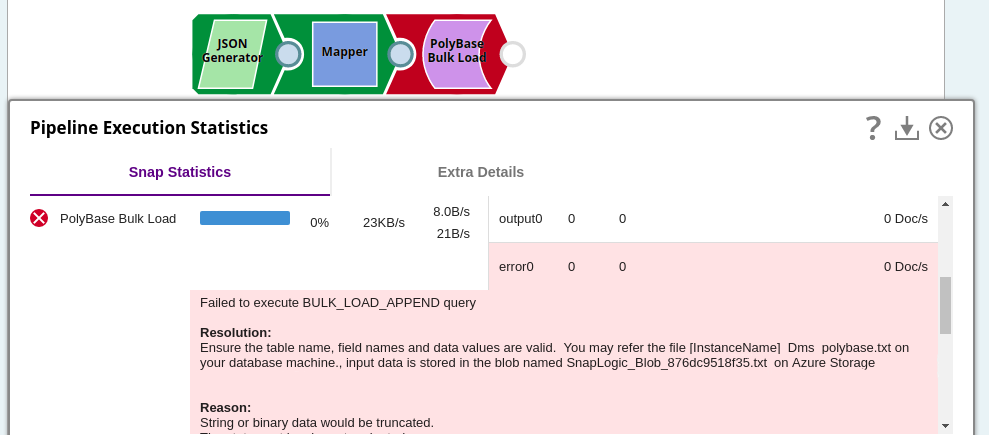

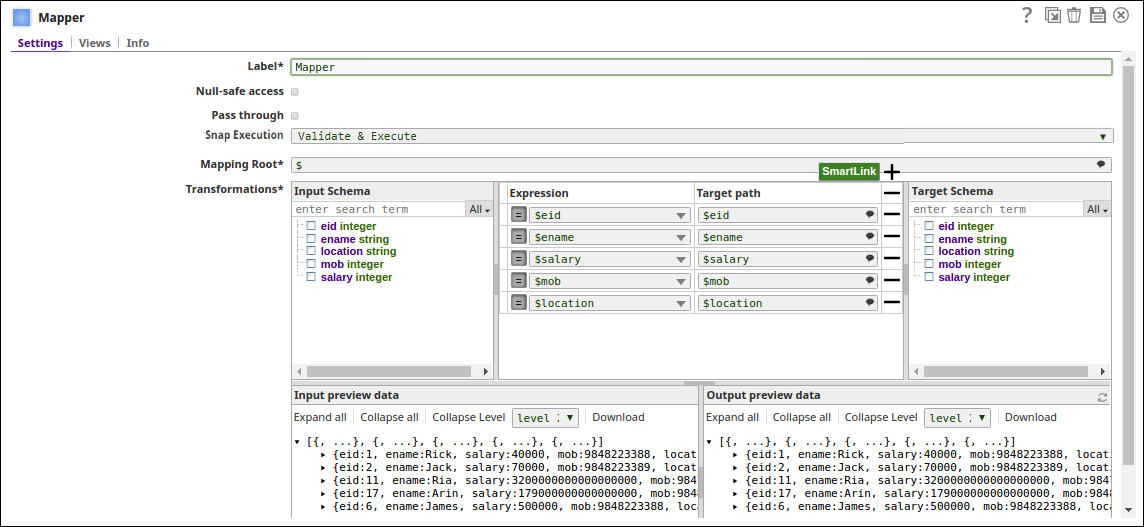

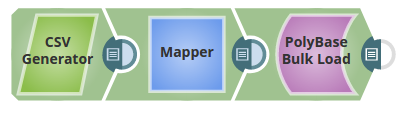

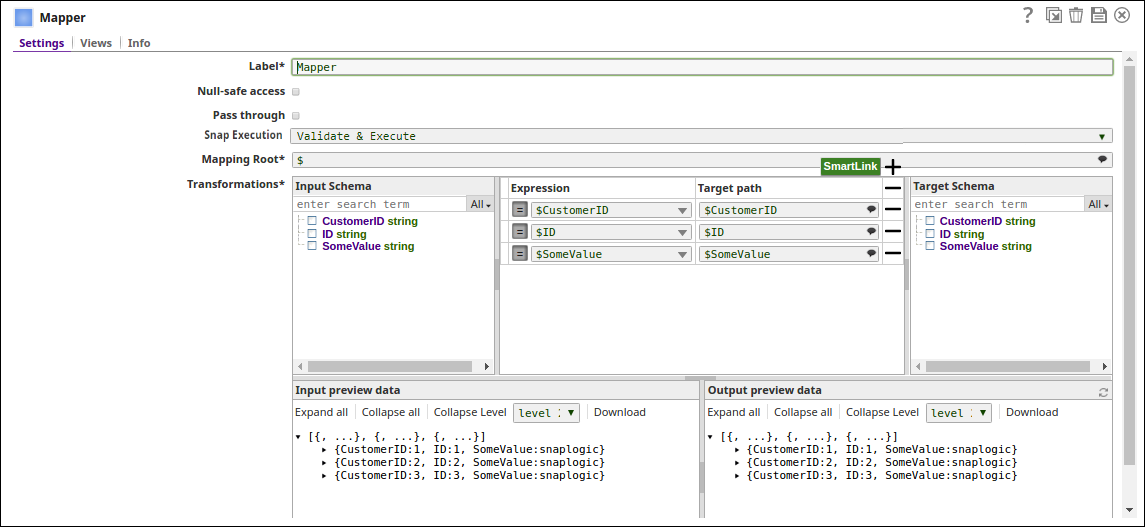

In the following example, the Mapper Snap maps the input schema from the upstream Snap to the target metadata of the PolyBase Bulk Load Snap.

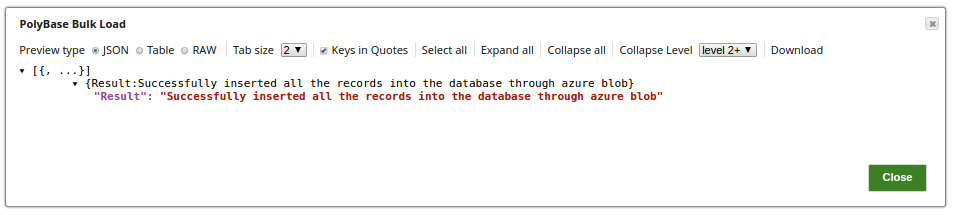

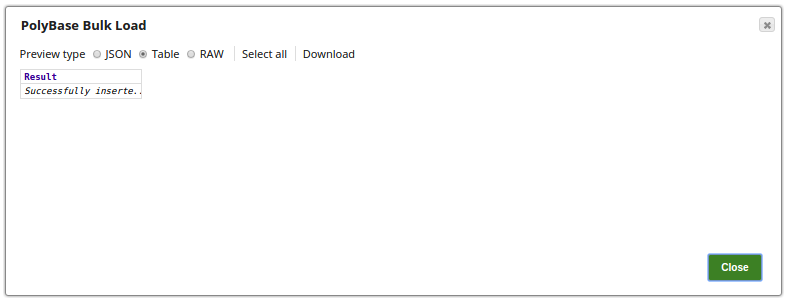

The PolyBase Bulk Load Snap loads the records into the table "dbo"."9181". On successful execution, the pipeline displays the status of the loaded records.

The pipeline performs the following ETL transformations:

Extract: The JSON Generator Snap gets the records to be loaded into the PolyBase table.

Transform: The Mapper Snap maps the input schema to the metadata of the PolyBase Bulk Load Snap.

Load: The PolyBase Bulk Load Snap loads the records into the required table.
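The Transform step amounts to renaming upstream fields to the target table's columns. A rough Python analogue of that mapping, not the Mapper Snap's implementation: the helper `map_records`, the field names, and the choice to drop unmapped fields and fill missing ones with None are assumptions.

```python
from typing import Any, Dict, List


def map_records(records: List[Dict[str, Any]], mapping: Dict[str, str]) -> List[Dict[str, Any]]:
    """Rename source fields to target columns; fields not in the mapping are dropped."""
    # A mapped source field that is missing from a record becomes None in this sketch.
    return [{target: record.get(source) for source, target in mapping.items()} for record in records]


if __name__ == "__main__":
    generated = [{"userId": 1, "userName": "Ann", "debug": True}]
    print(map_records(generated, {"userId": "id", "userName": "name"}))
```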

Typical Snap Configurations

The key configuration of the Snap lies in how you pass the statement used to write the records. In SnapLogic, you can pass SQL statements in the following ways:

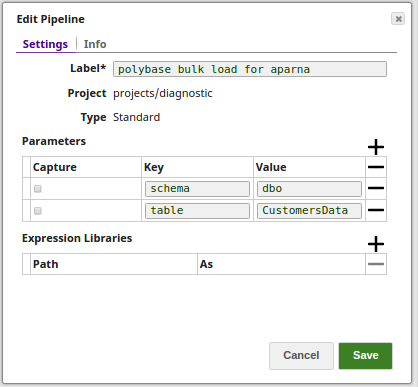

- Without expressions: Pass the required statement directly in the PolyBase Bulk Load Snap.

- With expressions:

  - Pipeline parameter: Set a pipeline parameter to pass the required values to the PolyBase Bulk Load Snap.


The Mapper Snap maps the input schema to the target fields on the PolyBase table.

The pipeline properties are set to be passed into the Snap:
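In SnapLogic expressions, pipeline parameters are referenced with a leading underscore (for example, `_table`). The sketch below imitates how a setting could be resolved with and without expressions enabled. It is a simplified, hypothetical stand-in that handles only a bare `_name` reference, not SnapLogic's expression language; `resolve_setting` and the parameter names are assumptions.

```python
import re
from typing import Dict

# Matches a bare pipeline-parameter reference such as "_table".
PARAM_REF = re.compile(r"_(\w+)")


def resolve_setting(value: str, is_expression: bool, pipeline_params: Dict[str, str]) -> str:
    """Return the literal value, or the pipeline parameter a bare `_name` expression refers to."""
    if not is_expression:
        return value  # Without expressions: the value is used as typed.
    match = PARAM_REF.fullmatch(value)
    if match is None:
        raise ValueError(f"only bare _name references are handled in this sketch: {value}")
    name = match.group(1)
    if name not in pipeline_params:
        raise KeyError(f"pipeline parameter not defined: {name}")
    return pipeline_params[name]


if __name__ == "__main__":
    params = {"schema": "dbo", "table": "9181"}
    print(resolve_setting("_schema", True, params), resolve_setting("_table", True, params))
```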

Advanced Use Case

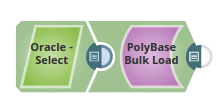

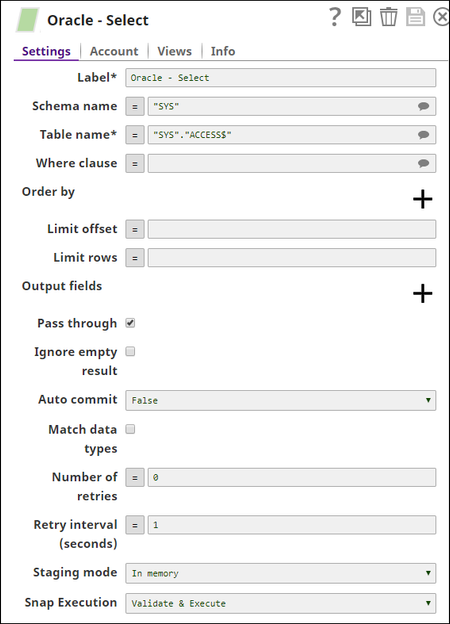

The following pipeline, with broader business logic involving multiple ETL transformations, shows how PolyBase functionality is typically used in an enterprise environment. The pipeline is available for download in the Downloads section below.

In this pipeline, an Oracle Select Snap extracts the data from a table in the Oracle database, and the PolyBase Bulk Load Snap bulk loads it into the target table. The output preview displays the status of the execution.

Extract: The Oracle Select Snap reads the records from the Oracle Database.

Load: The PolyBase Bulk Load Snap loads the records into the Azure SQL Database.
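Between the Extract and Load steps, the Snap formats the incoming records into a temporary CSV file. A minimal sketch of that formatting using Python's standard `csv` module; the column order, the absence of a header row and the helper `to_staging_csv` are assumptions. Because fields containing commas are quoted, a matching PolyBase file format would also need `STRING_DELIMITER = '"'` in its `FORMAT_OPTIONS`.

```python
import csv
import io
from typing import Any, Dict, Iterable, List


def to_staging_csv(records: Iterable[Dict[str, Any]], columns: List[str]) -> str:
    """Serialize records to CSV text in a fixed column order, without a header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for record in records:
        # csv.writer writes None as an empty field, so missing columns stay empty.
        writer.writerow([record.get(column) for column in columns])
    return buffer.getvalue()


if __name__ == "__main__":
    rows = [{"ID": 1, "NAME": "Ann, B"}, {"ID": 2}]
    print(to_staging_csv(rows, ["ID", "NAME"]), end="")
```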

Downloads

Important steps to successfully reuse Pipelines

- Download and import the pipeline into the SnapLogic application.

- Configure Snap accounts as applicable.

- Provide pipeline parameters as applicable.

Snap Pack History


© 2017-2025 SnapLogic, Inc.