TPT FastExport


Snap type: Read


Description: The TPT (Teradata Parallel Transporter) FastExport Snap exports data from a Teradata database by running a script generated from the values you enter in the Snap's fields. The Snap writes the exported data to the specified file in the local file system, and writes the console output and status code to the output view so that downstream Snaps can check whether the export succeeded. Queries produced by the Snap have the format SELECT TOP [limit] * FROM [table] WHERE [where clause] ORDER BY [ordering], and the Snap uses QUALIFY for LIMIT.
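As a rough illustration of the query template above, the following Python sketch assembles a statement from the same parts. The function build_select_query and the table name Snaplogic.people are hypothetical and not part of the Snap; the sketch only reproduces the documented SELECT TOP ... FROM ... WHERE ... ORDER BY shape, leaves out clauses that are not set, and does not model the QUALIFY handling.

```python
from typing import Optional, Sequence


def build_select_query(table: str, where: Optional[str] = None,
                       order_by: Optional[Sequence[str]] = None,
                       limit: Optional[int] = None) -> str:
    """Assemble SELECT TOP [limit] * FROM [table] WHERE [where] ORDER BY [ordering].

    Hypothetical helper: clauses that are not set are left out.
    """
    parts = ["SELECT"]
    if limit is not None:
        parts.append(f"TOP {limit}")
    parts.append(f"* FROM {table}")
    if where:
        parts.append(f"WHERE {where}")
    if order_by:
        parts.append("ORDER BY " + ", ".join(order_by))
    return " ".join(parts)


print(build_select_query("Snaplogic.people", where="age > 30",
                         order_by=["last", "first"], limit=10))
# SELECT TOP 10 * FROM Snaplogic.people WHERE age > 30 ORDER BY last, first
```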


Prerequisites: The TPT Snaps (TPT Insert, TPT Update, TPT Delete, TPT Upsert, and TPT Load) use the tbuild utility for their respective operations. To use these Snaps in pipelines, the corresponding TPT utilities must be installed on the Snaplexes. All required TPT utilities must be installed on the node where the JCC is running; otherwise the Snap may execute without performing the operation. If any utility or library file is missing on the node, the operation may fail.

Basic steps for installing the TPT utilities: Procedure to install the Teradata tools and utilities:

Flow: If tbuild or fastload is already available on the OS path, the corresponding TPT Snap can invoke the required utility directly. If these binaries are not on the OS path, provide the absolute path of the respective binary in the Snap.
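The flow above amounts to a PATH lookup with an absolute-path fallback. The Python sketch below uses a hypothetical helper, resolve_tbuild, which is not a SnapLogic or Teradata API; its path_env parameter exists only so that the PATH search can be overridden, for example in tests.

```python
import os
import shutil
from typing import Optional


def resolve_tbuild(configured_path: Optional[str] = None,
                   path_env: Optional[str] = None) -> str:
    """Return the tbuild binary to invoke (hypothetical helper).

    1. If tbuild is found on the OS PATH, use it directly.
    2. Otherwise, fall back to an absolute path supplied by the user,
       such as the TBUILD location setting (default /usr/bin/tbuild).
    """
    # shutil.which searches PATH (or path_env, when given) for an executable.
    found = shutil.which("tbuild", path=path_env)
    if found:
        return found
    # Fallback: the configured path must be absolute and point at an executable file.
    if (configured_path and os.path.isabs(configured_path)
            and os.path.isfile(configured_path)
            and os.access(configured_path, os.X_OK)):
        return configured_path
    raise FileNotFoundError(
        "tbuild is not on the OS PATH; provide the absolute path of the binary")
```

Passing path_env="" disables the PATH search, which makes the fallback branch easy to exercise.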

Support and limitations: Works in Ultra Tasks.

Account: This Snap uses account references created on the Accounts page of SnapLogic Manager to handle access to this endpoint. See Configuring Teradata Database Accounts for information on setting up this type of account.

Views:


Settings

Label: Required. The name for the Snap. You can modify this to be more specific, especially if you have more than one of the same Snap in your pipeline.

Schema name: The database schema name. If it is not defined, the suggestions for the Table name property include the tables of all schemas. This property is suggestible and retrieves the available database schemas when you request suggestions. Example: SYS Default value: [None]

Table name: Required. Name of the table to execute a select query on. Example: people Default value: [None]

Where clause: The WHERE clause of the select statement. This supports document value substitution (for example, $person.firstname is substituted with the value found at that path in the incoming document). However, you cannot use value substitution after the keyword IS (or is). Examples:

Caution: Using expressions that join strings together to create SQL queries or conditions carries a potential SQL injection risk and is therefore unsafe. Ensure that you understand all implications and risks involved before concatenating strings with the '=' Expression enabled. Default value: [None]
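To see why concatenation is risky, consider a condition built from a value such as $person.firstname. In the Python sketch below (both function names are hypothetical), a crafted value turns the naive condition into one that matches every row. Doubling embedded single quotes, as standard SQL string literals require, keeps the value inside the literal; this is shown only to illustrate the risk and is not a complete defense.

```python
def naive_where(first_name: str) -> str:
    # Unsafe: untrusted input is concatenated directly into the condition.
    return "firstname = '" + first_name + "'"


def quoted_where(first_name: str) -> str:
    # Illustration only: escape embedded single quotes by doubling them.
    return "firstname = '" + first_name.replace("'", "''") + "'"


payload = "x' OR '1'='1"
print(naive_where(payload))   # firstname = 'x' OR '1'='1'
print(quoted_where(payload))  # firstname = 'x'' OR ''1''=''1'
```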

Order by: Enter the columns in the order in which you want to sort the results. The database's default sort order is used. Example: Default value: [None]

Limit offset: The starting row for the query. Default value: [None]

Limit rows: The maximum number of rows the query should return. Default value: [None]
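The Snap description notes that QUALIFY is used for LIMIT. One way Limit offset and Limit rows could be expressed in Teradata SQL is a QUALIFY filter on ROW_NUMBER(), sketched below in Python. The helper name qualify_clause is hypothetical, and treating the offset as the number of rows to skip (so an offset of 0 starts at the first row) is an assumption; the SQL the Snap actually generates may differ.

```python
from typing import Optional


def qualify_clause(order_by: str, offset: Optional[int] = None,
                   rows: Optional[int] = None) -> str:
    """Build a QUALIFY filter for an offset/row-count window (hypothetical).

    ROW_NUMBER() numbers rows from 1, so skipping `offset` rows means
    starting at row offset + 1.
    """
    if offset is None and rows is None:
        return ""  # no paging requested
    start = (offset or 0) + 1
    window = f"QUALIFY ROW_NUMBER() OVER (ORDER BY {order_by})"
    if rows is None:
        return f"{window} >= {start}"
    return f"{window} BETWEEN {start} AND {start + rows - 1}"


print(qualify_clause("last, first", offset=20, rows=10))
# QUALIFY ROW_NUMBER() OVER (ORDER BY last, first) BETWEEN 21 AND 30
```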

Output fields: Enter or select the output field names for the SQL SELECT statement. To select all fields, leave this property at its default. Example: email, address, first, last, etc. Default value: [None]

Query band: Specify the name-value pairs to use in the session's generated query band statement. The query band is passed to the Teradata database as a list of name-value pairs separated by semicolons. Default value: N/A
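A minimal Python sketch of assembling such a list, assuming the usual Teradata form in which each pair is written as name=value and terminated by a semicolon (as in SET QUERY_BAND = 'name=value;' FOR SESSION). The helper format_query_band and the pair names are hypothetical, and the validation is a simple check that rejects the separator characters '=' and ';' inside names and values.

```python
from typing import Mapping


def format_query_band(pairs: Mapping[str, str]) -> str:
    """Render name-value pairs as 'name=value;' entries (hypothetical helper)."""
    for name, value in pairs.items():
        # Reject empty names and the separator characters used by the format.
        if not name or any(ch in name + value for ch in "=;"):
            raise ValueError(f"invalid query band pair: {name!r}={value!r}")
    return "".join(f"{name}={value};" for name, value in pairs.items())


band = format_query_band({"ApplicationName": "SnapLogic", "Job": "nightly_export"})
print(band)  # ApplicationName=SnapLogic;Job=nightly_export;
print(f"SET QUERY_BAND = '{band}' FOR SESSION;")
```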

Number of retries: Specify the maximum number of reconnection attempts the Snap makes in case of a connection failure or timeout. Default value: 0

Retry interval (seconds): Enter the duration, in seconds, that the Snap waits between two reconnection attempts, until the number of retries is reached. Default value: 1
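The two retry settings combine as in the Python sketch below: with Number of retries set to N, the connection is attempted at most N + 1 times, waiting Retry interval seconds between attempts. with_retries and flaky_connect are hypothetical names, and treating ConnectionError and TimeoutError as the "connection failure or timeout" cases is an assumption.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(connect: Callable[[], T], retries: int = 0,
                 interval_s: float = 1.0) -> T:
    """Call connect(), retrying up to `retries` times on failure (hypothetical).

    Defaults mirror the Snap settings: 0 retries and a 1-second interval.
    """
    attempt = 0
    while True:
        try:
            return connect()
        except (ConnectionError, TimeoutError):
            if attempt >= retries:
                raise  # retries exhausted: surface the last error
            attempt += 1
            time.sleep(interval_s)  # wait before the next reconnection attempt


# Usage: a simulated endpoint that fails twice, then connects.
calls = {"n": 0}


def flaky_connect() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("simulated failure")
    return "connected"


print(with_retries(flaky_connect, retries=2, interval_s=0))  # connected
```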

File Action: Required. Select the action to take when the specified file already exists in the directory. The available options are OVERWRITE, IGNORE, and ERROR. Default value: ERROR
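A hypothetical Python sketch of how the three File Action options could be applied before the export file is written. The function name check_file_action is not part of the Snap, and interpreting IGNORE as "leave the existing file and skip the write" is an assumption.

```python
import os


def check_file_action(path: str, action: str = "ERROR") -> bool:
    """Decide whether to write `path` given a File Action (hypothetical).

    Returns True to write the export file, False to skip it.
    """
    if not os.path.exists(path):
        return True  # no conflict: every option writes the new file
    if action == "OVERWRITE":
        return True  # replace the existing file
    if action == "IGNORE":
        return False  # assumption: leave the existing file untouched
    if action == "ERROR":
        raise FileExistsError(f"output file already exists: {path}")
    raise ValueError(f"unknown file action: {action}")
```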

TBUILD location: Required. Location of the Teradata tbuild utility, which must be available on the Snaplex. Default value: /usr/bin/tbuild

Output directory: Required. The full path to the local directory where the exported file is stored. Enter an absolute path. Examples: /home/Snap/tpt/ or file:///home/Snap/tpt/ Default value: [None]

Output File: Required. Location of the exported data file. If the path contains white space, enclose it in quotes, as described in the Teradata documentation. Default value: [None]
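The Python sketch below shows one way the Output directory (a plain absolute path or a file:/// URL, as in its examples) and the Output File name might be combined into a local path, quoting the result when it contains white space. The helper name output_path is hypothetical, and the use of double quotes is an assumption; check the Teradata documentation for the quoting your script expects.

```python
import posixpath
from urllib.parse import unquote, urlparse


def output_path(directory: str, file_name: str) -> str:
    """Combine Output directory and Output File into one path (hypothetical)."""
    # Accept file:///home/Snap/tpt/ as well as /home/Snap/tpt/.
    if directory.startswith("file://"):
        directory = unquote(urlparse(directory).path)
    if not posixpath.isabs(directory):
        raise ValueError("Output directory must be an absolute path")
    full = posixpath.join(directory, file_name)
    # Assumption: wrap paths containing white space in double quotes.
    return f'"{full}"' if any(ch.isspace() for ch in full) else full


print(output_path("file:///opt/Snaplogic", "Teradata_Date.txt"))
# /opt/Snaplogic/Teradata_Date.txt
```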

File Format: Required. The logical record format of the exported data file. The available options are Binary, Delimited, Formatted, Unformatted, and Text. See TPT Reference for details of each format. The Text format requires all columns selected in Output fields to be of type CHAR or DATE. If a DATE column is included, set the Date format property to Text. Default value: Delimited

Text Delimiter Type: Required. The type of text delimiter to use. The available options are TextDelimiter and TextDelimiterHEX. Default value: TextDelimiter

Text Delimiter: The bytes that separate fields in delimited records. The delimiter can consist of any number of characters. The default delimiter is the pipe character ( | ). To embed a pipe character in your data, precede it with a backslash ( \ ). Enter a hex code when Text Delimiter Type is TextDelimiterHEX, and text when it is TextDelimiter. To use the tab character as the delimiter, specify TextDelimiter = 'TAB', using uppercase “TAB”, not lowercase “tab”. To embed a tab character in your data, precede it with a backslash. Default value: ( | )
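The rules above for the two delimiter types can be summarized in a small Python sketch. resolve_delimiter is a hypothetical helper, and encoding text delimiters as UTF-8 is an assumption. Following the rule above, only uppercase "TAB" maps to a tab; lowercase "tab" is treated as three literal characters.

```python
def resolve_delimiter(value: str = "|",
                      delimiter_type: str = "TextDelimiter") -> bytes:
    """Map the Text Delimiter settings to field-separator bytes (hypothetical).

    TextDelimiterHEX: value is hex digits, e.g. "7C" for the pipe character.
    TextDelimiter: value is literal text; only uppercase "TAB" means a tab.
    """
    if delimiter_type == "TextDelimiterHEX":
        return bytes.fromhex(value)
    if delimiter_type == "TextDelimiter":
        if value == "TAB":
            return b"\t"
        return value.encode("utf-8")
    raise ValueError(f"unknown delimiter type: {delimiter_type}")
```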

Escape Text Delimiter Type: Required. The type of escape text delimiter to use. The available options are EscapeDelimiterText and EscapeDelimiterHEX. Default value: EscapeTextDelimiter

Escape Text Delimiter: Defines the delimiter escape character within delimited data. When processing delimited data, a delimiter preceded by this escape character (the backslash by default) is included in the data rather than marking the end of the column. Enter a hex code for the HEX type and text for the other. Default value: ( \ )
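The escape rule above can be illustrated with a small Python reader for one delimited record. split_record is a hypothetical helper, not how TPT itself is implemented; it assumes the escape character only has special meaning directly before the delimiter and is otherwise kept as data.

```python
from typing import List


def split_record(line: str, delimiter: str = "|",
                 escape: str = "\\") -> List[str]:
    """Split one delimited record into fields (hypothetical helper).

    A delimiter preceded by the escape character is kept as data
    instead of ending the column.
    """
    fields: List[str] = []
    current: List[str] = []
    i = 0
    while i < len(line):
        if line.startswith(escape + delimiter, i):
            current.append(delimiter)  # escaped delimiter: part of the data
            i += len(escape) + len(delimiter)
        elif line.startswith(delimiter, i):
            fields.append("".join(current))  # delimiter: end of column
            current = []
            i += len(delimiter)
        else:
            current.append(line[i])
            i += 1
    fields.append("".join(current))
    return fields


print(split_record(r"42|a\|b|2024-01-31"))  # ['42', 'a|b', '2024-01-31']
```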

Date format: Required. The format of DATE values in the output file. The available options are Integer and Text. If this property is set to Integer and File Format is set to Binary, Formatted, or Unformatted, DATE values are written to the output file as integers. Default value: Integer
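Teradata stores DATE values internally as an integer computed as (year - 1900) * 10000 + month * 100 + day. Assuming the Integer option writes this internal encoding, the Python sketch below converts in both directions; the function names are hypothetical.

```python
from datetime import date


def date_to_teradata_int(d: date) -> int:
    """Encode a date as (year - 1900) * 10000 + month * 100 + day."""
    return (d.year - 1900) * 10000 + d.month * 100 + d.day


def teradata_int_to_date(value: int) -> date:
    """Decode the integer form; floor division also handles pre-1900 dates."""
    year_offset, rest = divmod(value, 10000)
    month, day = divmod(rest, 100)
    return date(1900 + year_offset, month, day)


print(date_to_teradata_int(date(2024, 1, 31)))  # 1240131
print(teradata_int_to_date(1240131))            # 2024-01-31
```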

Quoted Data: Required. Determines whether data is expected to be enclosed in quotation marks. Default value: Not Selected

Snap Execution


Troubleshooting

| Error | Reason | Resolution |
|---|---|---|
| | The Snap is unable to read the Fastload absolute path. | Leave the field blank or update the Fastload absolute path with For example, if you used |
| | A syntax error was found in the QUERY_BAND. | Check that the query band is in the form specified in the Query band field above. For example, if you used |

For the Suggest feature in the Order by and Output fields properties, the Table name property must contain an actual table name rather than an expression. If it contains an expression, clicking Suggest displays the error message "Could not evaluate accessor: ...". This is because the input document is not available when you click Suggest, so the Snap cannot evaluate the expression in the Table name property. The input document is available to the Snap only during preview or execution.

Temporary Files

During execution, data processing on Snaplex nodes occurs principally in-memory as streaming and is unencrypted. When datasets that exceed the available compute memory are processed, the Snap writes unencrypted Pipeline data to local storage to optimize performance. These temporary files are deleted when the Snap/Pipeline execution completes. You can configure the temporary data's location in the Global properties table of the Snaplex's node properties, which can also help avoid Pipeline errors due to insufficient space. For more information, see Temporary Folder in Configuration Options.

Example

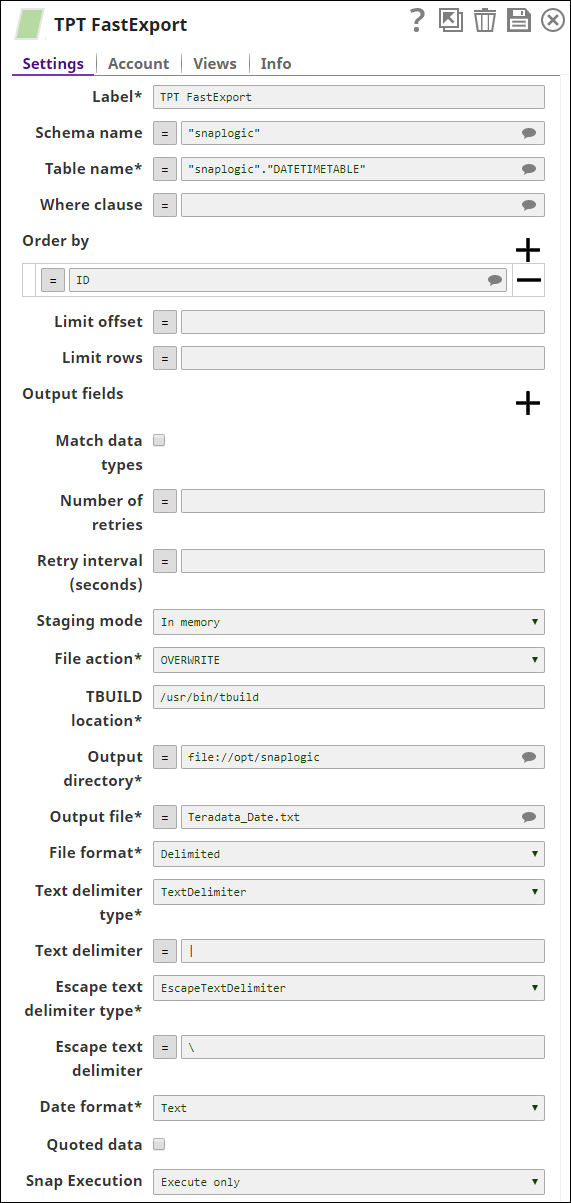

In this example, the pipeline exports data from a table to a flat file using the TPT FastExport Snap. The exported data is then read by the File Reader Snap and parsed into an output view by the CSV Parser Snap.

TPT FastExport exports the data from the table DATETIMETABLE in the schema Snaplogic to the flat file Teradata_Date.txt under the Output directory path file:///opt/Snaplogic.

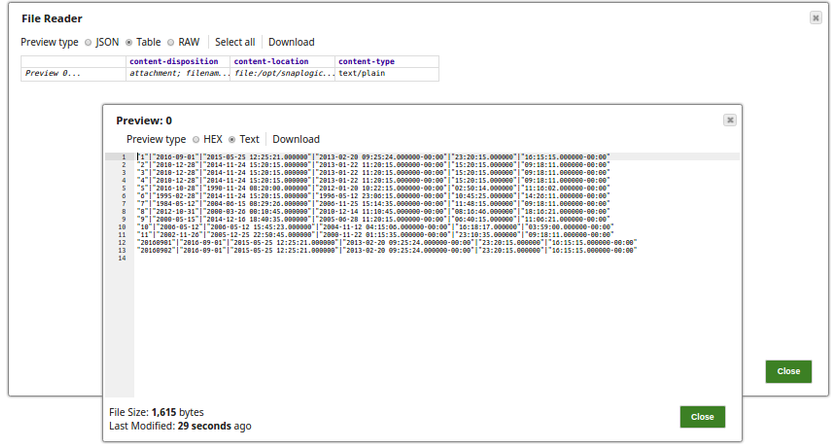

The preview of the data read by the File Reader Snap is:

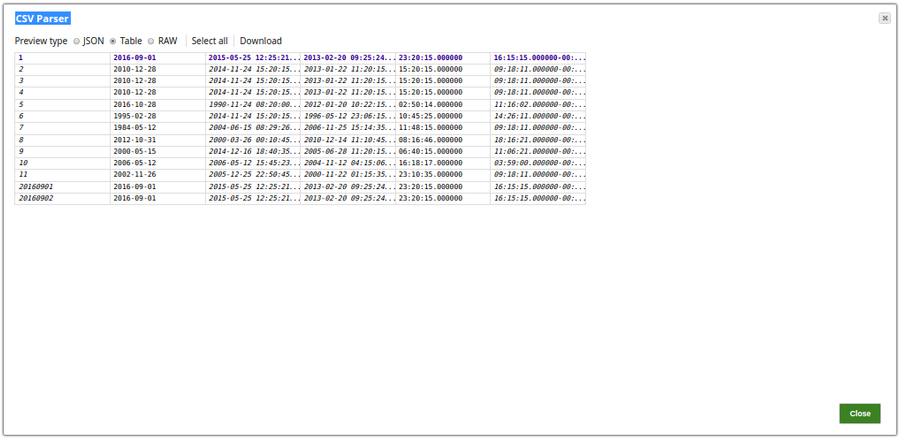

The data is parsed using the CSV Parser Snap. Successful execution of the pipeline displays the below output preview:



© 2017-2025 SnapLogic, Inc.