DynamoDB Bulk Write


| Snap type: | Write |
|---|---|
| Description: | This Snap writes data into a DynamoDB table. The table name becomes suggestible once the account is defined. |
| Prerequisites: | [None] |
| Support and limitations: | Works in Ultra Tasks. |
| Account: | This Snap uses account references created on the Accounts page of SnapLogic Manager to handle access to this endpoint. See DynamoDB Account for information on setting up this type of account. |
| Views: | |

Settings | ||||||||

Label | Required. The name for the Snap. You can modify this to be more specific, especially if you have more than one of the same Snap in your pipeline. | |||||||

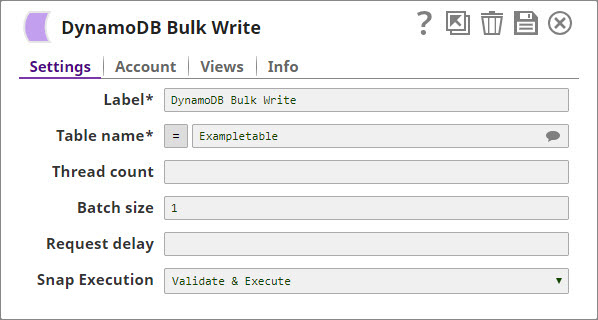

Table name | Required. The name of the table to write the data into. This property is suggestible, that shown the list of the tables in the DB. | |||||||

| Thread count | This property represents the total number of parallel threads to be created for Bulk Write operation. | |||||||

| Batch size | Number of records batched for each request. | |||||||

| Request delay | Delay between two successive batch requests in seconds | |||||||

Snap execution | Select one of the three modes in which the Snap executes. Available options are:

| |||||||

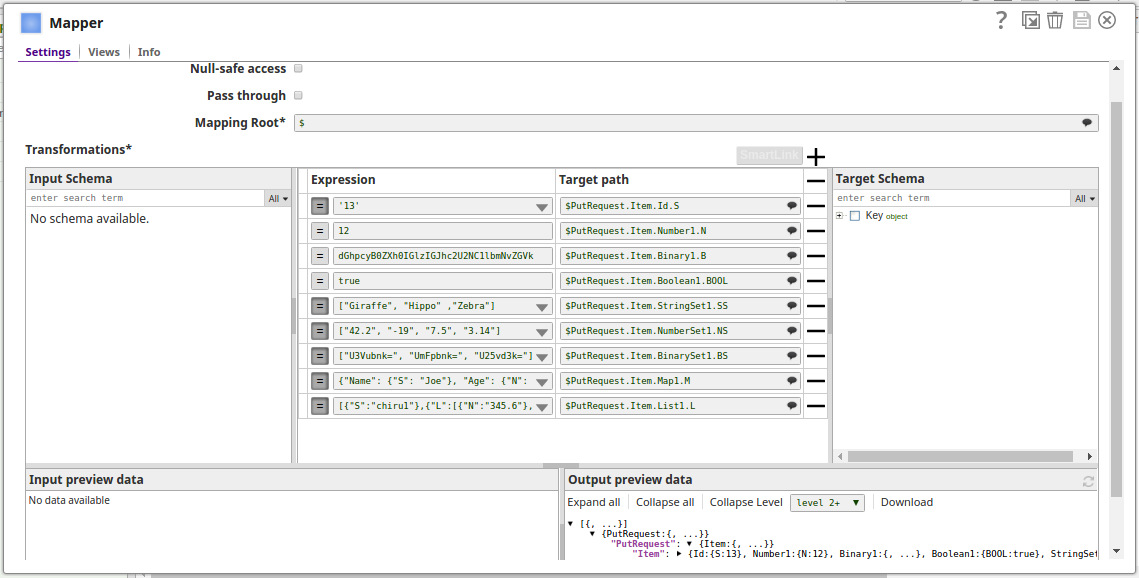
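The Thread count, Batch size, and Request delay settings can be pictured with the following Python sketch. It is an illustration only, not the Snap's implementation: `chunk`, `bulk_write`, and `send_batch` are hypothetical names, and the default values are arbitrary.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def chunk(records, batch_size):
    """Split records into consecutive batches of at most batch_size items."""
    return [records[i:i + batch_size] for i in range(0, len(records), batch_size)]


def bulk_write(records, send_batch, thread_count=2, batch_size=25, request_delay=0.0):
    """Send records in batches using thread_count parallel workers.

    send_batch is a hypothetical callable that writes one batch. Each worker
    pauses request_delay seconds after a request before taking the next batch.
    Returns the total number of records handed to send_batch.
    """
    def worker(batch):
        send_batch(batch)
        time.sleep(request_delay)  # delay between successive batch requests
        return len(batch)

    with ThreadPoolExecutor(max_workers=thread_count) as pool:
        return sum(pool.map(worker, chunk(records, batch_size)))
```

With 60 records, a batch size of 25, and three threads, this sketch issues three requests (25, 25, and 10 records) that may run in parallel.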

Mandatory Path Mappings in upstream Mapper

The DynamoDB Bulk Write Snap expects an upstream Mapper Snap to supply the mappings between the input stream and the DynamoDB table. The Mapper must output a document whose data is in a form that the DynamoDB Bulk Write Snap can read and write to the DynamoDB table. See Examples for more details.

Input stream mappings are mandatory

In the absence of input stream mappings from the upstream (Mapper) Snap, the DynamoDB Bulk Write Snap fails during Pipeline execution.
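As a rough illustration of what a usable input document looks like, the Python sketch below checks that a document carries the PutRequest.Item wrapper described under Path mappings in upstream Mapper. The function name `has_put_request_item` and the sample documents are hypothetical, and the check is an assumption about the minimum shape, not the Snap's actual validation logic.

```python
def has_put_request_item(document):
    """Return True if document has a non-empty PutRequest.Item mapping."""
    put_request = document.get("PutRequest")
    if not isinstance(put_request, dict):
        return False
    item = put_request.get("Item")
    return isinstance(item, dict) and len(item) > 0


mapped = {"PutRequest": {"Item": {"id": {"S": "101"}}}}  # Mapper-style output
unmapped = {"id": "101"}  # raw input without the Mapper's target paths

print(has_put_request_item(mapped))    # True
print(has_put_request_item(unmapped))  # False
```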

Examples

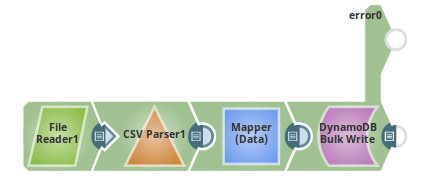

Writing CSV data to a DynamoDB table

The following example illustrates the usage of the DynamoDB Bulk Write Snap.

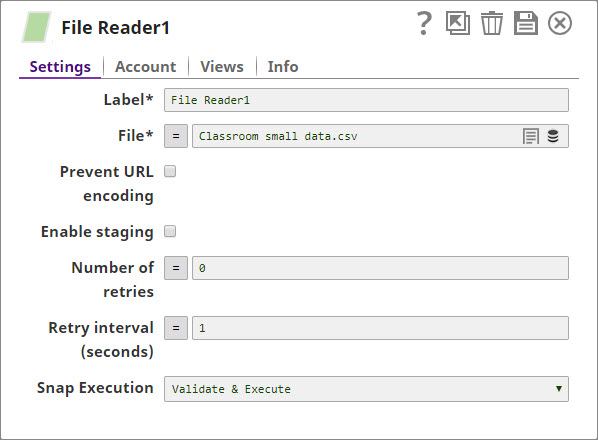

In this pipeline, we read CSV data using the File Reader Snap and write it to the table Exampletable using the DynamoDB Bulk Write Snap.

The following images show the CSV input data passed from the File Reader Snap:

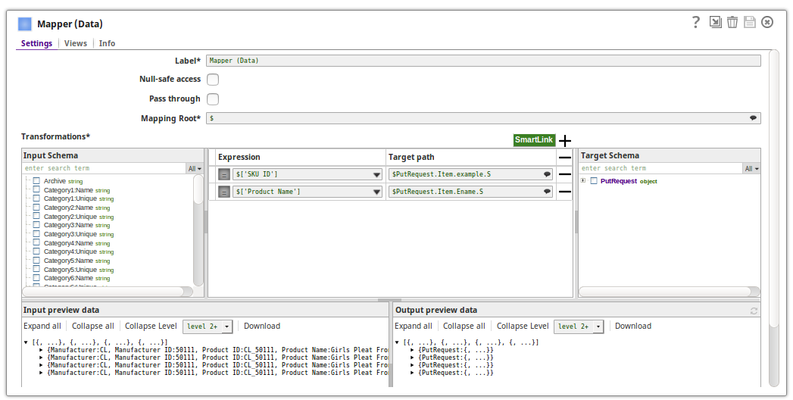

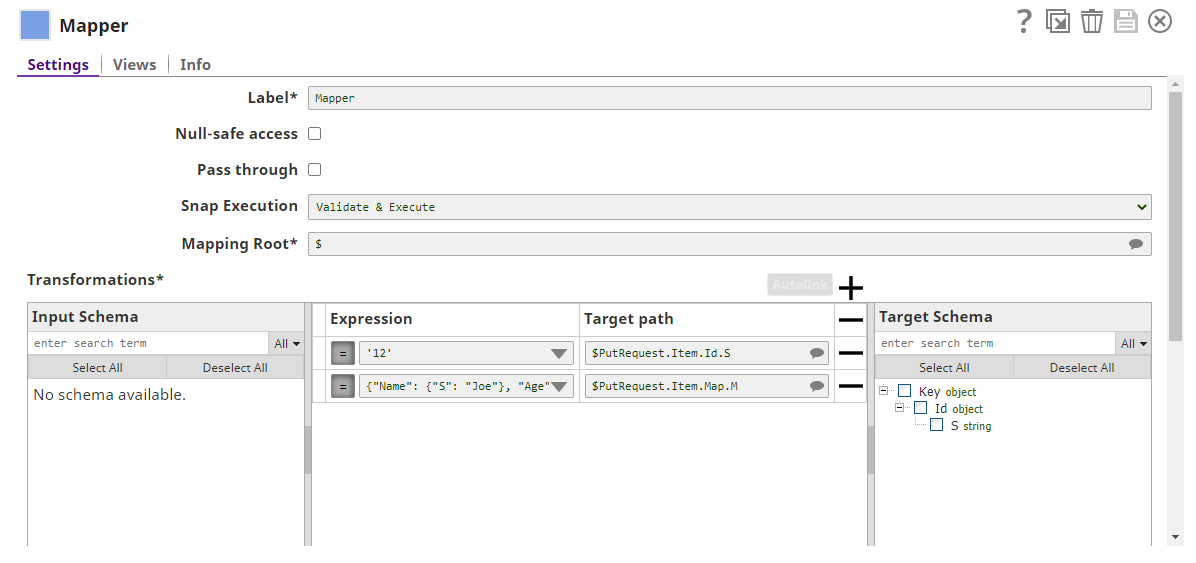

The Mapper Snap maps the object record details that need to be updated in the input view of the DynamoDB Bulk Write Snap:

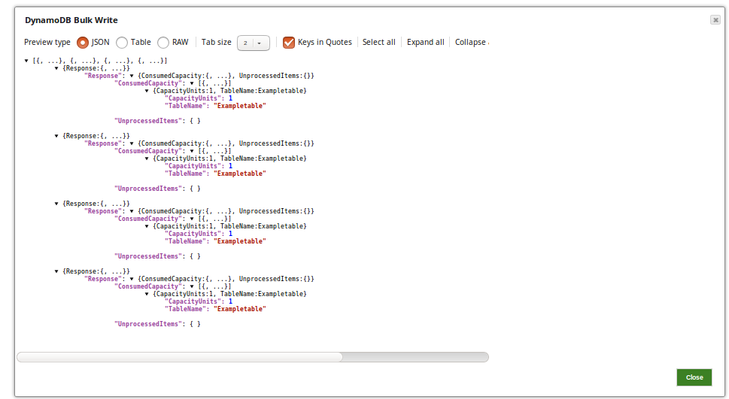

The data is loaded to the DynamoDB table Exampletable.

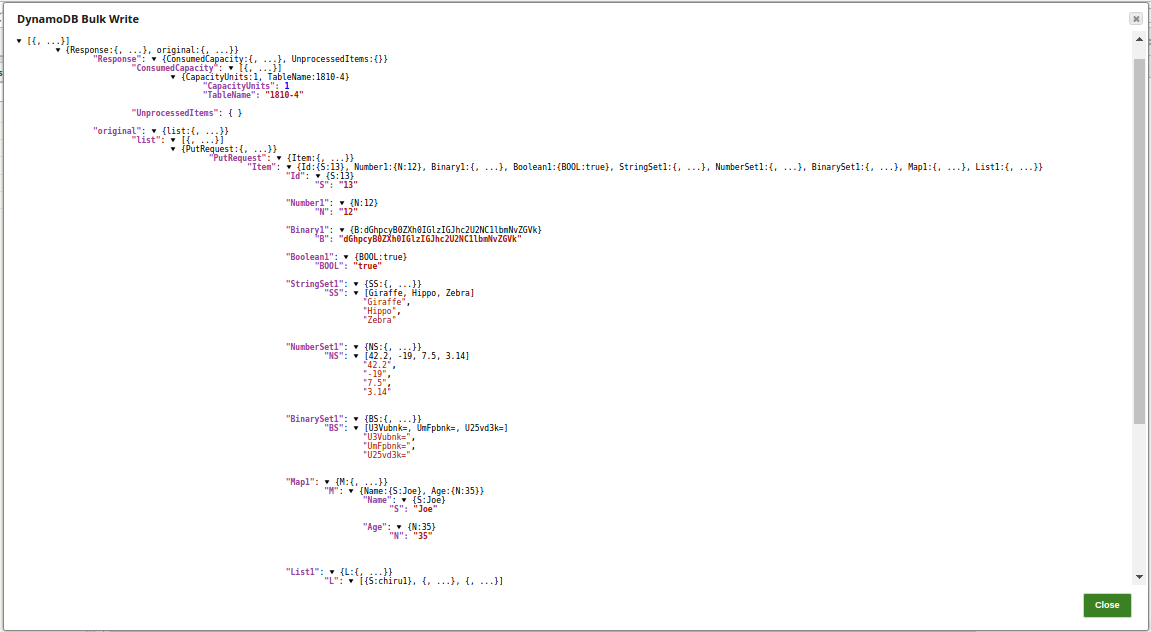

Successful execution of the above Snap gives the following data preview:

Path mappings in upstream Mapper

The following Pipeline illustrates the path mappings needed for writing multiple records using the DynamoDB Bulk Write Snap.

To write multiple records into a DynamoDB table, first use the Mapper Snap to define the mappings between the incoming data and the DynamoDB table schema.

- Ensure the Target path in the Mapping table contains the prefix $PutRequest.Item. before every mapping entry. This prefix is mandatory to update the DynamoDB table using this Snap.

- The entry in the target path must follow the syntax $PutRequest.Item.<column>.<datatype>, both for creating and updating the respective column in the target table.

- For any DynamoDB table target, the Target Schema displays only the (primary) key column details irrespective of the number of mappings defined.

Examples

$PutRequest.Item.id.S, $PutRequest.Item.Map.M
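The target path syntax above corresponds to a nested document in which each column name holds a single-key object whose key is the DynamoDB data type. The Python sketch below builds such a document from flat values. `to_attribute`, `to_put_request`, and the sample column names are hypothetical, only the S, N, BOOL, and M type descriptors are handled, and the number-as-string convention follows the DynamoDB low-level API, which is an assumption about what the Snap accepts.

```python
def to_attribute(value):
    """Wrap a Python value in a DynamoDB-style typed attribute."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return {"BOOL": value}
    if isinstance(value, (int, float)):
        return {"N": str(value)}  # the low-level API carries numbers as strings
    if isinstance(value, str):
        return {"S": value}
    if isinstance(value, dict):
        return {"M": {k: to_attribute(v) for k, v in value.items()}}
    raise TypeError(f"unsupported type: {type(value).__name__}")


def to_put_request(row):
    """Build the PutRequest.Item.<column>.<datatype> structure for one record."""
    return {"PutRequest": {"Item": {col: to_attribute(val) for col, val in row.items()}}}


doc = to_put_request({"id": "101", "Map": {"city": "Austin", "zip": 78701}})
print(doc["PutRequest"]["Item"]["id"])   # {'S': '101'}
print(doc["PutRequest"]["Item"]["Map"])  # {'M': {'city': {'S': 'Austin'}, 'zip': {'N': '78701'}}}
```

Here the path $PutRequest.Item.id.S addresses the value "101", and $PutRequest.Item.Map.M addresses the nested map.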

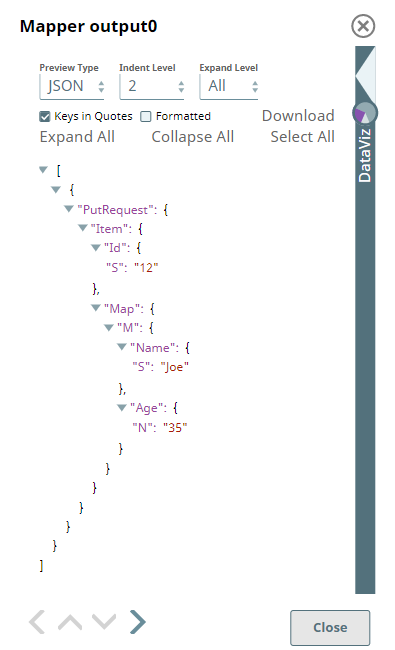

The Mapper generates the mappings output for the DynamoDB Bulk Write Snap.

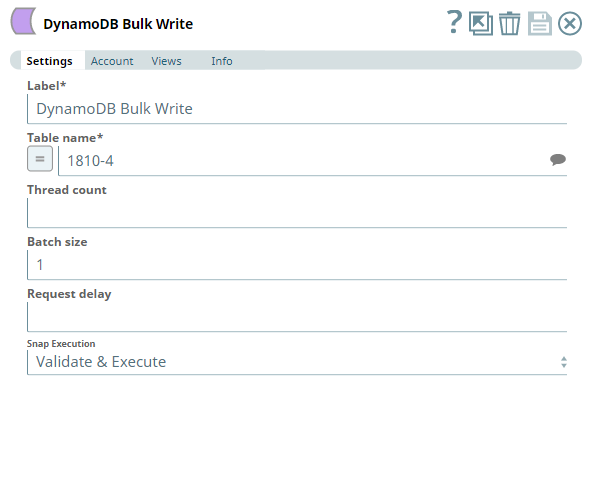

After selecting an appropriate account to connect to the DynamoDB database, define the target table name for the DynamoDB Bulk Write Snap.

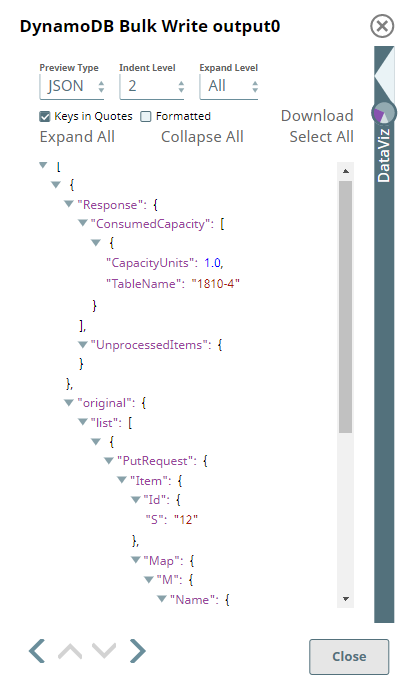

Upon validation, the DynamoDB Bulk Write Snap generates an output preview similar to the following image. It contains the name of the table to be updated, the columns, and the respective values.

Upon execution, the Pipeline inserts multiple records into the specified DynamoDB table, with values defined in the Mapper output.
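For orientation, the pairing of a table name with its records resembles the RequestItems map of the DynamoDB low-level BatchWriteItem API, which accepts at most 25 put or delete requests per call. The Python sketch below assembles such a payload. It assumes, without confirmation from this page, that the Snap works with this shape; `build_request_items` is a hypothetical helper.

```python
MAX_REQUESTS_PER_CALL = 25  # BatchWriteItem limit on put/delete requests per call


def build_request_items(table_name, put_requests):
    """Return a BatchWriteItem-style payload for one table."""
    if len(put_requests) > MAX_REQUESTS_PER_CALL:
        raise ValueError("a single request may carry at most 25 put requests")
    return {"RequestItems": {table_name: list(put_requests)}}


puts = [{"PutRequest": {"Item": {"id": {"S": str(n)}}}} for n in range(3)]
payload = build_request_items("Exampletable", puts)
print(len(payload["RequestItems"]["Exampletable"]))  # 3
```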

Downloads

Important steps to successfully reuse Pipelines

- Download and import the pipeline into the SnapLogic application.

- Configure Snap accounts as applicable.

- Provide pipeline parameters as applicable.



© 2017-2025 SnapLogic, Inc.
